Krushi Patel

Multi-Layer Dense Attention Decoder for Polyp Segmentation

Mar 27, 2024

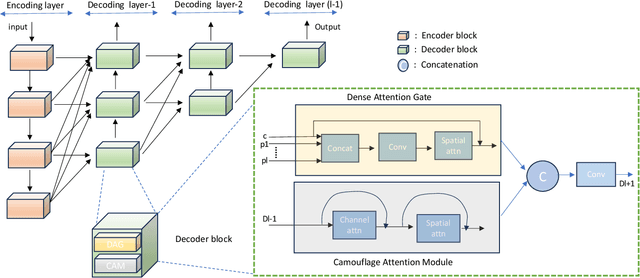

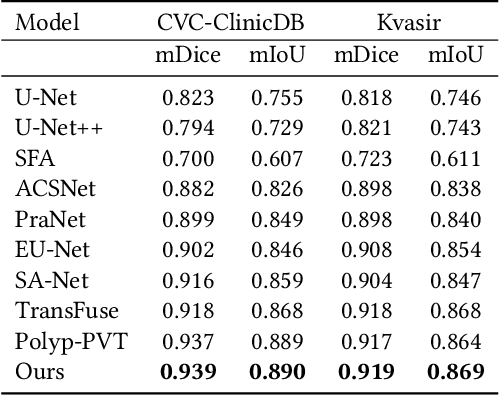

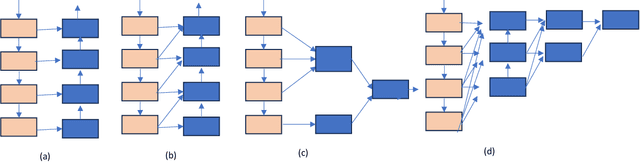

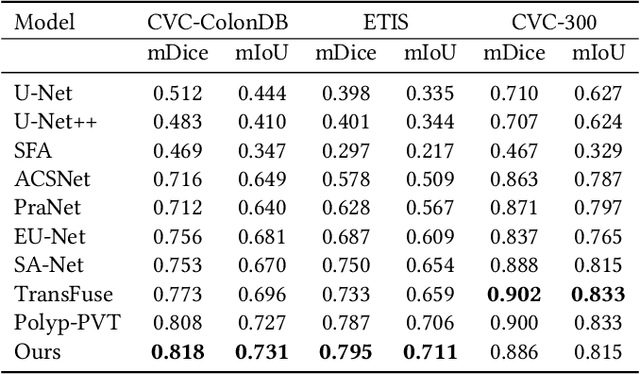

Abstract: Detecting and segmenting polyps is crucial for expediting the diagnosis of colon cancer. This is a challenging task due to the large variations of polyps in color, texture, and lighting conditions, along with subtle differences between the polyp and its surrounding area. Recently, vision Transformers have shown robust abilities in modeling global context for polyp segmentation. However, they face two major limitations: the inability to learn local relations among multi-level layers and inadequate feature aggregation in the decoder. To address these issues, we propose a novel decoder architecture aimed at hierarchically aggregating locally enhanced multi-level dense features. Specifically, we introduce a novel module named Dense Attention Gate (DAG), which adaptively fuses all previous layers' features to establish local feature relations among all layers. Furthermore, we propose a novel nested decoder architecture that hierarchically aggregates decoder features, thereby enhancing semantic features. We incorporate our novel dense decoder with the PVT backbone network and conduct evaluations on five polyp segmentation datasets: Kvasir, CVC-300, CVC-ColonDB, CVC-ClinicDB, and ETIS. Our experiments and comparisons with nine competing segmentation models demonstrate that the proposed architecture achieves state-of-the-art performance and outperforms the previous models on four datasets. The source code is available at: https://github.com/krushi1992/Dense-Decoder.

Aggregating Global Features into Local Vision Transformer

Jan 30, 2022

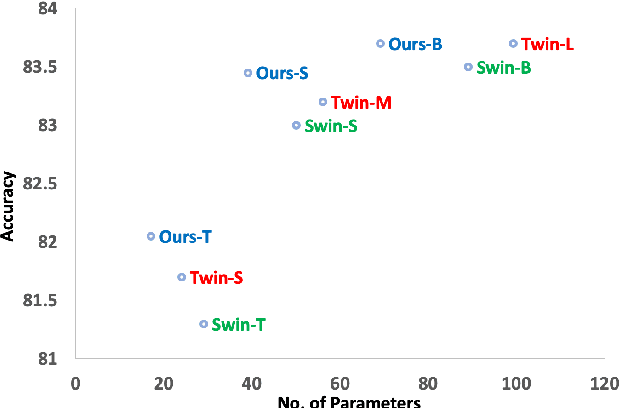

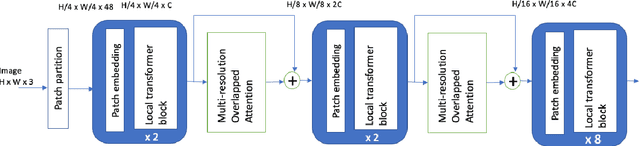

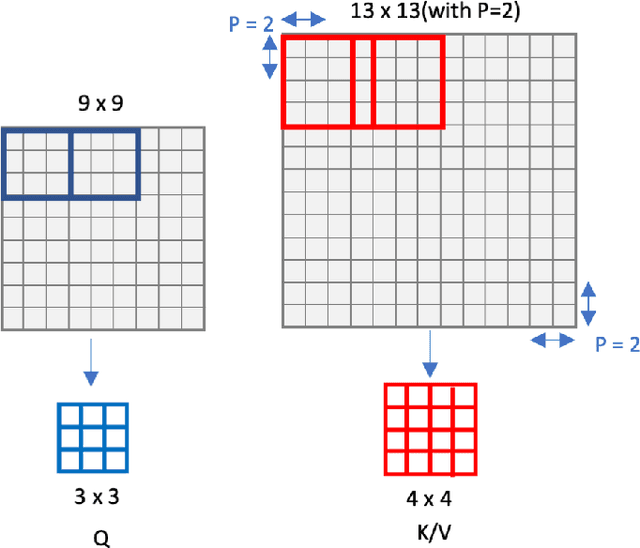

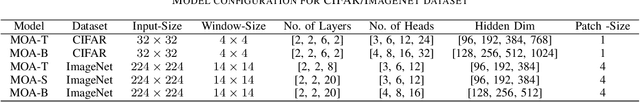

Abstract: Local Transformer-based classification models have recently achieved promising results with relatively low computational costs. However, the effect of aggregating global spatial information in local Transformer-based architectures is not clear. This work investigates the outcome of applying a global attention-based module named multi-resolution overlapped attention (MOA) in the local window-based Transformer after each stage. The proposed MOA employs slightly larger and overlapped patches in the key to enable neighborhood pixel information transmission, which leads to a significant performance gain. In addition, we thoroughly investigate the effect of the dimension of essential architecture components through extensive experiments and discover an optimum architecture design. Extensive experimental results on the CIFAR-10, CIFAR-100, and ImageNet-1K datasets demonstrate that the proposed approach outperforms previous vision Transformers with comparatively fewer parameters.
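The idea of drawing keys from slightly larger, overlapping patches can be illustrated with a small patch-extraction sketch. This is not the authors' implementation: the helper `extract_patches`, the 4x4 toy grid, the query patch size of 2, and the key patch size of 4 with one pixel of zero padding are all assumptions chosen for illustration. It only shows how the two patch layouts differ, not the attention computation itself.

```python
# Illustrative sketch only: names and sizes below are hypothetical,
# not taken from the paper.

def extract_patches(grid, size, stride, pad):
    """Slide a size x size window over a zero-padded 2D grid.

    Returns one flattened list of values per window position.
    """
    h, w = len(grid), len(grid[0])

    def at(r, c):
        # Positions outside the grid read as zero (zero padding).
        return grid[r][c] if 0 <= r < h and 0 <= c < w else 0

    patches = []
    for top in range(-pad, h + pad - size + 1, stride):
        for left in range(-pad, w + pad - size + 1, stride):
            patches.append(
                [at(top + dr, left + dc) for dr in range(size) for dc in range(size)]
            )
    return patches


# Toy 4x4 feature map with distinct non-zero values 1..16.
grid = [[r * 4 + c + 1 for c in range(4)] for r in range(4)]

# Query patches: 2x2, stride 2, no padding, so they do not overlap.
query_patches = extract_patches(grid, size=2, stride=2, pad=0)

# Key patches: 4x4, same stride, padded by 1, so each key patch is
# slightly larger than its query patch and overlaps its neighbours.
key_patches = extract_patches(grid, size=4, stride=2, pad=1)

# Pixels shared by the first two key patches (ignoring zero padding).
shared = (set(key_patches[0]) & set(key_patches[1])) - {0}
print(len(query_patches), len(key_patches), sorted(shared))
```

Because both layouts use the same stride, every query patch has exactly one corresponding key patch, while neighbouring key patches share border pixels. That sharing is one plausible reading of how neighborhood information could flow between patches.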

A Discriminative Channel Diversification Network for Image Classification

Dec 10, 2021

Abstract: Channel attention mechanisms in convolutional neural networks have been proven to be effective in various computer vision tasks. However, the performance improvement comes with additional model complexity and computation cost. In this paper, we propose a lightweight and effective attention module, called channel diversification block, to enhance the global context by establishing the channel relationship at the global level. Unlike other channel attention mechanisms, the proposed module focuses on the most discriminative features by giving more attention to the spatially distinguishable channels while taking account of the channel activation. Unlike other attention models that plug the module in between several intermediate layers, the proposed module is embedded at the end of the backbone networks, making it easy to implement. Extensive experiments on the CIFAR-10, SVHN, and Tiny-ImageNet datasets demonstrate that the proposed module improves the performance of the baseline networks by a margin of 3% on average.

Enhanced U-Net: A Feature Enhancement Network for Polyp Segmentation

May 03, 2021

Abstract: Colonoscopy is a procedure to detect colorectal polyps, which are the primary cause of colorectal cancer. However, polyp segmentation is a challenging task due to the diverse shape, size, color, and texture of polyps, the subtle difference between a polyp and its background, and the low contrast of colonoscopic images. To address these challenges, we propose a feature enhancement network for accurate polyp segmentation in colonoscopy images. Specifically, the proposed network enhances the semantic information using the novel Semantic Feature Enhance Module (SFEM). Furthermore, instead of directly adding encoder features to the respective decoder layer, we introduce an Adaptive Global Context Module (AGCM), which focuses only on the encoder's significant and hard fine-grained features. The integration of these two modules improves the quality of features layer by layer, which in turn enhances the final feature representation. The proposed approach is evaluated on five colonoscopy datasets and demonstrates superior performance compared to other state-of-the-art models.

Colonoscopy Polyp Detection and Classification: Dataset Creation and Comparative Evaluations

Apr 22, 2021

Abstract: Colorectal cancer (CRC) is one of the most common types of cancer with a high mortality rate. Colonoscopy is the preferred procedure for CRC screening and has proven to be effective in reducing CRC mortality. Thus, a reliable computer-aided polyp detection and classification system can significantly increase the effectiveness of colonoscopy. In this paper, we create an endoscopic dataset collected from various sources and annotate the ground truth of polyp location and classification results with the help of experienced gastroenterologists. The dataset can serve as a benchmark platform to train and evaluate the machine learning models for polyp classification. We have also compared the performance of eight state-of-the-art deep learning-based object detection models. The results demonstrate that deep CNN models are promising in CRC screening. This work can serve as a baseline for future research in polyp detection and classification.

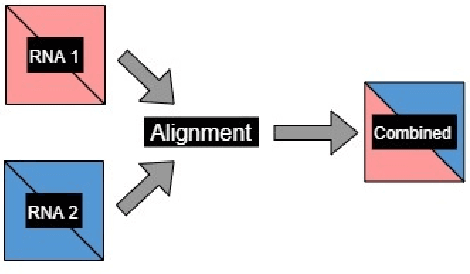

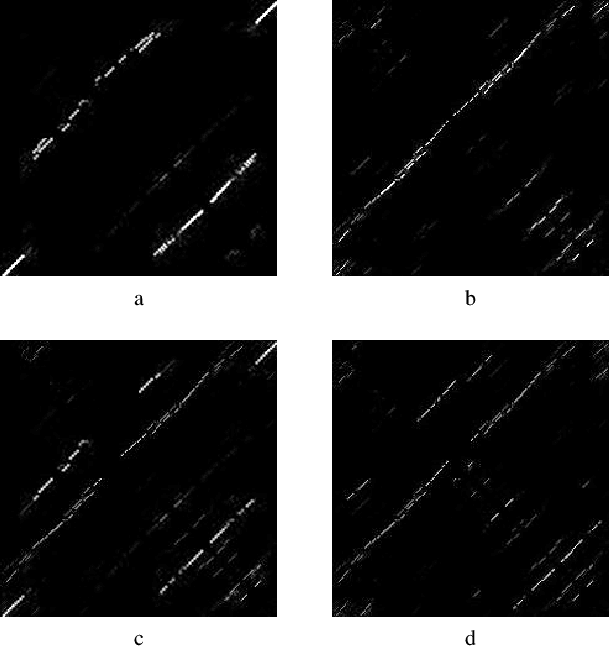

Classification of Noncoding RNA Elements Using Deep Convolutional Neural Networks

Aug 24, 2020

Abstract: The paper proposes to employ deep convolutional neural networks (CNNs) to classify noncoding RNA (ncRNA) sequences. To this end, we first propose an efficient approach to convert the RNA sequences into images characterizing their base-pairing probability. As a result, classifying RNA sequences is converted to an image classification problem that can be efficiently solved by available CNN-based classification models. The paper also considers the folding potential of the ncRNAs in addition to their primary sequence. Based on the proposed approach, a benchmark image classification dataset is generated from the RFAM database of ncRNA sequences. In addition, three classical CNN models have been implemented and compared to demonstrate the superior performance and efficiency of the proposed approach. Extensive experimental results show the great potential of using deep learning approaches for RNA classification.
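To make the sequence-to-image step concrete, here is a minimal sketch that turns an RNA sequence into an N x N grid. It deliberately swaps in a much cruder signal than the one the abstract describes: instead of base-pairing probabilities, which would normally come from a thermodynamic folding tool, each pixel is a 0/1 flag saying whether two bases could pair at all (Watson-Crick or G-U wobble). The function name `pairing_image`, the `min_loop` parameter, and the example sequence are hypothetical and not taken from the paper.

```python
# Illustrative stand-in only: a binary "can these bases pair?" matrix,
# NOT the base-pairing probability image described in the abstract.

# Watson-Crick pairs plus the G-U wobble pair.
CANONICAL_PAIRS = {
    ("A", "U"), ("U", "A"),
    ("G", "C"), ("C", "G"),
    ("G", "U"), ("U", "G"),
}


def pairing_image(sequence, min_loop=3):
    """Return an N x N matrix of 0/1 flags for an RNA sequence.

    Entry (i, j) is 1 when bases i and j form a canonical pair and are
    separated by more than `min_loop` positions (an assumed minimum
    hairpin loop length).
    """
    seq = sequence.upper().replace("T", "U")
    n = len(seq)
    image = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if abs(i - j) > min_loop and (seq[i], seq[j]) in CANONICAL_PAIRS:
                image[i][j] = 1
    return image


# Hypothetical toy sequence that can form a short hairpin.
image = pairing_image("GGGAAAUCCC")
for row in image:
    print("".join("#" if value else "." for value in row))
```

The resulting matrix is symmetric and can be fed to any image classifier as a single-channel input. Replacing the 0/1 flags with real pairing probabilities would bring it closer to the representation the abstract describes.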

A Comparative Study on Polyp Classification using Convolutional Neural Networks

Jul 12, 2020

Abstract: Colorectal cancer is the third most common cancer diagnosed in both men and women in the United States. Most colorectal cancers start as a growth on the inner lining of the colon or rectum, called a 'polyp'. Not all polyps are cancerous, but some can develop into cancer. Early detection and recognition of the type of polyp is critical to prevent cancer and change outcomes. However, visual classification of polyps is challenging due to the varying illumination conditions of endoscopy and the variable texture, appearance, and overlapping morphology of polyps. More importantly, evaluation of polyp patterns by gastroenterologists is subjective, leading to poor agreement among observers. Deep convolutional neural networks have proven very successful in object classification across various object categories. In this work, we compare the performance of state-of-the-art general object classification models for polyp classification. We trained a total of six CNN models end-to-end using a dataset of 157 video sequences composed of two types of polyps: hyperplastic and adenomatous. Our results demonstrate that state-of-the-art CNN models can successfully classify polyps with an accuracy comparable to or better than that reported among gastroenterologists. The results of this study can guide future research in polyp classification.
