Kerianne L. Hobbs

Disturbance-Robust Backup Control Barrier Functions: Safety Under Uncertain Dynamics

Sep 12, 2024

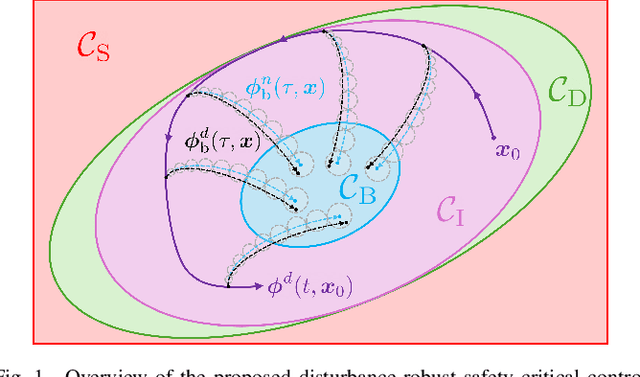

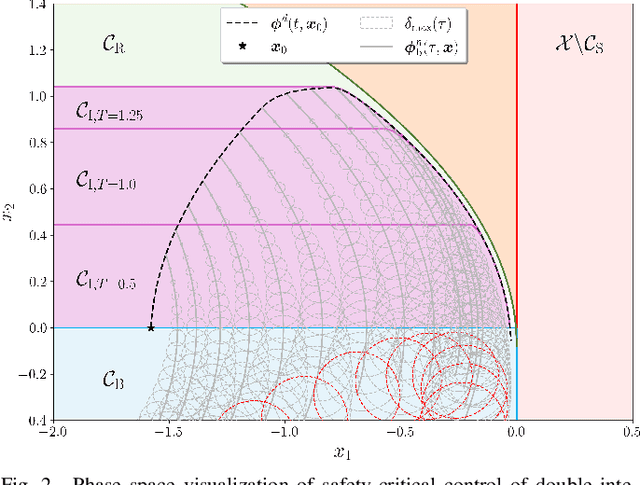

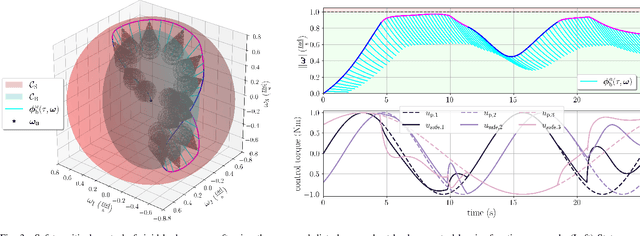

Abstract: Obtaining a controlled invariant set is crucial for safety-critical control with control barrier functions (CBFs) but is non-trivial for complex nonlinear systems and constraints. Backup control barrier functions allow such sets to be constructed online in a computationally tractable manner by examining the evolution (or flow) of the system under a known backup control law. However, for systems with unmodeled disturbances, this flow cannot be directly computed, making the current methods inadequate for assuring safety in these scenarios. To address this gap, we leverage bounds on the nominal and disturbed flow to compute a forward invariant set online by ensuring safety of an expanding norm ball tube centered around the nominal system evolution. We prove that this set results in robust control constraints which guarantee safety of the disturbed system via our Disturbance-Robust Backup Control Barrier Function (DR-BCBF) solution. Additionally, the efficacy of the proposed framework is demonstrated in simulation, applied to a double integrator problem and a rigid body spacecraft rotation problem with rate constraints.

Investigating the Impact of Choice on Deep Reinforcement Learning for Space Controls

May 20, 2024

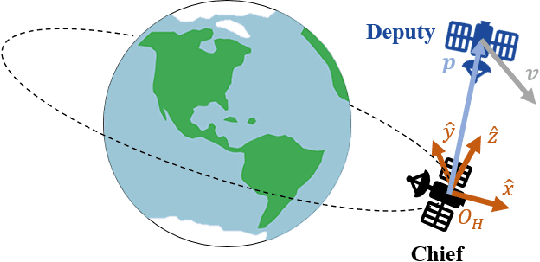

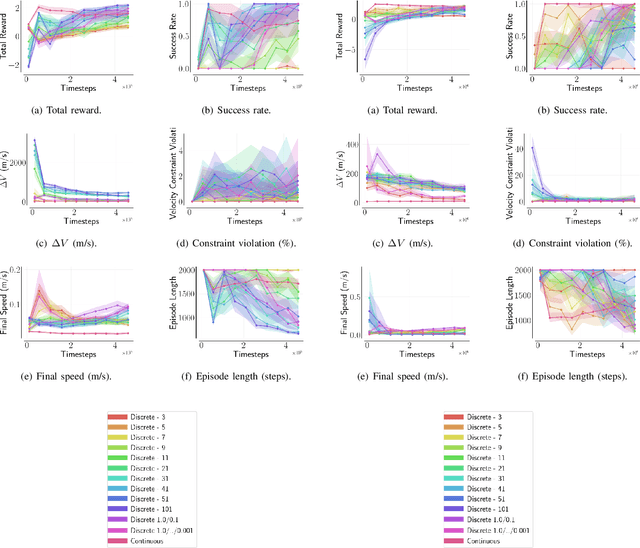

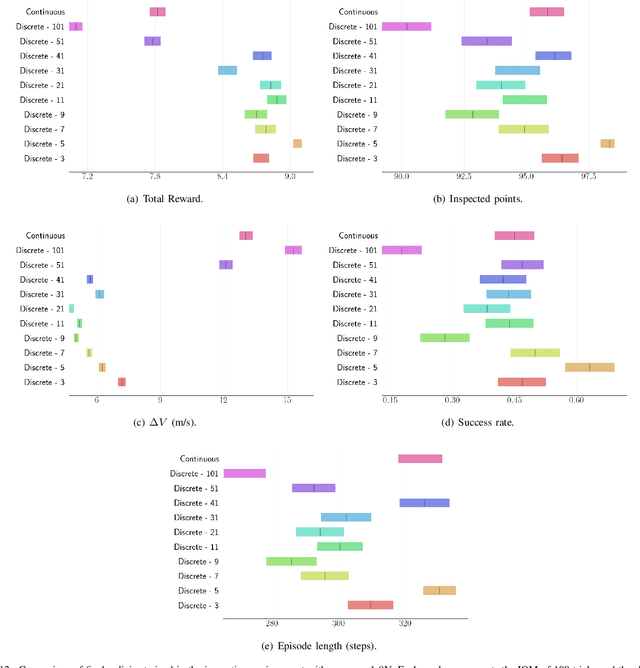

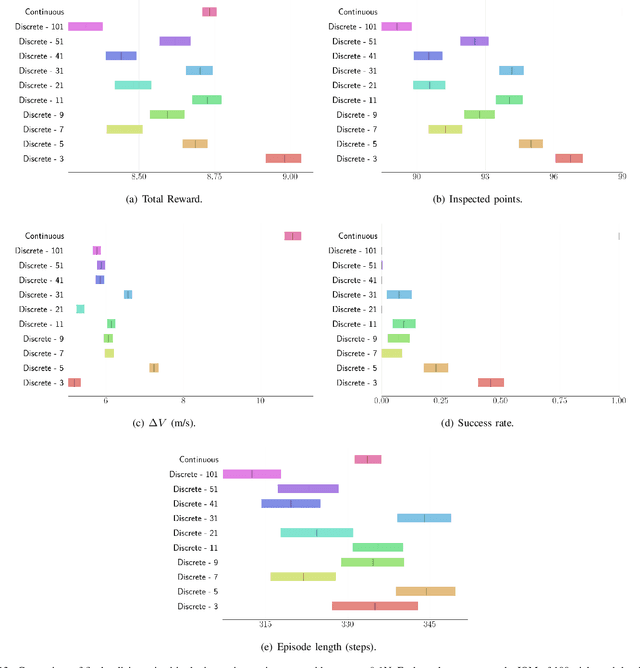

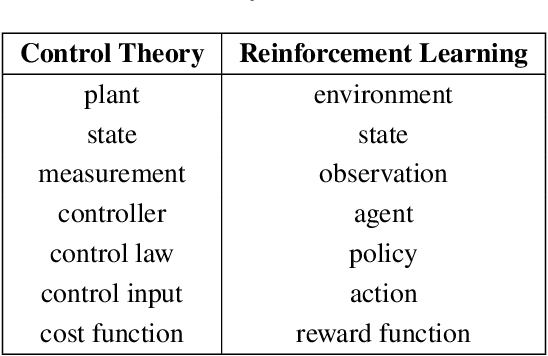

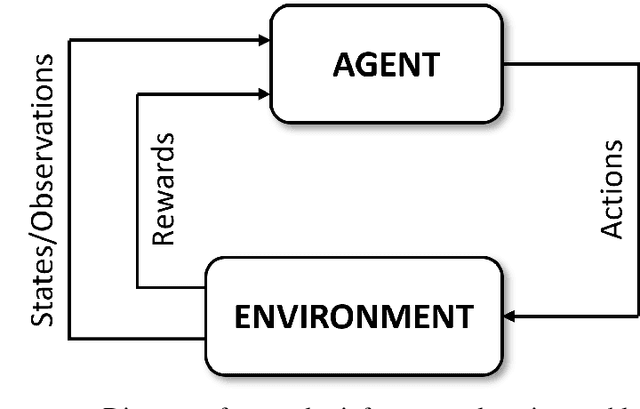

Abstract: For many space applications, traditional control methods are often used during operation. However, as the number of space assets continues to grow, autonomous operation can enable rapid development of control methods for different space-related tasks. One method of developing autonomous control is Reinforcement Learning (RL), which has become increasingly popular after demonstrating promising performance and success across many complex tasks. While it is common for RL agents to learn bounded continuous control values, this may not be realistic or practical for many space tasks that traditionally prefer an on/off approach for control. This paper analyzes using discrete action spaces, where the agent must choose from a predefined list of actions. The experiments explore how the number of choices provided to the agents affects their measured performance during and after training. This analysis is conducted for an inspection task, where the agent must circumnavigate an object to inspect points on its surface, and a docking task, where the agent must move into proximity of another spacecraft and "dock" with a low relative speed. A common objective of both tasks, and most space tasks in general, is to minimize fuel usage, which motivates the agent to regularly choose an action that uses no fuel. Our results show that a limited number of discrete choices leads to optimal performance for the inspection task, while continuous control leads to optimal performance for the docking task.
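To make the idea of a "predefined list of actions" concrete, here is a minimal sketch of how a set of evenly spaced thrust choices could be built for one control axis. The function name `make_thrust_choices`, the normalized limit `u_max`, and the even spacing are assumptions made for illustration; the abstract does not state how the paper discretizes its actions.

```python
def make_thrust_choices(num_choices, u_max=1.0):
    """Return evenly spaced thrust values in [-u_max, u_max].

    An odd count is required so that the middle entry is exactly zero,
    giving the agent a choice that uses no fuel.
    """
    if num_choices < 3 or num_choices % 2 == 0:
        raise ValueError("num_choices must be an odd integer >= 3")
    half = num_choices // 2
    return [u_max * (i - half) / half for i in range(num_choices)]


# Hypothetical example: an on/off style choice set and a finer one.
on_off_choices = make_thrust_choices(3)
finer_choices = make_thrust_choices(5)
```

An agent with a discrete policy would then output an index into such a list for each axis, so increasing `num_choices` moves the action space closer to continuous control.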

Collision Avoidance and Geofencing for Fixed-wing Aircraft with Control Barrier Functions

Mar 07, 2024

Abstract: Safety-critical failures often have fatal consequences in aerospace control. Control systems on aircraft, therefore, must ensure the strict satisfaction of safety constraints, preferably with formal guarantees of safe behavior. This paper establishes the safety-critical control of fixed-wing aircraft in collision avoidance and geofencing tasks. A control framework is developed wherein a run-time assurance (RTA) system modulates the nominal flight controller of the aircraft whenever necessary to prevent it from colliding with other aircraft or crossing a boundary (geofence) in space. The RTA is formulated as a safety filter using control barrier functions (CBFs) with formal guarantees of safe behavior. CBFs are constructed and compared for a nonlinear kinematic fixed-wing aircraft model. The proposed CBF-based controllers showcase the capability of safely executing simultaneous collision avoidance and geofencing, as demonstrated by simulations on the kinematic model and a high-fidelity dynamical model.
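As a minimal illustration of the safety-filter idea, the sketch below applies a CBF condition to a one-dimensional single integrator (position rate equals the commanded velocity) with a single geofence. This is a toy stand-in, not the fixed-wing model or the CBFs from the paper; the names `cbf_filter` and `simulate`, the boundary `x_max`, and the gain `alpha` are hypothetical.

```python
def cbf_filter(x, u_nom, x_max=10.0, alpha=1.0):
    """Minimally modify u_nom so that h(x) = x_max - x stays non-negative.

    For dynamics x' = u, the CBF condition h' >= -alpha * h reduces to
    u <= alpha * (x_max - x), so the closest safe input is a simple min.
    """
    h = x_max - x
    return min(u_nom, alpha * h)


def simulate(x0=0.0, u_nom=2.0, dt=0.01, steps=2000):
    """Forward-Euler rollout with the filter in the loop."""
    xs = [x0]
    for _ in range(steps):
        u = cbf_filter(xs[-1], u_nom)
        xs.append(xs[-1] + dt * u)
    return xs


# The nominal command pushes toward the fence; the filter slows it down.
trajectory = simulate()
```

Far from the boundary the filter passes the nominal command through unchanged, and it only intervenes as the state approaches `x_max`, which is the "whenever necessary" behavior the abstract attributes to the RTA system.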

Testing Spacecraft Formation Flying with Crazyflie Drones as Satellite Surrogates

Feb 22, 2024

Abstract: As the space domain becomes increasingly congested, autonomy is proposed as one approach to enable small numbers of human ground operators to manage large constellations of satellites and tackle more complex missions such as on-orbit or in-space servicing, assembly, and manufacturing. One of the biggest challenges in developing novel spacecraft autonomy is finding mechanisms to test and evaluate its performance. Testing spacecraft autonomy on-orbit can be high risk and prohibitively expensive. An alternative method is to test autonomy terrestrially using satellite surrogates such as attitude test beds on air bearings or drones for translational motion visualization. Against this background, this work develops an approach to evaluate autonomous spacecraft behavior using a surrogate platform, namely a micro-quadcopter drone developed by the Bitcraze team, the Crazyflie 2.1. The Crazyflie drones are becoming increasingly ubiquitous in flight testing labs because they are affordable, open source, readily available, and include expansion decks which allow for features such as positioning systems, distance and/or motion sensors, wireless charging, and AI capabilities. In this paper, models of Crazyflie drones are used to simulate the relative motion dynamics of spacecraft under linearized Clohessy-Wiltshire dynamics in elliptical natural motion trajectories, in pre-generated docking trajectories, and via trajectories output by neural network control systems.
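For readers unfamiliar with Clohessy-Wiltshire dynamics, the following sketch evaluates the standard closed-form, unforced solution and sets up an elliptical natural motion trajectory. It assumes the common axis convention (x radial, y along-track, z cross-track); the mean motion `N` and the initial offset are hypothetical values chosen for illustration and are not taken from the paper.

```python
import math


def cw_position(r0, v0, n, t):
    """Relative position at time t under unforced Clohessy-Wiltshire dynamics.

    r0, v0: initial relative position (m) and velocity (m/s) as 3-tuples.
    n: mean motion of the chief's circular orbit (rad/s).
    """
    x0, y0, z0 = r0
    vx0, vy0, vz0 = v0
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0 - (2 / n) * (1 - c) * vx0
         + ((4 * s - 3 * n * t) / n) * vy0)
    z = c * z0 + (s / n) * vz0
    return (x, y, z)


N = 0.001027                        # assumed mean motion, rad/s
R0 = (100.0, 0.0, 0.0)              # assumed 100 m radial offset
V0 = (0.0, -2.0 * N * R0[0], 0.0)   # vy0 = -2*n*x0 cancels along-track drift
PERIOD = 2 * math.pi / N
```

With the drift-free initial velocity, the deputy traces a closed 2:1 ellipse around the chief and returns to `R0` after one orbital period, which is the kind of trajectory a surrogate drone can be commanded to follow.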

Safety, Trust, and Ethics Considerations for Human-AI Teaming in Aerospace Control

Nov 15, 2023

Abstract: Designing a safe, trusted, and ethical AI may be practically impossible; however, designing AI with safe, trusted, and ethical use in mind is possible and necessary in safety and mission-critical domains like aerospace. Safe, trusted, and ethical use of AI are often used interchangeably; however, a system can be safely used but not trusted or ethical, have a trusted use that is not safe or ethical, and have an ethical use that is not safe or trusted. This manuscript serves as a primer to illuminate the nuanced differences between these concepts, with a specific focus on applications of Human-AI teaming in aerospace system control, where humans may be in, on, or out-of-the-loop of decision-making.

Deep Reinforcement Learning for Autonomous Spacecraft Inspection using Illumination

Aug 04, 2023

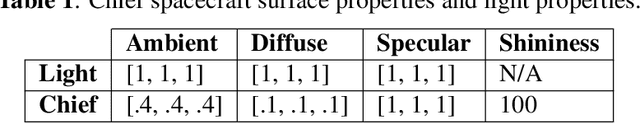

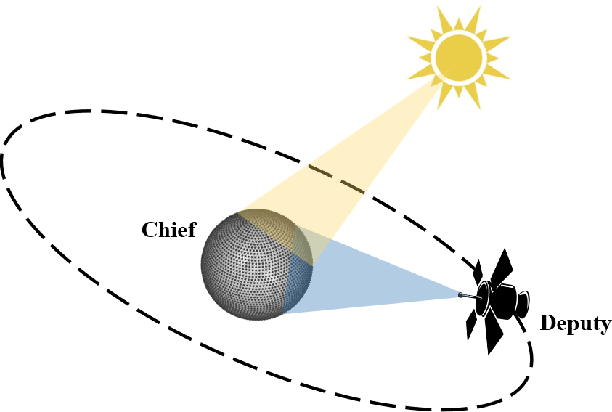

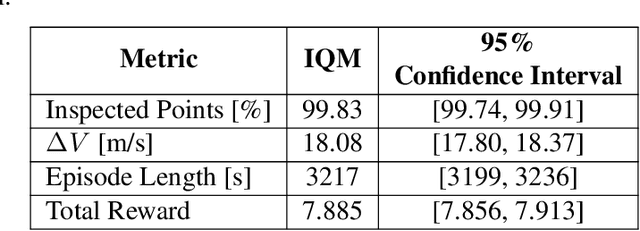

Abstract: This paper investigates the problem of on-orbit spacecraft inspection using a single free-flying deputy spacecraft, equipped with an optical sensor, whose controller is a neural network control system trained with Reinforcement Learning (RL). This work considers the illumination of the inspected spacecraft (chief) by the Sun in order to incentivize acquisition of well-illuminated optical data. The agent's performance is evaluated through statistically efficient metrics. Results demonstrate that the RL agent is able to inspect all points on the chief successfully, while maximizing illumination on inspected points in a simulated environment, using only low-level actions. Due to the stochastic nature of RL, 10 policies were trained using 10 random seeds to obtain a more holistic measure of agent performance. Over these 10 seeds, the interquartile mean (IQM) percentage of inspected points for the finalized model was 98.82%.
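The interquartile mean mentioned above can be sketched as follows. This simple version drops a whole number of samples (the sample count divided by four, rounded down) from each end before averaging; the paper's exact computation is not given in the abstract, and the scores below are made-up placeholders, not the paper's data.

```python
def interquartile_mean(values):
    """Mean of what remains after dropping the lowest and highest quarter."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    cut = len(ordered) // 4  # whole samples trimmed from each end
    middle = ordered[cut:len(ordered) - cut]
    return sum(middle) / len(middle)


# Hypothetical per-seed scores (percent), with one poorly performing seed.
scores = [100.0, 99.0, 98.0, 100.0, 97.0, 100.0, 60.0, 99.0, 100.0, 98.0]
iqm = interquartile_mean(scores)
plain_mean = sum(scores) / len(scores)
```

Because the single outlier seed is trimmed away, the IQM stays close to the typical score while the plain mean is pulled down, which is why the metric is attractive when only a handful of seeds are available.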
