Kei Kase

AIST

Deep Predictive Learning: Motion Learning Concept inspired by Cognitive Robotics

Jun 26, 2023

Abstract: A deep learning-based approach can generalize model performance while reducing feature design costs by learning environment recognition and motion generation end-to-end. However, when the robot makes physical contact with its environment, the process incurs large costs for collecting training data, as well as time and human resources for trial and error. Therefore, we propose "deep predictive learning," a motion learning concept that assumes the predictive model is imperfect and minimizes the prediction error with respect to the real-world situation. Deep predictive learning is inspired by the free energy principle and predictive coding theory, which explain how living organisms behave so as to minimize the prediction error between the real world and the brain. Robots predict near-future situations based on sensorimotor information and generate motions that minimize the gap with reality. The robot can flexibly perform tasks in unlearned situations by adjusting its motion in real time while accounting for the gap between learning and reality. This paper describes the concept of deep predictive learning, its implementation, and examples of its application to real robots. The code and documentation are available at https://ogata-lab.github.io/eipl-docs
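The predict-then-correct loop described in this abstract can be illustrated with a toy sketch. This is not the authors' implementation: a one-dimensional linear model stands in for the deep network, and the demonstration trajectory, the function names `fit_one_step_predictor` and `run_closed_loop`, and the disturbance values are all assumptions made for illustration. The point it shows is that each prediction starts from the measured state, so a deviation from the predicted state is absorbed on the following steps.

```python
def fit_one_step_predictor(trajectory):
    """Least-squares fit of x[t+1] ~ a * x[t] + b from one demonstration."""
    xs, ys = trajectory[:-1], trajectory[1:]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def run_closed_loop(a, b, start, steps, disturbance_at=None, disturbance=0.0):
    """Predict the next state, command it, then continue from the real state."""
    state = start
    history = [state]
    for t in range(steps):
        predicted = a * state + b   # near-future prediction from the current state
        state = predicted           # the robot is commanded to the predicted state
        if t == disturbance_at:
            state += disturbance    # reality deviates from the prediction
        history.append(state)       # the next prediction uses this measured state
    return history


# Hypothetical demonstration: a joint angle approaching a target of 1.0.
demo = [0.0]
for _ in range(30):
    demo.append(demo[-1] + 0.2 * (1.0 - demo[-1]))

a, b = fit_one_step_predictor(demo)

# Start from a state that is not in the demonstration and push the robot
# away from its predicted state at step 10.
history = run_closed_loop(a, b, start=0.5, steps=60,
                          disturbance_at=10, disturbance=-0.4)
print(f"a={a:.3f}, b={b:.3f}, final state={history[-1]:.4f}")
```

In this toy setting the loop still settles near the demonstrated target after the disturbance, because the model never rolls out open-loop from its own stale predictions.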

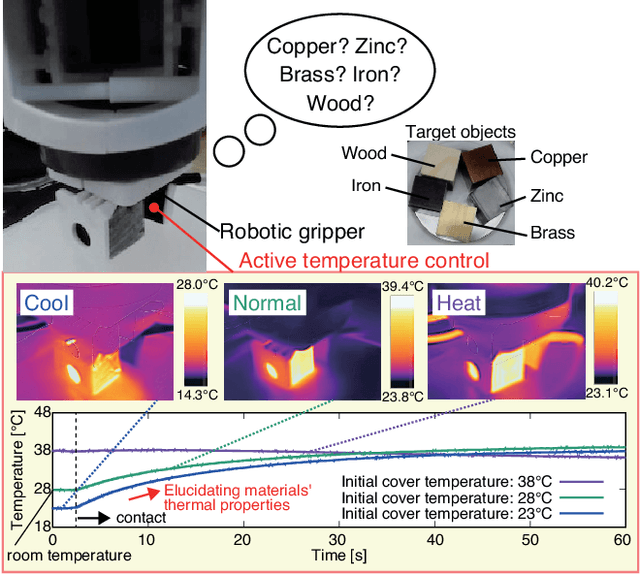

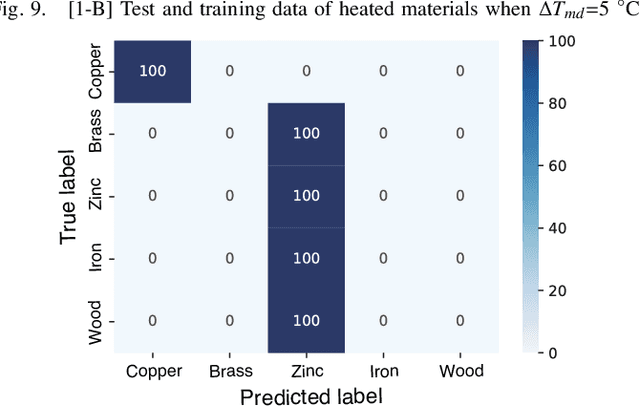

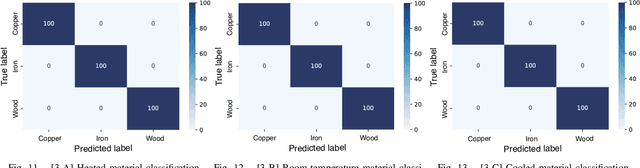

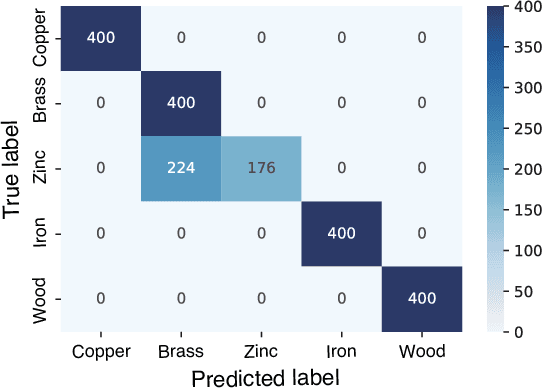

Material Classification Using Active Temperature Controllable Robotic Gripper

Nov 30, 2021

Abstract: Recognition techniques allow robots to devise appropriate planning and control strategies for manipulating various objects. Object recognition is more reliable when it combines several percepts, e.g., vision and haptics. One of the distinguishing features of an object's material is its thermal properties, and classification can exploit heat transfer, similarly to human thermal sensation. Thermal-based recognition has the advantage of obtaining contact surface information in real time by simply capturing temperature change using a tiny and cheap sensor. However, heat transfer between a robot surface and a contacted object is strongly affected by the initial temperature and environmental conditions. An object's material cannot be recognized when its temperature is the same as that of the robotic gripper tip. We present a material classification system that uses an active temperature-controllable robotic gripper to induce heat flow. As a result, our system can recognize materials independently of their ambient temperature. The robotic gripper surface can be regulated to any temperature that differs from that of the touched object's surface. We conducted experiments by integrating the temperature control system with the Academic SCARA Robot and classifying materials with a long short-term memory (LSTM) network using temperature data obtained while grasping target objects.
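The reason active temperature control matters can be seen in a minimal sketch, assuming a lumped Newton's-law-of-cooling model of the sensor reading (an assumption of this example, not the paper's thermal model). The conductance values, temperatures, and function names below are hypothetical. When the gripper starts at the object's temperature, no heat flows and every material produces the same flat reading; once the gripper is heated, the readings separate by material.

```python
def simulate_sensor(gripper_temp, object_temp, conductance, steps=50, dt=0.1):
    """Euler integration of dT/dt = -conductance * (T - object_temp)."""
    temp = gripper_temp
    readings = [temp]
    for _ in range(steps):
        temp += -conductance * (temp - object_temp) * dt
        readings.append(temp)
    return readings


# Hypothetical effective conductances, chosen only for illustration.
MATERIALS = {"metal": 2.0, "wood": 0.3}
OBJECT_TEMP = 25.0  # objects assumed to sit at ambient temperature (deg C)


def temperature_drop(gripper_temp):
    """Total change in the sensor reading per material over one simulated grasp."""
    drops = {}
    for name, conductance in MATERIALS.items():
        readings = simulate_sensor(gripper_temp, OBJECT_TEMP, conductance)
        drops[name] = readings[0] - readings[-1]
    return drops


passive = temperature_drop(gripper_temp=OBJECT_TEMP)  # gripper at object temperature
active = temperature_drop(gripper_temp=40.0)          # gripper heated above it

print("passive:", passive)  # identical readings, nothing to classify
print("active: ", active)   # material-dependent temperature drop
```

A sequence classifier such as the LSTM mentioned in the abstract would take time series like `readings` as input; that classifier is outside the scope of this sketch.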

Transferable Task Execution from Pixels through Deep Planning Domain Learning

Mar 08, 2020

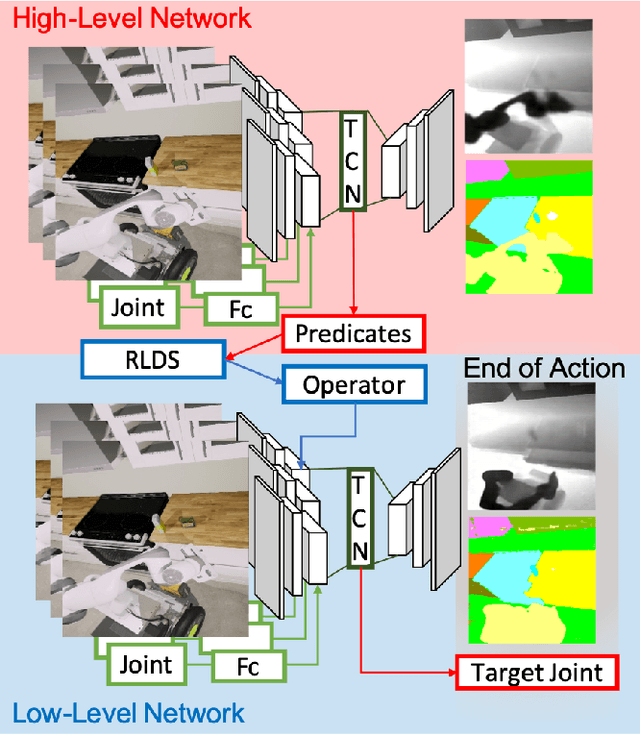

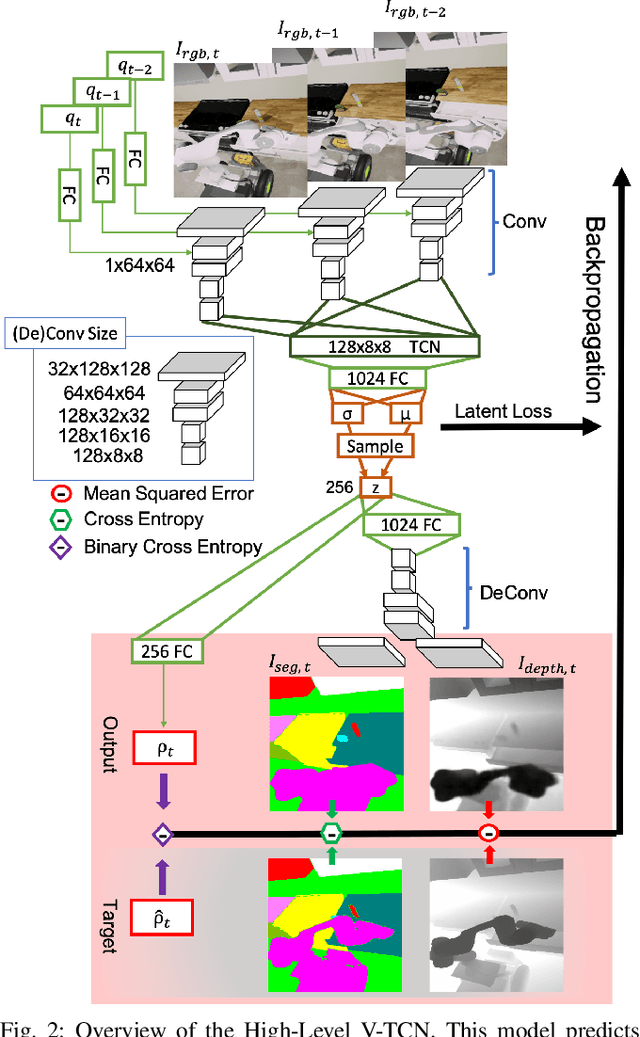

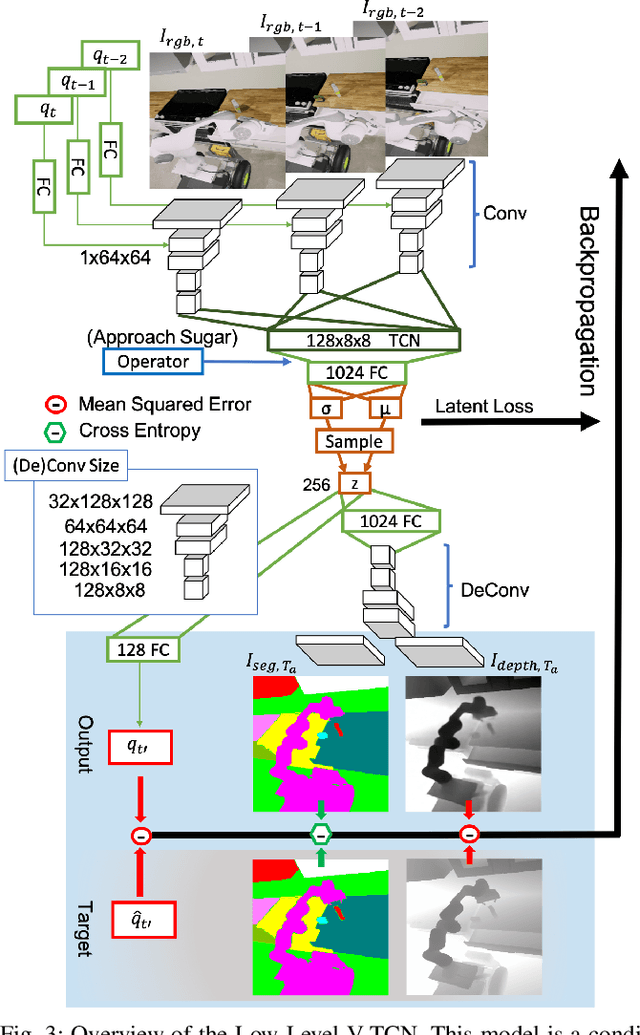

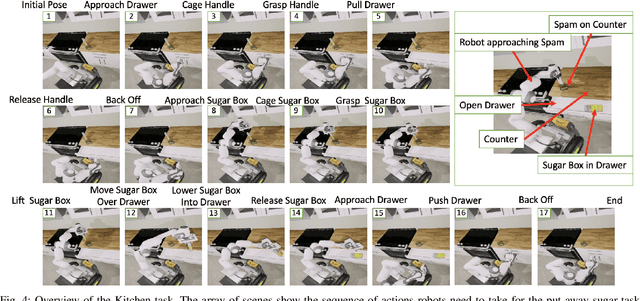

Abstract: While robots can learn models to solve many manipulation tasks from raw visual input, they usually cannot use these models to solve new problems. On the other hand, symbolic planning methods such as STRIPS have long been able to solve new problems given only a domain definition and a symbolic goal, but these approaches often struggle on real-world robotic tasks due to the challenges of grounding these symbols from sensor data in a partially observable world. We propose Deep Planning Domain Learning (DPDL), an approach that combines the strengths of both methods to learn a hierarchical model. DPDL learns a high-level model which predicts values for a large set of logical predicates that make up the current symbolic world state, and separately learns a low-level policy which translates symbolic operators into executable actions on the robot. This allows us to perform complex, multi-step tasks even when the robot has not been explicitly trained on them. We demonstrate our method on manipulation tasks in a photorealistic kitchen scenario.
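To make the symbolic side of this abstract concrete, here is a minimal STRIPS-style planner sketch. It is not DPDL: it assumes the symbolic state is already given as a set of true predicates, which is exactly the grounding step the paper learns from pixels. The kitchen predicates and operator names are hypothetical, and breadth-first search is used only because it is the simplest complete strategy.

```python
from collections import deque
from typing import NamedTuple


class Operator(NamedTuple):
    name: str
    preconditions: frozenset   # predicates that must hold before applying
    add_effects: frozenset     # predicates that become true
    delete_effects: frozenset  # predicates that become false


def plan(initial_state, goal, operators):
    """Breadth-first search over symbolic states; returns operator names or None."""
    start = frozenset(initial_state)
    goal = frozenset(goal)
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:  # every goal predicate holds
            return steps
        for op in operators:
            if op.preconditions <= state:
                successor = (state - op.delete_effects) | op.add_effects
                if successor not in visited:
                    visited.add(successor)
                    queue.append((successor, steps + [op.name]))
    return None


# Hypothetical kitchen domain, for illustration only.
OPERATORS = [
    Operator("open_drawer",
             frozenset({"drawer_closed", "hand_empty"}),
             frozenset({"drawer_open"}),
             frozenset({"drawer_closed"})),
    Operator("pick_cup",
             frozenset({"cup_on_table", "hand_empty"}),
             frozenset({"holding_cup"}),
             frozenset({"cup_on_table", "hand_empty"})),
    Operator("place_cup_in_drawer",
             frozenset({"holding_cup", "drawer_open"}),
             frozenset({"cup_in_drawer", "hand_empty"}),
             frozenset({"holding_cup"})),
    Operator("close_drawer",
             frozenset({"drawer_open", "hand_empty"}),
             frozenset({"drawer_closed"}),
             frozenset({"drawer_open"})),
]

steps = plan(
    initial_state={"drawer_closed", "cup_on_table", "hand_empty"},
    goal={"cup_in_drawer", "drawer_closed"},
    operators=OPERATORS,
)
print(steps)
```

Changing only the `goal` set yields a different plan without any retraining, which is the property of symbolic planning that the abstract contrasts with end-to-end learned models.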
