Ke-Jyun Wang

TrUMAn: Trope Understanding in Movies and Animations

Aug 21, 2021

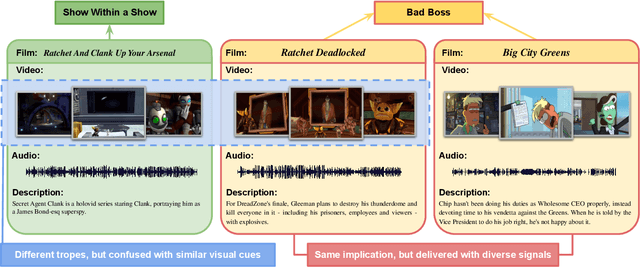

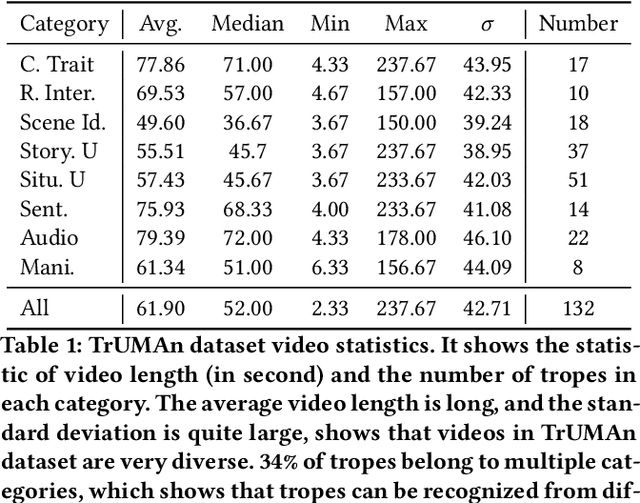

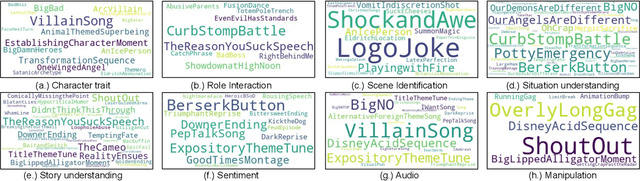

Abstract: Understanding and comprehending video content is crucial for many real-world applications such as search and recommendation systems. While recent progress in deep learning has boosted performance on various tasks using visual cues, deep cognition to reason about intentions, motivation, or causality remains challenging. Existing datasets that aim to examine video reasoning capability focus on visual signals such as actions, objects, and relations, or can be answered by exploiting text bias. Observing this, we propose a novel task, along with a new dataset: Trope Understanding in Movies and Animations (TrUMAn), with 2423 videos associated with 132 tropes, intended to evaluate and develop learning systems beyond visual signals. Tropes are frequently used storytelling devices for creative works. By coping with the trope understanding task and enabling the deep cognition skills of machines, data mining applications and algorithms could be taken to the next level. To tackle the challenging TrUMAn dataset, we present a Trope Understanding and Storytelling (TrUSt) model with a new Conceptual Storyteller module, which guides the video encoder by performing video storytelling in a latent space. Experimental results demonstrate that state-of-the-art learning systems on existing tasks reach only 12.01% accuracy with raw input signals. Also, even in the oracle case with human-annotated descriptions, BERT contextual embedding achieves at most 28% accuracy. Our proposed TrUSt boosts the model performance and reaches 13.94% accuracy. We also provide detailed analysis to pave the way for future research. TrUMAn is publicly available at: https://www.cmlab.csie.ntu.edu.tw/project/trope

OCID-Ref: A 3D Robotic Dataset with Embodied Language for Clutter Scene Grounding

Mar 13, 2021

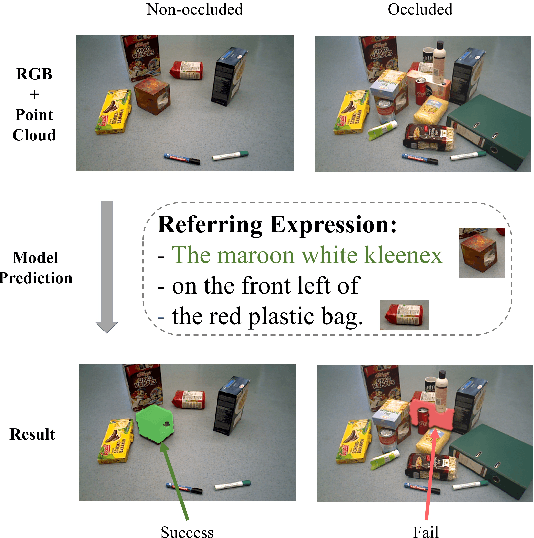

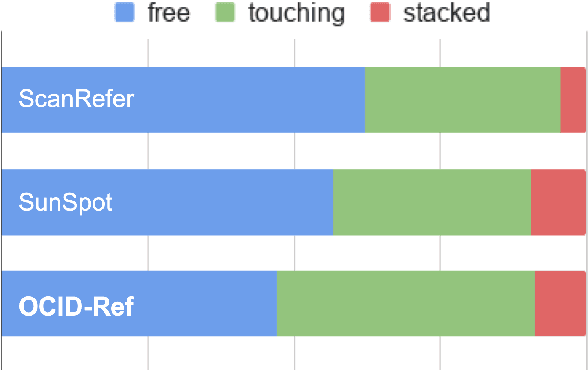

Abstract: To effectively apply robots in working environments and assist humans, it is essential to develop and evaluate how visual grounding (VG) can affect machine performance on occluded objects. However, current VG works are limited in working environments, such as offices and warehouses, where objects are usually occluded due to space utilization issues. In our work, we propose a novel OCID-Ref dataset featuring a referring expression segmentation task with referring expressions of occluded objects. OCID-Ref consists of 305,694 referring expressions from 2,300 scenes, providing RGB image and point cloud inputs. We argue that it is crucial to take advantage of both 2D and 3D signals to resolve challenging occlusion issues. Our experimental results demonstrate the effectiveness of aggregating 2D and 3D signals, but referring to occluded objects remains challenging for modern visual grounding systems. OCID-Ref is publicly available at https://github.com/lluma/OCID-Ref

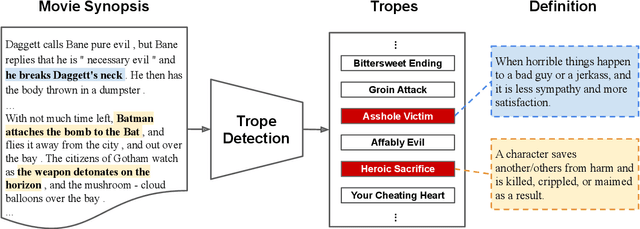

Situation and Behavior Understanding by Trope Detection on Films

Jan 19, 2021

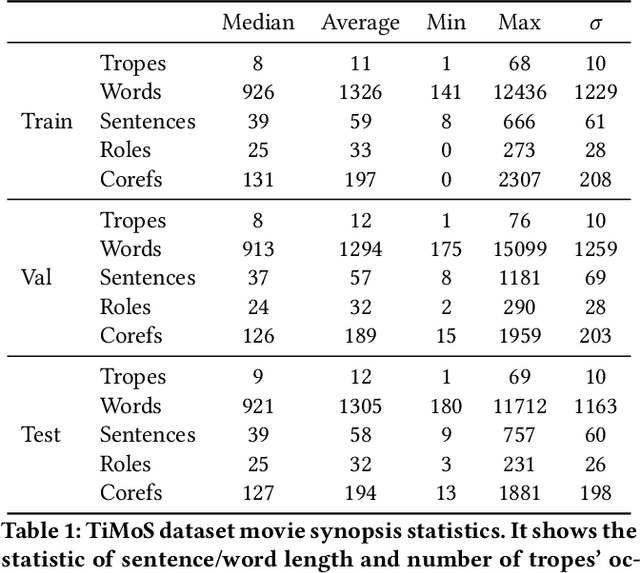

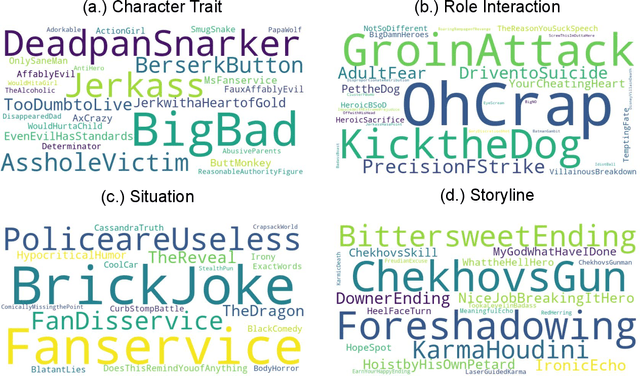

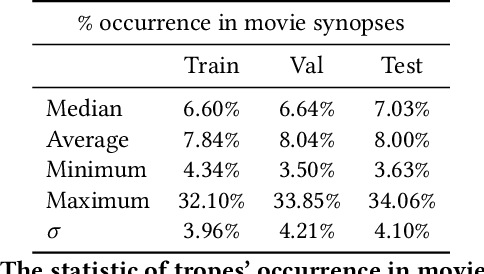

Abstract: The human ability of deep cognitive skills is crucial for the development of various real-world applications that process diverse and abundant user-generated input. While recent progress in deep learning and natural language processing has enabled learning systems to reach human performance on some benchmarks requiring shallow semantics, such human ability remains challenging even for modern contextual embedding models, as pointed out by many recent studies. Existing machine comprehension datasets assume sentence-level input, lack causal or motivational inferences, or can be answered with question-answer bias. Here, we present a challenging novel task, trope detection on films, in an effort to create situation and behavior understanding for machines. Tropes are storytelling devices that are frequently used as ingredients in recipes for creative works. Compared to existing movie tag prediction tasks, tropes are more sophisticated, as they can vary widely, from a moral concept to a series of circumstances, and are embedded with motivations and cause-and-effect relations. We introduce a new dataset, Tropes in Movie Synopses (TiMoS), with 5623 movie synopses and 95 different tropes collected from a Wikipedia-style database, TVTropes. We present a multi-stream comprehension network (MulCom) leveraging multi-level attention over words, sentences, and role relations. Experimental results demonstrate that modern models, including BERT contextual embedding, movie tag prediction systems, and relational networks, reach at most 37% of human performance (23.97/64.87) in terms of F1 score. Our MulCom outperforms all modern baselines by 1.5 to 5.0 F1 score and 1.5 to 3.0 mean average precision (mAP) score. We also provide a detailed analysis and human evaluation to pave the way for future research.
