Kazuki Yoshiyama

NDJIR: Neural Direct and Joint Inverse Rendering for Geometry, Lights, and Materials of Real Object

Feb 02, 2023

Abstract: The goal of inverse rendering is to decompose geometry, lights, and materials given posed multi-view images. To achieve this goal, we propose neural direct and joint inverse rendering (NDJIR). Unlike prior works, which rely on approximations of the rendering equation, NDJIR directly addresses the integrals in the rendering equation and jointly decomposes geometry (signed distance function), lights (environment and implicit lights), and materials (base color, roughness, specular reflectance) using a powerful and flexible volume rendering framework, voxel grid features, and a Bayesian prior. Because our method directly uses physically-based rendering, we can seamlessly export an extracted mesh with materials to DCC tools, and we show material conversion examples. We perform extensive experiments to show that our proposed method decomposes scenes in a semantically meaningful way for real objects in a photogrammetric setting, and to identify which factors contribute to accurate inverse rendering.
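
For reference, the rendering equation whose integral the abstract refers to is usually written in the following standard form. The symbols are the conventional textbook ones and are not taken from the paper: $L_o$ is outgoing radiance, $L_e$ emitted radiance, $f_r$ the BRDF, $L_i$ incoming radiance, $\mathbf{n}$ the surface normal, and $\Omega$ the hemisphere around $\mathbf{n}$.

```latex
L_o(\mathbf{x}, \omega_o)
  = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
      L_i(\mathbf{x}, \omega_i)\,
      (\mathbf{n} \cdot \omega_i)\, \mathrm{d}\omega_i
```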

Neural Network Libraries: A Deep Learning Framework Designed from Engineers' Perspectives

Feb 12, 2021

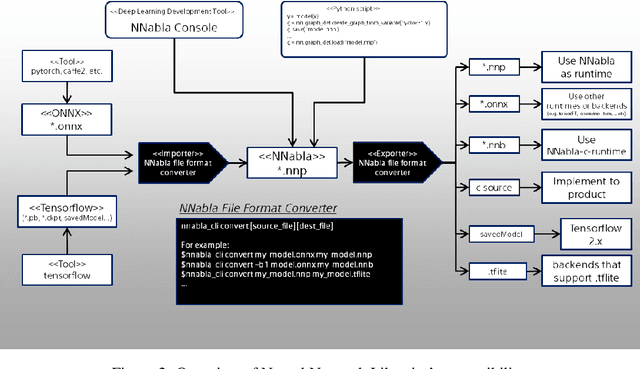

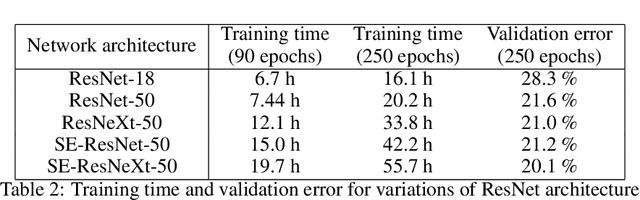

Abstract: While there exists a plethora of deep learning tools and frameworks, the fast-growing complexity of the field brings new demands and challenges, such as more flexible network design, fast computation in distributed settings, and compatibility between different tools. In this paper, we introduce Neural Network Libraries (https://nnabla.org), a deep learning framework designed from an engineer's perspective, with usability and compatibility as its core design principles. We elaborate on each of our design principles and its merits, and validate our approach via experiments.

Efficient Sampling for Predictor-Based Neural Architecture Search

Nov 24, 2020

Abstract: Recently, predictor-based algorithms have emerged as a promising approach for neural architecture search (NAS). For NAS, we typically have to calculate the validation accuracy of a large number of deep neural networks (DNNs), which is computationally expensive. Predictor-based NAS algorithms address this problem. They train a proxy model that can infer the validation accuracy of DNNs directly from their network structure. During optimization, the proxy can be used to narrow down the number of architectures for which the true validation accuracy must be computed, which makes predictor-based algorithms sample efficient. Usually, we compute the proxy for all DNNs in the network search space and pick those that maximize the proxy as candidates for optimization. However, that is intractable in practice, because the search spaces are often very large and contain billions of network architectures. The contributions of this paper are threefold: 1) We define a sample efficiency gain to compare different predictor-based NAS algorithms. 2) We conduct experiments on the NASBench-101 dataset and show that the sample efficiency of predictor-based algorithms decreases dramatically if the proxy is only computed for a subset of the search space. 3) We show that if we choose the subset of the search space on which the proxy is evaluated in a smart way, the sample efficiency of the original predictor-based algorithm that has access to the full search space can be regained. This is an important step towards making predictor-based NAS algorithms useful in practice.
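
The candidate-selection step described in this abstract can be sketched as follows. This is a minimal illustration, not the paper's algorithm: `select_candidates`, the integer-encoded search space, and the `predict` proxy are all hypothetical, and the subset is drawn uniformly at random, which is the naive baseline the abstract reports as losing sample efficiency rather than the smarter subset choice the paper proposes.

```python
import numpy as np

def select_candidates(predict, search_space, subset_size, top_k, rng):
    """Score a random subset of the search space with the proxy and
    return the indices of the top_k highest-scoring architectures.

    predict      -- hypothetical proxy: array of encodings -> predicted accuracy
    search_space -- array with one encoded architecture per row
    """
    # Evaluate the proxy on a subset only, since scoring every
    # architecture is intractable for very large search spaces.
    idx = rng.choice(len(search_space), size=subset_size, replace=False)
    scores = predict(search_space[idx])
    # Sort by predicted accuracy, best first, and keep the top_k.
    order = np.argsort(scores)[::-1][:top_k]
    return idx[order]

# Toy usage: 1000 "architectures" encoded by a single integer feature,
# with a stand-in proxy that simply returns that feature as the score.
space = np.arange(1000).reshape(-1, 1)
proxy = lambda archs: archs[:, 0].astype(float)
rng = np.random.default_rng(0)
full = select_candidates(proxy, space, subset_size=1000, top_k=3, rng=rng)
partial = select_candidates(proxy, space, subset_size=100, top_k=3, rng=rng)
```

Only the returned candidates would then be trained to obtain their true validation accuracy; with `subset_size` equal to the full space, the function reduces to the exhaustive selection the abstract calls intractable.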

Iteratively Training Look-Up Tables for Network Quantization

Nov 12, 2019

Abstract: Operating deep neural networks (DNNs) on devices with limited resources requires reducing both their memory and computational footprint. Popular reduction methods are network quantization and pruning, which respectively reduce the word length of the network parameters and remove weights from the network if they are not needed. In this article, we discuss a general framework for network reduction which we call "Look-Up Table Quantization" (LUT-Q). For each layer, we learn a value dictionary and an assignment matrix to represent the network weights. We propose a special solver which combines gradient descent and a one-step k-means update to learn both the value dictionaries and assignment matrices iteratively. This method is very flexible: by constraining the value dictionary, many different reduction problems such as non-uniform network quantization, training of multiplierless networks, network pruning, or simultaneous quantization and pruning can be implemented without changing the solver. This flexibility allows us to use the same method to train networks for different hardware capabilities.
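
The idea of alternating gradient descent with a one-step k-means update can be sketched on a toy problem. This is a rough illustration built only from the abstract: the linear least-squares objective, the function names `kmeans_step` and `lutq_train`, and the choice to apply the gradient of the quantized weights to a full-precision copy are all assumptions, not the authors' solver.

```python
import numpy as np

def kmeans_step(weights, dictionary):
    """One k-means update: reassign every weight to its nearest
    dictionary value, then move each value to the mean of its members."""
    flat = weights.ravel()
    assign = np.abs(flat[:, None] - dictionary[None, :]).argmin(axis=1)
    new_dict = dictionary.copy()
    for k in range(dictionary.size):
        members = flat[assign == k]
        if members.size > 0:  # an empty cluster keeps its old value
            new_dict[k] = members.mean()
    return new_dict, assign.reshape(weights.shape)

def lutq_train(x, y, num_values=4, steps=200, lr=0.05, seed=0):
    """Fit y ~ x @ Q where Q = dictionary[assign] takes at most
    num_values distinct values (toy objective, assumed for illustration)."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.5, size=(x.shape[1], y.shape[1]))
    dictionary = np.linspace(w.min(), w.max(), num_values)
    dictionary, assign = kmeans_step(w, dictionary)
    for _ in range(steps):
        q = dictionary[assign]                 # quantized weights for the forward pass
        grad = x.T @ (x @ q - y) / x.shape[0]  # gradient of the loss w.r.t. q
        w -= lr * grad                         # applied to the full-precision weights
        dictionary, assign = kmeans_step(w, dictionary)
    return dictionary, assign

# Toy usage on random data.
x = np.random.default_rng(1).normal(size=(64, 8))
y = x @ np.random.default_rng(2).normal(size=(8, 3))
dictionary, assign = lutq_train(x, y)
quantized = dictionary[assign]
```

Constraining `dictionary` after each update (for example, forcing one entry to zero, or rounding entries to powers of two) is the kind of change the abstract describes for pruning or multiplierless networks; the loop itself would stay the same.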

Differentiable Quantization of Deep Neural Networks

May 27, 2019

Abstract: We propose differentiable quantization (DQ) for efficient deep neural network (DNN) inference, where gradient descent is used to learn the quantizer's step size, dynamic range, and bitwidth. Training with differentiable quantizers brings two main benefits: first, DQ does not introduce hyperparameters; second, we can learn a different step size, dynamic range, and bitwidth for each layer. Our experiments show that DNNs with heterogeneous, learned bitwidths yield better performance than DNNs with a homogeneous one. Further, we show that there is one natural DQ parametrization that is especially well suited for training. We confirm our findings with experiments on CIFAR-10 and ImageNet, and we obtain quantized DNNs with learned quantization parameters that achieve state-of-the-art performance.
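
To make the notion of a quantizer with learnable parameters concrete, here is a minimal sketch of a symmetric uniform quantizer together with straight-through-style gradients for its step size and dynamic range. The function names, the `(step, qmax)` parametrization, and the gradient simplifications are assumptions chosen for illustration; the abstract does not specify which parametrization the paper recommends.

```python
import numpy as np

def quantize(x, step, qmax):
    """Symmetric uniform quantizer: clip to [-qmax, qmax], round to multiples of step."""
    return step * np.round(np.clip(x, -qmax, qmax) / step)

def quantizer_grads(x, step, qmax):
    """Gradients of quantize() w.r.t. step and qmax, treating round() as
    the identity in the backward pass (straight-through estimator) and
    assuming qmax is a multiple of step."""
    inside = np.abs(x) <= qmax
    # Inside the range only the step size matters; outside, only the range.
    d_step = np.where(inside, np.round(x / step) - x / step, 0.0)
    d_qmax = np.where(inside, 0.0, np.sign(x))
    return d_step, d_qmax

def bitwidth(step, qmax):
    """Bits needed to index the 2 * qmax / step + 1 levels of the quantizer."""
    return int(np.ceil(np.log2(2.0 * qmax / step + 1.0)))

x = np.array([0.26, -2.0, 0.0])
q = quantize(x, step=0.25, qmax=1.0)
d_step, d_qmax = quantizer_grads(x, step=0.25, qmax=1.0)
bits = bitwidth(step=0.25, qmax=1.0)
```

In this parametrization the bitwidth is not a free variable: it follows from the learned step size and dynamic range, which is one way a per-layer bitwidth could emerge from gradient descent on continuous parameters.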
