Kavan Singh Sikand

High-Speed Accurate Robot Control using Learned Forward Kinodynamics and Non-linear Least Squares Optimization

Jun 16, 2022

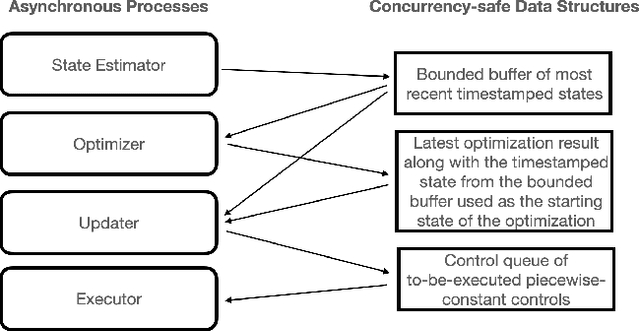

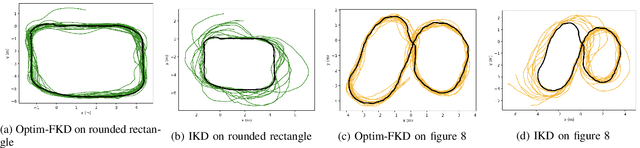

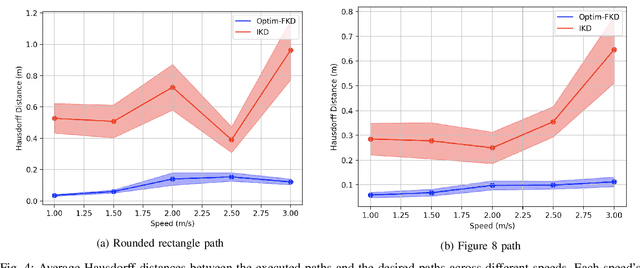

Abstract: Accurate control of robots in the real world requires a control system that is capable of taking into account the kinodynamic interactions of the robot with its environment. At high speeds, the dependence of the movement of the robot on these kinodynamic interactions becomes more pronounced, making high-speed, accurate robot control a challenging problem. Previous work has shown that learning the inverse kinodynamics (IKD) of the robot can be helpful for high-speed robot control. However, a learned inverse kinodynamic model can only be applied to a limited class of control problems, and different control problems require the learning of a new IKD model. In this work we present a new formulation for accurate, high-speed robot control that makes use of a learned forward kinodynamic (FKD) model and non-linear least squares optimization. By nature of the formulation, this approach is extensible to a wide array of control problems without requiring the retraining of a new model. We demonstrate the ability of this approach to accurately control a one-tenth-scale robot car at high speeds, and show improved results over baselines.
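The idea of inverting a forward model by optimization can be illustrated with a small sketch. This is not the paper's implementation: `toy_fkd` is a hypothetical hand-written stand-in for a learned FKD model, its coefficients are made up, and the solver is a plain Gauss-Newton loop with a finite-difference Jacobian. Because the toy problem has two unknowns and two residuals, each Gauss-Newton step reduces to a Newton step on a 2x2 system.

```python
def toy_fkd(control):
    """Hypothetical stand-in for a learned FKD model (assumption, not from
    the paper): maps a commanded (velocity, curvature) to the
    (velocity, curvature) the robot actually achieves."""
    v_cmd, k_cmd = control
    v_out = 0.9 * v_cmd                        # made-up drivetrain loss
    k_out = k_cmd / (1.0 + 0.1 * v_cmd ** 2)   # made-up understeer term
    return (v_out, k_out)


def residual(control, target):
    """Difference between the predicted and the desired motion."""
    out = toy_fkd(control)
    return [out[0] - target[0], out[1] - target[1]]


def solve_control(target, guess, iters=20, eps=1e-6):
    """Find the command whose predicted outcome matches `target`
    by minimizing the squared residual with Gauss-Newton."""
    u = list(guess)
    for _ in range(iters):
        r = residual(u, target)
        # Finite-difference Jacobian: J[i][j] = d r_i / d u_j
        J = [[0.0, 0.0], [0.0, 0.0]]
        for j in range(2):
            u_step = list(u)
            u_step[j] += eps
            r_step = residual(u_step, target)
            for i in range(2):
                J[i][j] = (r_step[i] - r[i]) / eps
        # Solve the 2x2 linear system J * delta = -r in closed form.
        det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
        d0 = (-r[0] * J[1][1] + r[1] * J[0][1]) / det
        d1 = (r[0] * J[1][0] - r[1] * J[0][0]) / det
        u[0] += d0
        u[1] += d1
    return u


# Desired motion: 2.7 m/s at curvature 0.5 1/m; start from the naive command.
control = solve_control(target=(2.7, 0.5), guess=(2.7, 0.5))
```

For this toy model the solver settles on a command of approximately (3.0, 0.95), that is, it asks for more speed and more curvature than desired to compensate for the modeled losses. Changing the control problem only changes `residual`; the forward model stays the same, which is the extensibility argument the abstract makes.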

VI-IKD: High-Speed Accurate Off-Road Navigation using Learned Visual-Inertial Inverse Kinodynamics

Mar 30, 2022

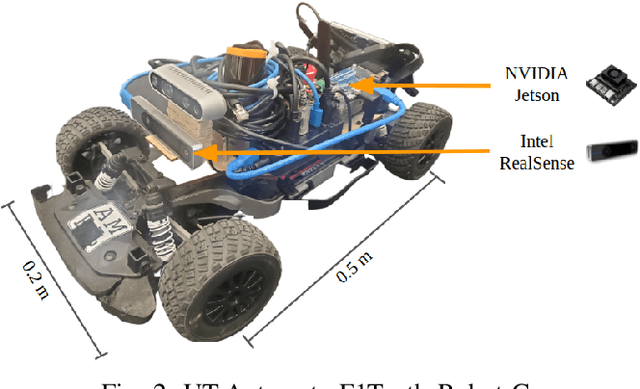

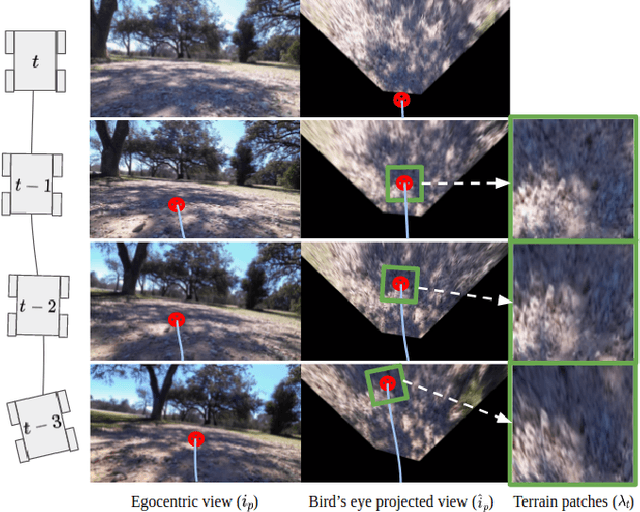

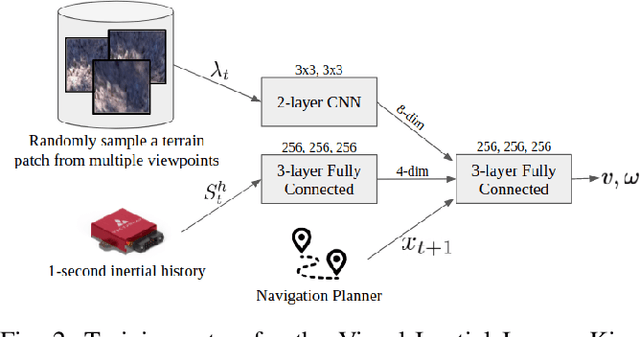

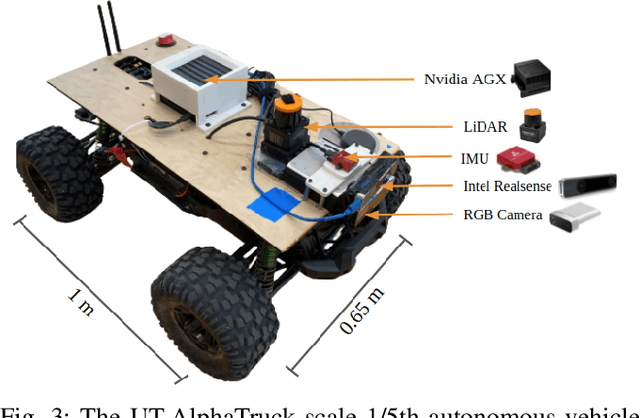

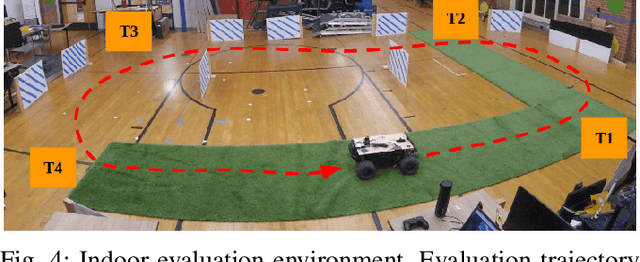

Abstract: One of the key challenges in high-speed off-road navigation on ground vehicles is that the kinodynamics of the vehicle-terrain interaction can differ dramatically depending on the terrain. Previous approaches to addressing this challenge have considered learning an inverse kinodynamics (IKD) model, conditioned on inertial information of the vehicle to sense the kinodynamic interactions. In this paper, we hypothesize that to enable accurate high-speed off-road navigation using a learned IKD model, in addition to inertial information from the past, one must also anticipate the kinodynamic interactions of the vehicle with the terrain in the future. To this end, we introduce Visual-Inertial Inverse Kinodynamics (VI-IKD), a novel learning-based IKD model that is conditioned on visual information from a terrain patch ahead of the robot in addition to past inertial information, enabling it to anticipate kinodynamic interactions in the future. We validate the effectiveness of VI-IKD in accurate high-speed off-road navigation experimentally on a 1/5-scale UT-AlphaTruck off-road autonomous vehicle in both indoor and outdoor environments and show that, compared to other state-of-the-art approaches, VI-IKD enables more accurate and robust off-road navigation on a variety of different terrains at speeds of up to 3.5 m/s.

Visual Representation Learning for Preference-Aware Path Planning

Sep 18, 2021

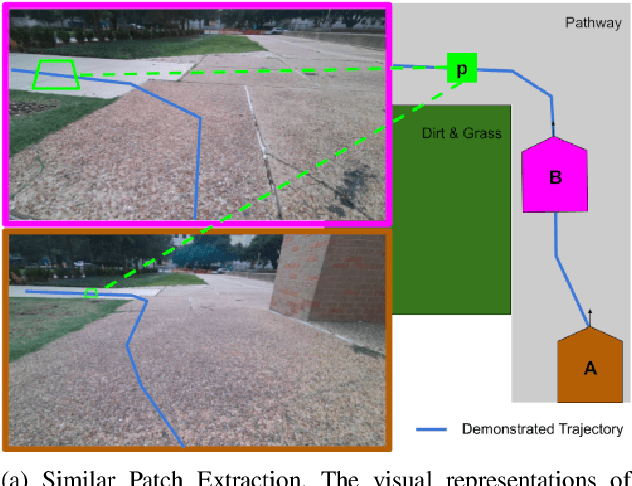

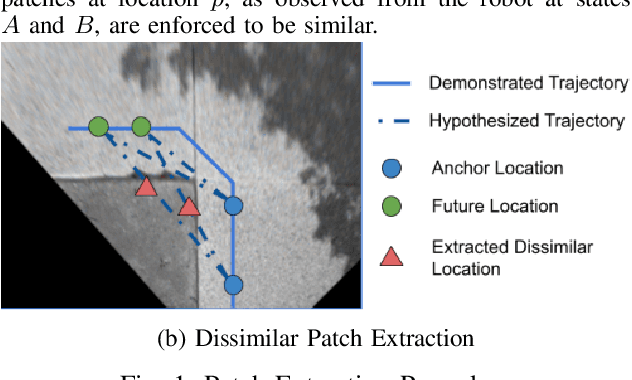

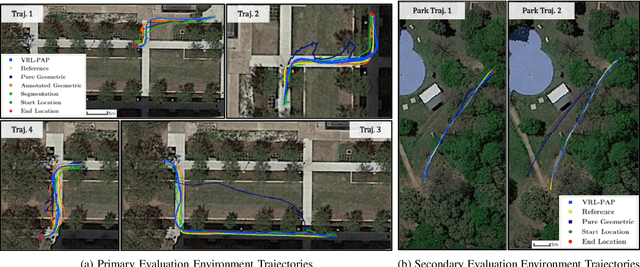

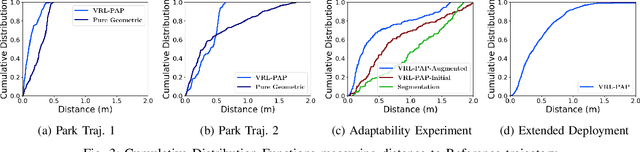

Abstract: Autonomous mobile robots deployed in outdoor environments must reason about different types of terrain for both safety (e.g., prefer dirt over mud) and deployer preferences (e.g., prefer dirt path over flower beds). Most existing solutions to this preference-aware path planning problem use semantic segmentation to classify terrain types from camera images, and then ascribe costs to each type. Unfortunately, there are three key limitations of such approaches: they 1) require pre-enumeration of the discrete terrain types, 2) are unable to handle hybrid terrain types (e.g., grassy dirt), and 3) require expensive labelled data to train visual semantic segmentation. We introduce Visual Representation Learning for Preference-Aware Path Planning (VRL-PAP), an alternative approach that overcomes all three limitations: VRL-PAP leverages unlabeled human demonstrations of navigation to autonomously generate triplets for learning visual representations of terrain that are viewpoint invariant and encode terrain types in a continuous representation space. The learned representations are then used along with the same unlabeled human navigation demonstrations to learn a mapping from the representation space to terrain costs. At run time, VRL-PAP maps from images to representations and then representations to costs to perform preference-aware path planning. We present empirical results from challenging outdoor settings that demonstrate that VRL-PAP 1) is successfully able to pick paths that reflect demonstrated preferences, 2) is comparable in execution to geometric navigation with a highly detailed manually annotated map (without requiring such annotations), and 3) is able to generalize to novel terrain types with minimal additional unlabeled demonstrations.
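Learning from triplets is commonly done with a margin-based triplet loss, sketched below. The abstract does not state which loss VRL-PAP uses, so the squared-distance form and the `margin` value are assumptions made for illustration. Here the anchor and positive would be embeddings of the same terrain patch seen from different viewpoints, and the negative an embedding of a different terrain.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard margin-based triplet loss (assumed form, not confirmed
    by the abstract). The loss is zero once the negative is farther
    from the anchor than the positive by at least `margin`."""
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Well-separated triplet: the negative is far away, so nothing to correct.
loss_good = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 0.0])

# Violating triplet: the negative is closer than the positive.
loss_bad = triplet_loss([0.0, 0.0], [2.0, 0.0], [1.0, 0.0])
```

In the first call the loss is 0.0 and in the second it is 4.0, so only the second triplet would contribute a training signal. Minimizing this quantity over many triplets pulls views of the same terrain together in the representation space and pushes different terrains apart, without requiring terrain labels.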

Robofleet: Secure Open Source Communication and Management for Fleets of Autonomous Robots

Mar 11, 2021

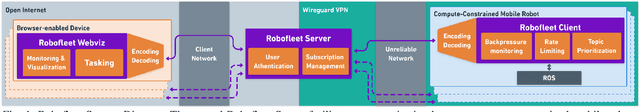

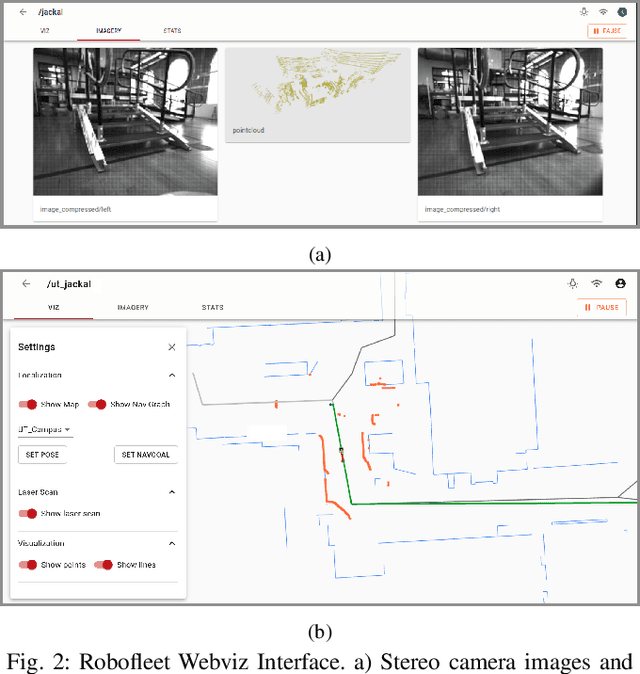

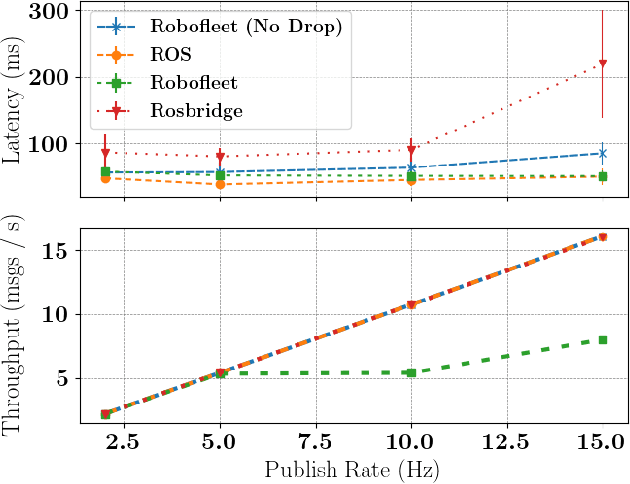

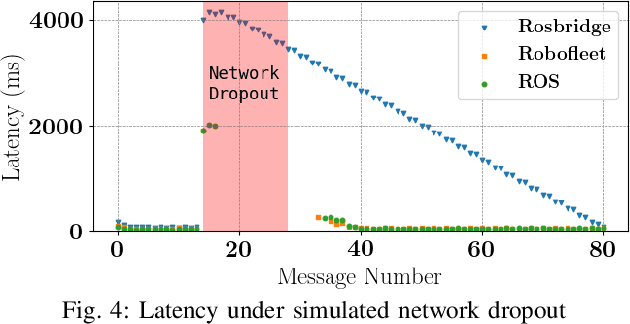

Abstract: Safe long-term deployment of a fleet of mobile robots requires reliable and secure two-way communication channels between individual robots and remote human operators for supervision and tasking. Existing open-source solutions to this problem degrade in performance in challenging real-world situations such as intermittent and low-bandwidth connectivity, do not provide security control options, and can be computationally expensive on hardware-constrained mobile robot platforms. In this paper, we present Robofleet, a lightweight open-source system which provides inter-robot communication, remote monitoring, and remote tasking for a fleet of ROS-enabled service-mobile robots that is designed with the practical goals of resilience to network variance and security control in mind. Robofleet supports multi-user, multi-robot communication via a central server. This architecture deduplicates network traffic between robots, significantly reducing overall network load when compared with native ROS communication. This server also functions as a single entry point into the system, enabling security control and user authentication. Individual robots run the lightweight Robofleet client, which is responsible for exchanging messages with the Robofleet server. It automatically adapts to adverse network conditions through backpressure monitoring as well as topic-level priority control, ensuring that safety-critical messages are successfully transmitted. Finally, the system includes a web-based visualization tool that can be run on any internet-connected, browser-enabled device to monitor and control the fleet. We compare Robofleet to existing methods of robotic communication, and demonstrate that it provides superior resilience to network variance while maintaining performance that exceeds that of widely-used systems.
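One way to picture topic-level priority control under backpressure is a bounded outgoing queue that sends the most urgent message first and sheds the least urgent one when the link cannot keep up. The sketch below is an illustration of that general idea only. `PriorityOutbox`, its methods, the topic names, and the priority values are all hypothetical and are not taken from the Robofleet client.

```python
import heapq
import itertools


class PriorityOutbox:
    """Hypothetical bounded send queue (not Robofleet's actual API)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []                 # entries: (-priority, seq, topic, payload)
        self._seq = itertools.count()   # tie-breaker; keeps tuple comparison well-defined

    def push(self, topic, payload, priority):
        """Queue a message; a larger `priority` means more urgent."""
        heapq.heappush(self._heap, (-priority, next(self._seq), topic, payload))
        if len(self._heap) > self.capacity:
            # Backpressure: the queue is full, so drop the least urgent entry.
            least_urgent = max(self._heap)
            self._heap.remove(least_urgent)
            heapq.heapify(self._heap)

    def pop(self):
        """Return the most urgent (topic, payload), or None if empty."""
        if not self._heap:
            return None
        _, _, topic, payload = heapq.heappop(self._heap)
        return topic, payload


outbox = PriorityOutbox(capacity=2)
outbox.push("camera", b"frame", priority=1)
outbox.push("estop", b"stop", priority=10)
outbox.push("odom", b"pose", priority=5)   # queue overflows, "camera" is dropped
first = outbox.pop()                       # ("estop", b"stop")
second = outbox.pop()                      # ("odom", b"pose")
```

With a capacity of two, the low-priority camera frame is discarded while the emergency-stop message is sent first, which mirrors the abstract's goal of ensuring that safety-critical messages get through on a constrained link.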
