Kathleen Champion

Discovering Governing Equations from Partial Measurements with Deep Delay Autoencoders

Jan 13, 2022

Abstract: A central challenge in data-driven model discovery is the presence of hidden, or latent, variables that are not directly measured but are dynamically important. Takens' theorem provides conditions for when it is possible to augment these partial measurements with time delayed information, resulting in an attractor that is diffeomorphic to that of the original full-state system. However, the coordinate transformation back to the original attractor is typically unknown, and learning the dynamics in the embedding space has remained an open challenge for decades. Here, we design a custom deep autoencoder network to learn a coordinate transformation from the delay embedded space into a new space where it is possible to represent the dynamics in a sparse, closed form. We demonstrate this approach on the Lorenz, Rössler, and Lotka-Volterra systems, learning dynamics from a single measurement variable. As a challenging example, we learn a Lorenz analogue from a single scalar variable extracted from a video of a chaotic waterwheel experiment. The resulting modeling framework combines deep learning to uncover effective coordinates and the sparse identification of nonlinear dynamics (SINDy) for interpretable modeling. Thus, we show that it is possible to simultaneously learn a closed-form model and the associated coordinate system for partially observed dynamics.
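The time-delay augmentation mentioned in this abstract can be illustrated with a minimal sketch. It only builds delay vectors from a scalar series; it is not the paper's autoencoder, and the function name `delay_embed` and the parameters `dim` and `lag` are hypothetical names chosen for illustration.

```python
def delay_embed(series, dim, lag=1):
    """Stack time-delayed copies of a scalar series into delay vectors.

    Row i is [s[i], s[i + lag], ..., s[i + (dim - 1) * lag]].
    `dim` and `lag` are illustrative names, not taken from the paper.
    """
    n_rows = len(series) - (dim - 1) * lag
    if n_rows <= 0:
        raise ValueError("series is too short for this dim and lag")
    return [[series[i + j * lag] for j in range(dim)] for i in range(n_rows)]


# A single measured variable sampled at six time steps.
measurements = [0, 1, 2, 3, 4, 5]
rows = delay_embed(measurements, dim=3, lag=2)
print(rows)
```

Each row is one point in the delay-embedded space; a coordinate transformation such as the one the abstract describes would take rows like these as its input.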

PySINDy: A comprehensive Python package for robust sparse system identification

Nov 12, 2021

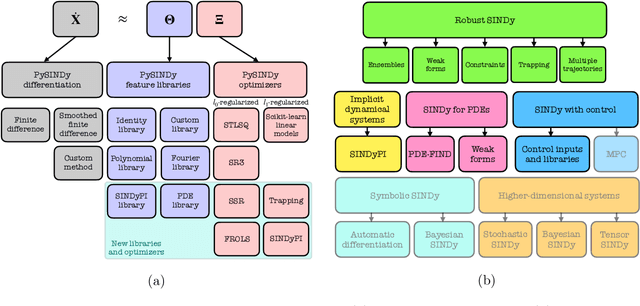

Abstract: Automated data-driven modeling, the process of directly discovering the governing equations of a system from data, is increasingly being used across the scientific community. PySINDy is a Python package that provides tools for applying the sparse identification of nonlinear dynamics (SINDy) approach to data-driven model discovery. In this major update to PySINDy, we implement several advanced features that enable the discovery of more general differential equations from noisy and limited data. The library of candidate terms is extended for the identification of actuated systems, partial differential equations (PDEs), and implicit differential equations. Robust formulations, including the integral form of SINDy and ensembling techniques, are also implemented to improve performance for real-world data. Finally, we provide a range of new optimization algorithms, including several sparse regression techniques and algorithms to enforce and promote inequality constraints and stability. Together, these updates enable entirely new SINDy model discovery capabilities that have not been reported in the literature, such as constrained PDE identification and ensembling with different sparse regression optimizers.

A unified sparse optimization framework to learn parsimonious physics-informed models from data

Jun 25, 2019

Abstract: Machine learning (ML) is redefining what is possible in data-intensive fields of science and engineering. However, applying ML to problems in the physical sciences comes with a unique set of challenges: scientists want physically interpretable models that can (i) generalize to predict previously unobserved behaviors, (ii) provide effective forecasting predictions (extrapolation), and (iii) be certifiable. Autonomous systems will necessarily interact with changing and uncertain environments, motivating the need for models that can accurately extrapolate based on physical principles (e.g. Newton's universal second law for classical mechanics, F=ma). Standard ML approaches have shown impressive performance for predicting dynamics in an interpolatory regime, but the resulting models often lack interpretability and fail to generalize. In this paper, we introduce a unified sparse optimization framework that learns governing dynamical systems models from data, selecting relevant terms in the dynamics from a library of possible functions. The resulting models are parsimonious, have physical interpretations, and can generalize to new parameter regimes. Our framework allows the use of non-convex sparsity promoting regularization functions and can be adapted to address key challenges in scientific problems and data sets, including outliers, parametric dependencies, and physical constraints. We show that the approach discovers parsimonious dynamical models on several example systems, including a spiking neuron model. This flexible approach can be tailored to the unique challenges associated with a wide range of applications and data sets, providing a powerful ML-based framework for learning governing models for physical systems from data.
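The idea of selecting a few relevant terms from a library of candidate functions can be sketched with sequentially thresholded least squares, a simple sparse regression scheme. This is a swapped-in stand-in for illustration and not the optimization framework the paper introduces; the function name `stlsq`, the `threshold` value, the toy system, and the four-term library are all assumptions.

```python
import numpy as np


def stlsq(theta, dxdt, threshold=0.1, max_iter=10):
    """Sequentially thresholded least squares (illustrative sketch).

    theta: (n_samples, n_terms) library of candidate functions.
    dxdt:  (n_samples, n_states) time derivatives.
    Returns a sparse (n_terms, n_states) coefficient matrix.
    """
    xi = np.linalg.lstsq(theta, dxdt, rcond=None)[0]
    for _ in range(max_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0  # drop terms with small coefficients
        for k in range(dxdt.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                # refit using only the surviving library terms
                xi[keep, k] = np.linalg.lstsq(
                    theta[:, keep], dxdt[:, k], rcond=None
                )[0]
    return xi


# Toy data (assumed): dx/dt = -2x, dy/dt = 1.5xy, with exact derivatives.
rng = np.random.default_rng(0)
states = rng.uniform(-1.0, 1.0, size=(200, 2))
x, y = states[:, 0], states[:, 1]
theta = np.column_stack([np.ones_like(x), x, y, x * y])  # library: 1, x, y, xy
xi_true = np.array([[0.0, 0.0], [-2.0, 0.0], [0.0, 0.0], [0.0, 1.5]])
dxdt = theta @ xi_true

xi = stlsq(theta, dxdt)
print(xi)
```

Because the toy derivatives are noise-free, the recovered coefficients should match `xi_true` up to floating-point error; with real measurements the threshold would need tuning.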
