Katharina Dost

Quantifying the Impact of Modules and Their Interactions in the PSO-X Framework

Jan 07, 2026

Abstract: The PSO-X framework incorporates dozens of modules that have been proposed for solving single-objective continuous optimization problems using particle swarm optimization. While modular frameworks enable users to automatically generate and configure algorithms tailored to specific optimization problems, the complexity of this process increases with the number of modules in the framework and the degrees of freedom defined for their interaction. Understanding how modules affect the performance of algorithms for different problems is critical to making the process of finding effective implementations more efficient and identifying promising areas for further investigation. Despite their practical applications and scientific relevance, there is a lack of empirical studies investigating which modules matter most in modular optimization frameworks and how they interact. In this paper, we analyze the performance of 1424 particle swarm optimization algorithms instantiated from the PSO-X framework on the 25 functions in the CEC'05 benchmark suite with 10 and 30 dimensions. We use functional ANOVA to quantify the impact of modules and their combinations on performance in different problem classes. In practice, this allows us to identify which modules have greater influence on PSO-X performance depending on problem features such as multimodality, mathematical transformations and varying dimensionality. We then perform a cluster analysis to identify groups of problem classes that share similar module effect patterns. Our results show low variability in the importance of modules in all problem classes, suggesting that particle swarm optimization performance is driven by a few influential modules.
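To make the variance-decomposition idea behind functional ANOVA concrete, here is a minimal sketch for two categorical factors evaluated on a full grid. It is an illustration under stated assumptions, not the paper's pipeline: the factor names (`topology`, `inertia`), their levels, and the performance numbers are hypothetical, every combination is assumed to be weighted equally, and the performances are assumed not to be all identical.

```python
from statistics import mean

def fanova_two_factors(perf):
    """Split the variance of perf[(a, b)] into main effects and interaction.

    Assumes a full grid: every combination of levels of A and B is present
    and weighted equally. Returns the fraction of variance per component.
    """
    a_levels = sorted({a for a, _ in perf})
    b_levels = sorted({b for _, b in perf})
    overall = mean(perf.values())
    total_var = mean((v - overall) ** 2 for v in perf.values())
    # Main effect of a level: its average performance minus the overall mean.
    main_a = {a: mean(perf[(a, b)] for b in b_levels) - overall for a in a_levels}
    main_b = {b: mean(perf[(a, b)] for a in a_levels) - overall for b in b_levels}
    var_a = mean(e ** 2 for e in main_a.values())
    var_b = mean(e ** 2 for e in main_b.values())
    # Whatever the main effects do not explain is attributed to the interaction.
    var_ab = total_var - var_a - var_b
    return {"A": var_a / total_var, "B": var_b / total_var, "A x B": var_ab / total_var}

# Hypothetical results: (topology, inertia) -> error of the configured algorithm.
perf = {
    ("ring", "constant"): 1.0,
    ("ring", "adaptive"): 2.0,
    ("full", "constant"): 3.0,
    ("full", "adaptive"): 8.0,
}
importance = fanova_two_factors(perf)
```

In this toy grid the first factor explains the largest share of the variance, followed by the second factor and then their interaction; the three shares sum to one.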

Poison is Not Traceless: Fully-Agnostic Detection of Poisoning Attacks

Oct 24, 2023

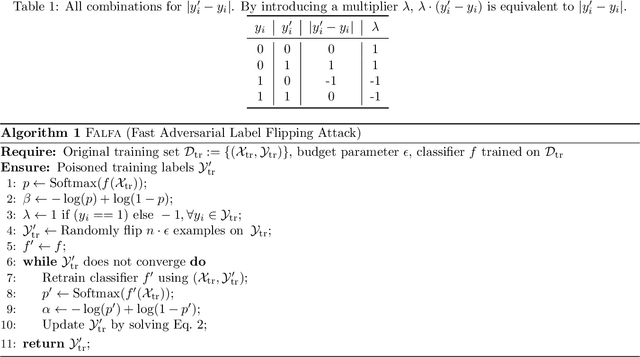

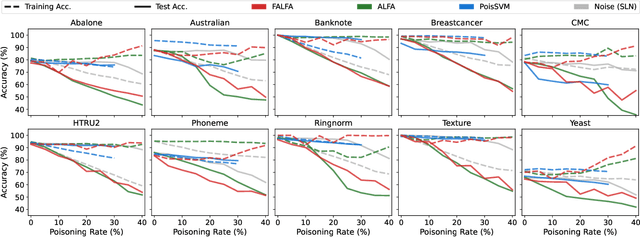

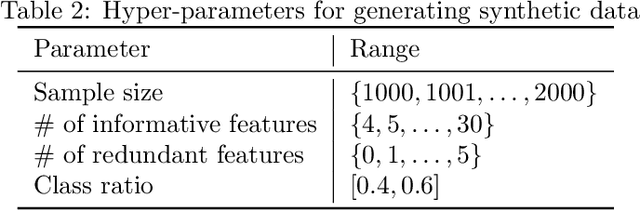

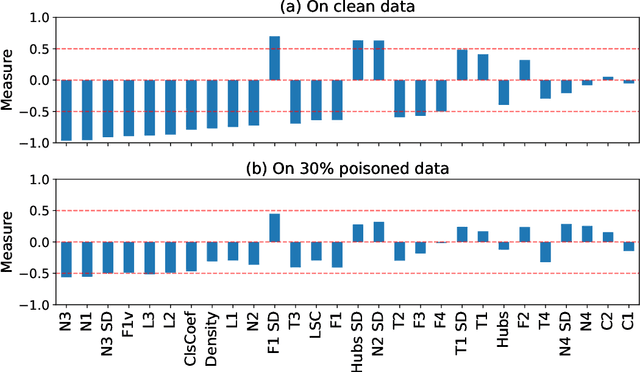

Abstract: The performance of machine learning models depends on the quality of the underlying data. Malicious actors can attack the model by poisoning the training data. Current detectors are tied to specific data types, models, or attacks, and therefore have limited applicability in real-world scenarios. This paper presents a novel fully-agnostic framework, DIVA (Detecting InVisible Attacks), that detects attacks by relying solely on an analysis of the potentially poisoned data set. DIVA is based on the idea that poisoning attacks can be detected by comparing the classifier's accuracy on poisoned and clean data. It pre-trains a meta-learner using Complexity Measures to estimate the otherwise unknown accuracy on a hypothetical clean dataset. The framework applies to generic poisoning attacks. For evaluation purposes, in this paper, we test DIVA on label-flipping attacks.

Memento: Facilitating Effortless, Efficient, and Reliable ML Experiments

Apr 17, 2023

Abstract: Running complex sets of machine learning experiments is challenging and time-consuming due to the lack of a unified framework. This leaves researchers forced to spend time implementing necessary features such as parallelization, caching, and checkpointing themselves instead of focussing on their project. To simplify the process, in this paper, we introduce Memento, a Python package that is designed to aid researchers and data scientists in the efficient management and execution of computationally intensive experiments. Memento has the capacity to streamline any experimental pipeline by providing a straightforward configuration matrix and the ability to concurrently run experiments across multiple threads. A demonstration of Memento is available at: https://wickerlab.org/publication/memento.
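The general idea of a configuration matrix run across threads can be sketched with the Python standard library alone. This is not Memento's API: the function names (`expand_matrix`, `run_all`, `run_experiment`) and the parameters in the matrix are hypothetical and serve only to illustrate the pattern.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def expand_matrix(matrix):
    """Expand {"param": [values, ...]} into one dict per combination."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[k] for k in keys))]

def run_experiment(config):
    # Placeholder experiment: a real one would train and evaluate a model.
    return config["learning_rate"] * config["epochs"]

def run_all(matrix, experiment, max_workers=4):
    """Run the experiment once per configuration, using a pool of threads."""
    configs = expand_matrix(matrix)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves the order of the input configurations.
        results = list(pool.map(experiment, configs))
    return list(zip(configs, results))

# Hypothetical matrix: two parameters with two values each, so four runs.
matrix = {"learning_rate": [0.1, 0.01], "epochs": [10, 20]}
results = run_all(matrix, run_experiment)
```

A dedicated package adds what this sketch leaves out, such as the caching and checkpointing mentioned in the abstract.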

Hitting the Target: Stopping Active Learning at the Cost-Based Optimum

Oct 07, 2021

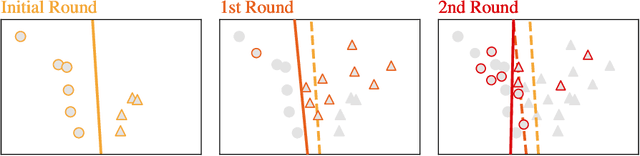

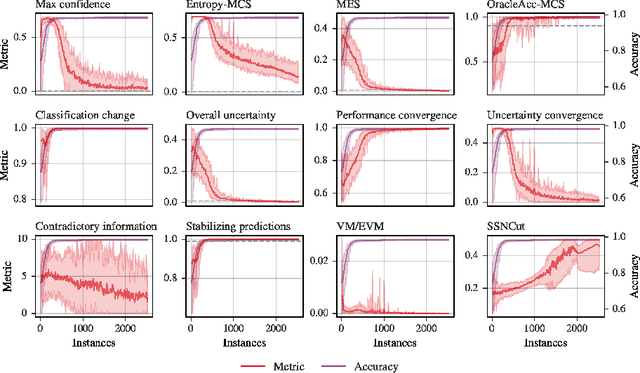

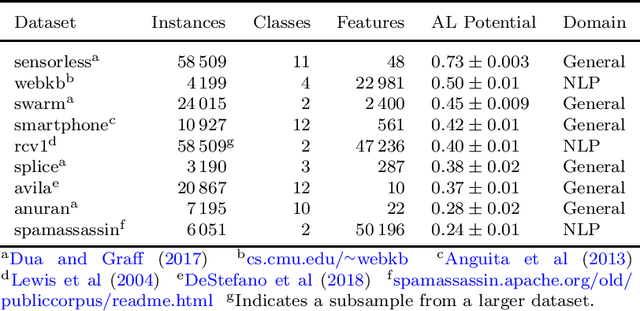

Abstract: Active learning allows machine learning models to be trained using fewer labels while retaining similar performance to traditional fully supervised learning. An active learner selects the most informative data points, requests their labels, and retrains itself. While this approach is promising, it leaves an open problem of how to determine when the model is "good enough" without the additional labels required for traditional evaluation. In the past, different stopping criteria have been proposed aiming to identify the optimal stopping point. However, optimality can only be expressed as a domain-dependent trade-off between accuracy and the number of labels, and no criterion is superior in all applications. This paper is the first to give actionable advice to practitioners on what stopping criteria they should use in a given real-world scenario. We contribute the first large-scale comparison of stopping criteria, using a cost measure to quantify the accuracy/label trade-off, public implementations of all stopping criteria we evaluate, and an open-source framework for evaluating stopping criteria. Our research enables practitioners to substantially reduce labelling costs by utilizing the stopping criterion which best suits their domain.
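The domain-dependent trade-off between accuracy and labels can be illustrated with a simple cost function. The formula below is an assumption made for illustration and is not necessarily the cost measure used in the paper: each label costs `label_cost`, each misclassified prediction costs `error_cost`, and the learning curve is hypothetical.

```python
def total_cost(n_labels, accuracy, label_cost, error_cost, n_predictions):
    """Assumed cost: labelling cost plus expected cost of misclassifications."""
    return n_labels * label_cost + (1.0 - accuracy) * n_predictions * error_cost

def optimal_stop(curve, label_cost, error_cost, n_predictions):
    """Return the (n_labels, accuracy) point on the curve with the lowest cost."""
    return min(
        curve,
        key=lambda p: total_cost(p[0], p[1], label_cost, error_cost, n_predictions),
    )

# Hypothetical learning curve: (labels requested so far, accuracy reached).
curve = [(10, 0.60), (50, 0.80), (100, 0.88), (200, 0.92), (400, 0.93)]

# Cheap labels: it pays to keep labelling for longer.
cheap_labels = optimal_stop(curve, label_cost=1.0, error_cost=5.0, n_predictions=1000)
# Expensive labels: the cost-based optimum moves to an earlier point.
costly_labels = optimal_stop(curve, label_cost=10.0, error_cost=5.0, n_predictions=1000)
```

With the same learning curve, the cheapest stopping point shifts from 200 labels to 50 labels once labels become ten times more expensive, which is why no single stopping point is optimal across domains.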

Intriguing Usage of Applicability Domain: Lessons from Cheminformatics Applied to Adversarial Learning

May 02, 2021

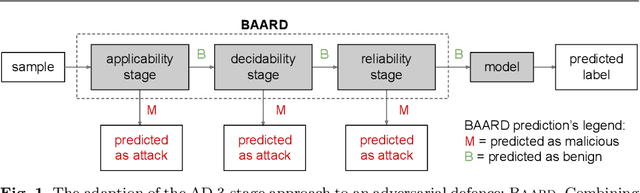

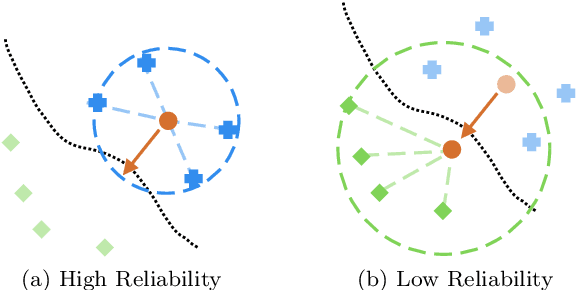

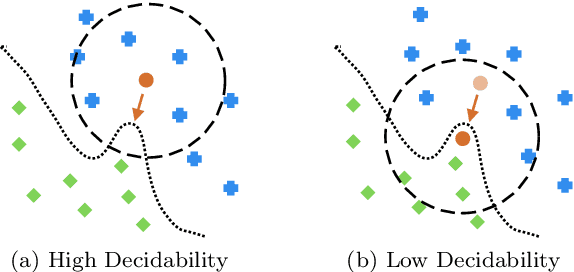

Abstract: Defending machine learning models from adversarial attacks is still a challenge: none of the robust models is utterly immune to adversarial examples to date. Different defences have been proposed; however, most of them are tailored to particular ML models and adversarial attacks, therefore their effectiveness and applicability are strongly limited. A similar problem plagues cheminformatics: Quantitative Structure-Activity Relationship (QSAR) models struggle to predict biological activity for the entire chemical space because they are trained on a very limited number of compounds with known effects. This problem is relieved with a technique called Applicability Domain (AD), which rejects the unsuitable compounds for the model. Adversarial examples are intentionally crafted inputs that exploit the blind spots which the model has not learned to classify, and adversarial defences try to make the classifier more robust by covering these blind spots. There is an apparent similarity between AD and adversarial defences. Inspired by the concept of AD, we propose a multi-stage data-driven defence that tests for: Applicability: abnormal values, namely inputs not compliant with the intended use case of the model; Reliability: samples far from the training data; and Decidability: samples whose predictions contradict the predictions of their neighbours. It can be applied to any classification model and is not limited to specific types of adversarial attacks. With an empirical analysis, this paper demonstrates how Applicability Domain can effectively reduce the vulnerability of ML models to adversarial examples.
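The three stages named in the abstract can be sketched as a chain of checks on a single input. The concrete rules here are assumptions chosen for illustration, not the paper's implementation: applicability is a per-feature range check, reliability is a threshold on the mean distance to the `k` nearest training samples, and decidability compares the model's prediction with the majority label of those neighbours. All names, thresholds, and data are hypothetical.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def check_sample(x, predicted, train_X, train_y, bounds, max_dist, k=3):
    """Return the name of the first stage that rejects x, or 'accepted'."""
    # Stage 1, applicability: every feature must lie inside its allowed range.
    if any(not (lo <= v <= hi) for v, (lo, hi) in zip(x, bounds)):
        return "applicability"
    # Stage 2, reliability: x must be close to its k nearest training samples.
    neighbours = sorted(zip(train_X, train_y), key=lambda p: euclidean(x, p[0]))[:k]
    mean_dist = sum(euclidean(x, point) for point, _ in neighbours) / len(neighbours)
    if mean_dist > max_dist:
        return "reliability"
    # Stage 3, decidability: the prediction must agree with the neighbours' majority.
    labels = [label for _, label in neighbours]
    majority = max(set(labels), key=labels.count)
    if majority != predicted:
        return "decidability"
    return "accepted"

# Hypothetical training data: two well-separated classes in the unit square.
train_X = [(0.1, 0.1), (0.2, 0.1), (0.1, 0.2), (0.9, 0.9), (0.8, 0.9), (0.9, 0.8)]
train_y = [0, 0, 0, 1, 1, 1]
bounds = [(0.0, 1.0), (0.0, 1.0)]

out_of_range = check_sample((1.5, 0.1), 0, train_X, train_y, bounds, max_dist=0.3)
far_from_data = check_sample((0.5, 0.5), 0, train_X, train_y, bounds, max_dist=0.3)
contradicted = check_sample((0.15, 0.15), 1, train_X, train_y, bounds, max_dist=0.3)
accepted = check_sample((0.15, 0.15), 0, train_X, train_y, bounds, max_dist=0.3)
```

Because the checks only look at the input, the training data, and the model's prediction, a filter of this shape does not depend on the classifier's internals.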
