Karsten Kortuem

Augenklinik der Universität, Klinikum der Universität München, Germany

Noise as Domain Shift: Denoising Medical Images by Unpaired Image Translation

Oct 07, 2019

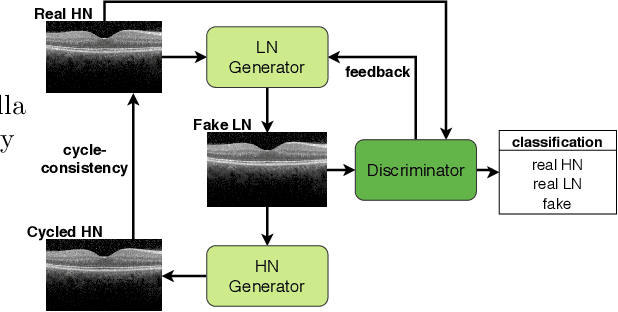

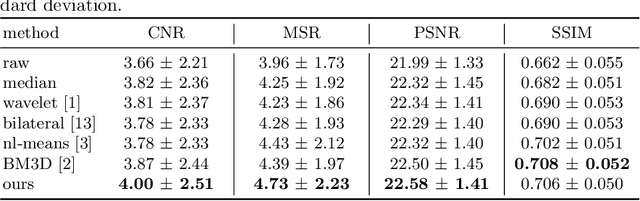

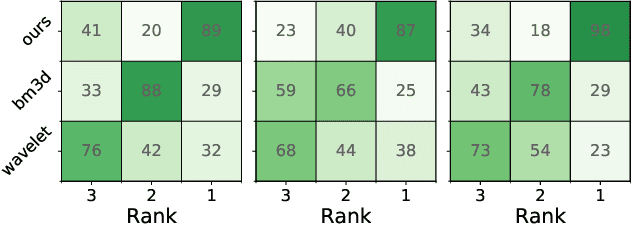

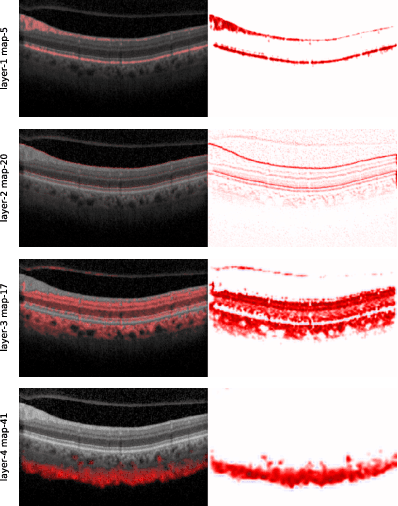

Abstract: We cast the problem of image denoising as a domain translation problem between high- and low-noise domains. By modifying the cycleGAN model, we are able to learn a mapping between these domains on unpaired retinal optical coherence tomography images. In quantitative measurements and a qualitative evaluation by ophthalmologists, we show that this approach outperforms other established methods. The results indicate that the network differentiates subtle changes in the level of noise in the image. Further investigation of the model's feature maps reveals that it has learned to distinguish retinal layers and other distinct regions of the images.
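
To make the unpaired-translation idea concrete, the following is a minimal sketch of the standard CycleGAN cycle-consistency term, assuming two generators: `g` maps high-noise images to low-noise images, and `f` maps back. The abstract does not say how the authors modified the model, so this shows only the generic term. The toy generators below are hypothetical stand-ins, not trained networks.

```python
import numpy as np

def cycle_consistency_loss(x_noisy, y_clean, g, f):
    """L1 cycle loss: f(g(x)) should recover x, and g(f(y)) should recover y."""
    forward = np.mean(np.abs(f(g(x_noisy)) - x_noisy))
    backward = np.mean(np.abs(g(f(y_clean)) - y_clean))
    return forward + backward

# Hypothetical stand-ins for the two generators (assumptions for illustration).
def toy_denoise(img):
    # g: high-noise domain -> low-noise domain
    return img * 0.5

def toy_add_noise(img):
    # f: low-noise domain -> high-noise domain
    return img * 2.0

rng = np.random.default_rng(0)
x = rng.random((4, 8, 8))  # unpaired batch from the high-noise domain
y = rng.random((4, 8, 8))  # unpaired batch from the low-noise domain

# The toy maps are exact inverses, so the cycle loss vanishes.
loss = cycle_consistency_loss(x, y, toy_denoise, toy_add_noise)
```

Because the two batches are never compared with each other directly, no pixel-aligned noisy/clean pairs are needed. In a full CycleGAN this term is combined with adversarial losses for each domain.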

InceptionGCN: Receptive Field Aware Graph Convolutional Network for Disease Prediction

Mar 11, 2019

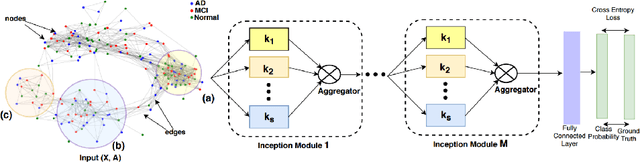

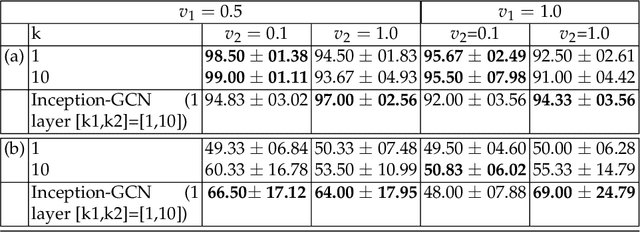

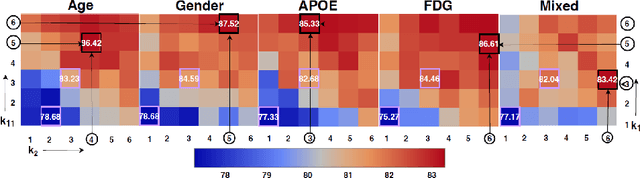

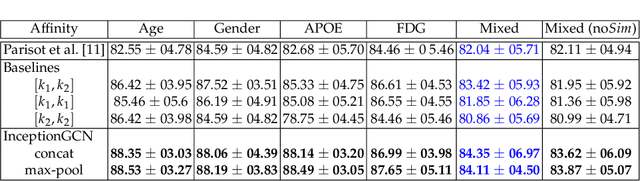

Abstract: Geometric deep learning provides a principled and versatile manner for the integration of imaging and non-imaging modalities in the medical domain. Graph Convolutional Networks (GCNs) in particular have been explored on a wide variety of problems such as disease prediction, segmentation, and matrix completion by leveraging large, multimodal datasets. In this paper, we introduce a new spectral domain architecture for deep learning on graphs for disease prediction. The novelty lies in defining geometric 'inception modules' which are capable of capturing intra- and inter-graph structural heterogeneity during convolutions. We design filters with different kernel sizes to build our architecture. We show our disease prediction results on two publicly available datasets. Further, we provide insights on the behaviour of regular GCNs and our proposed model under varying input scenarios on simulated data.
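
As a rough illustration of spectral filters with different kernel sizes, here is a minimal sketch that assumes Chebyshev polynomial filters, where the "kernel size" is the number of polynomial terms, and assumes the branches are combined by concatenation. The abstract does not specify either choice, and the function names, shapes, and random weights are hypothetical.

```python
import numpy as np

def scaled_laplacian(adj):
    """Normalized Laplacian rescaled as 2L/lambda_max - I (assumes at least one edge)."""
    deg = adj.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg, dtype=float)
    mask = deg > 0
    d_inv_sqrt[mask] = deg[mask] ** -0.5
    n = adj.shape[0]
    lap = np.eye(n) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    lambda_max = np.linalg.eigvalsh(lap).max()
    return 2.0 * lap / lambda_max - np.eye(n)

def cheb_conv(x, lap_tilde, weights):
    """Chebyshev filter: sum_k T_k(L~) x W_k, one weight matrix per term."""
    t_prev, t_curr = x, lap_tilde @ x
    out = t_prev @ weights[0]
    for k in range(1, len(weights)):
        out = out + t_curr @ weights[k]
        # Recurrence T_{k+1} = 2 L~ T_k - T_{k-1}
        t_prev, t_curr = t_curr, 2.0 * lap_tilde @ t_curr - t_prev
    return out

def inception_module(x, adj, weight_sets):
    """Run one branch per kernel size, apply ReLU, concatenate the features."""
    lap_tilde = scaled_laplacian(adj)
    branches = [np.maximum(cheb_conv(x, lap_tilde, w), 0.0) for w in weight_sets]
    return np.concatenate(branches, axis=1)

# Toy example: a 4-node path graph with 3 input features per node.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3))
weight_sets = [rng.standard_normal((2, 3, 5)),  # small receptive field
               rng.standard_normal((4, 3, 5))]  # larger receptive field
out = inception_module(x, adj, weight_sets)     # shape (4, 10)
```

A filter with K terms mixes information from nodes up to K-1 hops away, so branches of different sizes see neighbourhoods of different extent on the same graph.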

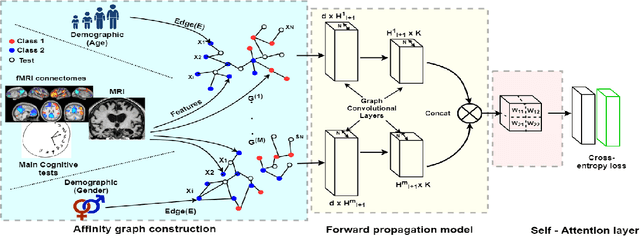

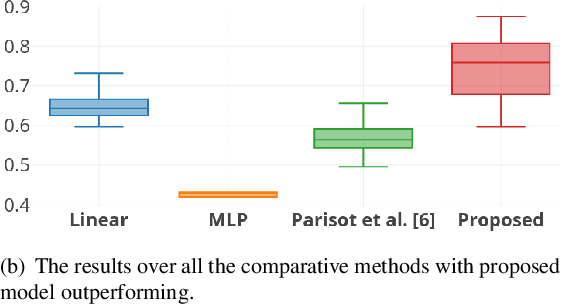

Self-Attention Equipped Graph Convolutions for Disease Prediction

Dec 24, 2018

Abstract: Multi-modal data comprising imaging (MRI, fMRI, PET, etc.) and non-imaging (clinical tests, demographics, etc.) data can be collected together and used for disease prediction. Such diverse data gives complementary information about the patient's condition to make an informed diagnosis. A model capable of leveraging the individuality of each element of the multi-modal data is required for better disease prediction. We propose a graph-convolution-based deep model which takes into account the distinctiveness of each element of the multi-modal data. We incorporate a novel self-attention layer, which weights every element of the demographic data by exploring its relation to the underlying disease. We demonstrate the superiority of our developed technique in terms of computational speed and performance when compared to state-of-the-art methods. Our method outperforms other methods by a significant margin.

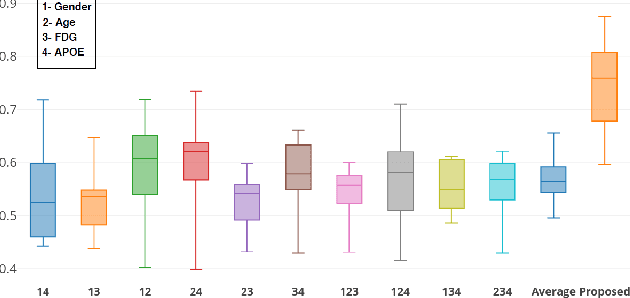

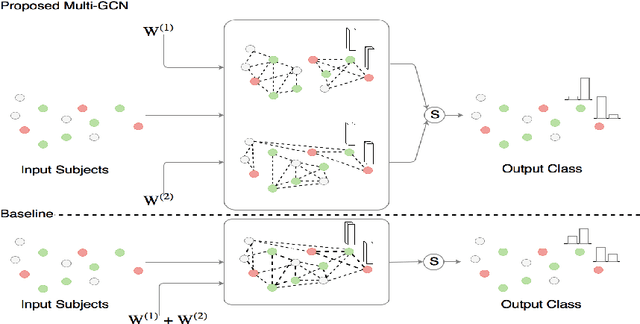

Multi Layered-Parallel Graph Convolutional Network (ML-PGCN) for Disease Prediction

Apr 28, 2018

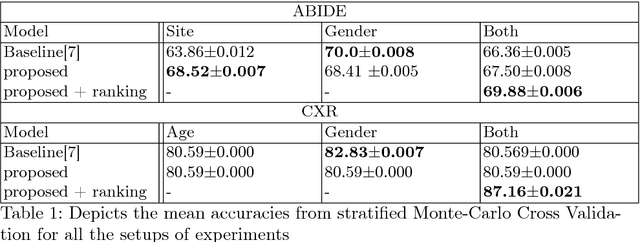

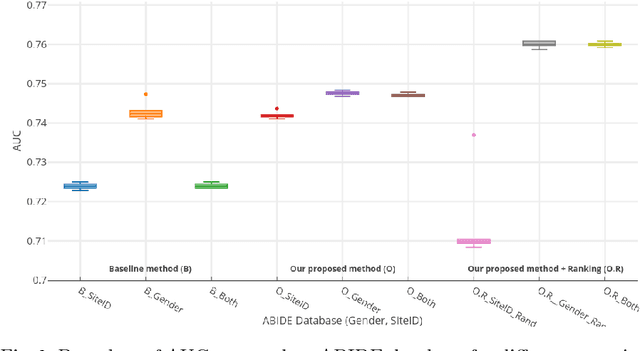

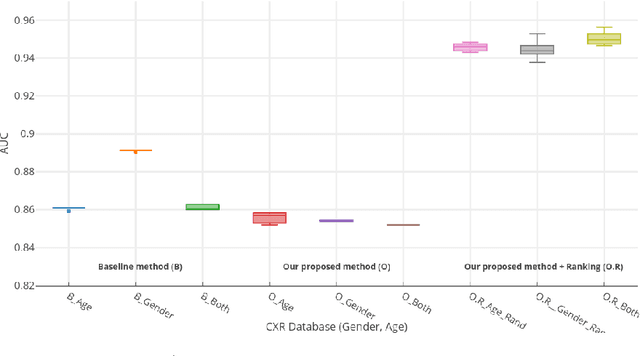

Abstract: Structural data from Electronic Health Records can serve as complementary information to imaging data for disease prediction. We incorporate a novel weighting layer into Graph Convolutional Networks, which weights every element of the structural data by exploring its relation to the underlying disease. We demonstrate the superiority of our developed technique in terms of computational speed and obtain encouraging results: when applied to two publicly available datasets, ABIDE and Chest X-ray, our method outperforms state-of-the-art methods in relative terms by 5.31 % and 8.15 % for prediction accuracy and by 4.96 % and 10.36 % for the area under the ROC curve, respectively. Additionally, the model is lightweight, fast and easily trainable.
