Karl Kurzer

Cooperative Trajectory Planning in Uncertain Environments with Monte Carlo Tree Search and Risk Metrics

Mar 09, 2022

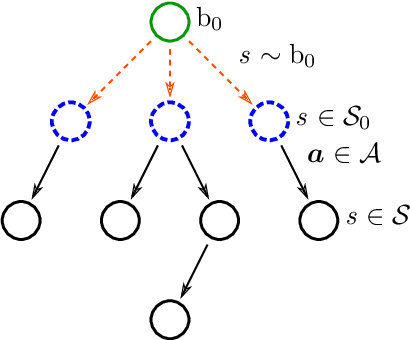

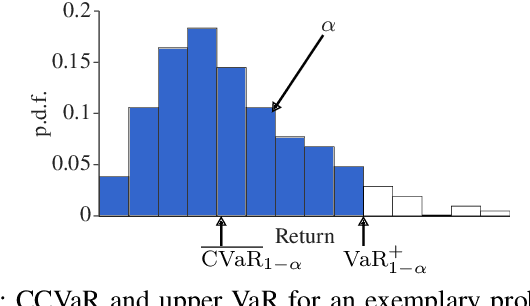

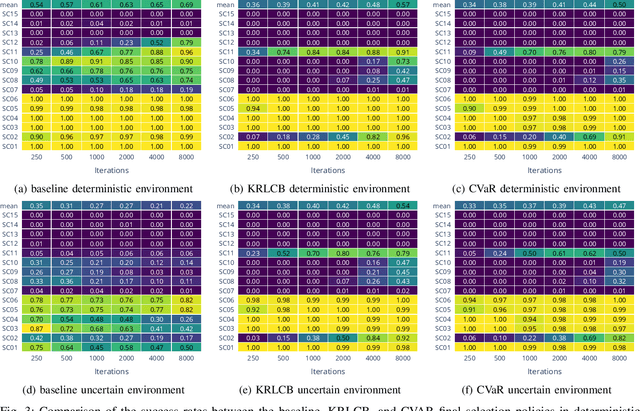

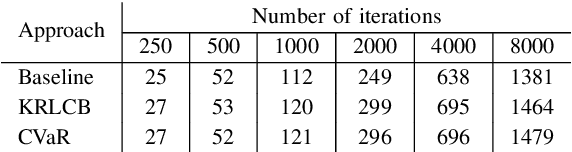

Abstract: Automated vehicles require the ability to cooperate with humans for a smooth integration into today's traffic. While the concept of cooperation is well known, the development of a robust and efficient cooperative trajectory planning method is still a challenge. One aspect of this challenge is the uncertainty surrounding the state of the environment due to limited sensor accuracy. This uncertainty can be represented by a Partially Observable Markov Decision Process. Our work addresses this problem by extending an existing cooperative trajectory planning approach based on Monte Carlo Tree Search for continuous action spaces. It does so by explicitly modeling uncertainties in the form of a root belief state, from which start states for trees are sampled. After the trees have been constructed with Monte Carlo Tree Search, their results are aggregated into return distributions using kernel regression. For the final selection, we apply two risk metrics, namely a Lower Confidence Bound and a Conditional Value at Risk. We demonstrate that integrating risk metrics into the final selection policy consistently outperforms a baseline in uncertain environments, generating considerably safer trajectories.
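The two risk metrics named in the abstract can be illustrated with a minimal sketch. It assumes each candidate action already comes with a list of sampled returns (the kernel regression step is omitted), and the function names, the factor `k`, the tail fraction `alpha`, and the example data are all hypothetical choices, not the paper's definitions or parameters.

```python
import math
from statistics import mean, pstdev


def lower_confidence_bound(returns, k=1.0):
    """Mean return minus k standard deviations (k is an assumed parameter)."""
    return mean(returns) - k * pstdev(returns)


def conditional_value_at_risk(returns, alpha=0.25):
    """Mean of the worst alpha-fraction of the sampled returns."""
    ordered = sorted(returns)
    n_tail = max(1, math.ceil(alpha * len(ordered)))
    return mean(ordered[:n_tail])


def select_action(returns_by_action, metric):
    """Pick the action whose return samples score highest under the metric."""
    return max(returns_by_action, key=lambda a: metric(returns_by_action[a]))


# Hypothetical return samples: one action is better on average but has a bad tail.
candidates = {
    "aggressive": [12.0, 12.0, 12.0, -12.0],
    "cautious": [4.0, 5.0, 5.0, 6.0],
}

print(select_action(candidates, mean))                       # risk-neutral baseline
print(select_action(candidates, lower_confidence_bound))     # risk-aware
print(select_action(candidates, conditional_value_at_risk))  # risk-aware
```

In this toy data the mean prefers the action with the higher average return, while both risk metrics penalize its bad tail outcome and prefer the other action.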

Learning Reward Models for Cooperative Trajectory Planning with Inverse Reinforcement Learning and Monte Carlo Tree Search

Feb 16, 2022

Abstract: Cooperative trajectory planning methods for automated vehicles are capable of solving traffic scenarios that require a high degree of cooperation between traffic participants. In order for cooperative systems to integrate into human-centered traffic, it is important that the automated systems behave in a human-like manner, so that humans can anticipate the system's decisions. While Reinforcement Learning has made remarkable progress in solving the decision-making part, it is non-trivial to parameterize a reward model that yields predictable actions. This work employs feature-based Maximum Entropy Inverse Reinforcement Learning in combination with Monte Carlo Tree Search to learn reward models that maximize the likelihood of recorded multi-agent cooperative expert trajectories. The evaluation demonstrates that the approach is capable of recovering a reasonable reward model that mimics the expert and performs similarly to a manually tuned baseline reward model.
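As a rough illustration of feature-based Maximum Entropy Inverse Reinforcement Learning, the sketch below assumes a linear reward over trajectory features and a fixed set of sampled trajectories standing in for the ones a planner such as Monte Carlo Tree Search would produce. The feature vectors, learning rate, and step count are hypothetical, and this is not the paper's implementation.

```python
import math


def reward(theta, features):
    """Linear reward: dot product of weights and trajectory features."""
    return sum(t * f for t, f in zip(theta, features))


def maxent_gradient(theta, expert_features, sampled_features):
    """Expert feature expectation minus the expectation under the
    Boltzmann distribution that theta induces over the sampled trajectories."""
    rewards = [reward(theta, f) for f in sampled_features]
    shift = max(rewards)  # subtract the maximum for numerical stability
    weights = [math.exp(r - shift) for r in rewards]
    z = sum(weights)
    expected = [
        sum(w * f[i] for w, f in zip(weights, sampled_features)) / z
        for i in range(len(theta))
    ]
    return [e - x for e, x in zip(expert_features, expected)]


def fit(expert_features, sampled_features, lr=0.1, steps=200):
    """Plain gradient ascent on the trajectory log-likelihood."""
    theta = [0.0] * len(expert_features)
    for _ in range(steps):
        grad = maxent_gradient(theta, expert_features, sampled_features)
        theta = [t + lr * g for t, g in zip(theta, grad)]
    return theta


# Hypothetical data: two sampled trajectories with one-hot features,
# and an expert whose average features favor the first one.
sampled = [[1.0, 0.0], [0.0, 1.0]]
expert = [0.8, 0.2]
theta = fit(expert, sampled)
print(theta)
```

The learned weights rank the first feature above the second, so the induced trajectory distribution matches the expert's feature expectations.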

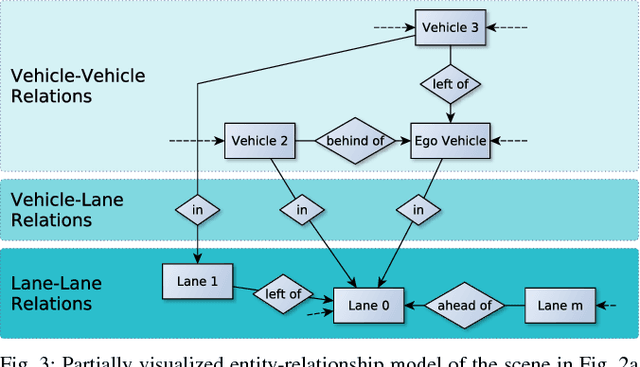

Generalizing Decision Making for Automated Driving with an Invariant Environment Representation using Deep Reinforcement Learning

Feb 12, 2021

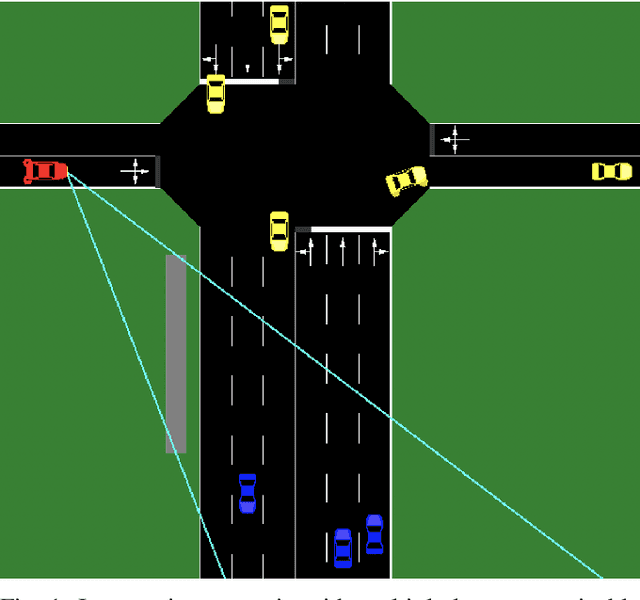

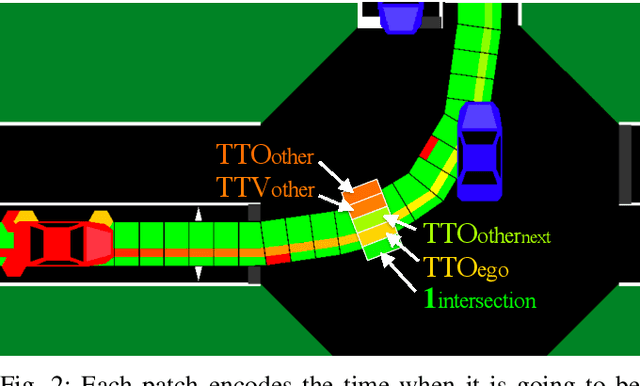

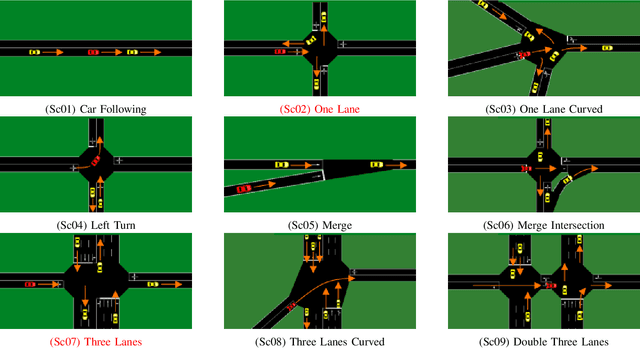

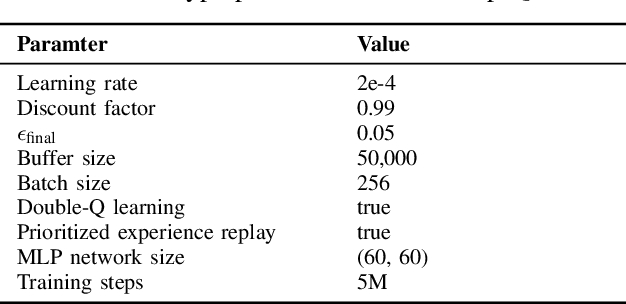

Abstract: Data-driven approaches for decision making applied to automated driving require appropriate generalization strategies to ensure applicability to the world's variability. Current approaches either do not generalize well beyond the training data or are not capable of considering a variable number of traffic participants. Therefore, we propose an invariant environment representation from the perspective of the ego vehicle. The representation encodes all necessary information for safe decision making. To assess the generalization capabilities of the novel environment representation, we train our agents on a small subset of scenarios and evaluate on the entire set. Here we show that the agents are capable of generalizing successfully to unseen scenarios, due to the abstraction. In addition, we present a simple occlusion model that enables our agents to navigate intersections with occlusions without a significant change in performance.

Parallelization of Monte Carlo Tree Search in Continuous Domains

Mar 30, 2020

Abstract: Monte Carlo Tree Search (MCTS) has proven to be capable of solving challenging tasks in domains such as Go, chess and Atari. Previous research has developed parallel versions of MCTS, exploiting today's multiprocessing architectures. These studies focused on versions of MCTS for the discrete case. Our work builds upon existing parallelization strategies and extends them to continuous domains. In particular, leaf parallelization and root parallelization are studied and two final selection strategies that are required to handle continuous states in root parallelization are proposed. The evaluation of the resulting parallelized continuous MCTS is conducted using a challenging cooperative multi-agent system trajectory planning task in the domain of automated vehicles.
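In root parallelization with continuous actions, independently built trees rarely propose exactly the same action, so visit counts cannot simply be summed per action. The sketch below shows one plausible way to handle this, a Gaussian-kernel similarity vote over scalar root actions. It is an assumption for illustration and not necessarily either of the two strategies proposed in the paper; the function names, bandwidth, and data are hypothetical.

```python
import math


def gaussian_kernel(a, b, bandwidth):
    """Similarity between two scalar actions."""
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def select_by_max_visits(candidates):
    """Baseline: take the single most-visited root action across all trees."""
    return max(candidates, key=lambda c: c[1])[0]


def select_by_similarity(candidates, bandwidth=0.5):
    """Score each action by the visits of all actions, weighted by similarity,
    so that nearby actions from different trees support each other."""
    def score(action):
        return sum(
            visits * gaussian_kernel(action, other, bandwidth)
            for other, visits in candidates
        )
    return max((action for action, _ in candidates), key=score)


# Hypothetical (action, visit_count) pairs, one best root action per tree.
root_actions = [(1.0, 50), (1.1, 30), (3.0, 60)]

print(select_by_max_visits(root_actions))
print(select_by_similarity(root_actions))
```

With these values the most-visited single action is an outlier, while the similarity vote favors the cluster of two nearby actions.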

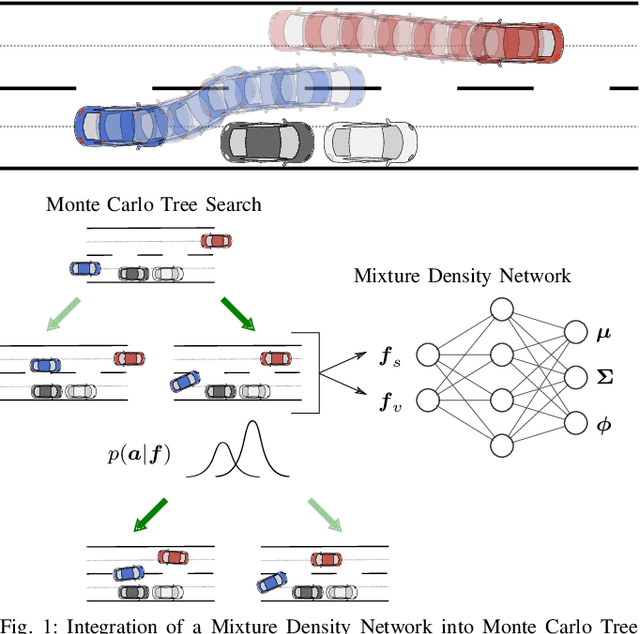

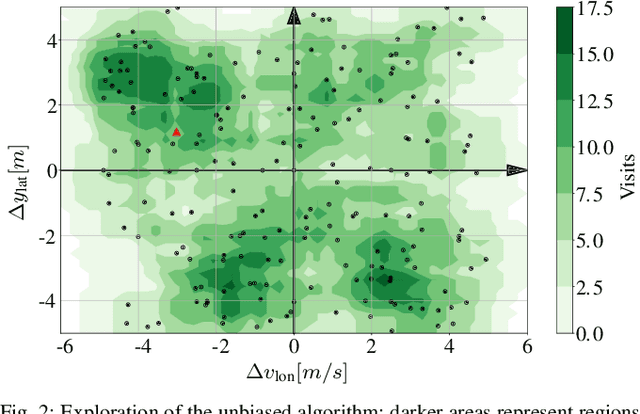

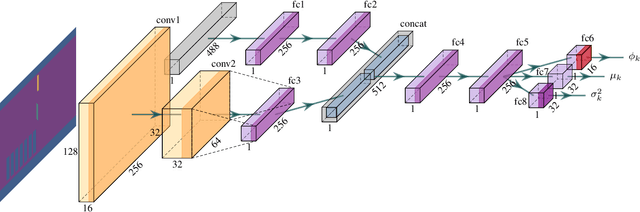

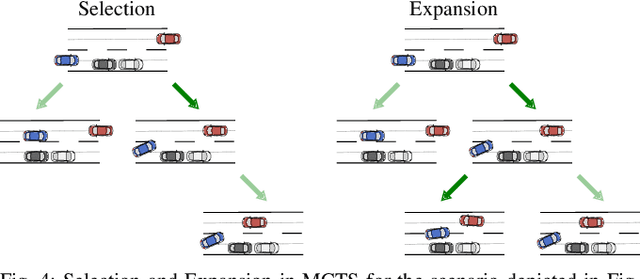

Accelerating Cooperative Planning for Automated Vehicles with Learned Heuristics and Monte Carlo Tree Search

Feb 02, 2020

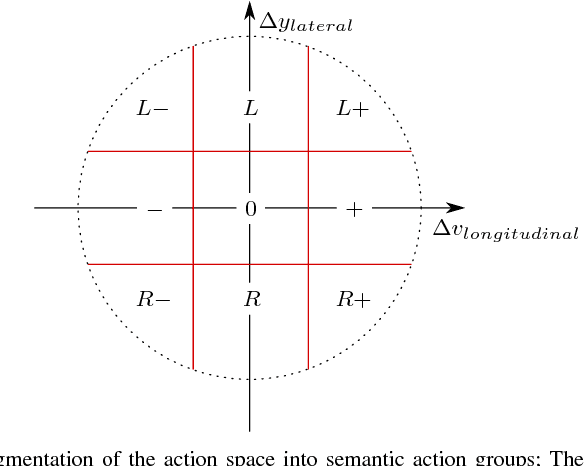

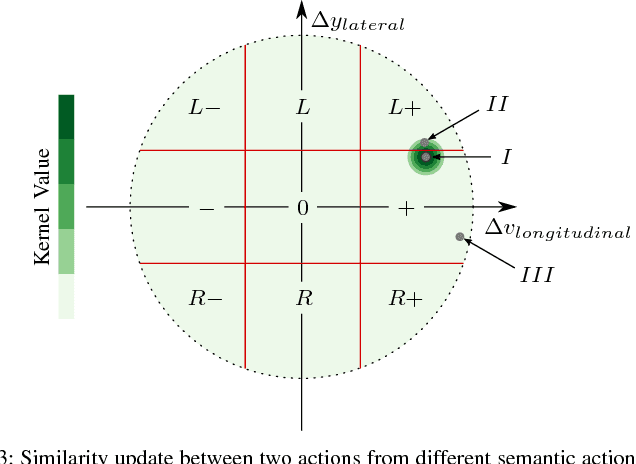

Abstract: Efficient driving in urban traffic scenarios requires foresight. The observation of other traffic participants and the inference of their possible next actions depending on one's own action is considered cooperative prediction and planning. Humans are well equipped with the capability to predict the actions of multiple interacting traffic participants and plan accordingly, without the need to directly communicate with others. Prior work has shown that it is possible to achieve effective cooperative planning without the need for explicit communication. However, the search space for cooperative plans is so large that most of the computational budget is spent on exploring unpromising regions of the search space that are far away from the solution. To accelerate the planning process, we combined learned heuristics with a cooperative planning method in order to guide the search towards regions with promising actions, yielding better results at lower computational costs.

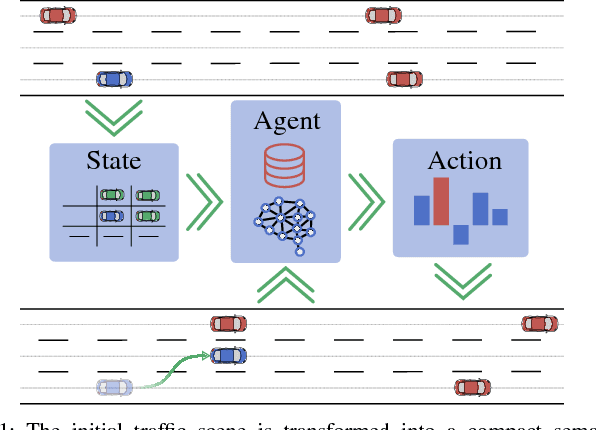

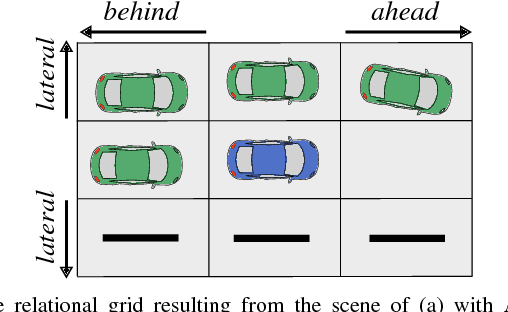

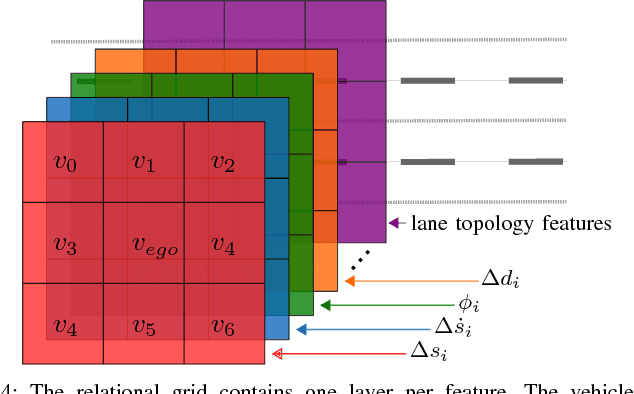

Adaptive Behavior Generation for Autonomous Driving using Deep Reinforcement Learning with Compact Semantic States

Sep 10, 2018

Abstract: Making the right decision in traffic is a challenging task that is highly dependent on individual preferences as well as the surrounding environment. Therefore, it is hard to model solely based on expert knowledge. In this work we use Deep Reinforcement Learning to learn maneuver decisions based on a compact semantic state representation. This ensures a consistent model of the environment across scenarios as well as a behavior adaptation function, enabling on-line changes of desired behaviors without re-training. The input for the neural network is a simulated object list similar to that of Radar or Lidar sensors, superimposed by a relational semantic scene description. The state as well as the reward are extended by a behavior adaptation function and a parameterization respectively. With little expert knowledge and a set of mid-level actions, it can be seen that the agent is capable of adhering to traffic rules and learns to drive safely in a variety of situations.

Decentralized Cooperative Planning for Automated Vehicles with Continuous Monte Carlo Tree Search

Sep 10, 2018

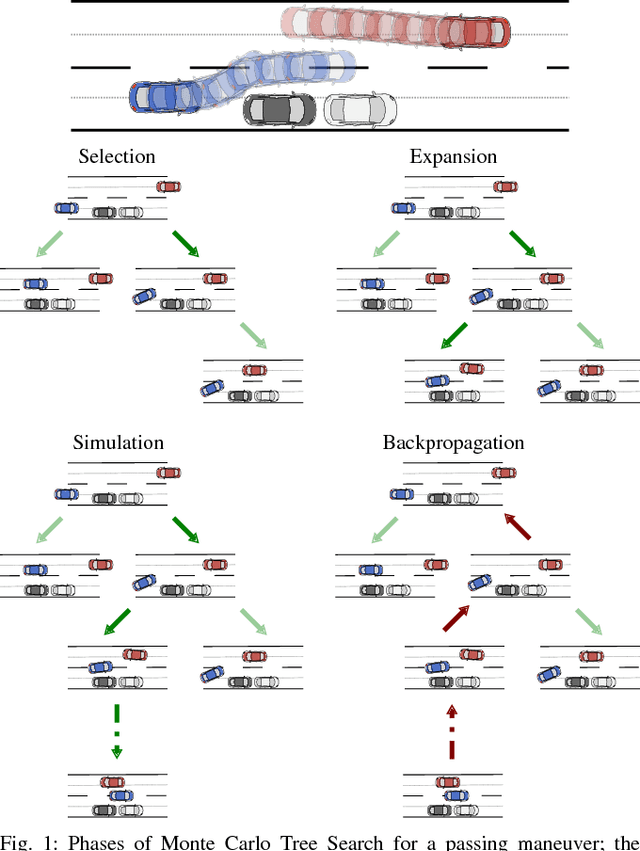

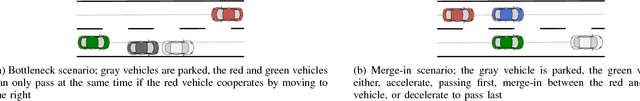

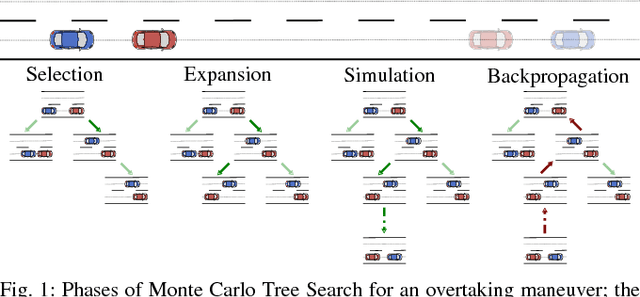

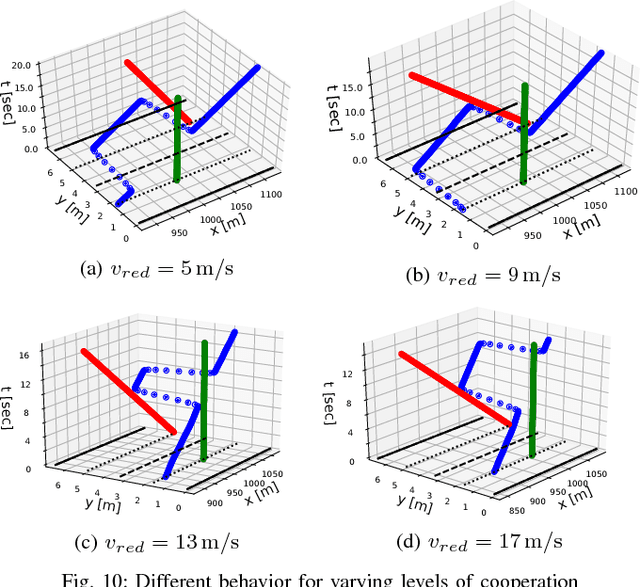

Abstract: Urban traffic scenarios often require a high degree of cooperation between traffic participants to ensure safety and efficiency. Observing the behavior of others, humans infer whether or not others are cooperating. This work aims to extend the capabilities of automated vehicles, enabling them to cooperate implicitly in heterogeneous environments. Continuous actions allow for arbitrary trajectories and hence are applicable to a much wider class of problems than existing cooperative approaches with discrete action spaces. Based on cooperative modeling of other agents, Monte Carlo Tree Search (MCTS) in conjunction with Decoupled-UCT evaluates the action-values of each agent in a cooperative and decentralized way, respecting the interdependence of actions among traffic participants. The extension to continuous action spaces is addressed by incorporating novel MCTS-specific enhancements for efficient search space exploration. The proposed algorithm is evaluated under different scenarios, showing that the algorithm is able to achieve effective cooperative planning and generate solutions that egocentric planning fails to identify.
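The selection step of Decoupled-UCT can be sketched as follows: each agent keeps its own per-action statistics at a node and picks its action with UCB1 independently, and the chosen actions together form the joint action. The sketch uses a small discrete action set for readability and leaves out the continuous-action enhancements the abstract refers to. The agent names, action names, exploration constant, and statistics are hypothetical.

```python
import math


def ucb1(value_sum, visits, parent_visits, c=math.sqrt(2)):
    """UCB1 score; unvisited actions are tried first."""
    if visits == 0:
        return math.inf
    return value_sum / visits + c * math.sqrt(math.log(parent_visits) / visits)


def decoupled_uct_select(agent_stats, parent_visits):
    """agent_stats maps agent -> action -> (value_sum, visits).
    Each agent maximizes UCB1 over its own actions only."""
    joint_action = {}
    for agent, stats in agent_stats.items():
        joint_action[agent] = max(
            stats, key=lambda a: ucb1(stats[a][0], stats[a][1], parent_visits)
        )
    return joint_action


# Hypothetical node statistics for two agents.
node_stats = {
    "ego": {"accelerate": (8.0, 10), "brake": (3.0, 10)},
    "other": {"keep_lane": (5.0, 10), "yield": (0.0, 0)},
}

print(decoupled_uct_select(node_stats, parent_visits=20))
```

Because every agent only tracks statistics for its own actions, the table size grows with the sum rather than the product of the agents' action counts.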

Decentralized Cooperative Planning for Automated Vehicles with Hierarchical Monte Carlo Tree Search

Jul 25, 2018

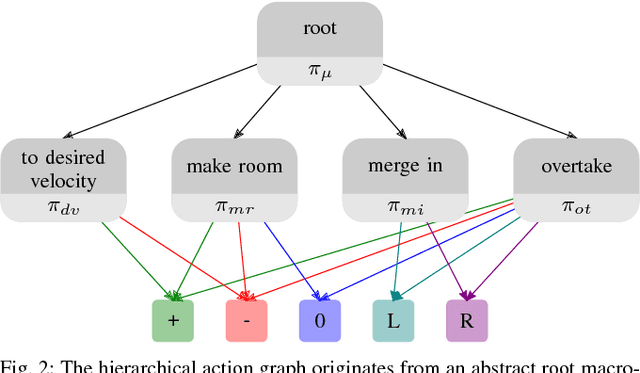

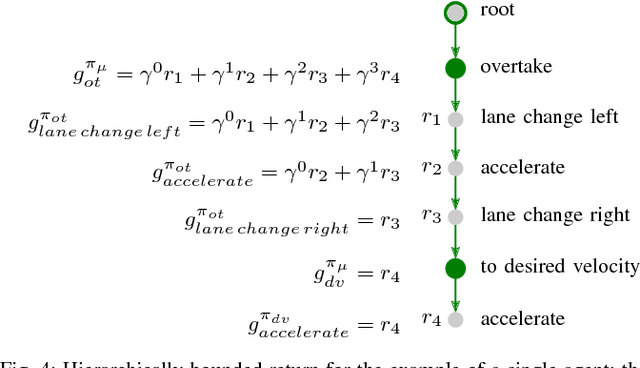

Abstract: Today's automated vehicles lack the ability to cooperate implicitly with others. This work presents a Monte Carlo Tree Search (MCTS) based approach for decentralized cooperative planning using macro-actions for automated vehicles in heterogeneous environments. Based on cooperative modeling of other agents and Decoupled-UCT (a variant of MCTS), the algorithm evaluates the state-action-values of each agent in a cooperative and decentralized manner, explicitly modeling the interdependence of actions between traffic participants. Macro-actions allow for temporal extension over multiple time steps and increase the effective search depth, requiring fewer iterations to plan over longer horizons. Without predefined policies for macro-actions, the algorithm simultaneously learns policies over and within macro-actions. The proposed method is evaluated under several conflict scenarios, showing that the algorithm can achieve effective cooperative planning with learned macro-actions in heterogeneous environments.
