Cooperative Trajectory Planning in Uncertain Environments with Monte Carlo Tree Search and Risk Metrics

Paper and Code

Mar 09, 2022

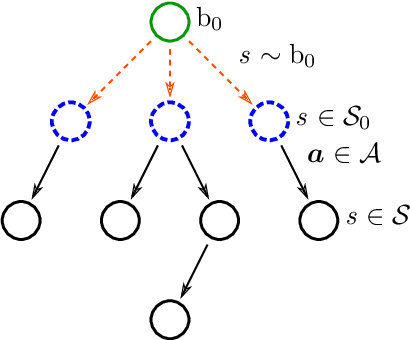

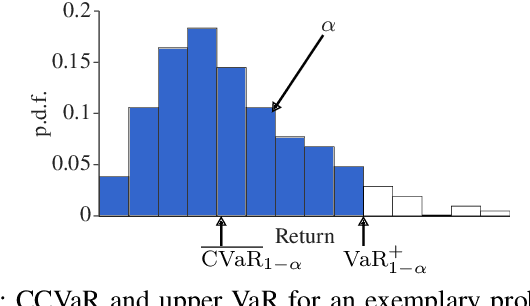

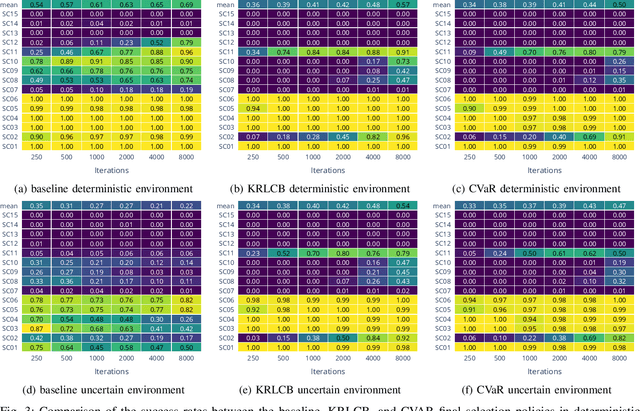

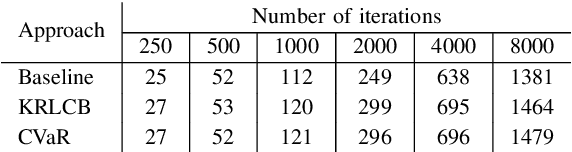

Automated vehicles require the ability to cooperate with humans for a smooth integration into today's traffic. While the concept of cooperation is well known, the development of a robust and efficient cooperative trajectory planning method is still a challenge. One aspect of this challenge is the uncertainty surrounding the state of the environment due to limited sensor accuracy. This uncertainty can be represented by a Partially Observable Markov Decision Process. Our work addresses this problem by extending an existing cooperative trajectory planning approach based on Monte Carlo Tree Search for continuous action spaces. It does so by explicitly modeling uncertainties in the form of a root belief state, from which start states for trees are sampled. After the trees have been constructed with Monte Carlo Tree Search, their results are aggregated into return distributions using kernel regression. For the final selection, we apply two risk metrics, namely a Lower Confidence Bound and a Conditional Value at Risk. It can be demonstrated that the integration of risk metrics in the final selection policy consistently outperforms a baseline in uncertain environments, generating considerably safer trajectories.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge