Kap Luk Chan

Joint Learning of Siamese CNNs and Temporally Constrained Metrics for Tracklet Association

Sep 25, 2016

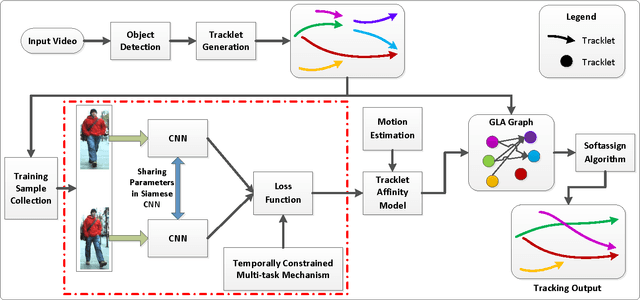

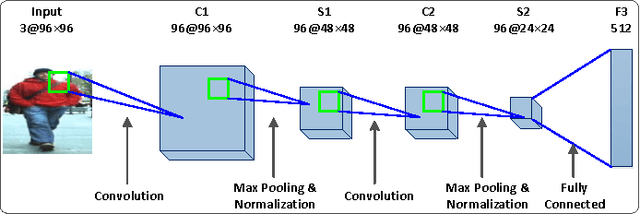

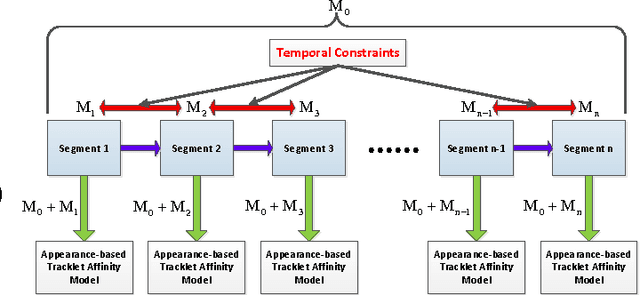

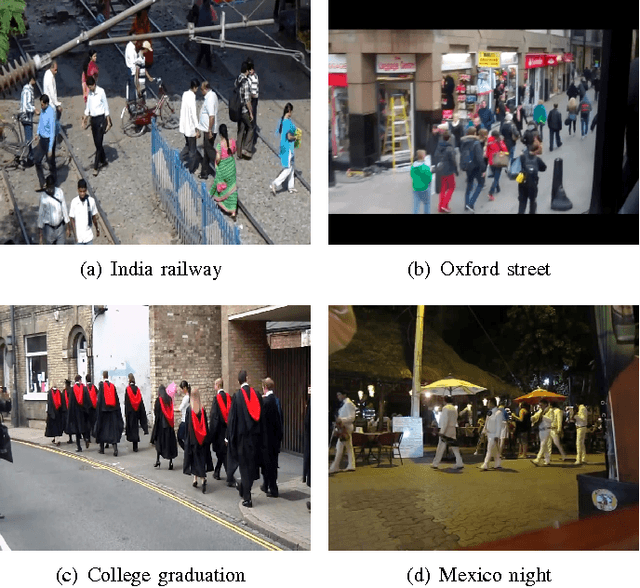

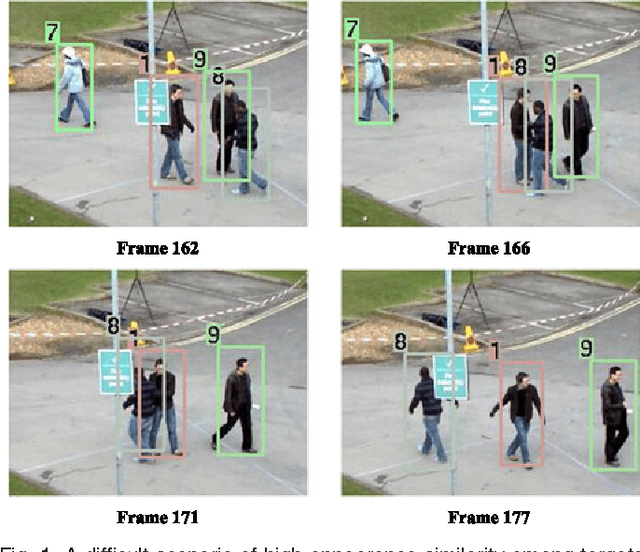

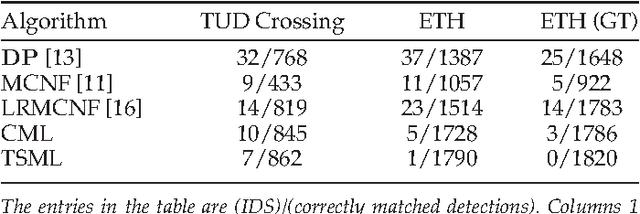

Abstract: In this paper, we study the challenging problem of multi-object tracking in a complex scene captured by a single camera. Unlike existing tracklet association-based tracking methods, we propose a novel and efficient way to obtain discriminative appearance-based tracklet affinity models. Our method jointly learns convolutional neural networks (CNNs) and temporally constrained metrics. A Siamese CNN is first pre-trained on auxiliary data. Then the Siamese CNN and the temporally constrained metrics are jointly learned online to construct the appearance-based tracklet affinity models. In this way, hierarchical deep features and temporally constrained segment-wise metrics are learned jointly under a unified framework. For reliable association between tracklets, we propose a novel loss function that incorporates a temporally constrained multi-task learning mechanism. With the proposed method, tracklet association can be accomplished even in challenging situations. Moreover, we create a new dataset of 40 fully annotated sequences to facilitate tracking evaluation. Experimental results on five public datasets and the new large-scale dataset show that our method outperforms several state-of-the-art multi-object tracking approaches.
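
To make the pairwise setup concrete, here is a minimal NumPy sketch of a Siamese pair distance under a learned metric. It is not the paper's network or loss. A single shared linear layer plus ReLU stands in for the Siamese CNN, the metric is parameterized as M = LᵀL, `contrastive_loss` is a generic hinge-style contrastive loss, and `temporal_smoothness` is an assumed way of coupling the segment-wise metrics of adjacent temporal segments. All function names are hypothetical.

```python
import numpy as np


def embed(x, W):
    # Stand-in for one Siamese branch: a linear layer followed by ReLU.
    # Both inputs of a pair go through the SAME weights W (weight sharing).
    return np.maximum(W @ x, 0.0)


def pair_distance(x1, x2, W, L):
    # Squared distance under the PSD metric M = L^T L:
    # ||L (f1 - f2)||^2 = (f1 - f2)^T M (f1 - f2).
    d = L @ (embed(x1, W) - embed(x2, W))
    return float(d @ d)


def contrastive_loss(dist, same_target, margin=1.0):
    # Hinge-style contrastive loss: pull same-target pairs together and
    # push different-target pairs until their distance exceeds the margin.
    return dist if same_target else max(0.0, margin - dist)


def temporal_smoothness(Ls):
    # Hypothetical temporal constraint: metrics of adjacent temporal segments
    # should not differ much (sum of squared Frobenius-norm differences).
    return sum(float(np.sum((Ls[k + 1] - Ls[k]) ** 2)) for k in range(len(Ls) - 1))
```

For identical inputs, `pair_distance(x, x, W, L)` is 0.0 for any shared `W` and `L`. In a full training loop, `W` and each segment's `L` would be updated by gradient descent on the summed pair losses plus the smoothness term.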

Tracklet Association by Online Target-Specific Metric Learning and Coherent Dynamics Estimation

Apr 22, 2016

Abstract: In this paper, we present a novel method for tracklet (track fragment) association in long-term multi-person tracking, based on online target-specific metric learning and coherent dynamics estimation, with the association solved by network flow optimization. Our framework exploits appearance and motion cues to prevent identity switches during tracking and to recover missed detections. Target-specific metrics (the appearance cue) and motion dynamics (the motion cue) are learned and estimated online, i.e., during the tracking process. Our approach is effective even when such cues fail to identify or follow the target because of occlusions or object-to-object interactions. We also propose to learn the weights of these two cues so that difficult situations, such as severe occlusions and object-to-object interactions, are handled effectively. Our method has been validated on several public datasets, and the experimental results show that it outperforms several state-of-the-art tracking methods.
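
The abstract combines an appearance cue and a motion cue with learned weights. The sketch below assumes a squared Mahalanobis appearance distance under a target-specific metric `M`, a constant-velocity motion model, and fixed cue weights `w_app` and `w_mot` (the paper learns these). In place of the paper's network flow optimization, it solves the simpler one-to-one linking problem exactly by enumeration, which is a special case of min-cost flow. The function names are hypothetical.

```python
import itertools

import numpy as np


def link_cost(app_end, app_start, M, end_pos, end_vel, start_pos, gap,
              w_app=0.5, w_mot=0.5):
    # Cost of linking the tail of tracklet A to the head of tracklet B.
    # Appearance cue: squared Mahalanobis distance under a target-specific metric M.
    diff = app_end - app_start
    app_cost = float(diff @ M @ diff)
    # Motion cue: error of a constant-velocity prediction across the frame gap.
    predicted = end_pos + gap * end_vel
    mot_cost = float(np.sum((predicted - start_pos) ** 2))
    # Weighted combination of the two cues (weights are fixed here for simplicity).
    return w_app * app_cost + w_mot * mot_cost


def best_assignment(cost):
    # Exact minimum-cost one-to-one linking by enumerating all permutations.
    # cost[i, j] is the cost of linking tracklet end i to tracklet start j.
    n = cost.shape[0]
    return list(min(itertools.permutations(range(n)),
                    key=lambda p: sum(cost[i, p[i]] for i in range(n))))
```

Enumeration grows factorially with the number of tracklets, so it only illustrates the objective. A network-flow formulation scales far better and also adds entry and exit edges, so that tracklets can start or end without being linked.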

Video Tracking Using Learned Hierarchical Features

Nov 25, 2015

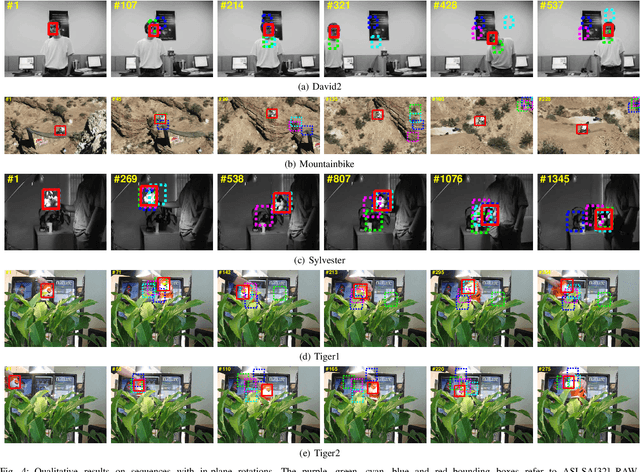

Abstract: In this paper, we propose an approach to learning hierarchical features for visual object tracking. First, we learn features offline from auxiliary video sequences so that they are robust to diverse motion patterns. The hierarchical features are learned with a two-layer convolutional neural network. Embedding the temporal slowness constraint in the stacked architecture makes the learned features robust to complicated motion transformations, which is important for visual object tracking. Then, given a target video sequence, we propose a domain adaptation module that adapts the pre-learned features online to the specific target object. The adaptation is conducted in both layers of the deep feature learning module so as to incorporate appearance information of the specific target object. As a result, the learned hierarchical features are robust to both complicated motion transformations and appearance changes of target objects. We integrate our feature learning algorithm into three tracking methods. Experimental results demonstrate that our learned hierarchical features yield significant improvements, especially on video sequences with complicated motion transformations.
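
A temporal slowness constraint penalizes features that change quickly between consecutive frames. The following sketch shows one common form of a single-layer objective, assuming an L1 slowness penalty, a tied-weight linear encoder/decoder with filters `W`, and a trade-off weight `lam`. The paper's two-layer architecture, pooling, and exact objective are not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def slowness_penalty(H):
    # H: (T, k) features of T consecutive frames.
    # L1 temporal slowness: features of neighboring frames should change little.
    return float(np.sum(np.abs(np.diff(H, axis=0))))


def layer_objective(frames, W, lam=0.1):
    # frames: (T, d) vectorized patches from consecutive frames; W: (k, d) filters.
    H = frames @ W.T                 # (T, k) linear codes
    recon = H @ W                    # (T, d) reconstructions with tied weights
    rec_err = float(np.sum((frames - recon) ** 2))
    # Slowness alone is minimized by constant features, so it is paired with
    # a reconstruction term that forces the features to describe the input.
    return rec_err + lam * slowness_penalty(H)
```

The reconstruction term is the design choice that matters here: without it, the slowness penalty is trivially minimized by features that ignore the input altogether.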

* 12 pages, 7 figures

Matching-Constrained Active Contours

Jul 24, 2013

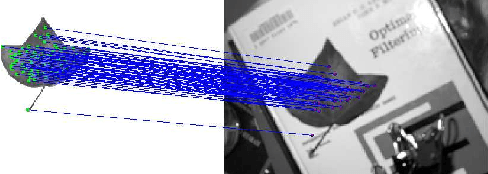

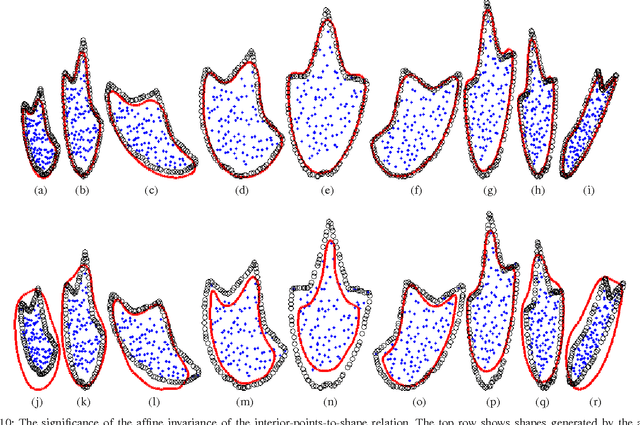

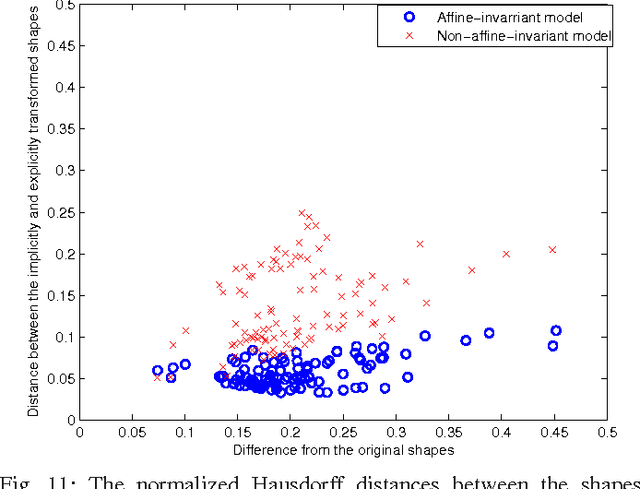

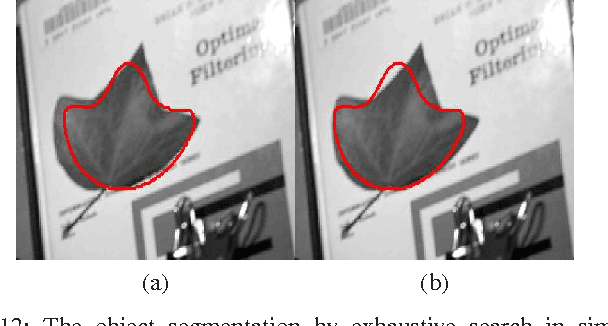

Abstract: In object segmentation by active contours, an initial contour is usually required, and it is conventionally provided by the user. This paper extends the conventional active contour model by incorporating feature matching into the formulation, which gives rise to a novel matching-constrained active contour. The numerical solution of the new optimization model provides an automated object segmentation framework that requires no user intervention. The main idea is to incorporate feature point matching as a constraint in active contour models. To this end, we derive a mathematical model relating interior points to the boundary contour, so that matching of interior feature points yields contour alignment. We then formulate the matching score as a constraint on the active contour model, so that the contour alignment given by the maximum-score feature matching provides the initial feasible solution of the constrained segmentation problem. The constraint also ensures that the optimal contour does not deviate too much from the initial contour. Projected-gradient descent equations are derived to solve the constrained optimization. In the experiments, we show that our method achieves automatic object segmentation and outperforms related methods.
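
The abstract solves the constrained segmentation problem by projected-gradient descent, with a constraint that keeps the contour close to the initial one. As a toy illustration only, the sketch below assumes a closed polygonal contour, replaces the paper's energy and matching-score constraint with a squared-edge-length smoothness energy and a Frobenius-norm ball of radius `r` around the initial contour `C0`, and alternates a gradient step with projection back onto that ball. The names are hypothetical.

```python
import numpy as np


def smoothness_energy(C):
    # Sum of squared edge lengths of a closed polygonal contour C, shape (N, 2).
    return float(np.sum((np.roll(C, -1, axis=0) - C) ** 2))


def smoothness_grad(C):
    # dE/dC_i = 2 * (2 C_i - C_{i-1} - C_{i+1}) for the energy above.
    return 2.0 * (2.0 * C - np.roll(C, 1, axis=0) - np.roll(C, -1, axis=0))


def project(C, C0, r):
    # Euclidean projection onto the feasible set {C : ||C - C0||_F <= r}.
    d = C - C0
    n = np.linalg.norm(d)
    return C if n <= r else C0 + d * (r / n)


def projected_gradient_descent(C0, r, step=0.1, iters=200):
    # C0 is feasible by construction, playing the role of the initial contour.
    C = C0.copy()
    for _ in range(iters):
        C = project(C - step * smoothness_grad(C), C0, r)
    return C
```

Minimizing the smoothness energy alone would shrink the contour to a point. The projection stops it at distance `r` from `C0`, mirroring the role the abstract gives the constraint. Because the Hessian of this energy has eigenvalues of at most 8, a step size of 0.1 keeps each projected step from increasing the energy.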

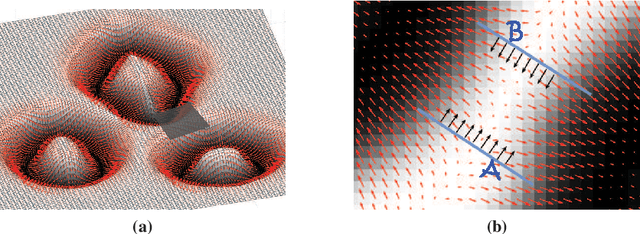

Piecewise Linear Patch Reconstruction for Segmentation and Description of Non-smooth Image Structures

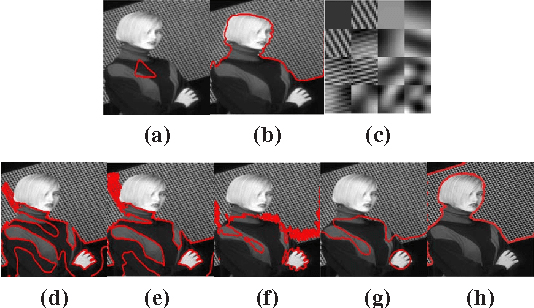

Jul 21, 2012

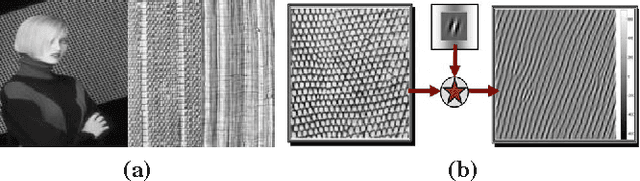

Abstract: In this paper, we propose a unified energy minimization model for the segmentation of non-smooth image structures. The energy of piecewise linear patch reconstruction serves as an objective measure of segmentation quality for non-smooth structures. Segmentation is achieved by minimizing this single energy, without any separate feature extraction step. We also prove that the segmentation error is bounded by the proposed energy functional, so minimizing the proposed energy leads to a reduction in the segmentation error. As a by-product, our method produces a dictionary of optimized orthonormal descriptors for each segmented region. The unique feature of our method is that it achieves simultaneous segmentation and description of non-smooth image structures within the same optimization framework. The experiments validate our theoretical claims and show the clearly superior performance of our method over related methods for segmenting various image textures. We show that our model can be coupled with the piecewise smooth model to handle both smooth and non-smooth structures, and we demonstrate that it can cope with multiple regions through a one-against-all strategy.
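
The link between patch reconstruction and segmentation can be sketched as follows, assuming that each region's patches are approximated by a k-dimensional linear subspace whose orthonormal basis (the per-region descriptors) comes from a truncated SVD. The energy of a labeling is then the total reconstruction residual. This is a simplified stand-in for the paper's functional, which is minimized over the segmentation itself. `k` and the function names are assumptions.

```python
import numpy as np


def region_descriptors(patches, k):
    # patches: (n, d) vectorized patches from one region.
    # The top-k right singular vectors form an orthonormal basis (k, d)
    # of the best-fitting k-dimensional subspace.
    _, _, Vt = np.linalg.svd(patches, full_matrices=False)
    return Vt[:k]


def reconstruction_energy(patches, basis):
    # Residual after projecting each patch onto span(basis); rows are orthonormal.
    recon = (patches @ basis.T) @ basis
    return float(np.sum((patches - recon) ** 2))


def segmentation_energy(patches, labels, k):
    # Total energy: each region is reconstructed with its own descriptors.
    total = 0.0
    for lab in np.unique(labels):
        P = patches[labels == lab]
        total += reconstruction_energy(P, region_descriptors(P, k))
    return total
```

A labeling that groups patches drawn from the same subspace yields near-zero energy, while mixing subspaces raises it, which is the property a segmentation energy needs.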

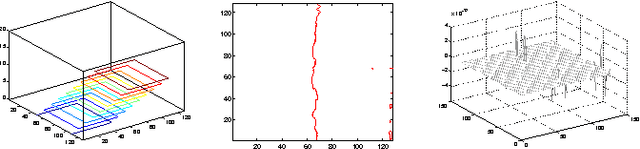

The Stability of Convergence of Curve Evolutions in Vector Fields

Jun 17, 2012

Abstract: Curve evolution is often used to solve computer vision problems. If the curve evolution fails to converge, we would not be able to solve the targeted problem in a lifetime. This paper studies the theoretical aspects of convergence for a general class of curve evolutions. We establish a theory for analyzing and improving the stability of convergence of such curve evolutions. Based on this theory, we find that the convergence of a known curve evolution is marginally stable. We propose a way to modify the original curve evolution equation so as to improve the stability of its convergence according to our theory. Numerical experiments show that the modification improves the convergence of the curve evolution, which validates our theory.

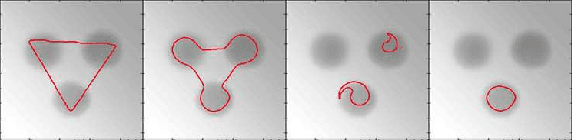

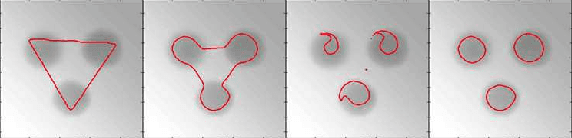

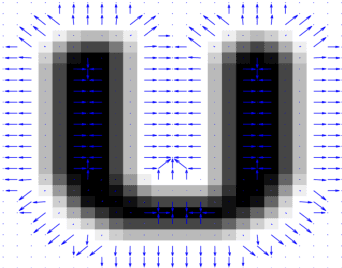

Active Contour with A Tangential Component

Apr 29, 2012

Abstract: Conventional edge-based active contours often require only the normal component of the gradient of an edge indicator function to approximate zero on the optimal contours, while the tangential component can still be significant. In real images, the full gradients of the edge indicator function along the object boundaries are often small. Hence, with a careless contour initialization, the curve evolution of edge-based active contours can terminate early, before converging to the object boundaries. We propose a novel Geodesic Snakes (GeoSnakes) active contour that requires the full gradient of the edge indicator to vanish at the optimal solution. Moreover, the conventional curve evolution approach for minimizing active contour energy cannot fully solve the Euler-Lagrange (EL) equation of our GeoSnakes active contour, causing a Pseudo Stationary Phenomenon (PSP). To address the PSP problem, we propose an auxiliary curve evolution equation, named the equilibrium flow (EF) equation. Based on the EF and the conventional curve evolution, we obtain a solution to the full EL equation of the GeoSnakes active contour. Experimental results validate the proposed geometrical interpretation of the early termination problem and show that the proposed method overcomes it.
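
The distinction between the normal and tangential components can be illustrated with a common choice of edge indicator for geodesic active contours, g = 1 / (1 + |∇I|²). The sketch assumes no Gaussian presmoothing (implementations typically smooth the image first) and a known normal at a contour point. The function names are hypothetical, and this is not the GeoSnakes or equilibrium-flow method itself.

```python
import numpy as np


def edge_indicator(image):
    # g = 1 / (1 + |grad I|^2); small on strong edges, close to 1 in flat areas.
    gy, gx = np.gradient(image.astype(float))
    return 1.0 / (1.0 + gx ** 2 + gy ** 2)


def split_gradient(grad_g, normal):
    # Split grad g at a contour point into its normal and tangential parts.
    n = normal / np.linalg.norm(normal)
    normal_part = float(grad_g @ n)
    tangential_part = grad_g - normal_part * n
    return normal_part, tangential_part
```

For `grad_g = [0.0, 0.3]` and normal `[1, 0]`, the normal part is 0.0 while the tangential part is `[0.0, 0.3]`. The conventional stationarity condition is then met even though the full gradient of g does not vanish, which is the situation the full-gradient requirement of GeoSnakes is meant to rule out.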