The Stability of Convergence of Curve Evolutions in Vector Fields


Jun 17, 2012

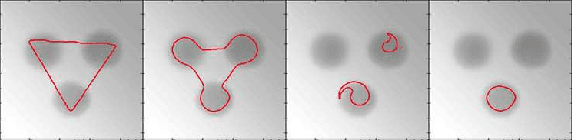

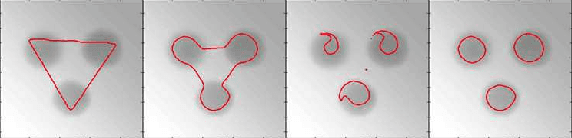

Curve evolution is often used to solve computer vision problems. If the curve evolution fails to converge, the targeted problem cannot be solved no matter how long the evolution runs. This paper studies the theoretical aspects of the convergence of a class of general curve evolutions. We establish a theory for analyzing and improving the stability of their convergence. Based on this theory, we show that the convergence of a known curve evolution is only marginally stable. Guided by the theory, we propose a modification of the original curve evolution equation that improves the stability of its convergence. Numerical experiments show that the modification improves the convergence of the curve evolution, which validates our theory.
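The abstract does not state the evolution equation or the paper's stability theory, so the sketch below is only a generic illustration of the terminology "marginally stable", not the authors' method. It assumes, for illustration, that near a converged curve the evolution of a small perturbation x can be linearized to dx/dt = J x, and classifies J by its eigenvalues: if every eigenvalue has a negative real part, perturbations decay (asymptotically stable); if the largest real part is zero and there is no Jordan block, perturbations stay bounded but need not decay (marginally stable). The function names and the restriction to a real 2x2 matrix are hypothetical simplifications.

```python
import cmath


def eigenvalues_2x2(j):
    """Eigenvalues of a real 2x2 matrix j = [[a, b], [c, d]] from its characteristic polynomial."""
    (a, b), (c, d) = j
    tr = a + d
    det = a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)  # complex sqrt also covers conjugate-pair eigenvalues
    return (tr + disc) / 2, (tr - disc) / 2


def classify_linear_stability(j, tol=1e-9):
    """Classify the equilibrium x = 0 of the hypothetical linearized system dx/dt = j x."""
    l1, l2 = eigenvalues_2x2(j)
    max_re = max(l1.real, l2.real)
    if max_re < -tol:
        return "asymptotically stable"  # every perturbation decays to 0
    if max_re > tol:
        return "unstable"  # some perturbation grows exponentially
    # Largest real part is (numerically) zero. For a real 2x2 matrix, a repeated
    # eigenvalue on the imaginary axis must be 0, and it is semisimple only if j == 0.
    repeated = abs(l1 - l2) < tol
    nonzero = any(abs(x) > tol for row in j for x in row)
    if repeated and nonzero:
        return "unstable"  # Jordan block: perturbations grow linearly in t
    return "marginally stable"  # perturbations stay bounded but need not decay


if __name__ == "__main__":
    examples = [
        ("decaying", [[-1.0, 0.0], [0.0, -2.0]]),
        ("rotating", [[0.0, 1.0], [-1.0, 0.0]]),
        ("shearing", [[0.0, 1.0], [0.0, 0.0]]),
    ]
    for name, j in examples:
        print(name, classify_linear_stability(j))
```

In the "rotating" case a perturbation circles the equilibrium without shrinking, which is one intuitive reading of why a marginally stable convergence is fragile; whether the paper's analysis takes this linearized form is an assumption here.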
