Joshua E. Siegel

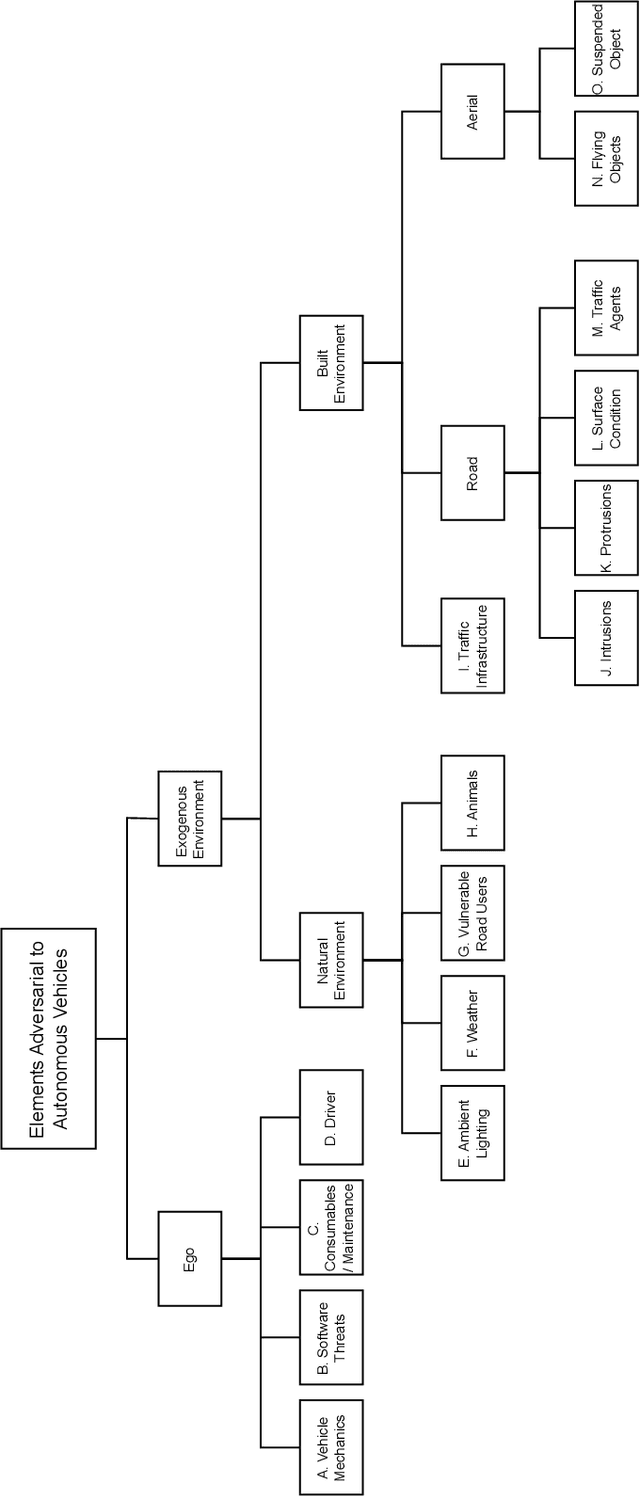

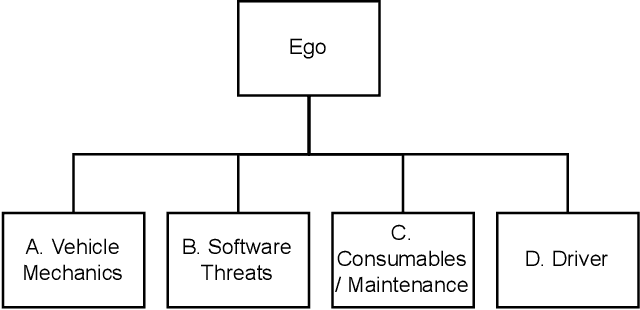

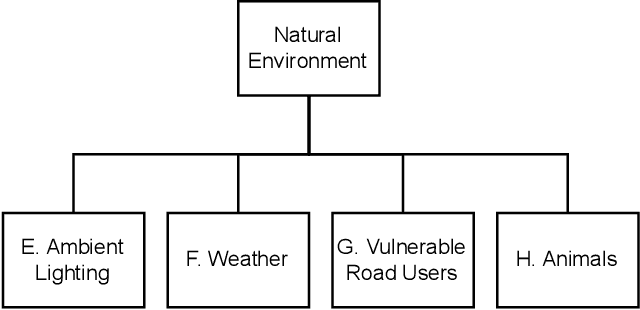

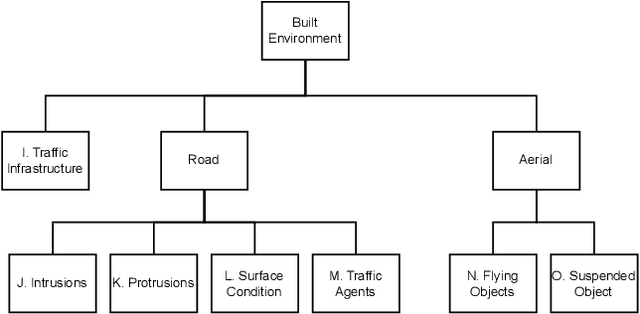

Developing a Taxonomy of Elements Adversarial to Autonomous Vehicles

Feb 29, 2024

Abstract: As highly automated vehicles reach higher deployment rates, they find themselves in increasingly dangerous situations. Because a crash carries significant consequences for the health of occupants, bystanders, and property, as well as for the viability of autonomy and adjacent businesses, we must find more effective ways to comprehensively and reliably train autonomous vehicles to navigate the complex scenarios with which they struggle. We therefore introduce a taxonomy of potentially adversarial elements that may contribute to poor performance or system failures, as a means of identifying and elucidating less frequently seen risks. This taxonomy may be used to characterize failures of automation, and to support simulation and real-world training efforts by providing a more comprehensive classification system for events resulting in disengagement, collision, or other negative consequences. The taxonomy was created from and tested against real collision events to ensure comprehensive coverage with minimal class overlap and few omissions. It is intended to be used both to identify harm-contributing adversarial events and to generate them (creating extreme edge- and corner-case scenarios) in training procedures.

The AI Mechanic: Acoustic Vehicle Characterization Neural Networks

May 19, 2022

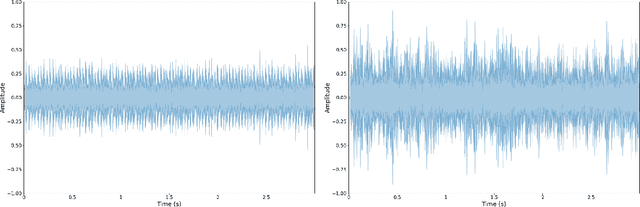

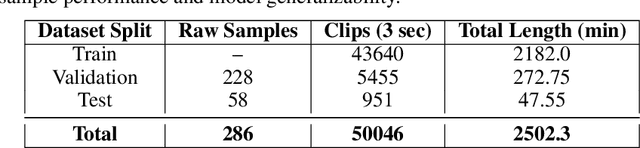

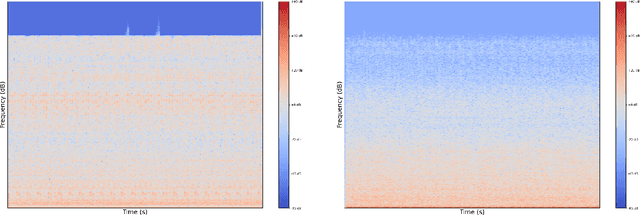

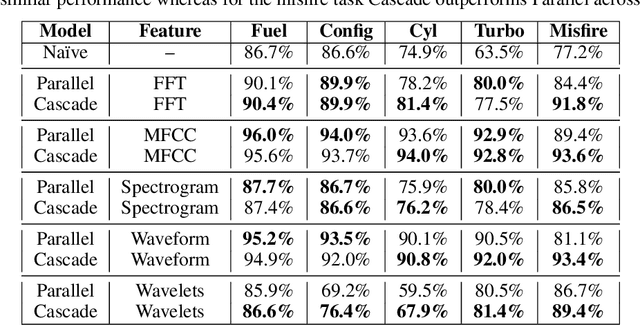

Abstract: In a world increasingly dependent on road-based transportation, it is essential to understand vehicles. We introduce the AI mechanic, an acoustic vehicle characterization deep learning system, as an integrated approach that uses sound captured from mobile devices to give non-expert users greater transparency into, and understanding of, vehicles and their condition. We develop and implement novel cascading architectures for vehicle understanding, which we define as sequential, conditional, multi-level networks that process raw audio to extract highly granular insights. To showcase the viability of cascading architectures, we build a multi-task convolutional neural network that predicts vehicle attributes and cascades them to enhance fault detection. We train and test these models on a synthesized dataset reflecting more than 40 hours of augmented audio and achieve >92% validation set accuracy on attributes (fuel type, engine configuration, cylinder count, and aspiration type). Our cascading architecture additionally achieved 93.6% validation and 86.8% test set accuracy on misfire fault prediction, improvements (validation / test) of 16.4% / 7.8% over a naïve baseline and 4.2% / 1.5% over a parallel baseline. We also present experimental studies of acoustic features, data augmentation, feature fusion, and data reliability. Finally, we conclude with a discussion of broader implications, future directions, and application areas for this work.

Game and Simulation Design for Studying Pedestrian-Automated Vehicle Interactions

Sep 30, 2021

Abstract: The present cross-disciplinary research explores pedestrian-autonomous vehicle interactions in a safe, virtual environment. We first present contemporary tools in the field and then propose the design and development of a new application that facilitates pedestrian point-of-view research. We conduct a three-step user experience experiment in which participants answer questions before and after using the application in various scenarios. Participants' behavior in the virtual environment, especially when their actions had consequences, tended to mirror real life closely enough to inform design choices, and we gained valuable insights into human/vehicle interaction. Our tool appeared to begin raising participants' awareness of autonomous vehicles and their capabilities and limitations, which is an important step in overcoming public distrust of AVs. Further, studying how users respect or take advantage of AVs may help inform future operating-mode indicator design, as well as algorithm biases that might support socially optimal AV operation.

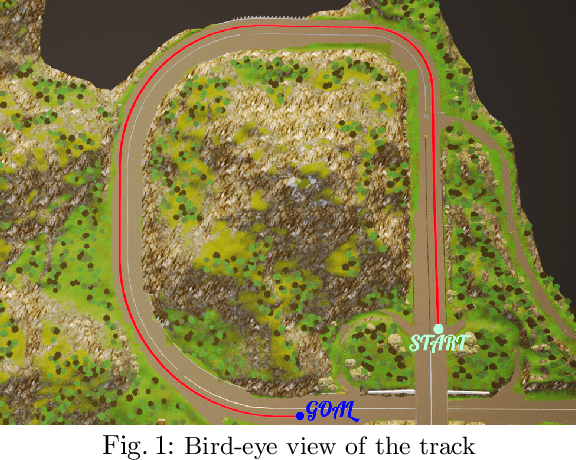

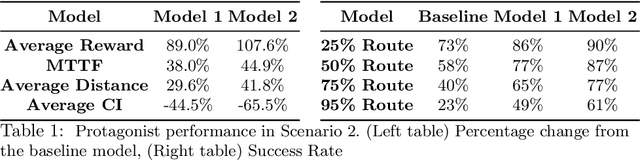

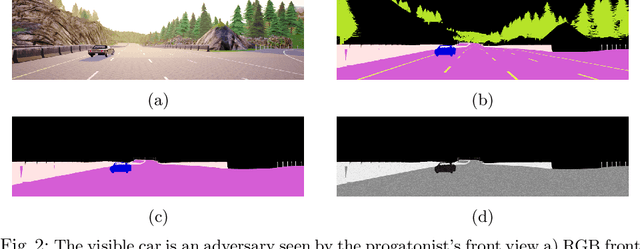

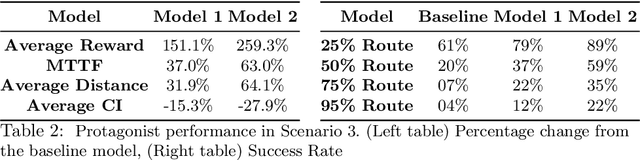

Towards Safer Self-Driving Through Great PAIN

Mar 24, 2020

Abstract: Automated vehicles' neural networks suffer from overfitting, poor generalizability, and untrained edge cases due to limited data availability. Researchers synthesize randomized edge-case scenarios to assist in the training process, though simulation introduces the potential for overfitting to latent rules and features. Automating worst-case scenario generation could yield informative data for improving self-driving. To this end, we introduce a "Physically Adversarial Intelligent Network" (PAIN), wherein self-driving vehicles interact aggressively in the CARLA simulation environment. We train two agents, a protagonist and an adversary, using dueling double deep Q networks (DDDQNs) with prioritized experience replay. The coupled networks alternately seek to collide and to avoid collisions, such that the "defensive" avoidance algorithm increases the mean time to failure and the distance traveled under non-hostile operating conditions. The trained protagonist becomes more resilient to environmental uncertainty and less prone to corner-case failures resulting in collisions than an agent trained without an adversary.
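The abstract names three standard reinforcement-learning ingredients: a dueling Q-network head, double-DQN targets, and prioritized experience replay. The NumPy sketch below shows the generic, textbook form of each; it is not the PAIN code. The function names, the default γ, α, and β values, and normalizing importance weights by the batch maximum are assumptions made for illustration.

```python
import numpy as np


def dueling_q(value, advantage):
    """Dueling aggregation (generic form, assumed): Q(s, a) = V(s) + A(s, a) - mean_a A(s, a).

    value has shape (batch,), advantage has shape (batch, n_actions).
    """
    value = np.asarray(value, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    return value[:, None] + advantage - advantage.mean(axis=1, keepdims=True)


def double_dqn_targets(rewards, dones, q_next_online, q_next_target, gamma=0.99):
    """Double DQN targets: the online network picks the next action,
    the target network scores it; terminal transitions do not bootstrap."""
    q_next_online = np.asarray(q_next_online, dtype=float)
    q_next_target = np.asarray(q_next_target, dtype=float)
    best_actions = np.argmax(q_next_online, axis=1)
    evaluated = q_next_target[np.arange(len(best_actions)), best_actions]
    not_done = 1.0 - np.asarray(dones, dtype=float)
    return np.asarray(rewards, dtype=float) + gamma * not_done * evaluated


def per_sample(priorities, batch_size, alpha=0.6, beta=0.4, rng=None):
    """Proportional prioritized replay (assumed hyperparameters).

    Samples indices with probability p_i**alpha / sum_k p_k**alpha and returns
    importance-sampling weights (N * P(i))**(-beta), scaled so the batch max is 1.
    """
    rng = np.random.default_rng() if rng is None else rng
    scaled = np.asarray(priorities, dtype=float) ** alpha
    probs = scaled / scaled.sum()
    idx = rng.choice(len(probs), size=batch_size, p=probs)
    weights = (len(probs) * probs[idx]) ** (-beta)
    return idx, weights / weights.max()
```

For example, `dueling_q([1.0, 0.0], [[1.0, -1.0], [2.0, 0.0]])` gives `[[2, 0], [1, -1]]`: subtracting the mean advantage keeps V and A identifiable. In a two-agent setup each agent would keep its own copy of these pieces and its own reward signal; how PAIN defines the protagonist and adversary rewards is not stated in this abstract.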

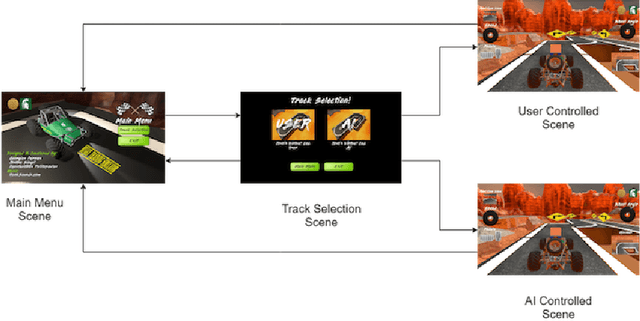

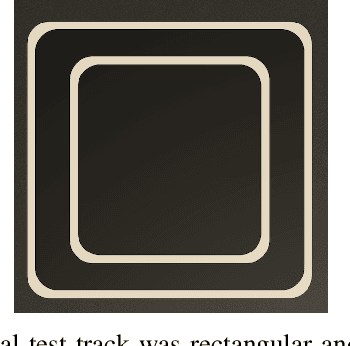

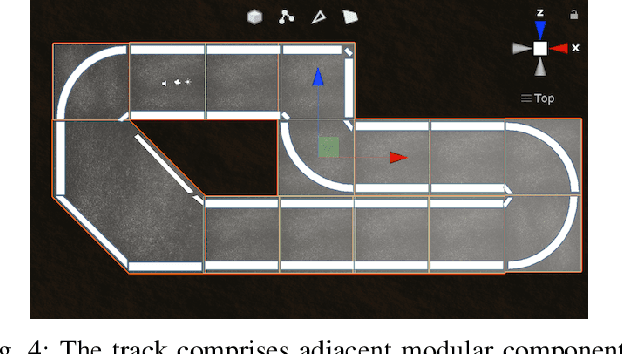

A gamified simulator and physical platform for self-driving algorithm training and validation

Nov 18, 2019

Abstract: We identify the need for a gamified self-driving simulator whose game mechanics encourage high-quality data capture, and we design and apply such a simulator to collect lane-following training data. The resulting synthetic data enables a Convolutional Neural Network (CNN) to drive an in-game vehicle. We simultaneously develop a physical test platform based on a radio-controlled vehicle and the Robot Operating System (ROS), and successfully transfer the simulation-trained model to the physical domain without modification. The cross-platform simulator facilitates unsupervised crowdsourcing, helping to collect diverse data representing complex, dynamic environments, infrequent events, and edge cases. The physical platform provides a low-cost solution for validating simulation-trained models or enabling rapid transfer learning, thereby improving the safety and resilience of self-driving algorithms.

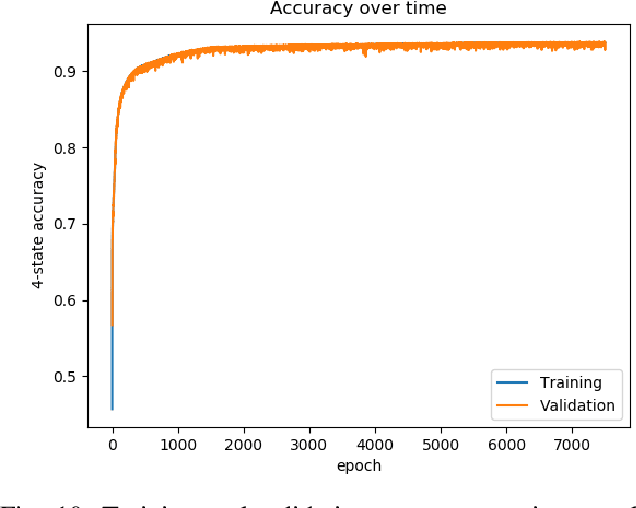

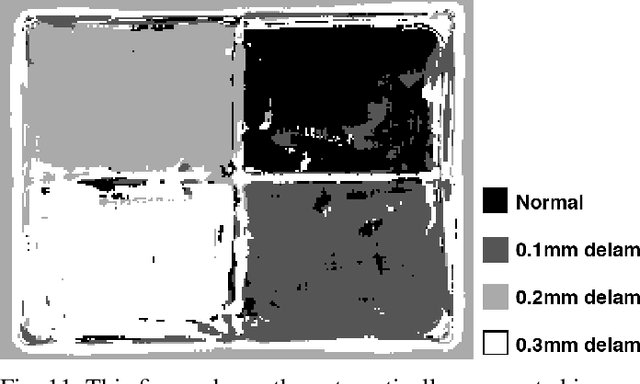

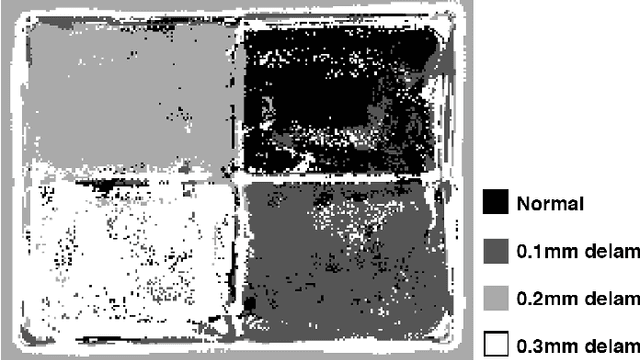

Automated Non-Destructive Inspection of Fused Filament Fabrication Components Using Thermographic Signal Reconstruction

Jul 05, 2019

Abstract: Manufacturers struggle to produce low-cost, robust, and complex components at a manufacturing lot size of one. Additive processes like Fused Filament Fabrication (FFF) inexpensively produce complex geometries, but defects limit their viability in critical applications. We present an approach to high-accuracy, high-throughput, and low-cost automated non-destructive testing (NDT) for FFF interlayer delamination, using Flash Thermography (FT) data processed with Thermographic Signal Reconstruction (TSR) and Artificial Intelligence (AI). A Deep Neural Network (DNN) attains 95.4% per-pixel accuracy when differentiating four delamination thicknesses 5 mm subsurface in Polylactic Acid (PLA) widgets, and 98.6% accuracy in differentiating acceptable from unacceptable condition for the same components. Automated inspection enables time- and cost-efficient 100% inspection for delamination defects, supporting FFF's use in critical and small-batch applications.
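Thermographic Signal Reconstruction is commonly described as fitting a low-order polynomial to each pixel's post-flash cooling curve in log-log space, then using the analytic first and second logarithmic derivatives as defect-sensitive features. The minimal NumPy sketch below follows that description. The function name `tsr_fit`, the polynomial degree of 5, the time sampling, and the synthetic ΔT ∝ t^(-1/2) curve (an ideal defect-free semi-infinite solid) are assumptions, not details taken from the paper.

```python
import numpy as np


def tsr_fit(time_s, delta_temp, degree=5):
    """Fit ln(dT) as a polynomial in ln(t) for one pixel (assumed degree).

    Returns the reconstructed temperature curve and the first and second
    derivatives of ln(dT) with respect to ln(t), evaluated at each sample.
    """
    log_t = np.log(time_s)
    log_dt = np.log(delta_temp)
    coeffs = np.polyfit(log_t, log_dt, degree)   # highest power first
    d1 = np.polyder(coeffs, 1)
    d2 = np.polyder(coeffs, 2)
    reconstructed = np.exp(np.polyval(coeffs, log_t))
    return reconstructed, np.polyval(d1, log_t), np.polyval(d2, log_t)


# Synthetic, defect-free pixel (assumed): dT proportional to t^(-1/2).
t = np.logspace(-2, 1, 200)          # seconds after the flash
delta_t = 2.0 * t ** -0.5
recon, dlog1, dlog2 = tsr_fit(t, delta_t)
```

On this ideal curve the first logarithmic derivative stays at about -0.5 and the second at about 0. A subsurface delamination would be expected to appear as a departure from that slope at later times, and per-pixel derivative values are one plausible input to a per-pixel classifier such as the DNN described above.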

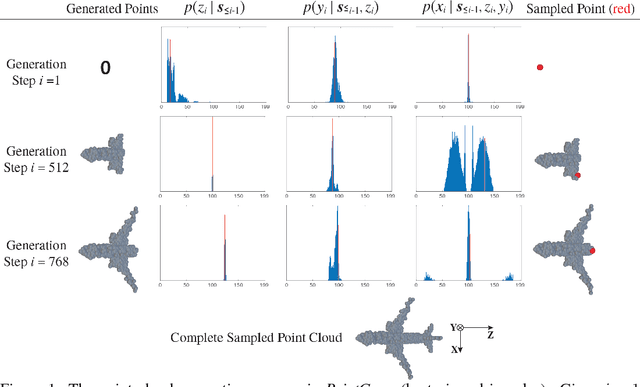

PointGrow: Autoregressively Learned Point Cloud Generation with Self-Attention

Oct 12, 2018

Abstract: A point cloud is an agile 3D representation that efficiently models an object's surface geometry. However, these surface-centric properties also pose challenges for designing tools to recognize and synthesize point clouds. This work presents a novel autoregressive model, PointGrow, which generates realistic point cloud samples from scratch or conditioned on given semantic contexts. Our model operates recurrently, with each point sampled according to a conditional distribution given its previously generated points. Since point cloud object shapes are typically encoded by long-range interpoint dependencies, we augment our model with dedicated self-attention modules to capture these relations. Extensive evaluation demonstrates that PointGrow achieves satisfactory performance on both unconditional and conditional point cloud generation tasks with respect to fidelity, diversity, and semantic preservation. Further, conditional PointGrow learns a smooth manifold of given image conditions within which 3D shape interpolation and arithmetic operations can be performed. Code and models are available at: https://github.com/syb7573330/PointGrow.
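Sampling each point from a conditional distribution given the previously generated points corresponds to the chain-rule factorization of the point cloud's joint density. Written with our own notation (a cloud $S = (s_1, \ldots, s_n)$ in some fixed generation order, and an optional condition $c$ such as a semantic label or image), the unconditional and conditional forms are:

```latex
p(S) = \prod_{i=1}^{n} p\left(s_i \mid s_1, \ldots, s_{i-1}\right),
\qquad
p(S \mid c) = \prod_{i=1}^{n} p\left(s_i \mid s_1, \ldots, s_{i-1}, c\right)
```

Generation draws $s_1$, then $s_2$ given $s_1$, and so on. How PointGrow orders the points and parameterizes each conditional is not specified in this abstract.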
