Demetris Coleman

The Rational Selection of Goal Operations and the Integration of Search Strategies with Goal-Driven Autonomy

Jan 21, 2022

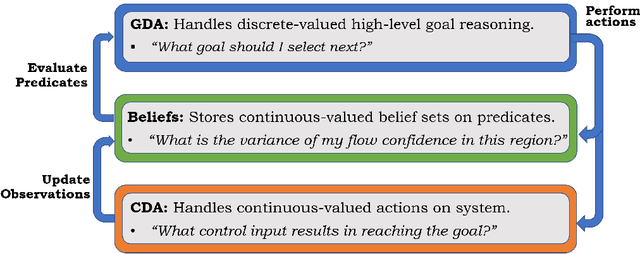

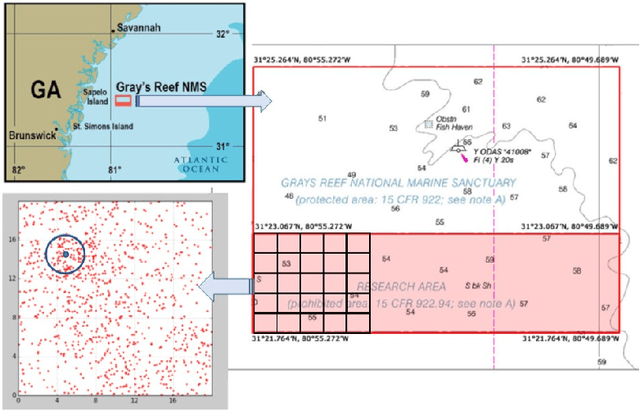

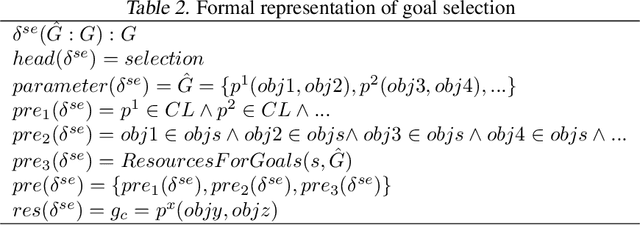

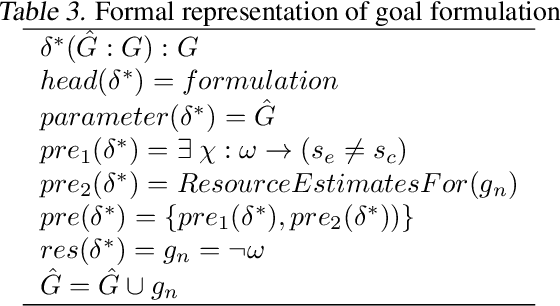

Abstract: Intelligent physical systems, as embodied cognitive systems, must perform high-level reasoning while concurrently managing an underlying control architecture. The link between cognition and control must handle the conversion of continuous values from the real world to symbolic representations (and back). To generate effective behaviors, reasoning must include a capacity to replan, acquire and update information, detect and respond to anomalies, and perform various operations on system goals. However, these processes are not independent, and their interactions need further exploration. This paper examines an agent's choices when multiple goal operations co-occur and interact, and it establishes a method of choosing between them. We demonstrate the benefits of this method, discuss the trade-offs involved, and show positive results in a dynamic marine search task.

Towards Safer Self-Driving Through Great PAIN

Mar 24, 2020

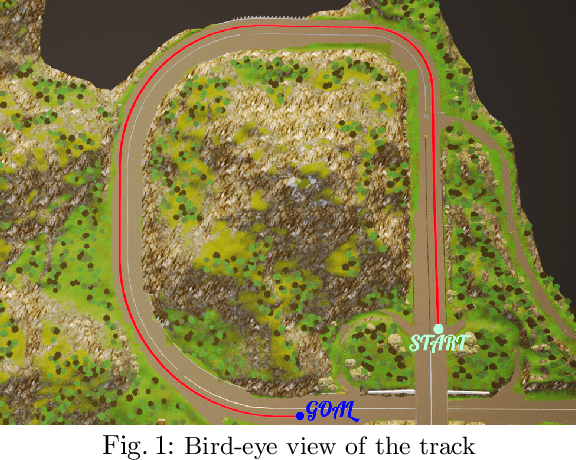

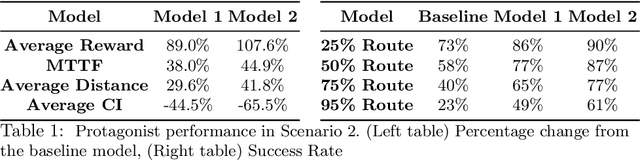

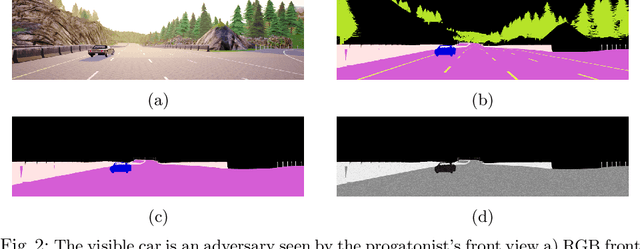

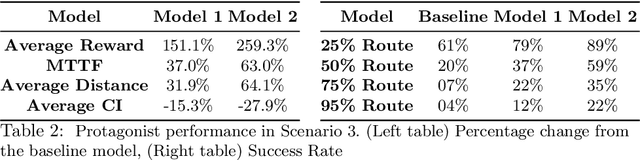

Abstract: Automated vehicles' neural networks suffer from overfitting, poor generalizability, and untrained edge cases due to limited data availability. Researchers synthesize randomized edge-case scenarios to assist in the training process, though simulation introduces the potential for overfitting to latent rules and features. Automating worst-case scenario generation could yield informative data for improving self-driving. To this end, we introduce a "Physically Adversarial Intelligent Network" (PAIN), wherein self-driving vehicles interact aggressively in the CARLA simulation environment. We train two agents, a protagonist and an adversary, using dueling double deep Q networks (DDDQNs) with prioritized experience replay. The coupled networks alternately seek to collide and to avoid collisions, such that the "defensive" avoidance algorithm increases the mean time to failure and distance traveled under non-hostile operating conditions. The trained protagonist becomes more resilient to environmental uncertainty and less prone to corner-case failures resulting in collisions than the agent trained without an adversary.
