The AI Mechanic: Acoustic Vehicle Characterization Neural Networks

May 19, 2022

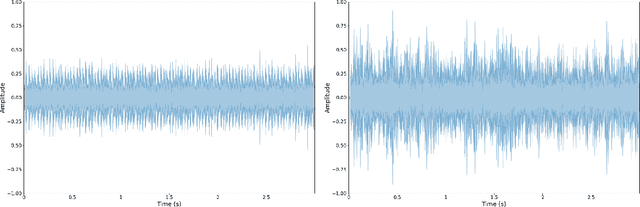

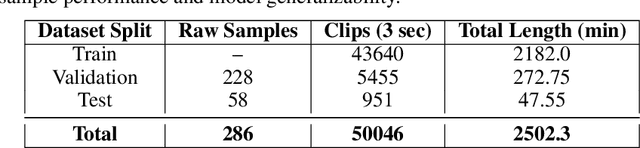

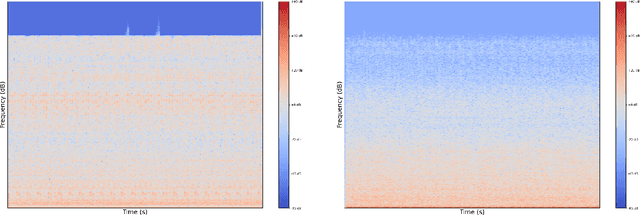

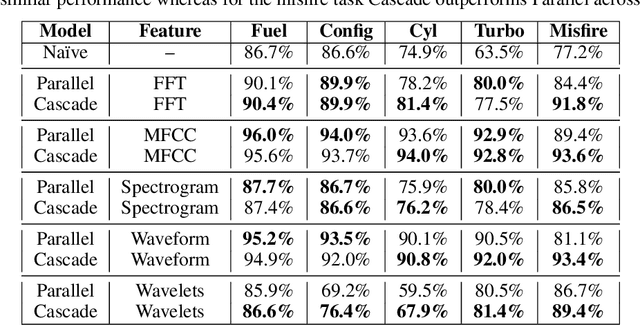

In a world increasingly dependent on road-based transportation, it is essential to understand vehicles. We introduce the AI mechanic, an acoustic vehicle characterization deep learning system, as an integrated approach that uses sound captured from mobile devices to enhance transparency and understanding of vehicles and their condition for non-expert users. We develop and implement novel cascading architectures for vehicle understanding, which we define as sequential, conditional, multi-level networks that process raw audio to extract highly granular insights. To showcase the viability of cascading architectures, we build a multi-task convolutional neural network that predicts and cascades vehicle attributes to enhance fault detection. We train and test these models on a synthesized dataset reflecting more than 40 hours of augmented audio and achieve >92% validation set accuracy on attributes (fuel type, engine configuration, cylinder count, and aspiration type). Our cascading architecture additionally achieves 93.6% validation and 86.8% test set accuracy on misfire fault prediction, demonstrating margins of 16.4% / 7.8% and 4.2% / 1.5% (validation / test) over naïve and parallel baselines, respectively. We also report experimental studies of acoustic features, data augmentation, feature fusion, and data reliability. Finally, we conclude with a discussion of broader implications, future directions, and application areas for this work.
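
The cascading idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' network: the convolutional feature extractor is replaced by a hypothetical fixed-length feature vector, the weights are random, and the class counts, `FEATURE_DIM`, and all function names are assumptions chosen for illustration. It only shows the data flow, in which attribute predictions are computed first and then concatenated with the shared features before the misfire head sees them.

```python
import math
import random

# Attribute heads named in the abstract; the class counts are illustrative assumptions.
ATTRIBUTE_CLASSES = {
    "fuel_type": 2,
    "engine_configuration": 3,
    "cylinder_count": 4,
    "aspiration_type": 2,
}
FEATURE_DIM = 8  # hypothetical size of the shared audio embedding


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, inputs):
    """Bias-free dense layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def random_weights(rng, n_out, n_in):
    """Random stand-in for trained parameters."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def build_model(seed=0):
    """Create one head per attribute plus a fault head sized for the cascade."""
    rng = random.Random(seed)
    heads = {
        name: random_weights(rng, n_classes, FEATURE_DIM)
        for name, n_classes in ATTRIBUTE_CLASSES.items()
    }
    cascade_dim = FEATURE_DIM + sum(ATTRIBUTE_CLASSES.values())
    fault_head = random_weights(rng, 2, cascade_dim)  # misfire vs. no misfire
    return heads, fault_head


def cascade_forward(features, heads, fault_head):
    """Predict attributes, then feed them alongside the features to the fault head."""
    attribute_probs = {
        name: softmax(linear(weights, features)) for name, weights in heads.items()
    }
    cascaded = list(features)
    for name in ATTRIBUTE_CLASSES:  # fixed order keeps the input layout stable
        cascaded.extend(attribute_probs[name])
    fault_probs = softmax(linear(fault_head, cascaded))
    return attribute_probs, fault_probs


if __name__ == "__main__":
    heads, fault_head = build_model(seed=0)
    features = [0.1 * i for i in range(FEATURE_DIM)]  # placeholder embedding
    attributes, fault = cascade_forward(features, heads, fault_head)
    print(attributes)
    print(fault)
```

A parallel baseline, in this framing, would compute the fault head from `features` alone, so the only difference in the sketch is the extra attribute probabilities appended to the fault head's input.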
