Jinyan Wang

An Out-Of-Distribution Membership Inference Attack Approach for Cross-Domain Graph Attacks

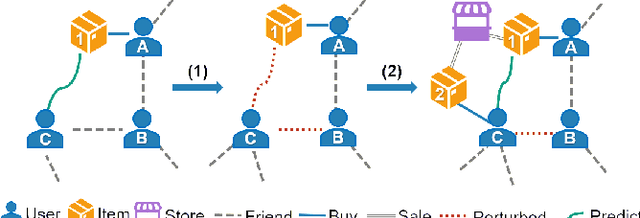

May 26, 2025

Abstract: Graph Neural Network-based methods face privacy leakage risks because they introduce topological structures about the targets, which allows attackers to bypass the target's prior knowledge of the sensitive attributes and carry out membership inference attacks (MIA) by observing and analyzing the topology distribution. As privacy concerns grow, the standard MIA assumption that attackers can obtain an auxiliary dataset with the same distribution is increasingly unrealistic. In this paper, we categorize the distribution diversity issue in real-world MIA scenarios as an Out-Of-Distribution (OOD) problem, and propose a novel Graph OOD Membership Inference Attack (GOOD-MIA) to achieve cross-domain graph attacks. Specifically, we construct shadow subgraphs with distributions from different domains to model the diversity of real-world data. We then explore stable node representations that remain unchanged under external influences, eliminating redundant information from confounding environments and extracting task-relevant key information to more clearly distinguish the characteristics of training data from those of unseen data. This OOD-based design makes cross-domain graph attacks possible. Finally, we perform risk extrapolation to optimize the attack's domain adaptability during attack inference, so that the attack generalizes to other domains. Experimental results demonstrate that GOOD-MIA achieves superior attack performance on datasets designed for multiple domains.
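The abstract does not spell out the form of the risk extrapolation objective. As a rough illustration only, the sketch below assumes a variance-penalized form in the style of V-REx: the sum of per-domain risks plus a penalty on their variance. The function name `rex_objective`, the `beta` weight, and the example risk values are all hypothetical and not taken from the paper.

```python
from statistics import pvariance


def rex_objective(domain_risks, beta=1.0):
    """Variance-penalized risk over shadow domains (assumed V-REx-style form).

    domain_risks: one scalar attack loss per shadow-subgraph domain.
    beta: hypothetical weight on the variance penalty.
    """
    if len(domain_risks) < 2:
        raise ValueError("need risks from at least two domains")
    total = sum(domain_risks)          # overall risk across domains
    spread = pvariance(domain_risks)   # penalize risks that differ by domain
    return total + beta * spread


# Same total risk, but the second attack model is uneven across domains.
balanced = rex_objective([0.5, 0.5, 0.5], beta=10.0)
skewed = rex_objective([0.2, 0.5, 0.8], beta=10.0)
print(balanced, skewed)
```

Under this assumed objective, an attack model whose loss is uniform across shadow domains is preferred over one with the same total loss concentrated in a single domain, which is the intuition behind optimizing for domain adaptability.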

FedRGL: Robust Federated Graph Learning for Label Noise

Nov 28, 2024

Abstract: Federated Graph Learning (FGL) is a distributed machine learning paradigm based on graph neural networks, enabling secure and collaborative modeling of local graph data among clients. However, label noise can degrade the global model's generalization performance. Existing federated label noise learning methods, primarily focused on computer vision, often yield suboptimal results when applied to FGL. To address this, we propose a robust federated graph learning method with label noise, termed FedRGL. FedRGL introduces dual-perspective consistency noise node filtering, leveraging both the global model and subgraph structure under class-aware dynamic thresholds. To enhance client-side training, we incorporate graph contrastive learning, which improves encoder robustness and assigns high-confidence pseudo-labels to noisy nodes. Additionally, we measure model quality via predictive entropy of unlabeled nodes, enabling adaptive robust aggregation of the global model. Comparative experiments on multiple real-world graph datasets show that FedRGL outperforms 12 baseline methods across various noise rates, types, and numbers of clients.
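To make the entropy-based aggregation idea concrete, here is a minimal sketch. It assumes that each client reports the mean predictive entropy of its model on unlabeled nodes and that the server turns those values into weights with a softmax over negative entropy. The abstract does not give the weighting rule, so `aggregation_weights` and its `temperature` parameter are illustrative assumptions.

```python
import math


def mean_entropy(prob_rows):
    """Average Shannon entropy (in nats) of predicted class distributions."""
    total = 0.0
    for row in prob_rows:
        total -= sum(p * math.log(p) for p in row if p > 0.0)
    return total / len(prob_rows)


def aggregation_weights(client_entropies, temperature=1.0):
    """Softmax over negative entropies: lower entropy gets a larger weight."""
    scores = [-h / temperature for h in client_entropies]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]


# Two hypothetical clients: one confident, one uncertain on unlabeled nodes.
confident = mean_entropy([[0.9, 0.1], [0.8, 0.2]])
uncertain = mean_entropy([[0.5, 0.5], [0.6, 0.4]])
weights = aggregation_weights([confident, uncertain])
print(weights)
```

The server could then average client parameters using these weights in place of the usual sample-count weights, so that clients whose models are less certain contribute less to the global model.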

Rethinking the impact of noisy labels in graph classification: A utility and privacy perspective

Jun 11, 2024

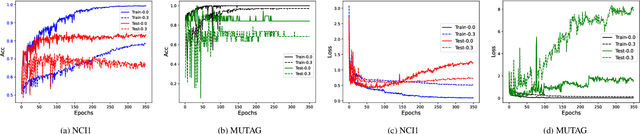

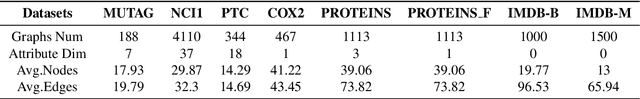

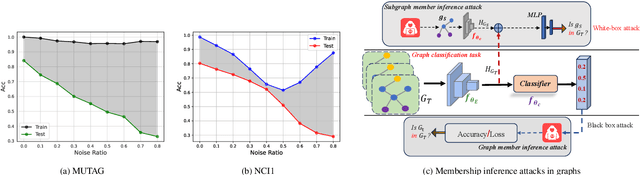

Abstract: Graph neural networks based on message-passing mechanisms have achieved advanced results in graph classification tasks. However, their generalization performance degrades when noisy labels are present in the training data. Most existing noisy-label approaches focus on the visual domain or on graph node classification tasks, and analyze the impact of noisy labels only from a utility perspective. Unlike existing work, in this paper we measure the effects of noisy labels on graph classification from both data privacy and model utility perspectives. We find that noisy labels degrade the model's generalization performance and strengthen membership inference attacks on graph data privacy. To this end, we propose a robust graph neural network approach for graph classification with noisy labels, referred to below as RGLC. Specifically, we first accurately filter the noisy samples using high-confidence samples and the first principal component vector of each class's features. Then, the robust principal component vectors and the model output under data augmentation are used to correct noisy labels, guided by dual spatial information. Finally, supervised graph contrastive learning is introduced to enhance the embedding quality of the model and protect the privacy of the training graph data. The utility and privacy of the proposed method are validated by comparing twelve different methods on eight real graph classification datasets. Compared with the state-of-the-art methods, the RGLC method achieves performance gains between 0.8% and 7.8% at a 30% noisy-label rate, and reduces the accuracy of privacy attacks to below 60%.

Heterogeneous Graph Neural Network for Privacy-Preserving Recommendation

Oct 09, 2022

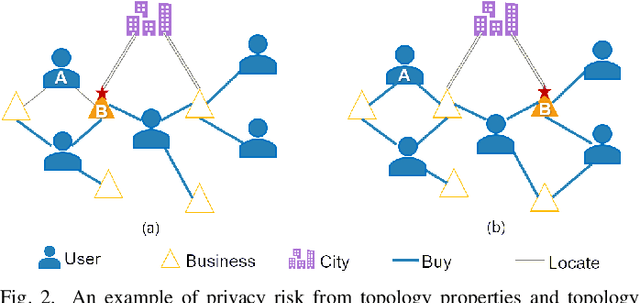

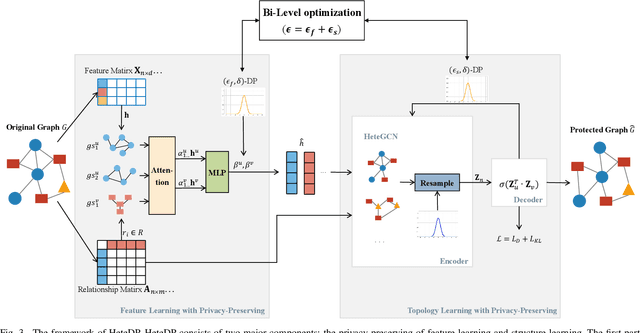

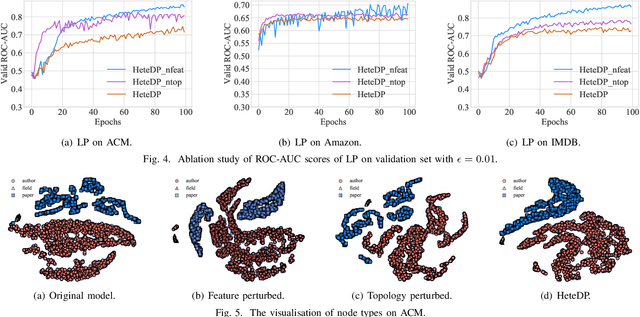

Abstract: With advances in deep learning, social networks are commonly modeled with heterogeneous graph neural networks (HGNNs). Compared with models trained on homogeneous data, HGNNs absorb various aspects of information about individuals in the training stage. That means more information, especially sensitive information, is captured in the learned result. However, privacy-preserving methods for homogeneous graphs only protect a single type of node attribute or relationship, and they cannot work effectively on heterogeneous graphs because of their complexity. To address this issue, we propose a novel privacy-preserving method for heterogeneous graph neural networks based on a differential privacy mechanism, named HeteDP, which provides a double guarantee on graph features and topology. In particular, we first define a new attack scheme to reveal privacy leakage in heterogeneous graphs. We then design a two-stage pipeline framework, which includes a privacy-preserving feature encoder and a heterogeneous link reconstructor with gradient perturbation based on differential privacy, to tolerate data diversity and defend against the attack. To better control the noise and improve model performance, we use a bi-level optimization pattern to allocate a suitable privacy budget to these two modules. Our experiments on four public benchmarks show that HeteDP resists heterogeneous graph privacy leakage while retaining good model generalization.
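The abstract mentions gradient perturbation based on differential privacy without giving details. The sketch below shows one common way this is done in general, assuming norm clipping followed by Gaussian noise in the style of DP-SGD. It is not HeteDP's actual mechanism; `perturb_gradient`, `clip_norm`, and `noise_multiplier` are hypothetical names chosen for illustration.

```python
import math
import random


def perturb_gradient(grad, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip a gradient to clip_norm, then add Gaussian noise (assumed scheme)."""
    rng = rng or random.Random()
    norm = math.sqrt(sum(g * g for g in grad))
    # Scale down only when the gradient exceeds the clipping bound.
    scale = min(1.0, clip_norm / norm) if norm > 0.0 else 1.0
    sigma = noise_multiplier * clip_norm  # noise scale tied to the bound
    return [g * scale + rng.gauss(0.0, sigma) for g in grad]


# With the noise switched off, only the clipping step is visible.
clipped_only = perturb_gradient([3.0, 4.0], clip_norm=1.0, noise_multiplier=0.0)
noisy = perturb_gradient([3.0, 4.0], clip_norm=1.0, noise_multiplier=0.5,
                         rng=random.Random(0))
print(clipped_only, noisy)
```

In a scheme like this, the privacy budget controls how large `noise_multiplier` must be, which is why allocating the budget between two modules affects how much noise each one receives.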

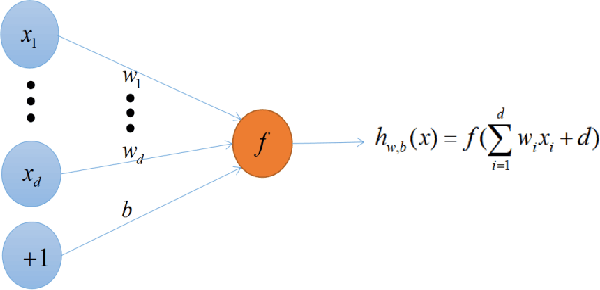

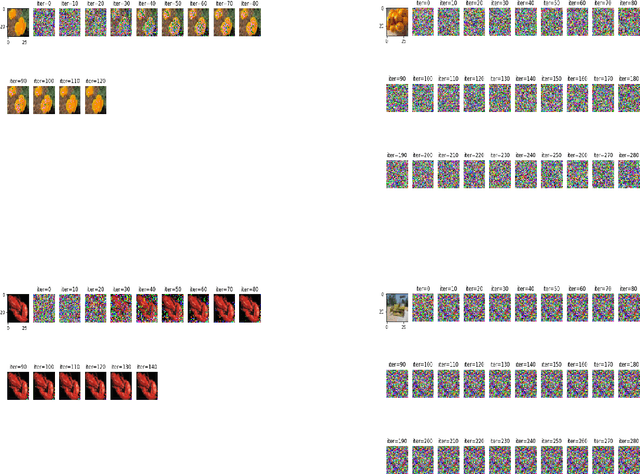

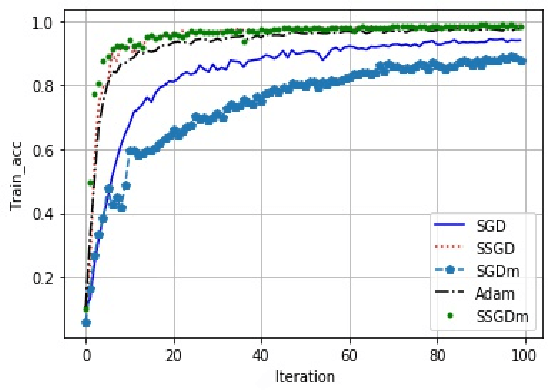

SSGD: A safe and efficient method of gradient descent

Dec 03, 2020

Abstract: With the vigorous development of artificial intelligence technology, various engineering applications have been implemented one after another. The gradient descent method plays an important role in solving various optimization problems, due to its simple structure, good stability, and easy implementation. In multi-node machine learning systems, gradients usually need to be shared. Data reconstruction attacks can reconstruct training data simply by knowing the gradient information. In this paper, to prevent gradient leakage while maintaining model accuracy, we propose the super stochastic gradient descent approach, which updates parameters by concealing the modulus length of gradient vectors and converting them into unit vectors. Furthermore, we analyze the security of the stochastic gradient descent approach. Experimental results show that our approach clearly outperforms prevalent gradient descent approaches in terms of accuracy and robustness.
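The core idea of hiding the gradient's modulus can be illustrated with a plain normalized-gradient step. This is a minimal sketch that assumes the whole gradient is rescaled to unit length before it is shared or applied; the paper's actual procedure may differ, and `to_unit_vector` and `unit_gradient_step` are hypothetical helper names.

```python
import math


def to_unit_vector(grad, eps=1e-12):
    """Rescale a gradient to unit length so its modulus is not revealed."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm < eps:
        # A (near-)zero gradient has no direction to preserve.
        return [0.0 for _ in grad]
    return [g / norm for g in grad]


def unit_gradient_step(params, grad, lr=0.1):
    """One descent step that uses only the direction of the gradient."""
    direction = to_unit_vector(grad)
    return [p - lr * d for p, d in zip(params, direction)]


shared = to_unit_vector([3.0, 4.0])
updated = unit_gradient_step([1.0, 1.0], [3.0, 4.0], lr=0.5)
print(shared, updated)
```

Because every shared vector has length one, an observer learns the update direction but not the gradient magnitude, and the step size is governed entirely by the learning rate.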
