De Li

FedRGL: Robust Federated Graph Learning for Label Noise

Nov 28, 2024

Abstract: Federated Graph Learning (FGL) is a distributed machine learning paradigm based on graph neural networks, enabling secure and collaborative modeling of local graph data among clients. However, label noise can degrade the global model's generalization performance. Existing federated label noise learning methods, primarily focused on computer vision, often yield suboptimal results when applied to FGL. To address this, we propose a robust federated graph learning method with label noise, termed FedRGL. FedRGL introduces dual-perspective consistency noise node filtering, leveraging both the global model and subgraph structure under class-aware dynamic thresholds. To enhance client-side training, we incorporate graph contrastive learning, which improves encoder robustness and assigns high-confidence pseudo-labels to noisy nodes. Additionally, we measure model quality via predictive entropy of unlabeled nodes, enabling adaptive robust aggregation of the global model. Comparative experiments on multiple real-world graph datasets show that FedRGL outperforms 12 baseline methods across various noise rates, types, and numbers of clients.
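The entropy-based aggregation idea mentioned in the abstract can be illustrated with a minimal sketch. This is not the FedRGL implementation: the softmax-over-negative-entropy weighting, the `temperature` parameter, and all function names below are assumptions chosen for illustration, and client models are reduced to flat parameter lists.

```python
import math

def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def mean_entropy(client_probs):
    """Average predictive entropy over a client's unlabeled nodes."""
    return sum(predictive_entropy(p) for p in client_probs) / len(client_probs)

def aggregation_weights(entropies, temperature=1.0):
    """Assumed rule: softmax over negative entropy, so lower entropy gets more weight."""
    scores = [math.exp(-h / temperature) for h in entropies]
    total = sum(scores)
    return [s / total for s in scores]

def aggregate(client_params, weights):
    """Weighted average of flat parameter vectors (lists of floats)."""
    dim = len(client_params[0])
    return [sum(w * params[i] for w, params in zip(weights, client_params))
            for i in range(dim)]

# Hypothetical toy data: predictions of two clients on their unlabeled nodes.
confident = [[0.9, 0.1], [0.8, 0.2]]
uncertain = [[0.5, 0.5], [0.6, 0.4]]
entropies = [mean_entropy(confident), mean_entropy(uncertain)]
weights = aggregation_weights(entropies)
global_params = aggregate([[1.0, 2.0], [3.0, 4.0]], weights)
```

In this toy setup the client with more confident predictions receives the larger weight, so the aggregated parameters sit closer to its values than a plain average would.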

Rethinking the impact of noisy labels in graph classification: A utility and privacy perspective

Jun 11, 2024

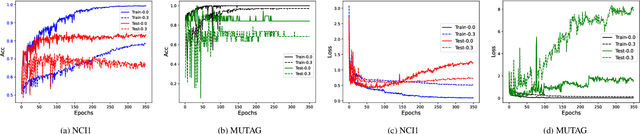

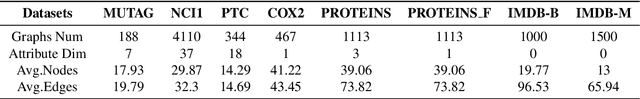

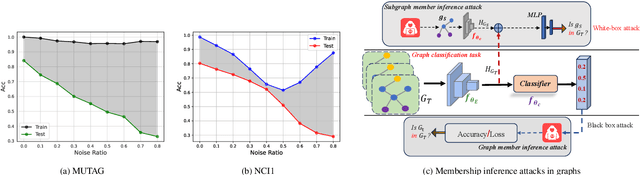

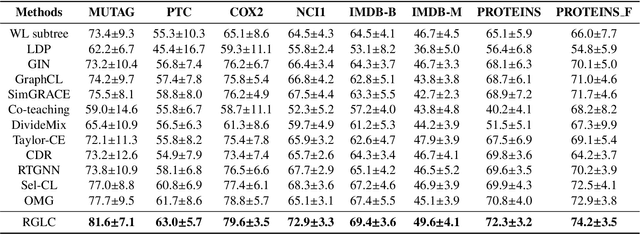

Abstract: Graph neural networks based on message-passing mechanisms have achieved advanced results in graph classification tasks. However, their generalization performance degrades when noisy labels are present in the training data. Most existing noisy-label learning approaches focus on the visual domain or on graph node classification, and they analyze the impact of noisy labels only from a utility perspective. Unlike existing work, in this paper we measure the effects of noisy labels on graph classification from both data privacy and model utility perspectives. We find that noisy labels degrade the model's generalization performance and strengthen membership inference attacks against graph data privacy. To this end, we propose a robust graph neural network approach for graph classification with noisy labels. Specifically, we first accurately filter the noisy samples using high-confidence samples and the first principal component vector of each class's features. Then, the robust principal component vectors and the model output under data augmentation are used to correct noisy labels, guided by dual spatial information. Finally, supervised graph contrastive learning is introduced to enhance the embedding quality of the model and protect the privacy of the training graph data. The utility and privacy of the proposed method are validated by comparing twelve different methods on eight real graph classification datasets. Compared with the state-of-the-art methods, the proposed RGLC method achieves performance gains of at most 7.8% and at least 0.8% at a 30% noisy-label rate, and reduces the accuracy of privacy attacks to below 60%.
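The filtering step described in the abstract (comparing samples against a per-class first principal component vector) can be sketched roughly as follows. This is an illustrative simplification, not the paper's procedure: it uses all embeddings carrying a given label rather than only high-confidence ones, takes the top eigenvector of the uncentered second-moment matrix via power iteration, and flags samples by an assumed cosine-similarity `threshold`. All names and the toy data are hypothetical.

```python
import math

def first_principal_direction(rows, iters=50):
    """Top eigenvector of the uncentered second-moment matrix X^T X (power iteration)."""
    dim = len(rows[0])
    # Start from the class mean so the sign of the direction follows the bulk of the class.
    v = [sum(r[j] for r in rows) / len(rows) for j in range(dim)]
    for _ in range(iters):
        # Apply X^T (X v) without forming the matrix explicitly.
        proj = [sum(r[j] * v[j] for j in range(dim)) for r in rows]
        v = [sum(p * r[j] for p, r in zip(proj, rows)) for j in range(dim)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    return v

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def flag_suspects(rows, threshold=0.5):
    """Indices of samples poorly aligned with the class's principal direction."""
    pc = first_principal_direction(rows)
    return [i for i, r in enumerate(rows) if cosine(r, pc) < threshold]

# Hypothetical embeddings that all carry the same (possibly noisy) class label.
embeddings = [[1.0, 0.1], [0.9, 0.2], [1.1, 0.0], [0.0, 1.0]]
suspects = flag_suspects(embeddings)
```

Here the last embedding points in a direction nearly orthogonal to the other three, so it is the one flagged as a likely mislabeled sample.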
