Jingting Ding

Facial Affect Analysis: Learning from Synthetic Data & Multi-Task Learning Challenges

Jul 20, 2022

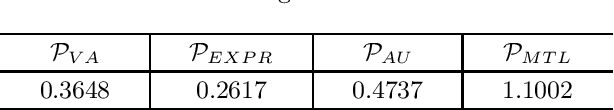

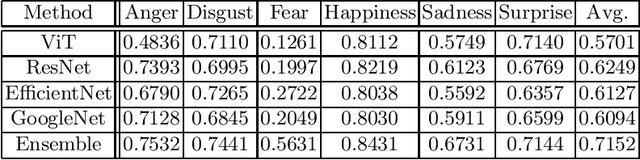

Abstract: Facial affect analysis remains challenging as its setting shifts from lab-controlled to in-the-wild conditions. In this paper, we present novel frameworks for the two challenges in the 4th Affective Behavior Analysis In-The-Wild (ABAW) competition: i) the Multi-Task Learning (MTL) Challenge and ii) the Learning from Synthetic Data (LSD) Challenge. For the MTL challenge, we adopt SMM-EmotionNet with an improved ensemble strategy for feature vectors. For the LSD challenge, we propose separate methods to address the problems of single labels, imbalanced distribution, fine-tuning limitations, and choice of model architecture. Experimental results on the official validation sets from the competition demonstrate that our proposed approaches outperform the baselines by a large margin. The code is available at https://github.com/sylyoung/ABAW4-HUST-ANT.

Cross-Architecture Knowledge Distillation

Jul 12, 2022

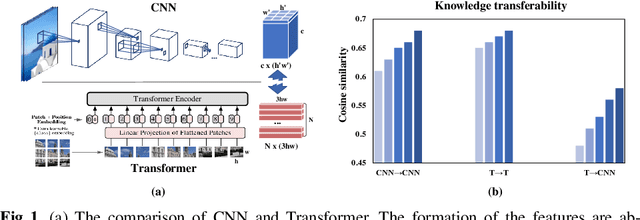

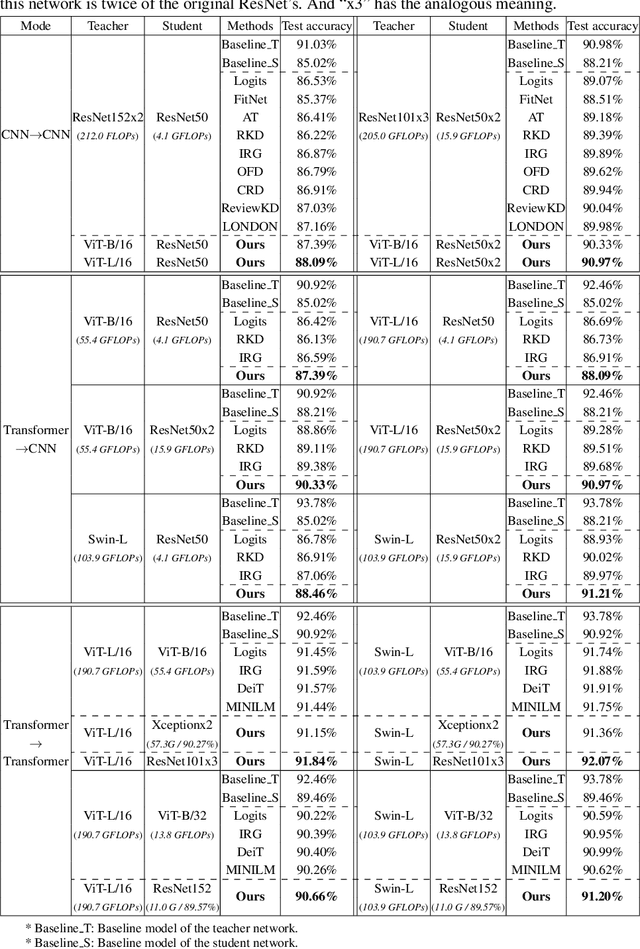

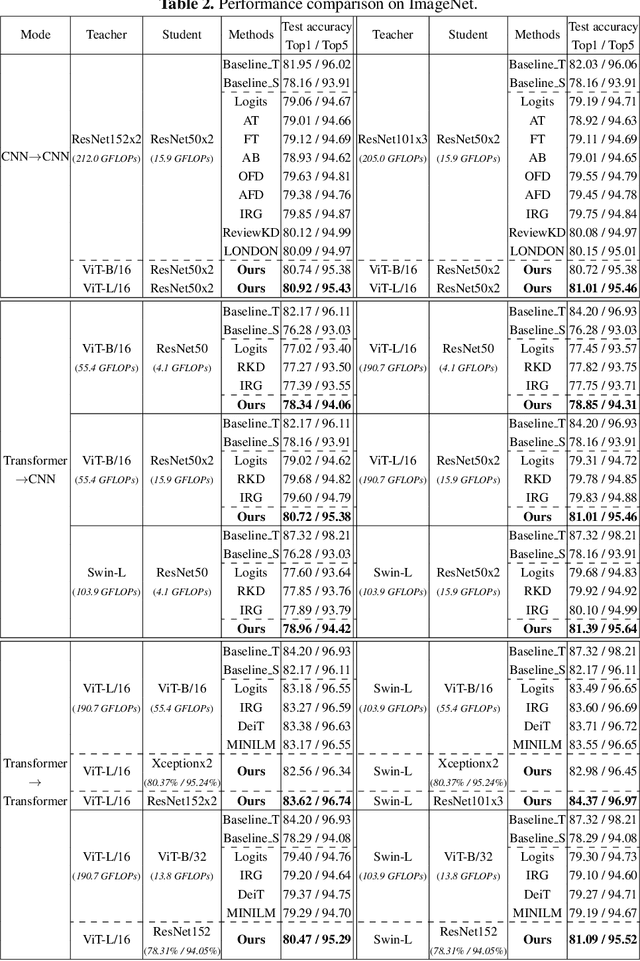

Abstract: Transformers attract much attention because of their ability to learn global relations and their superior performance. To achieve higher performance, it is natural to distill complementary knowledge from a Transformer into a convolutional neural network (CNN). However, most existing knowledge distillation methods consider only homologous-architecture distillation, such as distilling knowledge from CNN to CNN, and may not be suitable for cross-architecture scenarios, such as from Transformer to CNN. To address this problem, a novel cross-architecture knowledge distillation method is proposed. Specifically, instead of directly mimicking the output or intermediate features of the teacher, a partially cross attention projector and a group-wise linear projector are introduced to align the student features with the teacher's in two projected feature spaces. A multi-view robust training scheme is further presented to improve the robustness and stability of the framework. Extensive experiments show that the proposed method outperforms 14 state-of-the-art methods on both small-scale and large-scale datasets.
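
For context, the "directly mimicking output" baseline that the abstract contrasts against is usually a temperature-softened logit-matching loss. The sketch below shows that generic loss only; it is not the paper's cross-architecture method and does not implement its projectors. The function names and the default temperature are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Generic logit distillation (hypothetical helper, not the paper's loss).
    """
    p = softmax(teacher_logits, temperature)  # teacher soft targets
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# The loss vanishes when the student reproduces the teacher's logits
# and is positive when the two distributions differ.
same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
different = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])
```

A loss of this form presupposes that student and teacher outputs live in a directly comparable space, which is the assumption the abstract says may not hold between a Transformer teacher and a CNN student.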
