Jingtai Liu

FPC-VLA: A Vision-Language-Action Framework with a Supervisor for Failure Prediction and Correction

Sep 04, 2025

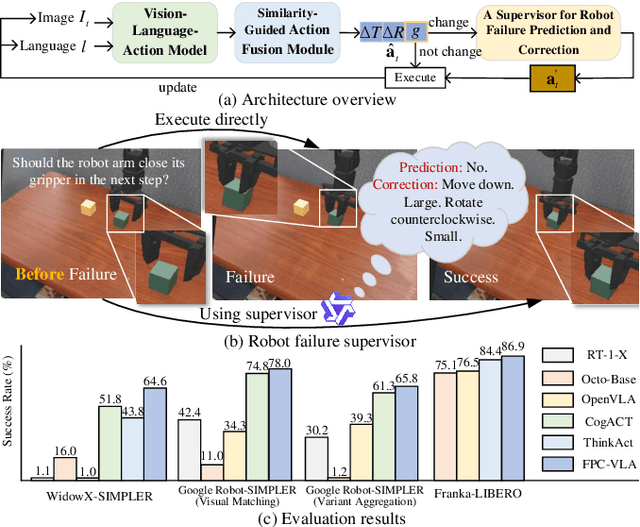

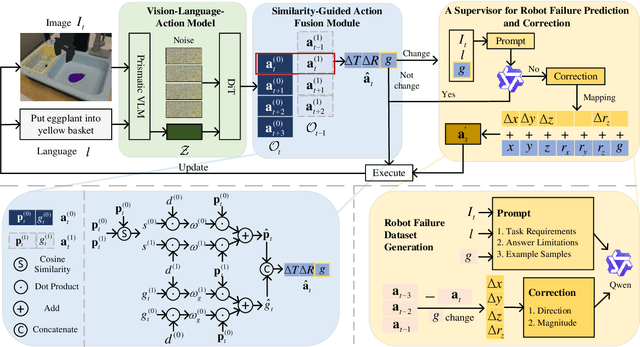

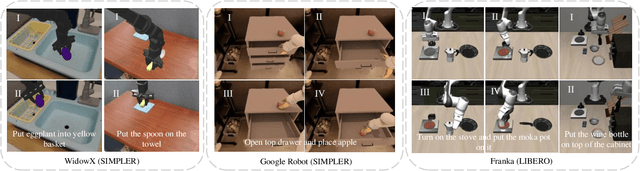

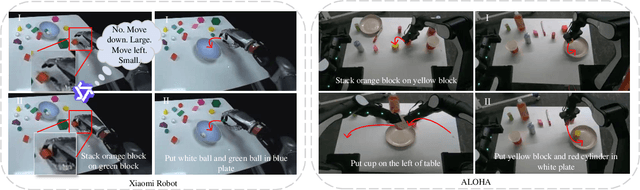

Abstract:Robotic manipulation is a fundamental component of automation. However, traditional perception-planning pipelines often fall short in open-ended tasks due to limited flexibility, while the architecture of a single end-to-end Vision-Language-Action (VLA) offers promising capabilities but lacks crucial mechanisms for anticipating and recovering from failure. To address these challenges, we propose FPC-VLA, a dual-model framework that integrates VLA with a supervisor for failure prediction and correction. The supervisor evaluates action viability through vision-language queries and generates corrective strategies when risks arise, trained efficiently without manual labeling. A similarity-guided fusion module further refines actions by leveraging past predictions. Evaluation results on multiple simulation platforms (SIMPLER and LIBERO) and robot embodiments (WidowX, Google Robot, Franka) show that FPC-VLA outperforms state-of-the-art models in both zero-shot and fine-tuned settings. By activating the supervisor only at keyframes, our approach significantly increases task success rates with minimal impact on execution time. Successful real-world deployments on diverse, long-horizon tasks confirm FPC-VLA's strong generalization and practical utility for building more reliable autonomous systems.
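The abstract does not say how the similarity-guided fusion module works. As a rough illustration only, the sketch below blends the current action with past predictions, weighting each by its cosine similarity to the current action. The function names, the cosine measure, the threshold, and the weighting scheme are all assumptions made for illustration, not the FPC-VLA implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def fuse_actions(current, past, threshold=0.5):
    """Hypothetical fusion rule: weighted average of the current action and
    those past predictions whose similarity to it is at least `threshold`.
    The current action always has weight 1.0."""
    weights = [1.0]
    vectors = [list(current)]
    for prediction in past:
        similarity = cosine_similarity(current, prediction)
        if similarity >= threshold:
            weights.append(similarity)
            vectors.append(list(prediction))
    total = sum(weights)
    return [
        sum(w * v[i] for w, v in zip(weights, vectors)) / total
        for i in range(len(current))
    ]
```

Under this rule a past prediction pointing in the opposite direction is ignored, while an aligned one pulls the fused action toward itself.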

MK-Pose: Category-Level Object Pose Estimation via Multimodal-Based Keypoint Learning

Jul 09, 2025

Abstract:Category-level object pose estimation, which predicts the pose of objects within a known category without prior knowledge of individual instances, is essential in applications like warehouse automation and manufacturing. Existing methods relying on RGB images or point cloud data often struggle with object occlusion and generalization across different instances and categories. This paper proposes a multimodal-based keypoint learning framework (MK-Pose) that integrates RGB images, point clouds, and category-level textual descriptions. The model uses a self-supervised keypoint detection module enhanced with attention-based query generation, soft heatmap matching, and graph-based relational modeling. Additionally, a graph-enhanced feature fusion module is designed to integrate local geometric information and global context. MK-Pose is evaluated on the CAMERA25 and REAL275 datasets, and is further tested for cross-dataset capability on the HouseCat6D dataset. The results demonstrate that MK-Pose outperforms existing state-of-the-art methods in both IoU and average precision without shape priors. Code will be released at https://github.com/yangyifanYYF/MK-Pose.

Follow Everything: A Leader-Following and Obstacle Avoidance Framework with Goal-Aware Adaptation

May 01, 2025

Abstract:Robust and flexible leader-following is a critical capability for robots to integrate into human society. While existing methods struggle to generalize to leaders of arbitrary form and often fail when the leader temporarily leaves the robot's field of view, this work introduces a unified framework addressing both challenges. First, traditional detection models are replaced with a segmentation model, allowing the leader to be anything. To enhance recognition robustness, a distance frame buffer is implemented that stores leader embeddings at multiple distances, accounting for the unique characteristics of leader-following tasks. Second, a goal-aware adaptation mechanism is designed to govern robot planning states based on the leader's visibility and motion, complemented by a graph-based planner that generates candidate trajectories for each state, ensuring efficient following with obstacle avoidance. Simulations and real-world experiments with a legged robot follower and various leaders (human, ground robot, UAV, legged robot, stop sign) in both indoor and outdoor environments show competitive improvements in follow success rate, reduced visual loss duration, lower collision rate, and decreased leader-follower distance.
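The distance frame buffer mentioned above can be pictured as a small lookup structure keyed by distance. The following is a minimal sketch under stated assumptions: the class name, the fixed-width distance bins, the nearest-bin lookup, and the cosine score are all hypothetical choices, since the abstract only says that leader embeddings are stored at multiple distances.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class DistanceFrameBuffer:
    """Hypothetical buffer keeping one leader embedding per distance bin."""

    def __init__(self, bin_width=1.0):
        self.bin_width = bin_width
        self.bins = {}  # bin index -> most recent embedding seen in that bin

    def _bin(self, distance):
        return int(distance // self.bin_width)

    def store(self, distance, embedding):
        """Save (or overwrite) the embedding for the bin containing `distance`."""
        self.bins[self._bin(distance)] = list(embedding)

    def reference(self, distance):
        """Return the stored embedding from the bin closest to `distance`."""
        if not self.bins:
            return None
        target = self._bin(distance)
        nearest = min(self.bins, key=lambda b: abs(b - target))
        return self.bins[nearest]

    def score(self, distance, embedding):
        """Similarity between a candidate and the distance-matched reference."""
        ref = self.reference(distance)
        if ref is None:
            return 0.0
        return cosine_similarity(ref, embedding)
```

Comparing a candidate against a reference captured at a similar range is one plausible way to cope with how much a leader's appearance changes with distance.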

Simulating Automotive Radar with Lidar and Camera Inputs

Mar 11, 2025

Abstract:Low-cost millimeter-wave automotive radar has received increasing attention due to its ability to handle adverse weather and lighting conditions in autonomous driving. However, the lack of quality datasets hinders research and development. We report a new method that simulates 4D millimeter-wave radar signals, including pitch, yaw, range, and Doppler velocity along with radar signal strength (RSS), using camera images, light detection and ranging (lidar) point clouds, and ego-velocity. The method is based on two new neural networks: 1) DIS-Net, which estimates the spatial distribution and number of radar signals, and 2) RSS-Net, which predicts the RSS of the signal based on appearance and geometric information. We have implemented and tested our method using open datasets from 3 different models of commercial automotive radar. The experimental results show that our method can successfully generate high-fidelity radar signals. Moreover, we have trained a popular object detection neural network with data augmented by our synthesized radar. The network outperforms the counterpart trained only on raw radar data, a promising result to facilitate future radar-based research and development.

NavG: Risk-Aware Navigation in Crowded Environments Based on Reinforcement Learning with Guidance Points

Mar 03, 2025

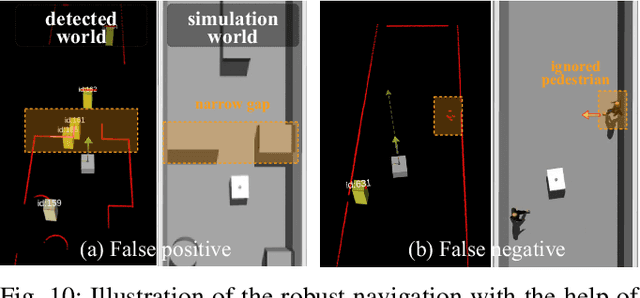

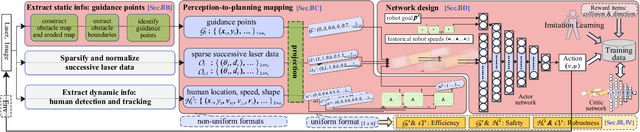

Abstract:Motion planning in navigation systems is highly susceptible to upstream perceptual errors, particularly in human detection and tracking. To mitigate this issue, the concept of guidance points--a novel directional cue within a reinforcement learning-based framework--is introduced. A structured method for identifying guidance points is developed, consisting of obstacle boundary extraction, potential guidance point detection, and redundancy elimination. To integrate guidance points into the navigation pipeline, a perception-to-planning mapping strategy is proposed, unifying guidance points with other perceptual inputs and enabling the RL agent to effectively leverage the complementary relationships among raw laser data, human detection and tracking, and guidance points. Qualitative and quantitative simulations demonstrate that the proposed approach achieves the highest success rate and near-optimal travel times, greatly improving both safety and efficiency. Furthermore, real-world experiments in dynamic corridors and lobbies validate the robot's ability to confidently navigate around obstacles and robustly avoid pedestrians.

Scene Modeling of Autonomous Vehicles Avoiding Stationary and Moving Vehicles on Narrow Roads

Dec 19, 2024

Abstract:Navigating narrow roads with oncoming vehicles is a significant challenge that has garnered considerable public interest. These scenarios often involve sections that cannot accommodate two moving vehicles simultaneously due to the presence of stationary vehicles or limited road width. Autonomous vehicles must therefore profoundly comprehend their surroundings to identify passable areas and execute sophisticated maneuvers. To address this issue, this paper presents a comprehensive model for such an intricate scenario. The primary contribution is the principle of road width occupancy minimization, which models the narrow road problem and identifies candidate meeting gaps. Additionally, the concept of homology classes is introduced to help initialize and optimize candidate trajectories, while evaluation strategies are developed to select the optimal gap and most efficient trajectory. Qualitative and quantitative simulations demonstrate that the proposed approach, SM-NR, achieves high scene pass rates, efficient movement, and robust decisions. Experiments conducted in tiny gap scenarios and conflict scenarios reveal that the autonomous vehicle can robustly select meeting gaps and trajectories, compromising flexibly for safety while advancing bravely for efficiency.

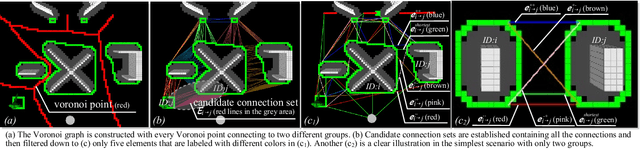

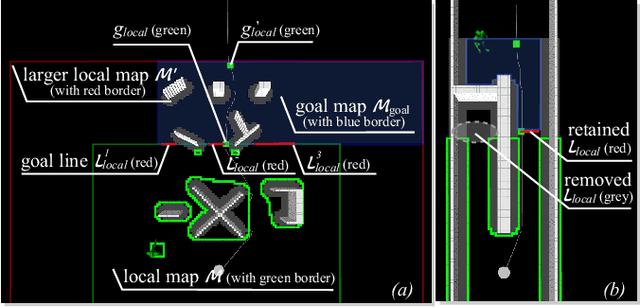

GA-TEB: Goal-Adaptive Framework for Efficient Navigation Based on Goal Lines

Sep 16, 2024

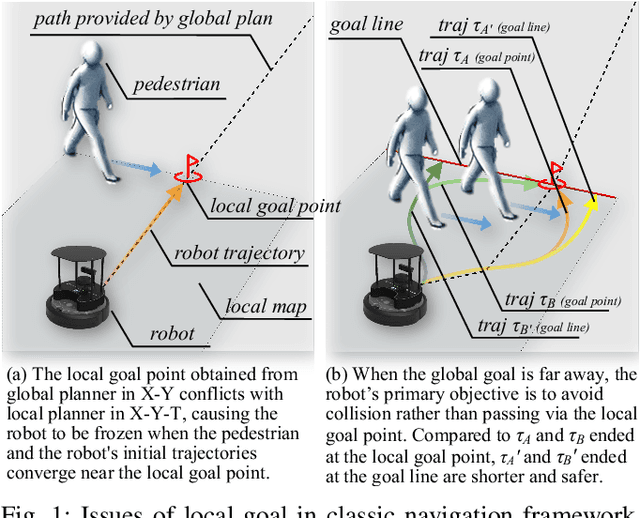

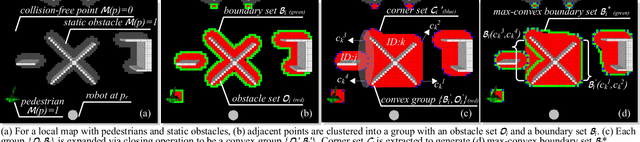

Abstract:In crowd navigation, the local goal plays a crucial role in trajectory initialization, optimization, and evaluation. Recognizing that when the global goal is distant, the robot's primary objective is avoiding collisions, making it less critical to pass through the exact local goal point, this work introduces the concept of goal lines, which extend the traditional local goal from a single point to multiple candidate lines. Coupled with a topological map construction strategy that groups obstacles to be as convex as possible, a goal-adaptive navigation framework is proposed to efficiently plan multiple candidate trajectories. Simulations and experiments demonstrate that the proposed GA-TEB framework effectively prevents deadlock situations, where the robot becomes frozen due to a lack of feasible trajectories in crowded environments. Additionally, the framework greatly increases planning frequency in scenarios with numerous non-convex obstacles, enhancing both robustness and safety.

PS6D: Point Cloud Based Symmetry-Aware 6D Object Pose Estimation in Robot Bin-Picking

May 18, 2024

Abstract:6D object pose estimation plays an essential role in various fields, particularly in the grasping of industrial workpieces. Given challenges like rust, high reflectivity, and absent textures, this paper introduces a point cloud based pose estimation framework (PS6D). PS6D centers on slender and multi-symmetric objects. It extracts multi-scale features through an attention-guided feature extraction module, designs a symmetry-aware rotation loss and a center distance sensitive translation loss to regress the pose of each point to the centroid of the instance, and then uses a two-stage clustering method to complete instance segmentation and pose estimation. Objects from the Siléane and IPA datasets and typical workpieces from industrial practice are used to generate data and evaluate the algorithm. In comparison to the state-of-the-art approach, PS6D demonstrates an 11.5% improvement in F$_{1_{inst}}$ and a 14.8% improvement in Recall. The main part of PS6D has been deployed to the software of Mech-Mind, and achieves a 91.7% success rate in bin-picking experiments, marking its application in industrial pose estimation tasks.
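The abstract does not give the form of the symmetry-aware rotation loss. A common way to make a rotation error respect object symmetry is to take the minimum geodesic angle over all symmetry-equivalent ground-truth rotations, and the sketch below shows that generic formulation. It is an assumption for illustration, not the loss defined in PS6D; all function names and the two-fold z-axis symmetry in the usage example are hypothetical.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rot_z(angle):
    """Rotation by `angle` radians about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def geodesic_angle(r1, r2):
    """Angle (radians) of the relative rotation r1^T r2."""
    rel = matmul(transpose(r1), r2)
    trace = rel[0][0] + rel[1][1] + rel[2][2]
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_theta)


def symmetry_aware_rotation_loss(r_pred, r_gt, symmetries):
    """Smallest geodesic angle between the prediction and any
    symmetry-equivalent version of the ground truth (r_gt @ S)."""
    return min(geodesic_angle(r_pred, matmul(r_gt, s)) for s in symmetries)


# Usage: an object with assumed two-fold symmetry about its z axis.
symmetries = [rot_z(0.0), rot_z(math.pi)]
r_gt = rot_z(0.3)
r_pred = rot_z(0.3 + math.pi)  # flipped by 180 degrees, visually identical
plain_error = geodesic_angle(r_pred, r_gt)
symmetric_error = symmetry_aware_rotation_loss(r_pred, r_gt, symmetries)
```

In this example the plain geodesic error is close to π, while the symmetry-aware error is close to zero, so a prediction that is indistinguishable from the ground truth is not penalized.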

MGTR: End-to-End Mutual Gaze Detection with Transformer

Oct 06, 2022

Abstract:People looking at each other, or mutual gaze, is ubiquitous in our daily interactions, and detecting mutual gaze is of great significance for understanding human social scenes. Current mutual gaze detection methods are mostly two-stage: their inference speed is limited by the two-stage pipeline, and the performance of the second stage depends on the first. In this paper, we propose a novel one-stage mutual gaze detection framework called Mutual Gaze TRansformer, or MGTR, to perform mutual gaze detection in an end-to-end manner. By designing mutual gaze instance triples, MGTR can detect each human head bounding box and simultaneously infer mutual gaze relationships based on global image information, which streamlines the whole process. Experimental results on two mutual gaze datasets show that our method is able to accelerate the mutual gaze detection process without losing performance. An ablation study shows that different components of MGTR can capture different levels of semantic information in images. Code is available at https://github.com/Gmbition/MGTR
