Jing Deng

Personality-Enhanced Multimodal Depression Detection in the Elderly

Oct 09, 2025

Abstract: This paper presents our solution to the Multimodal Personality-aware Depression Detection (MPDD) challenge at ACM MM 2025. We propose a multimodal depression detection model for the elderly that incorporates personality characteristics. We introduce a multi-feature fusion approach based on a co-attention mechanism to integrate low-level descriptors (LLDs), MFCCs, and Wav2Vec features in the audio modality. For the video modality, we combine representations extracted from OpenFace, ResNet, and DenseNet to construct a comprehensive visual feature set. Recognizing the critical role of personality in depression detection, we design an interaction module that captures the relationships between personality traits and multimodal features. Experimental results from the MPDD Elderly Depression Detection track demonstrate that our method significantly enhances performance, providing valuable insights for future research in multimodal depression detection among elderly populations.

Overlap-Adaptive Hybrid Speaker Diarization and ASR-Aware Observation Addition for MISP 2025 Challenge

May 28, 2025

Abstract: This paper presents the system developed to address the MISP 2025 Challenge. For the diarization system, we propose a hybrid approach that combines a WavLM end-to-end segmentation method with a traditional multi-module clustering technique and adaptively selects the appropriate model for varying degrees of overlapping speech. For the automatic speech recognition (ASR) system, we propose an ASR-aware observation addition method that compensates for the performance limitations of Guided Source Separation (GSS) under low signal-to-noise ratio conditions. Finally, we integrate the speaker diarization and ASR systems in a cascaded architecture to address Track 3. Our system achieved a character error rate (CER) of 9.48% on Track 2 and a concatenated minimum-permutation character error rate (cpCER) of 11.56% on Track 3, securing first place in both tracks and demonstrating the effectiveness of the proposed methods in real-world meeting scenarios.
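
The cpCER metric named in the abstract can be illustrated with a small sketch. It follows the commonly used definition (concatenate each speaker's utterances, then take the lowest total character edit distance over all speaker permutations); the function names and the toy data are assumptions for illustration, and the official challenge scoring may apply text normalization not shown here.

```python
from itertools import permutations


def edit_distance(ref: str, hyp: str) -> int:
    """Character-level Levenshtein distance between two strings."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete a reference character
                curr[j - 1] + 1,         # insert a hypothesis character
                prev[j - 1] + (r != h),  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def cp_cer(ref_by_speaker: dict, hyp_by_speaker: dict) -> float:
    """Concatenated minimum-permutation CER (illustrative, brute force)."""
    # Concatenate every speaker's utterances into one stream per speaker.
    refs = ["".join(utts) for utts in ref_by_speaker.values()]
    hyps = ["".join(utts) for utts in hyp_by_speaker.values()]
    # Pad with empty streams so both sides have the same speaker count.
    n = max(len(refs), len(hyps))
    refs += [""] * (n - len(refs))
    hyps += [""] * (n - len(hyps))
    total_ref_chars = sum(len(r) for r in refs)
    if total_ref_chars == 0:
        raise ValueError("reference contains no characters")
    # Try every assignment of hypothesis speakers to reference speakers.
    best_errors = min(
        sum(edit_distance(r, h) for r, h in zip(refs, perm))
        for perm in permutations(hyps)
    )
    return best_errors / total_ref_chars


# Toy example: hypothesis speaker labels are swapped and one character is wrong.
reference = {"A": ["abcd", "ef"], "B": ["xyz"]}
hypothesis = {"spk1": ["xyz"], "spk2": ["abcd", "eg"]}
score = cp_cer(reference, hypothesis)
```

The brute-force search over permutations is only practical for a handful of speakers; scoring tools typically solve the assignment more efficiently.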

Leveraging LLM for Stuttering Speech: A Unified Architecture Bridging Recognition and Event Detection

May 28, 2025

Abstract: The performance bottleneck of Automatic Speech Recognition (ASR) in stuttering speech scenarios has limited its applicability in domains such as speech rehabilitation. This paper proposes an LLM-driven ASR-SED multi-task learning framework that jointly optimizes the ASR and Stuttering Event Detection (SED) tasks. We propose a dynamic interaction mechanism in which the ASR branch uses CTC-generated soft prompts to assist LLM context modeling, while the SED branch outputs stutter embeddings to enhance LLM comprehension of stuttered speech. We incorporate contrastive learning to strengthen the discriminative power of stuttering acoustic features and apply Focal Loss to mitigate the long-tailed distribution of stuttering event categories. Evaluations on the AS-70 Mandarin stuttering dataset show that our framework reduces the ASR character error rate (CER) to 5.45% (a 37.71% relative reduction) and achieves an average SED F1-score of 73.63% (a 46.58% relative improvement).
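
As a rough illustration of why Focal Loss helps with long-tailed classes, the sketch below implements the standard per-example form, FL(p_t) = -alpha * (1 - p_t)^gamma * log(p_t). The values of `gamma` and `alpha` are assumptions chosen for illustration; the abstract does not state the settings used.

```python
import math


def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Focal loss for one example, given predicted class probabilities.

    gamma and alpha are illustrative defaults, not values from the paper.
    """
    # Probability assigned to the true class, clipped to avoid log(0).
    p_t = min(max(probs[target], 1e-12), 1.0)
    # (1 - p_t)^gamma shrinks the loss of well-classified examples.
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss([0.9, 0.1], target=0)   # confident and correct
hard = focal_loss([0.1, 0.9], target=0)   # confident and wrong
plain_ce = focal_loss([0.9, 0.1], target=0, gamma=0.0)  # reduces to cross-entropy
```

With `gamma=0` the expression reduces to ordinary cross-entropy; larger `gamma` values down-weight easy examples so that rare, hard classes contribute relatively more to the total loss.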

The More is not the Merrier: Investigating the Effect of Client Size on Federated Learning

Apr 11, 2025

Abstract: Federated Learning (FL) has been introduced as a way to keep data local to clients while training a shared machine learning model: clients train on their local data and send the trained models to a central aggregator. FL is expected to have major implications for Mobile Edge Computing (MEC), the Internet of Things, and cross-silo FL. In this paper, we focus on the widely used FedAvg algorithm to explore the effect of the number of clients in FL. We find a significant deterioration of learning accuracy for FedAvg as the number of clients increases. To address this issue for general applications, we propose a method called Knowledgeable Client Insertion (KCI) that introduces a very small number of knowledgeable clients into the MEC setting. These knowledgeable clients are expected to have accumulated a large set of data samples to help with training. With the help of KCI, the learning accuracy of FL increases much faster, even with the standard FedAvg aggregation technique. We expect this approach to provide strong privacy protection for clients against security attacks such as model inversion attacks. Our code is available at https://github.com/Eleanor-W/KCI_for_FL.
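
For readers unfamiliar with FedAvg, the aggregation step can be sketched as a sample-count-weighted average of client parameters. This is a minimal illustration of standard FedAvg only, with hypothetical names and toy numbers; it is not taken from the linked repository and does not implement KCI.

```python
def fedavg(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local sample counts."""
    if len(client_weights) != len(client_sizes):
        raise ValueError("one sample count is required per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]


# Toy example: three clients with flattened two-parameter models.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [1, 1, 2]  # the third client holds half of all samples
global_model = fedavg(weights, sizes)
```

Because the average is weighted by sample count, a client holding more data pulls the global model more strongly toward its own parameters. Whether KCI relies on this weighting is not stated in the abstract.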

FedIN: Federated Intermediate Layers Learning for Model Heterogeneity

Apr 12, 2023

Abstract: Federated learning (FL) enables edge devices to cooperatively train a shared global model while keeping the training data local and private. However, a common but impractical assumption in FL is that the participating edge devices possess the same required resources and share an identical global model architecture. In this study, we propose a novel FL method called Federated Intermediate Layers Learning (FedIN), which supports heterogeneous models without using any public dataset. The training models in FedIN are divided into three parts: an extractor, the intermediate layers, and a classifier. The architectures of the extractor and classifier are the same on all devices to keep the intermediate layer features consistent, while the architectures of the intermediate layers can vary across heterogeneous devices according to their resource capacities. To exploit the knowledge in the features, we propose IN training, which trains the intermediate layers in line with the features from other clients. Additionally, we formulate and solve a convex optimization problem to mitigate the gradient divergence induced by conflicts between IN training and local training. The experimental results show that FedIN achieves the best performance in the heterogeneous model environment compared with state-of-the-art algorithms. Furthermore, our ablation study demonstrates the effectiveness of IN training and of the solution to the convex optimization problem.

Synthesis of Gaussian Trees with Correlation Sign Ambiguity: An Information Theoretic Approach

Jul 07, 2016

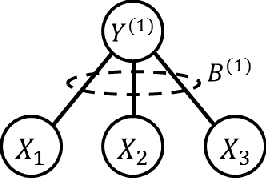

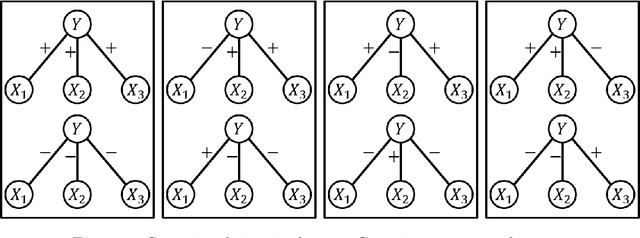

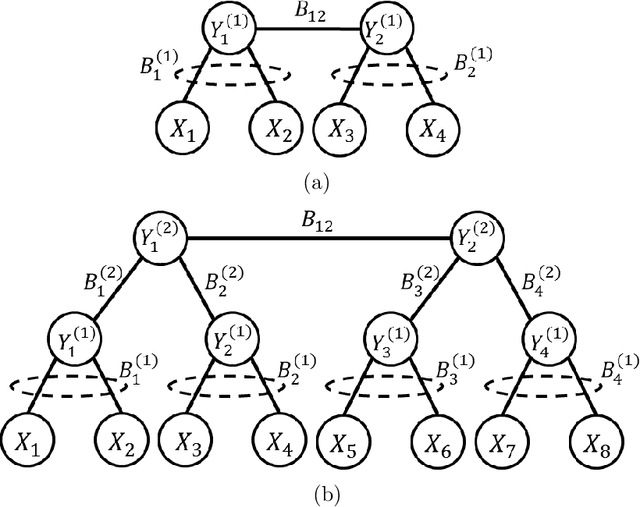

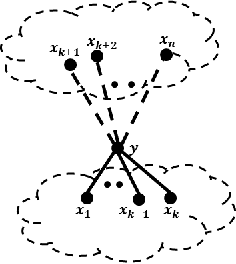

Abstract: In latent Gaussian trees, the pairwise correlation signs between the variables are intrinsically unrecoverable. Such information is vital since it completely determines the direction in which two variables are associated. In this work, we resort to information-theoretic approaches to achieve two fundamental goals. First, we quantify the amount of information lost due to unrecoverable sign information. Second, we show the importance of such information in determining the maximum achievable rate region in which the observed output vector can be synthesized, given its probability density function. In particular, we model the graphical model as a communication channel and propose a new layered encoding framework that synthesizes observed data using upper-layer Gaussian inputs and independent Bernoulli correlation sign inputs from each layer. We find the achievable rate region for the rate tuples of multi-layer latent Gaussian messages to synthesize the desired observables.
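
The sign ambiguity can be seen in a toy case. The sketch below assumes a star-shaped tree with one hidden root and unit-variance leaves, so the correlation between two observed leaves is the product of their edge correlations with the root. This setup and the function name are assumptions for illustration, not the construction used in the paper.

```python
def observed_correlations(edge_corrs):
    """Leaf-to-leaf correlation matrix of a star tree with a hidden root.

    edge_corrs[i] is the correlation between the hidden root and leaf i.
    Along the tree path leaf i -> root -> leaf j the correlations multiply.
    """
    n = len(edge_corrs)
    return [
        [1.0 if i == j else edge_corrs[i] * edge_corrs[j] for j in range(n)]
        for i in range(n)
    ]


original = observed_correlations([0.8, -0.5, 0.3])
# Negating the hidden variable flips the sign of every edge correlation.
flipped = observed_correlations([-0.8, 0.5, -0.3])
same_observables = original == flipped
```

Both sign patterns produce an identical observed correlation matrix, so the edge signs cannot be recovered from the observed variables alone.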
