Jifeng Guo

Local Path Optimization in The Latent Space Using Learned Distance Gradient

Dec 30, 2025

Abstract:Constrained motion planning is a common but challenging problem in robotic manipulation. In recent years, data-driven constrained motion planning algorithms have shown impressive planning speed and success rate. Among them, the latent motion method based on manifold approximation is the most efficient planning algorithm. Due to errors in manifold approximation and the difficulty in accurately identifying collision conflicts within the latent space, time-consuming path validity checks and path replanning are required. In this paper, we propose a method that trains a neural network to predict the minimum distance between the robot and obstacles using latent vectors as inputs. The learned distance gradient is then used to calculate the direction of movement in the latent space to move the robot away from obstacles. Based on this, a local path optimization algorithm in the latent space is proposed, and it is integrated with the path validity checking process to reduce the time of replanning. The proposed method is compared with state-of-the-art algorithms in multiple planning scenarios, demonstrating the fastest planning speed.

* This paper has been published in IROS 2025
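
The core idea in the abstract above, moving a latent vector along the gradient of a predicted obstacle distance, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_distance` is a hypothetical stand-in for the trained network (a signed distance to a disc), the gradient is taken by finite differences rather than by backpropagation, and the `margin` and `step` values are arbitrary. It is not the authors' implementation.

```python
import math

def toy_distance(z, center=(0.0, 0.0), radius=1.0):
    """Hypothetical stand-in for the learned distance network:
    signed distance from latent point z to a disc-shaped obstacle."""
    return math.dist(z, center) - radius

def numeric_gradient(f, z, eps=1e-5):
    """Central finite-difference gradient of f at z.
    A trained network would normally provide this via autodiff."""
    grad = []
    for i in range(len(z)):
        hi, lo = list(z), list(z)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad

def push_away(z, distance_fn, margin=0.2, step=0.05, max_iters=200):
    """Move z along the normalized distance gradient until the
    predicted distance reaches the safety margin (assumed value)."""
    z = list(z)
    for _ in range(max_iters):
        if distance_fn(z) >= margin:
            break
        g = numeric_gradient(distance_fn, z)
        norm = math.sqrt(sum(gi * gi for gi in g))
        if norm < 1e-12:
            break  # flat gradient: no informative direction
        z = [zi + step * gi / norm for zi, gi in zip(z, g)]
    return z

waypoint = [0.5, 0.2]  # starts inside the toy obstacle (distance < 0)
repaired = push_away(waypoint, toy_distance)
```

In a planner, a step like `push_away` would be applied only to waypoints that fail the validity check, so that a local repair is attempted before falling back to full replanning.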

Multiple Peg-in-Hole Assembly of Tightly Coupled Multi-manipulator Using Learning-based Visual Servo

Jul 15, 2024

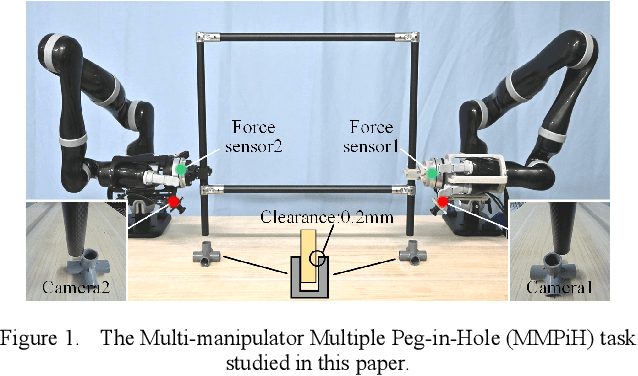

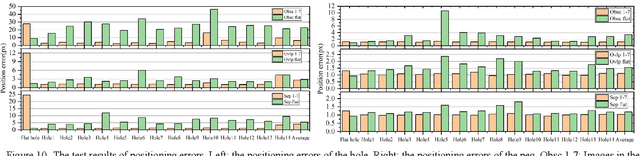

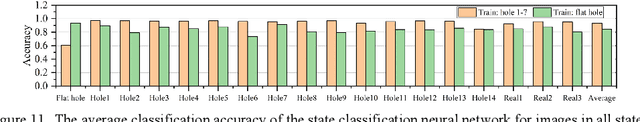

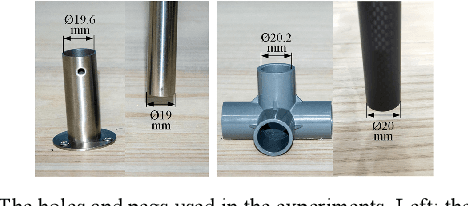

Abstract:Multiple peg-in-hole assembly is one of the fundamental tasks in robotic assembly. In the multiple peg-in-hole task for large-sized parts, it is challenging for a single manipulator to simultaneously align multiple distant pegs and holes, necessitating tightly coupled multi-manipulator systems. For such Multi-manipulator Multiple Peg-in-Hole (MMPiH) tasks, we propose a collaborative visual servo control framework that uses only the monocular in-hand cameras of each manipulator to reduce positioning errors. Initially, we train a state classification neural network and a positioning neural network. The former is used to divide the states of the peg and hole in the image into three categories: obscured, separated, and overlapped, while the latter determines the position of the peg and hole in the image. Based on these outputs, we propose a method to integrate the visual features of multiple manipulators using virtual forces, which can naturally combine with the cooperative controller of the multi-manipulator system. To generalize our approach to holes of different appearances, we varied the appearance of the holes during the dataset generation process. The results confirm that by considering the appearance of the holes, classification accuracy and positioning precision can be improved. Finally, the results show that our method achieves an 85% success rate in dual-manipulator dual peg-in-hole tasks with a clearance of 0.2 mm.

Incremental Self-training for Semi-supervised Learning

Apr 14, 2024

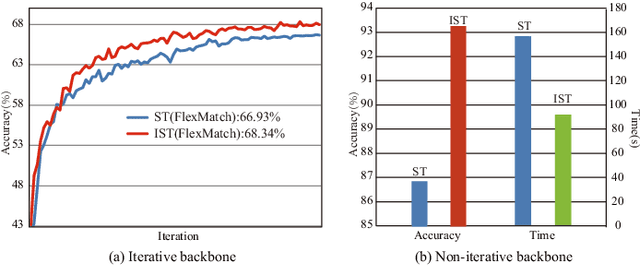

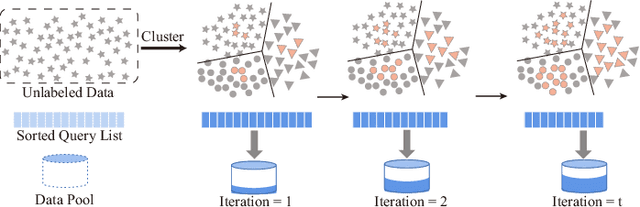

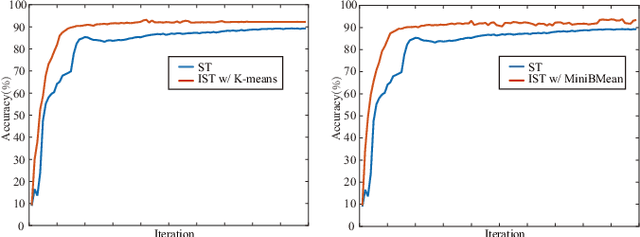

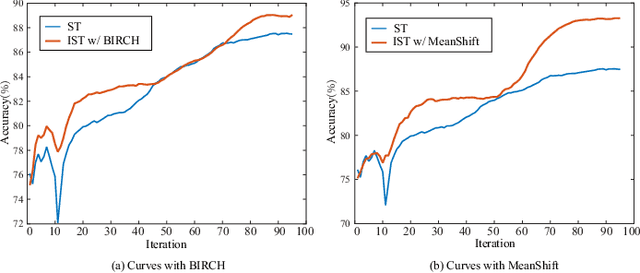

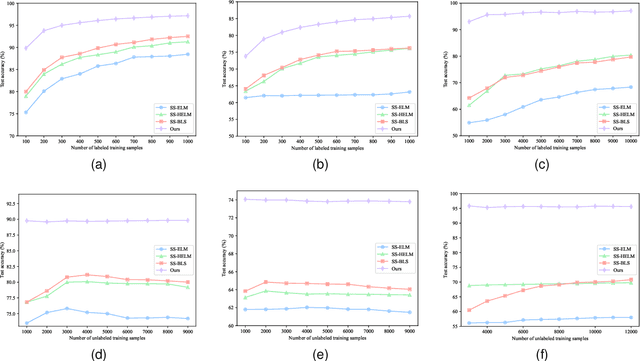

Abstract:Semi-supervised learning provides a solution to reduce the dependency of machine learning on labeled data. As one of the efficient semi-supervised techniques, self-training (ST) has received increasing attention. Several advancements have emerged to address challenges associated with noisy pseudo-labels. Previous works on self-training acknowledge the importance of unlabeled data but have not delved into their efficient utilization, nor have they paid attention to the problem of high time consumption caused by iterative learning. This paper proposes Incremental Self-training (IST) for semi-supervised learning to fill these gaps. Unlike ST, which processes all data indiscriminately, IST processes data in batches and prioritizes assigning pseudo-labels to unlabeled samples with high certainty. Then, it processes the data around the decision boundary after the model is stabilized, enhancing classifier performance. Our IST is simple yet effective and fits existing self-training-based semi-supervised learning methods. We verify the proposed IST on five datasets and two types of backbones, effectively improving the recognition accuracy and learning speed. Significantly, it outperforms state-of-the-art competitors on three challenging image classification tasks.
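
The batch-wise, confidence-first ordering described in the abstract above can be sketched on a toy problem. This is only an illustration under stated assumptions: the nearest-centroid classifier on 1-D features, the relative-margin confidence score, and the `batch_size` and `threshold` values are all hypothetical choices, not the backbones or criteria used in the paper.

```python
def centroids(labeled):
    """Mean of the 1-D features for each class label."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(cents, x):
    """Return (label, confidence). Confidence is the relative margin
    between the nearest and second-nearest centroid (assumed metric)."""
    ranked = sorted(cents, key=lambda y: abs(x - cents[y]))
    d_best = abs(x - cents[ranked[0]])
    d_next = abs(x - cents[ranked[1]])
    total = d_best + d_next
    conf = (d_next - d_best) / total if total > 0 else 0.0
    return ranked[0], conf

def incremental_self_training(labeled, unlabeled, batch_size=3, threshold=0.5):
    """Pseudo-label confident samples batch by batch; defer samples near
    the decision boundary until all batches have been seen."""
    labeled = list(labeled)
    deferred = []
    for start in range(0, len(unlabeled), batch_size):
        cents = centroids(labeled)  # refit once per batch
        for x in unlabeled[start:start + batch_size]:
            label, conf = predict(cents, x)
            if conf >= threshold:
                labeled.append((x, label))
            else:
                deferred.append(x)
    cents = centroids(labeled)  # model after the confident passes
    for x in deferred:
        labeled.append((x, predict(cents, x)[0]))
    return labeled, deferred

seed = [(0.0, 0), (10.0, 1)]
pool = [1.0, 9.0, 4.5, 2.0, 8.0, 5.6]
result, deferred = incremental_self_training(seed, pool)
```

Here the samples close to the midpoint between the two classes are set aside during the batch passes and labeled only at the end, using centroids that already include the confident pseudo-labels.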

ConvBLS: An Effective and Efficient Incremental Convolutional Broad Learning System for Image Classification

Apr 01, 2023

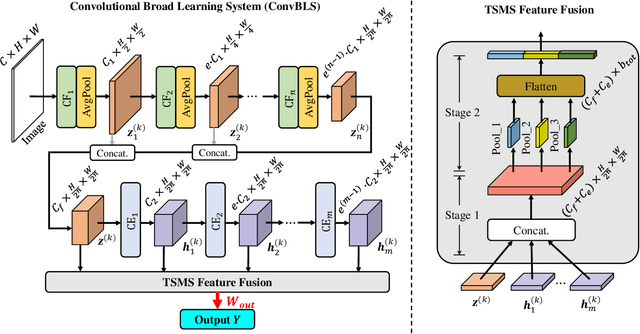

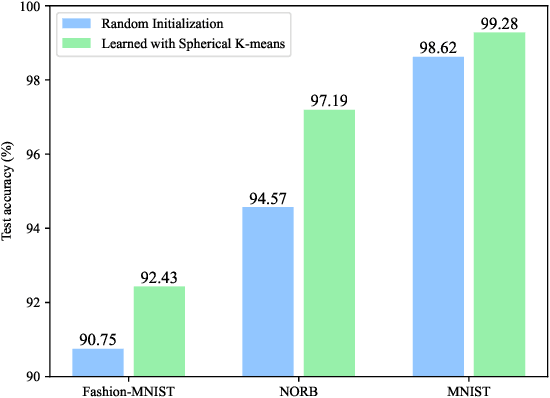

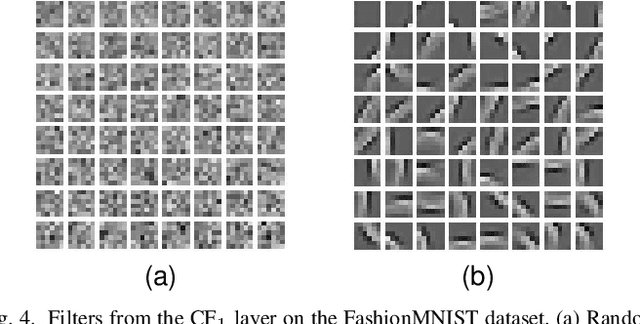

Abstract:Deep learning generally demands enormous computational resources and time-consuming training processes. Broad Learning System (BLS) and its convolutional variants have been proposed to mitigate these issues and have achieved superb performance in image classification. However, the existing convolution-based broad learning system (C-BLS) either lacks an efficient training method and incremental learning capability or suffers from poor performance. To this end, we propose a convolutional broad learning system (ConvBLS) based on the spherical K-means (SKM) algorithm and two-stage multi-scale (TSMS) feature fusion, which consists of the convolutional feature (CF) layer, convolutional enhancement (CE) layer, TSMS feature fusion layer, and output layer. First, unlike the current C-BLS, the simple yet efficient SKM algorithm is utilized to learn the weights of CF layers. Compared with random filters, the SKM algorithm makes the CF layer learn more comprehensive spatial features. Second, similar to the vanilla BLS, CE layers are established to expand the feature space. Third, the TSMS feature fusion layer is proposed to extract more effective multi-scale features through the integration of CF layers and CE layers. Thanks to the above design and the pseudo-inverse calculation of the output layer weights, our proposed ConvBLS method is unprecedentedly efficient and effective. Finally, the corresponding incremental learning algorithms are presented for rapid remodeling if the model needs to be expanded. Experiments and comparisons demonstrate the superiority of our method.
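
For readers unfamiliar with spherical K-means, the following is a minimal generic sketch of the algorithm: vectors are projected onto the unit sphere, assigned to the centroid with the highest cosine similarity, and each centroid is re-normalized after averaging. The deterministic initialization from the first `k` points and the fixed iteration count are assumptions made for reproducibility. How ConvBLS extracts patches and maps centroids to CF-layer filters is not specified in the abstract and is not modeled here.

```python
import math

def normalize(v):
    """Scale v to unit Euclidean length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)

def spherical_kmeans(points, k, iters=10):
    """Generic spherical K-means: cluster directions by cosine similarity."""
    data = [normalize(p) for p in points]
    centers = [list(p) for p in data[:k]]  # assumed deterministic init
    assign = [0] * len(data)
    for _ in range(iters):
        # Assignment step: pick the centroid with the largest dot product.
        assign = [
            max(range(k), key=lambda j: sum(a * b for a, b in zip(p, centers[j])))
            for p in data
        ]
        # Update step: sum the members, then project back onto the unit sphere.
        for j in range(k):
            members = [p for p, a in zip(data, assign) if a == j]
            if members:
                centers[j] = normalize([sum(c) for c in zip(*members)])
    return centers, assign

patches = [[1, 0], [0, 1], [2, 0.1], [0.1, 3], [5, -0.2], [-0.1, 4]]
centers, assign = spherical_kmeans(patches, k=2)
```

Because only directions matter, the vectors `[1, 0]` and `[5, -0.2]` fall into the same cluster despite their very different magnitudes.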

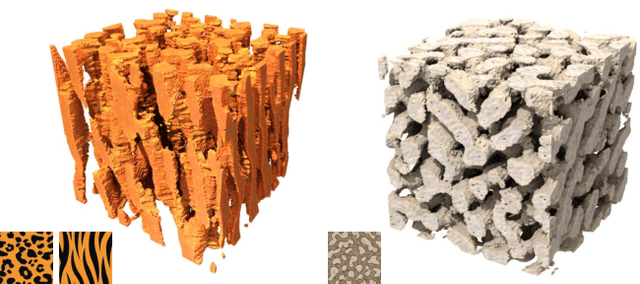

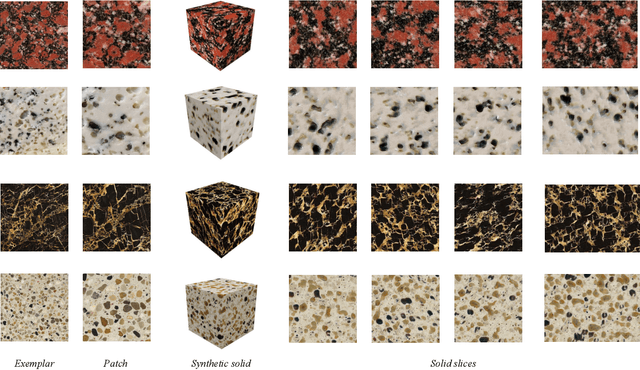

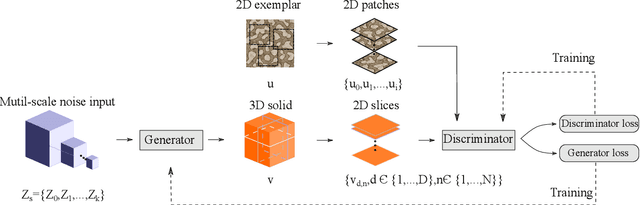

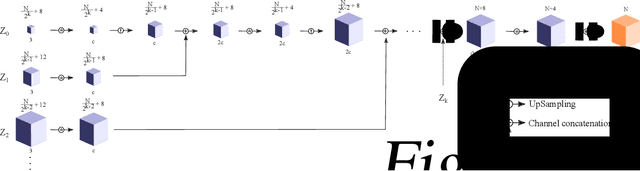

Solid Texture Synthesis using Generative Adversarial Networks

Feb 08, 2021

Abstract:Solid texture synthesis, as an effective way to extend 2D texture to 3D solid texture, exhibits advantages in numerous application domains. However, existing methods generally suffer from synthesis distortion due to the underutilization of texture information. In this paper, we propose a novel neural network-based approach for solid texture synthesis based on generative adversarial networks, namely STS-GAN, in which the generator, composed of multi-scale modules, learns the internal distribution of the 2D exemplar and further extends it to a 3D solid texture. In addition, the discriminator evaluates the similarity between the 2D exemplar and slices, promoting the generator to synthesize realistic solid texture. Experimental results demonstrate that the proposed method can synthesize high-quality 3D solid texture with similar visual characteristics to the exemplar.

Continuous-time Gaussian Process Trajectory Generation for Multi-robot Formation via Probabilistic Inference

Oct 31, 2020

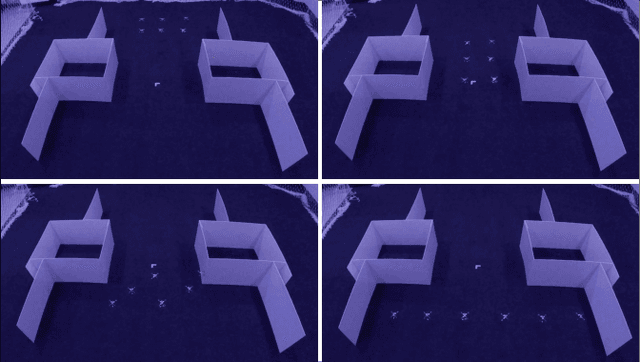

Abstract:In this paper, we extend the well-known motion planning approach GPMP2 to multi-robot cases, yielding a novel centralized trajectory generation method for multi-robot formation. A sparse Gaussian Process model is employed to represent the continuous-time trajectories of all robots as a limited number of states, which improves computational efficiency due to the sparsity. We add constraints to guarantee collision avoidance between individuals as well as formation maintenance, then all constraints and kinematics are formulated on a factor graph. By introducing a global planner, our proposed method can efficiently generate trajectories for a team of robots that must pass through a width-varying area by adaptive formation change. Finally, we provide the implementation of an incremental replanning algorithm to demonstrate the online operation potential of our proposed framework. The experiments in simulation and the real world illustrate the feasibility, efficiency, and scalability of our approach.
