Jianlin Wang

A Combinatorial Identities Benchmark for Theorem Proving via Automated Theorem Generation

Feb 25, 2025Abstract:Large language models (LLMs) have significantly advanced formal theorem proving, yet the scarcity of high-quality training data constrains their capabilities in complex mathematical domains. Combinatorics, a cornerstone of mathematics, provides essential tools for analyzing discrete structures and solving optimization problems. However, its inherent complexity makes it particularly challenging for automated theorem proving (ATP) for combinatorial identities. To address this, we manually construct LeanComb, combinatorial identities benchmark in Lean, which is, to our knowledge, the first formalized theorem proving benchmark built for combinatorial identities. We develop an Automated Theorem Generator for Combinatorial Identities, ATG4CI, which combines candidate tactics suggested by a self-improving large language model with a Reinforcement Learning Tree Search approach for tactic prediction. By utilizing ATG4CI, we generate a LeanComb-Enhanced dataset comprising 260K combinatorial identities theorems, each with a complete formal proof in Lean, and experimental evaluations demonstrate that models trained on this dataset can generate more effective tactics, thereby improving success rates in automated theorem proving for combinatorial identities.

CuDIP: Enhancing Theorem Proving in LLMs via Curriculum Learning-based Direct Preference Optimization

Feb 25, 2025Abstract:Automated theorem proving (ATP) is one of the most challenging mathematical reasoning tasks for Large Language Models (LLMs). Most existing LLM-based ATP methods rely on supervised fine-tuning, which results in a limited alignment between the theorem proving process and human preferences. Direct Preference Optimization (DPO), which aligns LLMs with human preferences, has shown positive effects for certain tasks. However, the lack of high-quality preference data for theorem proving presents a significant challenge. In this paper, we innovatively apply DPO to formal automated theorem proving and introduces a Curriculum Learning-based DPO Iterative Theorem Proving (CuDIP) method. Specifically, we propose a method for constructing preference data which utilizes LLMs and existing theorem proving data to enhance the diversity of the preference data while reducing the reliance on human preference annotations. We then integrate this preference data construction method with curriculum learning to iteratively fine-tune the theorem proving model through DPO. Experimental results on the MiniF2F and ProofNet datasets demonstrate the effectiveness of the proposed method.

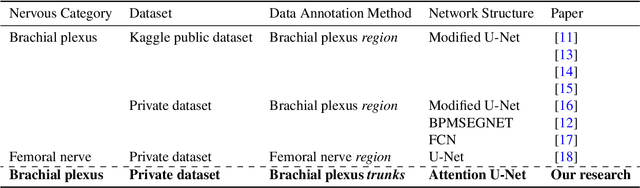

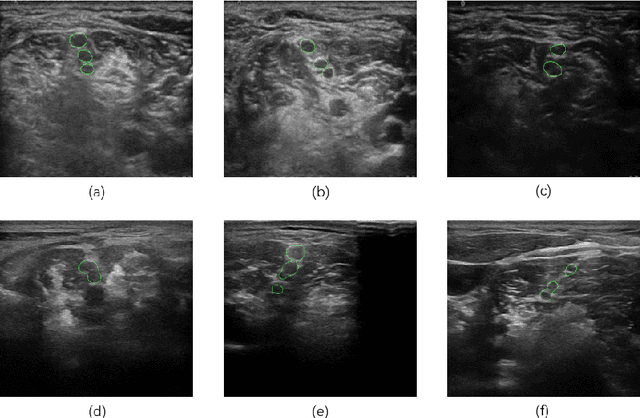

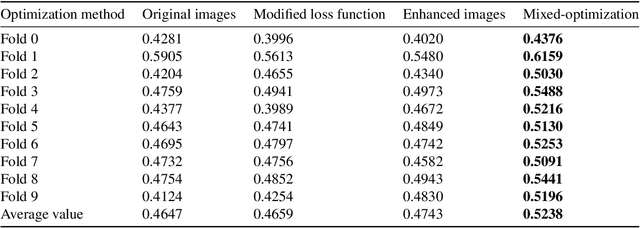

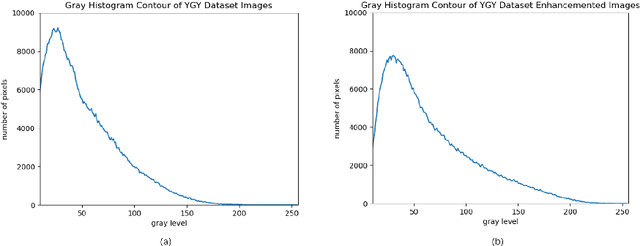

Brachial Plexus Nerve Trunk Segmentation Using Deep Learning: A Comparative Study with Doctors' Manual Segmentation

May 17, 2022

Abstract:Ultrasound-guided nerve block anesthesia (UGNB) is a high-tech visual nerve block anesthesia method that can observe the target nerve and its surrounding structures, the puncture needle's advancement, and local anesthetics spread in real-time. The key in UGNB is nerve identification. With the help of deep learning methods, the automatic identification or segmentation of nerves can be realized, assisting doctors in completing nerve block anesthesia accurately and efficiently. Here, we establish a public dataset containing 320 ultrasound images of brachial plexus (BP). Three experienced doctors jointly produce the BP segmentation ground truth and label brachial plexus trunks. We design a brachial plexus segmentation system (BPSegSys) based on deep learning. BPSegSys achieves experienced-doctor-level nerve identification performance in various experiments. We evaluate BPSegSys' performance in terms of intersection-over-union (IoU), a commonly used performance measure for segmentation experiments. Considering three dataset groups in our established public dataset, the IoU of BPSegSys are 0.5238, 0.4715, and 0.5029, respectively, which exceed the IoU 0.5205, 0.4704, and 0.4979 of experienced doctors. In addition, we show that BPSegSys can help doctors identify brachial plexus trunks more accurately, with IoU improvement up to 27%, which has significant clinical application value.

DC-SPP-YOLO: Dense Connection and Spatial Pyramid Pooling Based YOLO for Object Detection

Mar 20, 2019

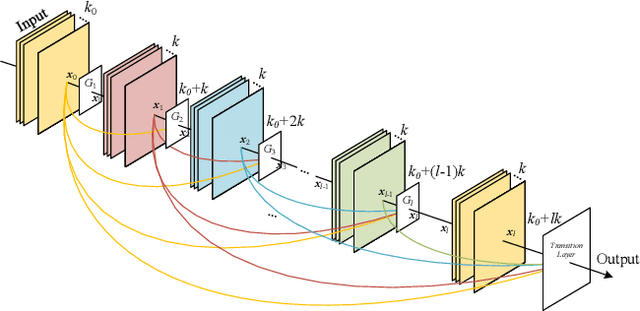

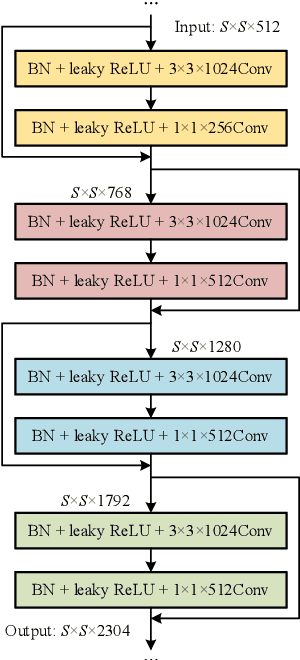

Abstract:Although YOLOv2 approach is extremely fast on object detection; its backbone network has the low ability on feature extraction and fails to make full use of multi-scale local region features, which restricts the improvement of object detection accuracy. Therefore, this paper proposed a DC-SPP-YOLO (Dense Connection and Spatial Pyramid Pooling Based YOLO) approach for ameliorating the object detection accuracy of YOLOv2. Specifically, the dense connection of convolution layers is employed in the backbone network of YOLOv2 to strengthen the feature extraction and alleviate the vanishing-gradient problem. Moreover, an improved spatial pyramid pooling is introduced to pool and concatenate the multi-scale local region features, so that the network can learn the object features more comprehensively. The DC-SPP-YOLO model is established and trained based on a new loss function composed of mean square error and cross entropy, and the object detection is realized. Experiments demonstrate that the mAP (mean Average Precision) of DC-SPP-YOLO proposed on PASCAL VOC datasets and UA-DETRAC datasets is higher than that of YOLOv2; the object detection accuracy of DC-SPP-YOLO is superior to YOLOv2 by strengthening feature extraction and using the multi-scale local region features.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge