Jiahui Ye

Baichuan-Omni-1.5 Technical Report

Jan 26, 2025

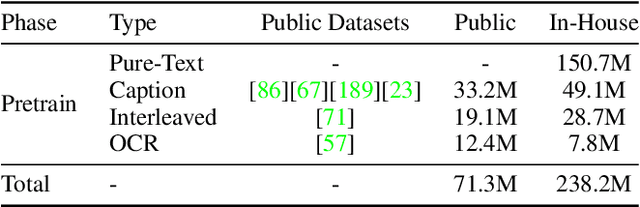

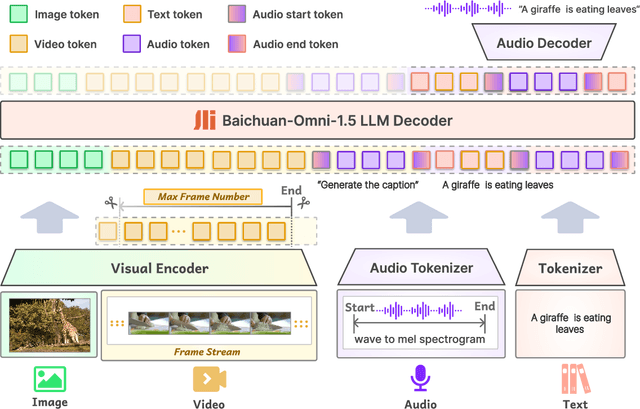

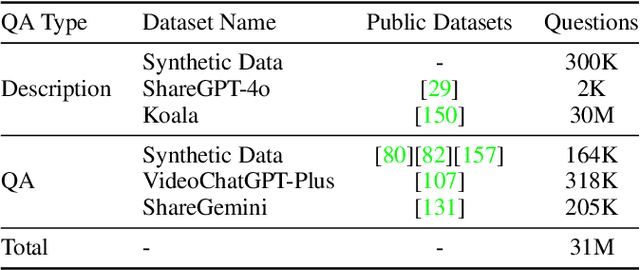

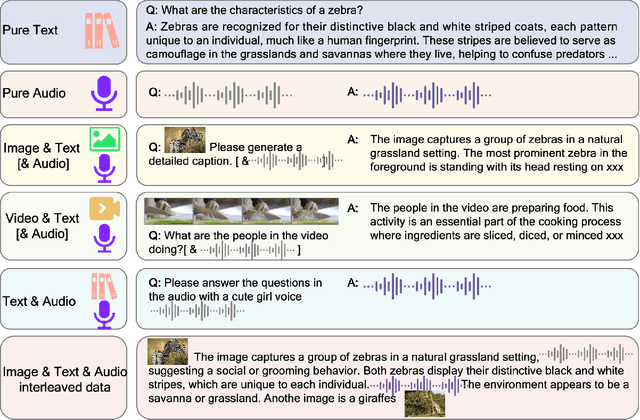

Abstract: We introduce Baichuan-Omni-1.5, an omni-modal model that combines omni-modal understanding with end-to-end audio generation. To achieve fluent, high-quality interaction across modalities without compromising the capabilities of any single modality, we prioritize three key aspects. First, we establish a comprehensive data cleaning and synthesis pipeline for multimodal data, obtaining about 500B high-quality data (text, audio, and vision). Second, we design an audio tokenizer (Baichuan-Audio-Tokenizer) that captures both semantic and acoustic information from audio, enabling seamless integration and enhanced compatibility with MLLM. Lastly, we design a multi-stage training strategy that progressively integrates multimodal alignment and multitask fine-tuning, ensuring effective synergy across all modalities. Baichuan-Omni-1.5 leads contemporary models (including GPT4o-mini and MiniCPM-o 2.6) in comprehensive omni-modal capabilities. Notably, it achieves results comparable to leading models such as Qwen2-VL-72B across various multimodal medical benchmarks.

Interpretable Selective Learning in Credit Risk

Sep 21, 2022

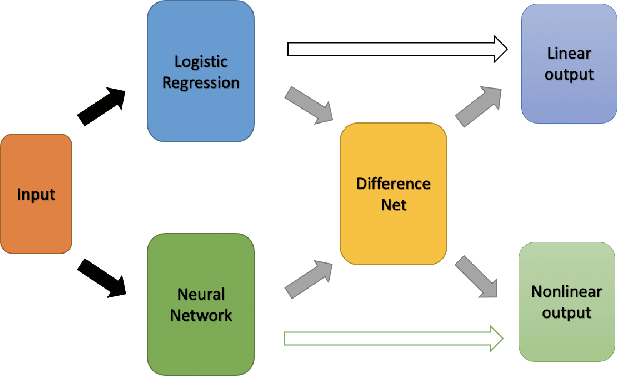

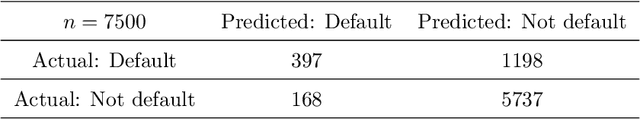

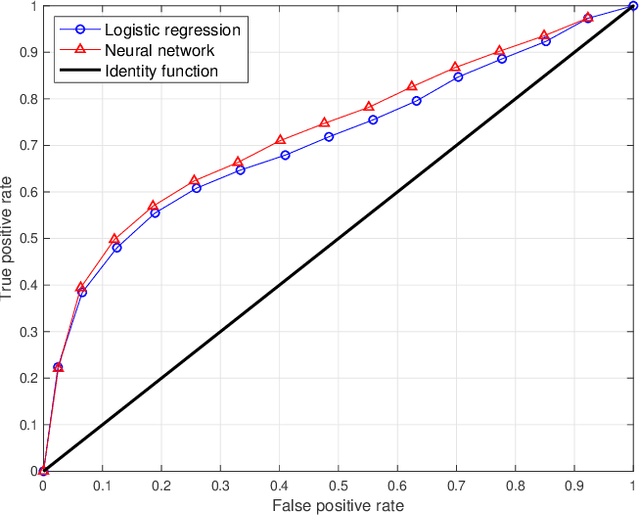

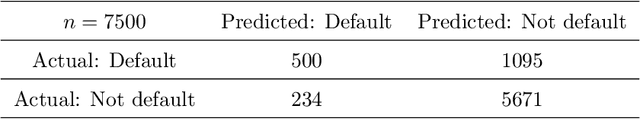

Abstract: Forecasting credit default risk has been an important research field for several decades. Traditionally, logistic regression has been widely recognized as a solution because of its accuracy and interpretability. More recently, researchers have turned to more complex machine learning methods to improve prediction accuracy. Although certain non-linear machine learning methods have better predictive power, financial regulators often consider them to lack interpretability, so they have not been widely applied in credit risk assessment. We introduce a neural network with a selective option that increases interpretability by distinguishing whether the datasets can be explained by linear models. We find that, for most of the datasets, logistic regression is sufficient and reasonably accurate; for some specific data portions, a shallow neural network achieves much better accuracy without significantly sacrificing interpretability.
