Weicheng Ye

How to address monotonicity for model risk management?

Apr 28, 2023

Abstract: In this paper, we study the problem of establishing the accountability and fairness of transparent machine learning models through monotonicity. Although there have been numerous studies on individual monotonicity, pairwise monotonicity is often overlooked in the existing literature. This paper studies transparent neural networks in the presence of three types of monotonicity: individual monotonicity, weak pairwise monotonicity, and strong pairwise monotonicity. To achieve monotonicity while maintaining transparency, we propose the monotonic groves of neural additive models. Through empirical examples, we demonstrate that monotonicity is often violated in practice and that monotonic groves of neural additive models are transparent, accountable, and fair.

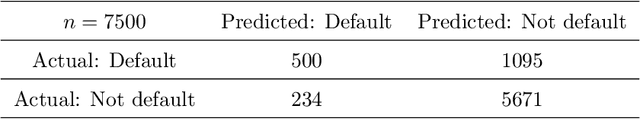

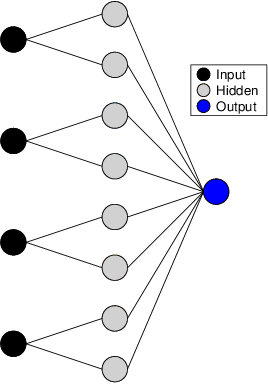

Interpretable Selective Learning in Credit Risk

Sep 21, 2022

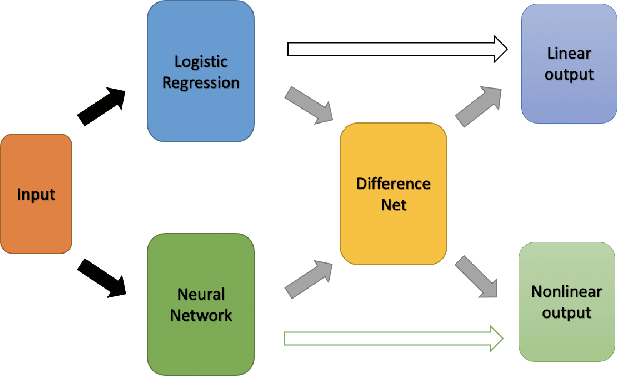

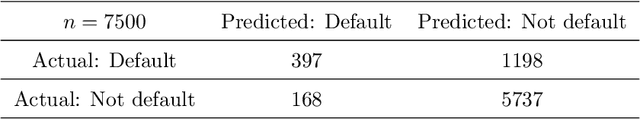

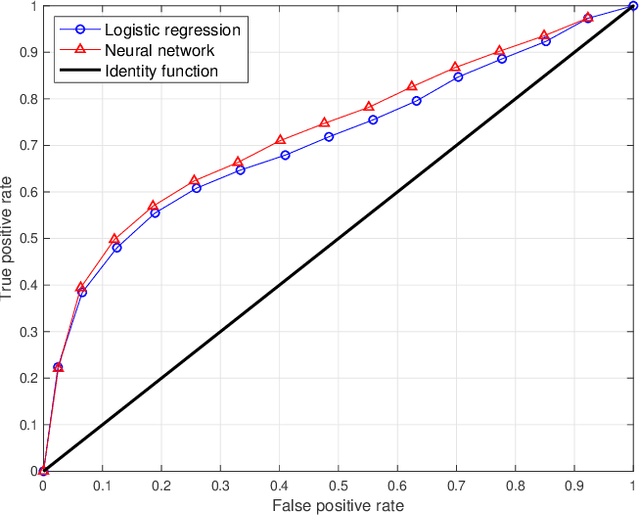

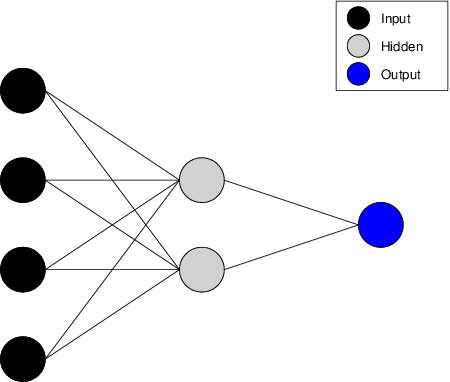

Abstract: The forecasting of credit default risk has been an important research field for several decades. Traditionally, logistic regression has been widely recognized as a solution due to its accuracy and interpretability. Recently, researchers have tended to use more complex and advanced machine learning methods to improve prediction accuracy. Although certain non-linear machine learning methods have better predictive power, financial regulators often consider them to lack interpretability. Thus, they have not been widely applied in credit risk assessment. We introduce a neural network with a selective option that increases interpretability by distinguishing whether the datasets can be explained by linear models. We find that, for most of the datasets, logistic regression is sufficient, with reasonable accuracy; meanwhile, for some specific data portions, a shallow neural network model leads to much better accuracy without significantly sacrificing interpretability.

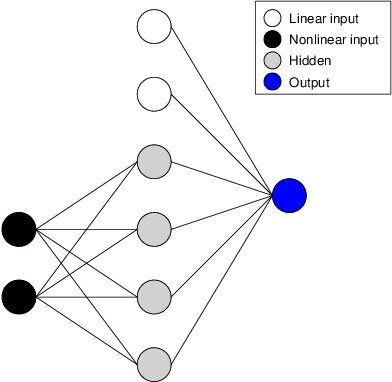

Generalized Gloves of Neural Additive Models: Pursuing transparent and accurate machine learning models in finance

Sep 21, 2022

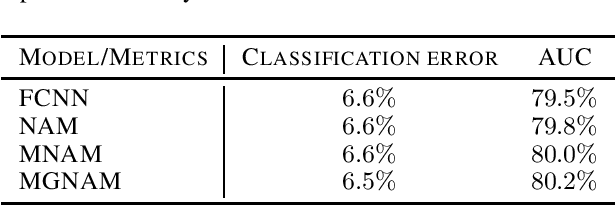

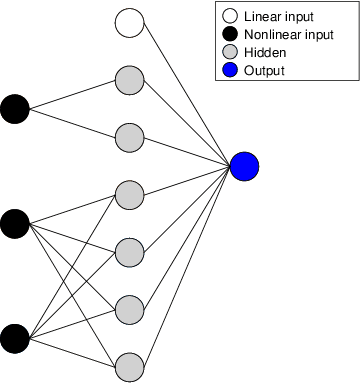

Abstract: For many years, machine learning methods have been used in a wide range of fields, including computer vision and natural language processing. While machine learning methods have significantly improved model performance over traditional methods, their black-box structure makes it difficult for researchers to interpret results. For highly regulated financial industries, transparency, explainability, and fairness are equally, if not more, important than accuracy. Without meeting regulatory requirements, even highly accurate machine learning methods are unlikely to be accepted. We address this issue by introducing a novel class of transparent and interpretable machine learning algorithms known as generalized gloves of neural additive models. The generalized gloves of neural additive models separate features into three categories: linear features, individual nonlinear features, and interacted nonlinear features. Additionally, interactions in the last category are only local. The linear and nonlinear components are distinguished by a stepwise selection algorithm, and interacted groups are carefully verified by applying additive separation criteria. Empirical results demonstrate that generalized gloves of neural additive models provide optimal accuracy with the simplest architecture, allowing for a highly accurate, transparent, and explainable approach to machine learning.
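
The three feature categories named in the abstract suggest an additive prediction of the form "linear terms + one-feature nonlinear terms + local interaction terms". The following is only a minimal sketch of that structure, not the paper's implementation: the function name `additive_predict`, its arguments, and the toy lambdas are hypothetical, and in the actual model the nonlinear pieces would presumably be small neural networks rather than closed-form functions.

```python
def additive_predict(x, linear_weights, nonlinear_fns, interaction_fns, bias=0.0):
    """Sum three kinds of components over a feature vector x (hypothetical sketch).

    linear_weights:  {feature index: weight}             -> linear features
    nonlinear_fns:   {feature index: f(value)}           -> individual nonlinear features
    interaction_fns: {(index, index, ...): f(*values)}   -> interacted nonlinear features
    """
    total = bias
    for j, w in linear_weights.items():
        total += w * x[j]                         # linear contribution
    for j, g in nonlinear_fns.items():
        total += g(x[j])                          # one-feature shape function
    for group, h in interaction_fns.items():
        total += h(*(x[j] for j in group))        # interaction limited to its own group
    return total


# Toy stand-ins for the learned components (assumptions for illustration only).
x = [1.0, 2.0, 3.0, 4.0]
prediction = additive_predict(
    x,
    linear_weights={0: 0.5},
    nonlinear_fns={1: lambda v: v ** 2},
    interaction_fns={(2, 3): lambda a, b: a * b},
)
print(prediction)
```

Because each term depends only on its own feature or feature group, every component's contribution can be inspected separately, which is the transparency property the abstract emphasizes.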

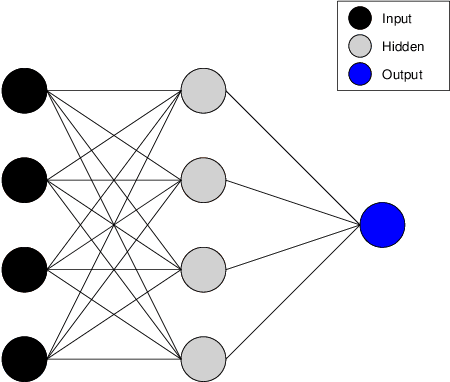

Monotonic Neural Additive Models: Pursuing Regulated Machine Learning Models for Credit Scoring

Sep 21, 2022

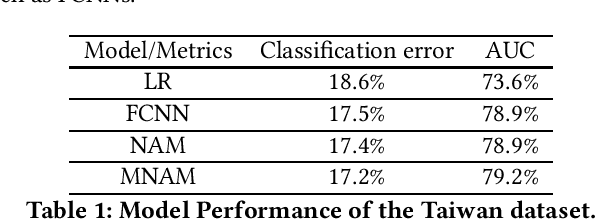

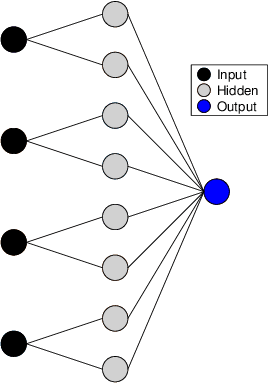

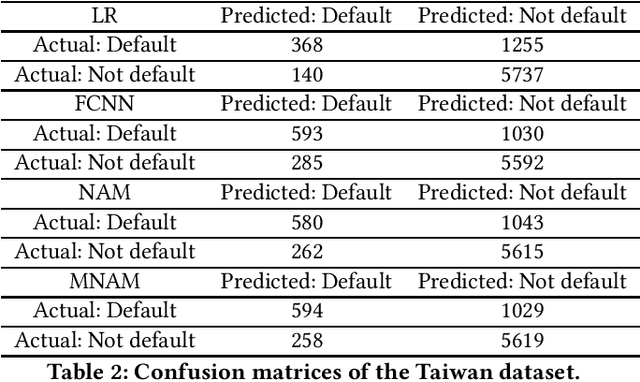

Abstract: The forecasting of credit default risk has been an active research field for several decades. Historically, logistic regression has been used as a major tool due to its compliance with regulatory requirements: transparency, explainability, and fairness. In recent years, researchers have increasingly used complex and advanced machine learning methods to improve prediction accuracy. Even though a machine learning method could potentially improve the model accuracy, it complicates simple logistic regression, deteriorates explainability, and often violates fairness. In the absence of compliance with regulatory requirements, even highly accurate machine learning methods are unlikely to be accepted by companies for credit scoring. In this paper, we introduce a novel class of monotonic neural additive models, which meet regulatory requirements by simplifying neural network architecture and enforcing monotonicity. By utilizing the special architectural features of the neural additive model, the monotonic neural additive model penalizes monotonicity violations effectively. Consequently, the computational cost of training a monotonic neural additive model is similar to that of training a neural additive model, so monotonicity comes essentially as a free lunch. We demonstrate through empirical results that our new model is as accurate as black-box fully-connected neural networks, providing a highly accurate and regulated machine learning method.
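
One plausible reading of "penalizes monotonicity violations" is sketched below. In an additive model, each feature's effect depends on that feature alone, so a violation can be checked one shape function at a time on a one-dimensional grid. This sketch assumes a finite-difference check and a squared penalty; the abstract does not specify either, and `shape_fn`, `monotonicity_penalty`, and the toy weights are hypothetical names for illustration.

```python
import math


def shape_fn(x, units):
    """Toy one-feature shape function: a sum of tanh units given as (w, b, a) triples."""
    return sum(a * math.tanh(w * x + b) for (w, b, a) in units)


def monotonicity_penalty(shape, grid, increasing=True):
    """Sum of squared monotonicity violations between neighbouring points of a sorted grid.

    Returns 0.0 when the shape function moves in the required direction on the grid.
    """
    sign = 1.0 if increasing else -1.0
    values = [shape(x) for x in grid]
    penalty = 0.0
    for lo, hi in zip(values, values[1:]):
        violation = max(0.0, -sign * (hi - lo))   # positive only when the direction is wrong
        penalty += violation ** 2
    return penalty


grid = [i / 10 for i in range(-20, 21)]           # sorted grid on [-2, 2]

increasing_units = [(1.0, 0.0, 1.0), (0.5, 0.2, 0.5)]   # all w > 0 and a > 0: increasing
decreasing_units = [(1.0, 0.0, -1.0)]                   # -tanh(x): decreasing

ok = monotonicity_penalty(lambda x: shape_fn(x, increasing_units), grid)
bad = monotonicity_penalty(lambda x: shape_fn(x, decreasing_units), grid)
print(ok, bad)
```

In training, a term like this would be added to the prediction loss with a weight. Since it only evaluates one-dimensional shape functions, its cost grows with the number of features times the grid size, which is consistent with the abstract's claim that the extra training cost is small.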
