Jia-Hong Huang

DeepEyeNet: Generating Medical Report for Retinal Images

Sep 16, 2025

Abstract:The increasing prevalence of retinal diseases poses a significant challenge to the healthcare system, as the demand for ophthalmologists surpasses the available workforce. This imbalance creates a bottleneck in diagnosis and treatment, potentially delaying critical care. Traditional methods of generating medical reports from retinal images rely on manual interpretation, which is time-consuming and prone to errors, further straining ophthalmologists' limited resources. This thesis investigates the potential of Artificial Intelligence (AI) to automate medical report generation for retinal images. AI can quickly analyze large volumes of image data, identifying subtle patterns essential for accurate diagnosis. By automating this process, AI systems can greatly enhance the efficiency of retinal disease diagnosis, reducing doctors' workloads and enabling them to focus on more complex cases. The proposed AI-based methods address key challenges in automated report generation: (1) A multi-modal deep learning approach captures interactions between textual keywords and retinal images, resulting in more comprehensive medical reports; (2) Improved methods for medical keyword representation enhance the system's ability to capture nuances in medical terminology; (3) Strategies to overcome RNN-based models' limitations, particularly in capturing long-range dependencies within medical descriptions; (4) Techniques to enhance the interpretability of the AI-based report generation system, fostering trust and acceptance in clinical practice. These methods are rigorously evaluated using various metrics and achieve state-of-the-art performance. This thesis demonstrates AI's potential to revolutionize retinal disease diagnosis by automating medical report generation, ultimately improving clinical efficiency, diagnostic accuracy, and patient care.

MaCP: Minimal yet Mighty Adaptation via Hierarchical Cosine Projection

May 29, 2025

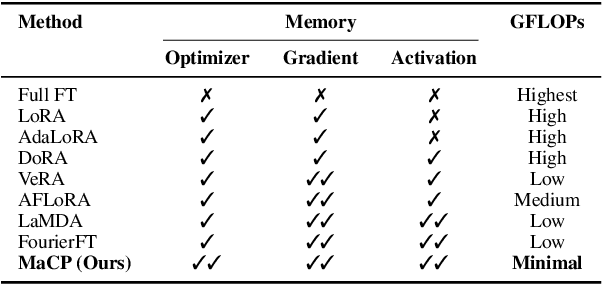

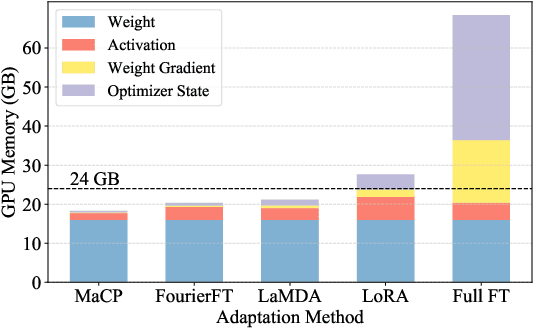

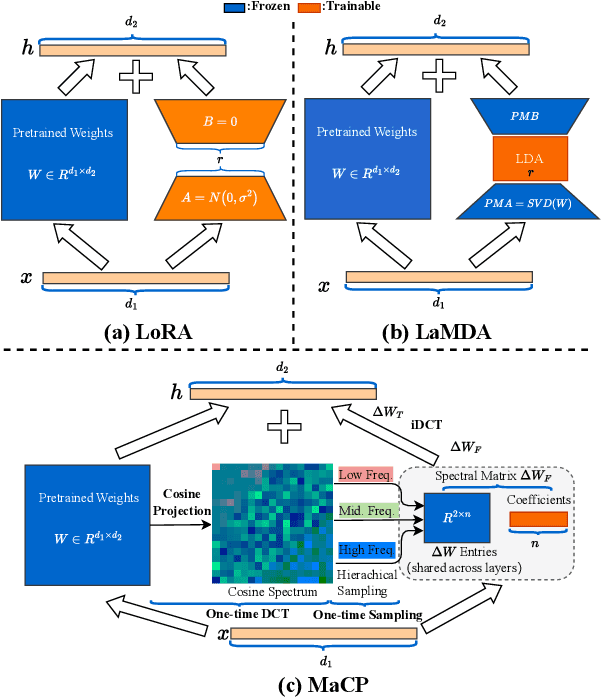

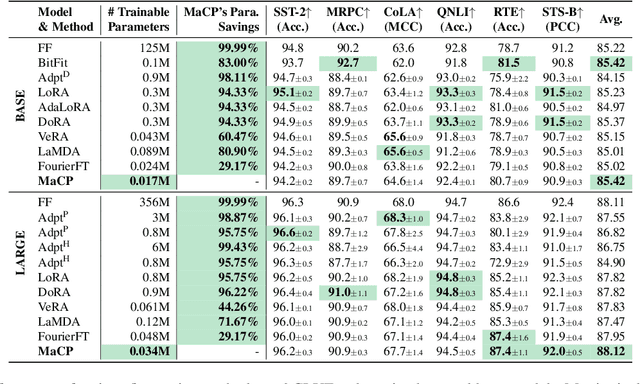

Abstract:We present a new adaptation method MaCP, Minimal yet Mighty adaptive Cosine Projection, that achieves exceptional performance while requiring minimal parameters and memory for fine-tuning large foundation models. Its general idea is to exploit the superior energy compaction and decorrelation properties of cosine projection to improve both model efficiency and accuracy. Specifically, it projects the weight change from the low-rank adaptation into the discrete cosine space. Then, the weight change is partitioned over different levels of the discrete cosine spectrum, and each partition's most critical frequency components are selected. Extensive experiments demonstrate the effectiveness of MaCP across a wide range of single-modality tasks, including natural language understanding, natural language generation, text summarization, as well as multi-modality tasks such as image classification and video understanding. MaCP consistently delivers superior accuracy, significantly reduced computational complexity, and lower memory requirements compared to existing alternatives.
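The projection and selection steps described in this abstract can be illustrated with a small pure-Python sketch. It assumes an orthonormal 2-D DCT-II, a partition of the spectrum into bands by the diagonal index `u + v`, and a fixed budget of `k` coefficients per band. The band definition, the function names, and the toy rank-1 weight change are illustrative assumptions, not the authors' implementation.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II basis, returned as an n x n list of rows."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(r) for r in zip(*a)]

def dct2(delta_w):
    """2-D DCT of a weight change: C_rows @ delta_w @ C_cols^T."""
    c_r, c_c = dct_matrix(len(delta_w)), dct_matrix(len(delta_w[0]))
    return matmul(matmul(c_r, delta_w), transpose(c_c))

def idct2(spec):
    """Inverse of dct2; valid because the basis is orthonormal."""
    c_r, c_c = dct_matrix(len(spec)), dct_matrix(len(spec[0]))
    return matmul(matmul(transpose(c_r), spec), c_c)

def select_per_band(spec, num_bands, k):
    """Keep the k largest-magnitude coefficients in each band, zero the rest.

    Bands are defined by u + v (an assumed stand-in for spectrum "levels").
    """
    m, n = len(spec), len(spec[0])
    max_idx = (m - 1) + (n - 1)
    bands = [[] for _ in range(num_bands)]
    for u in range(m):
        for v in range(n):
            band = (u + v) * num_bands // (max_idx + 1)
            bands[band].append((abs(spec[u][v]), u, v))
    kept = [[0.0] * n for _ in range(m)]
    for band in bands:
        for _, u, v in sorted(band, reverse=True)[:k]:
            kept[u][v] = spec[u][v]
    return kept

# Toy rank-1 weight change B @ A (values are arbitrary).
b = [[1.0], [2.0], [0.5], [-1.0]]
a = [[0.5, -1.0, 2.0, 1.0]]
delta_w = matmul(b, a)
spectrum = dct2(delta_w)
sparse_spectrum = select_per_band(spectrum, num_bands=3, k=2)
approx_delta_w = idct2(sparse_spectrum)
```

Only the retained coefficients would need to be stored or trained in such a scheme; `approx_delta_w` is the spatial-domain update reconstructed from them.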

SSH: Sparse Spectrum Adaptation via Discrete Hartley Transformation

Feb 08, 2025

Abstract:Low-rank adaptation (LoRA) has proven effective at reducing the number of trainable parameters when fine-tuning a large foundation model. However, it still encounters computational and memory challenges when scaling to larger models or addressing more complex task adaptation. In this work, we introduce Sparse Spectrum Adaptation via Discrete Hartley Transformation (SSH), a novel approach that significantly reduces the number of trainable parameters while enhancing model performance. It selects the most informative spectral components across all layers, guided by the initial weights after a discrete Hartley transformation (DHT). The lightweight inverse DHT then projects the spectrum back into the spatial domain for updates. Extensive experiments across both single-modality tasks, such as language understanding and generation, and multi-modality tasks, such as video-text understanding, demonstrate that SSH outperforms existing parameter-efficient fine-tuning (PEFT) methods while achieving substantial reductions in computational cost and memory requirements.
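A minimal 1-D sketch of the transform pair this abstract relies on may help. It assumes the standard DHT definition with the `cas` kernel (cos + sin), picks positions by the magnitude of the transformed initial weights, and maps a sparse spectrum back with the inverse DHT. The function names, the 1-D setting, and the coefficient values are illustrative assumptions rather than the paper's implementation.

```python
import math

def dht(x):
    """Discrete Hartley transform: H[k] = sum_n x[n] * cas(2*pi*n*k/N)."""
    n = len(x)
    return [
        sum(x[i] * (math.cos(2 * math.pi * i * k / n) + math.sin(2 * math.pi * i * k / n))
            for i in range(n))
        for k in range(n)
    ]

def idht(h):
    """The DHT is its own inverse up to a 1/N factor."""
    n = len(h)
    return [v / n for v in dht(h)]

def top_k_indices(spectrum, k):
    """Indices of the k largest-magnitude spectral components."""
    return sorted(range(len(spectrum)), key=lambda i: abs(spectrum[i]), reverse=True)[:k]

def sparse_update(w0, coeffs, k):
    """Place k coefficients at the top-k positions of DHT(w0), then map back.

    `coeffs` stands in for trainable spectral parameters (hypothetical values).
    """
    idx = top_k_indices(dht(w0), k)
    spectrum = [0.0] * len(w0)
    for i, c in zip(idx, coeffs):
        spectrum[i] = c
    return idx, idht(spectrum)

w0 = [0.3, -1.2, 0.8, 0.1, 0.0, 2.0, -0.5, 0.7]   # toy initial weights
indices, delta_w = sparse_update(w0, coeffs=[0.4, -0.1], k=2)
```

Because the forward and inverse transforms share one real-valued kernel, no complex arithmetic is needed, which is one reason a Hartley-based scheme can be lightweight.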

Gradient Weight-normalized Low-rank Projection for Efficient LLM Training

Dec 27, 2024

Abstract:Large Language Models (LLMs) have shown remarkable performance across various tasks, but the escalating demands on computational resources pose significant challenges, particularly in the extensive utilization of full fine-tuning for downstream tasks. To address this, parameter-efficient fine-tuning (PEFT) methods have been developed, but they often underperform compared to full fine-tuning and struggle with memory efficiency. In this work, we introduce Gradient Weight-Normalized Low-Rank Projection (GradNormLoRP), a novel approach that enhances both parameter and memory efficiency while maintaining comparable performance to full fine-tuning. GradNormLoRP normalizes the weight matrix to improve gradient conditioning, facilitating better convergence during optimization. Additionally, it applies low-rank approximations to the weight and gradient matrices, significantly reducing memory usage during training. Extensive experiments demonstrate that our 8-bit GradNormLoRP reduces optimizer memory usage by up to 89.5% and enables the pre-training of large LLMs, such as LLaMA 7B, on consumer-level GPUs like the NVIDIA RTX 4090, without additional inference costs. Moreover, GradNormLoRP outperforms existing low-rank methods in fine-tuning tasks. For instance, when fine-tuning the RoBERTa model on all GLUE tasks with a rank of 8, GradNormLoRP achieves an average score of 80.65, surpassing LoRA's score of 79.23. These results underscore GradNormLoRP as a promising alternative for efficient LLM pre-training and fine-tuning. Source code and Appendix: https://github.com/Jhhuangkay/Gradient-Weight-normalized-Low-rank-Projection-for-Efficient-LLM-Training

Image2Text2Image: A Novel Framework for Label-Free Evaluation of Image-to-Text Generation with Text-to-Image Diffusion Models

Nov 08, 2024

Abstract:Evaluating the quality of automatically generated image descriptions is a complex task that requires metrics capturing various dimensions, such as grammaticality, coverage, accuracy, and truthfulness. Although human evaluation provides valuable insights, its cost and time-consuming nature pose limitations. Existing automated metrics like BLEU, ROUGE, METEOR, and CIDEr attempt to fill this gap, but they often exhibit weak correlations with human judgment. To address this challenge, we propose a novel evaluation framework called Image2Text2Image, which leverages diffusion models, such as Stable Diffusion or DALL-E, for text-to-image generation. In the Image2Text2Image framework, an input image is first processed by a selected image captioning model, chosen for evaluation, to generate a textual description. Using this generated description, a diffusion model then creates a new image. By comparing features extracted from the original and generated images, we measure their similarity using a designated similarity metric. A high similarity score suggests that the model has produced a faithful textual description, while a low score highlights discrepancies, revealing potential weaknesses in the model's performance. Notably, our framework does not rely on human-annotated reference captions, making it a valuable tool for assessing image captioning models. Extensive experiments and human evaluations validate the efficacy of our proposed Image2Text2Image evaluation framework. The code and dataset will be published to support further research in the community.
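The evaluation loop described in this abstract can be sketched as a short pipeline. The sketch assumes cosine similarity as the "designated similarity metric" and treats the captioning model, the text-to-image generator, and the feature extractor as plain callables. The toy stand-ins below are hypothetical placeholders, not real models or library APIs.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def image2text2image_score(image, captioner, generator, encoder):
    """Caption the image, regenerate an image from the caption, compare features."""
    caption = captioner(image)
    regenerated = generator(caption)
    return cosine_similarity(encoder(image), encoder(regenerated))

# Toy stand-ins: an "image" is a list of floats and a "caption" is its text form.
def faithful_captioner(img):
    return " ".join(f"{v:.1f}" for v in img)

def lossy_captioner(img):
    return "1.0 0.0 0.0"          # ignores the input entirely

def toy_generator(text):
    return [float(tok) for tok in text.split()]

def toy_encoder(img):
    return list(img)

image = [0.2, 0.9, 0.4]
faithful = image2text2image_score(image, faithful_captioner, toy_generator, toy_encoder)
lossy = image2text2image_score(image, lossy_captioner, toy_generator, toy_encoder)
```

In this toy setup the captioner that preserves the image content scores higher than the one that discards it, and no reference caption is consulted at any point.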

Parameter-Efficient Fine-Tuning via Selective Discrete Cosine Transform

Oct 09, 2024

Abstract:In the era of large language models, parameter-efficient fine-tuning (PEFT) has been extensively studied. However, these approaches usually rely on the space domain, which encounters storage challenges especially when handling extensive adaptations or larger models. The frequency domain, in contrast, is more effective in compressing trainable parameters while maintaining the expressive capability. In this paper, we propose a novel Selective Discrete Cosine Transformation (sDCTFT) fine-tuning scheme to push this frontier. Its general idea is to exploit the superior energy compaction and decorrelation properties of DCT to improve both model efficiency and accuracy. Specifically, it projects the weight change from the low-rank adaptation into the discrete cosine space. Then, the weight change is partitioned over different levels of the discrete cosine spectrum, and the most critical frequency components in each partition are selected. Extensive experiments on four benchmark datasets demonstrate the superior accuracy, reduced computational cost, and lower storage requirements of the proposed method over the prior art. For instance, when performing instruction tuning on the LLaMA3.1-8B model, sDCTFT outperforms LoRA with just 0.05M trainable parameters compared to LoRA's 38.2M, and surpasses FourierFT with 30% fewer trainable parameters. The source code will be publicly available.
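The energy compaction property that this abstract appeals to can be checked numerically. The sketch below applies an orthonormal 1-D DCT-II to a smooth ramp signal and compares how much energy the four largest DCT coefficients hold against the four largest raw samples. The signal, its length, and the function names are illustrative choices, not taken from the paper.

```python
import math

def dct_1d(x):
    """Orthonormal 1-D DCT-II."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i in range(n)))
    return out

def energy_fraction(values, keep):
    """Share of total squared magnitude held by the `keep` largest entries."""
    total = sum(v * v for v in values)
    top = sorted((v * v for v in values), reverse=True)[:keep]
    return sum(top) / total if total else 0.0

n = 32
ramp = [i / (n - 1) for i in range(n)]        # smooth toy signal
coeffs = dct_1d(ramp)

dct_share = energy_fraction(coeffs, keep=4)   # energy in 4 DCT coefficients
raw_share = energy_fraction(ramp, keep=4)     # energy in 4 raw samples
```

For smooth signals like this one, a handful of low-frequency coefficients carries nearly all of the energy, which is the intuition behind keeping only a few frequency components of a weight change.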

Personalized Video Summarization using Text-Based Queries and Conditional Modeling

Aug 27, 2024

Abstract:The proliferation of video content on platforms like YouTube and Vimeo presents significant challenges in efficiently locating relevant information. Automatic video summarization aims to address this by extracting and presenting key content in a condensed form. This thesis explores enhancing video summarization by integrating text-based queries and conditional modeling to tailor summaries to user needs. Traditional methods often produce fixed summaries that may not align with individual requirements. To overcome this, we propose a multi-modal deep learning approach that incorporates both textual queries and visual information, fusing them at different levels of the model architecture. Evaluation metrics such as accuracy and F1-score assess the quality of the generated summaries. The thesis also investigates improving text-based query representations using contextualized word embeddings and specialized attention networks. This enhances the semantic understanding of queries, leading to better video summaries. To emulate human-like summarization, which accounts for both visual coherence and abstract factors like storyline consistency, we introduce a conditional modeling approach. This method uses multiple random variables and joint distributions to capture key summarization components, resulting in more human-like and explainable summaries. Addressing data scarcity in fully supervised learning, the thesis proposes a segment-level pseudo-labeling approach. This self-supervised method generates additional data, improving model performance even with limited human-labeled datasets. In summary, this research aims to enhance automatic video summarization by incorporating text-based queries, improving query representations, introducing conditional modeling, and addressing data scarcity, thereby creating more effective and personalized video summaries.
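As a small illustration of the F1-score mentioned in this abstract, the sketch below scores a predicted summary against a reference summary, with each summary represented as a set of selected frame indices. Treating summaries as frame-index sets is an assumption made for the example; the thesis may compute the metric at a different granularity.

```python
def f1_score(predicted, reference):
    """F1 between two collections of selected frame indices."""
    pred, ref = set(predicted), set(reference)
    overlap = len(pred & ref)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

predicted_frames = [1, 2, 3, 4]   # toy model output
reference_frames = [3, 4, 5, 6]   # toy human-labeled summary
score = f1_score(predicted_frames, reference_frames)
```

Here half of the predicted frames are correct and half of the reference frames are recovered, so precision and recall are both 0.5.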

A Comparative Analysis of Faithfulness Metrics and Humans in Citation Evaluation

Aug 22, 2024

Abstract:Large language models (LLMs) often generate unsupported or unverifiable content, known as "hallucinations." To address this, retrieval-augmented LLMs are employed to include citations in their output, grounding it in verifiable sources. Despite such developments, manually assessing how well a citation supports the associated statement remains a major challenge. Previous studies tackle this challenge by leveraging faithfulness metrics to estimate citation support automatically. However, they limit this citation support estimation to a binary classification scenario, neglecting fine-grained citation support in practical scenarios. To investigate the effectiveness of faithfulness metrics in fine-grained scenarios, we propose a comparative evaluation framework that assesses how effectively metrics distinguish citations across three support levels: full, partial, and no support. Our framework employs correlation analysis, classification evaluation, and retrieval evaluation to measure the alignment between metric scores and human judgments comprehensively. Our results indicate no single metric consistently excels across all evaluations, highlighting the complexity of accurately evaluating fine-grained support levels. In particular, we find that the best-performing metrics struggle to distinguish partial support from full or no support. Based on these findings, we provide practical recommendations for developing more effective metrics.
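The correlation analysis mentioned in this abstract can be illustrated with a rank correlation between metric scores and human support labels. The sketch assumes Spearman's coefficient with average ranks for ties and encodes the three support levels as 0 (none), 1 (partial), and 2 (full). The choice of coefficient, the encoding, and the toy scores are assumptions for illustration only.

```python
import math

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))

human_labels = [0, 0, 1, 1, 2, 2]                  # none, partial, full support
metric_scores = [0.1, 0.2, 0.5, 0.6, 0.8, 0.9]     # toy faithfulness scores
rho = spearman(human_labels, metric_scores)
```

A high coefficient indicates that the metric orders citations similarly to human annotators, although it does not by itself show that partial support is separated cleanly from the other two levels.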

Beyond Relevant Documents: A Knowledge-Intensive Approach for Query-Focused Summarization using Large Language Models

Aug 19, 2024

Abstract:Query-focused summarization (QFS) is a fundamental task in natural language processing with broad applications, including search engines and report generation. However, traditional approaches assume the availability of relevant documents, which may not always hold in practical scenarios, especially in highly specialized topics. To address this limitation, we propose a novel knowledge-intensive approach that reframes QFS as a knowledge-intensive task setup. This approach comprises two main components: a retrieval module and a summarization controller. The retrieval module efficiently retrieves potentially relevant documents from a large-scale knowledge corpus based on the given textual query, eliminating the dependence on pre-existing document sets. The summarization controller seamlessly integrates a powerful large language model (LLM)-based summarizer with a carefully tailored prompt, ensuring the generated summary is comprehensive and relevant to the query. To assess the effectiveness of our approach, we create a new dataset, along with human-annotated relevance labels, to facilitate comprehensive evaluation covering both retrieval and summarization performance. Extensive experiments demonstrate the superior performance of our approach, particularly its ability to generate accurate summaries without relying on the availability of relevant documents initially. This underscores our method's versatility and practical applicability across diverse query scenarios.
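The two-component design described in this abstract can be sketched as a retrieval step followed by prompt construction. The sketch assumes a simple term-overlap scorer in place of the paper's retrieval module and stops at building the prompt, without calling any LLM. The scoring rule, the prompt wording, and all names are hypothetical.

```python
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query, corpus, top_k=2):
    """Rank documents by term overlap with the query; drop zero-overlap ones."""
    query_terms = Counter(tokenize(query))
    scored = []
    for doc_id, doc in enumerate(corpus):
        doc_terms = Counter(tokenize(doc))
        overlap = sum(min(query_terms[t], doc_terms[t]) for t in query_terms)
        scored.append((overlap, -doc_id, doc))   # -doc_id keeps earlier docs first on ties
    scored.sort(reverse=True)
    return [doc for overlap, _, doc in scored[:top_k] if overlap > 0]

def build_prompt(query, documents):
    """Assemble a query-focused summarization prompt from retrieved documents."""
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(documents))
    return ("Summarize the documents below with respect to the query.\n"
            f"Query: {query}\nDocuments:\n{context}\nSummary:")

corpus = [
    "Retinal disease diagnosis relies on fundus images.",
    "Low-rank adaptation reduces trainable parameters.",
    "Automated diagnosis can reduce ophthalmologist workload.",
]
query = "retinal disease diagnosis"
retrieved = retrieve(query, corpus)
prompt = build_prompt(query, retrieved)
```

The resulting `prompt` string is what a summarization controller would pass to an LLM-based summarizer; a production retriever would typically use a learned or BM25-style scorer over a much larger corpus.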

Automated Retinal Image Analysis and Medical Report Generation through Deep Learning

Aug 14, 2024

Abstract:The increasing prevalence of retinal diseases poses a significant challenge to the healthcare system, as the demand for ophthalmologists surpasses the available workforce. This imbalance creates a bottleneck in diagnosis and treatment, potentially delaying critical care. Traditional methods of generating medical reports from retinal images rely on manual interpretation, which is time-consuming and prone to errors, further straining ophthalmologists' limited resources. This thesis investigates the potential of Artificial Intelligence (AI) to automate medical report generation for retinal images. AI can quickly analyze large volumes of image data, identifying subtle patterns essential for accurate diagnosis. By automating this process, AI systems can greatly enhance the efficiency of retinal disease diagnosis, reducing doctors' workloads and enabling them to focus on more complex cases. The proposed AI-based methods address key challenges in automated report generation: (1) Improved methods for medical keyword representation enhance the system's ability to capture nuances in medical terminology; (2) A multi-modal deep learning approach captures interactions between textual keywords and retinal images, resulting in more comprehensive medical reports; (3) Techniques to enhance the interpretability of the AI-based report generation system, fostering trust and acceptance in clinical practice. These methods are rigorously evaluated using various metrics and achieve state-of-the-art performance. This thesis demonstrates AI's potential to revolutionize retinal disease diagnosis by automating medical report generation, ultimately improving clinical efficiency, diagnostic accuracy, and patient care. [https://github.com/Jhhuangkay/DeepOpht-Medical-Report-Generation-for-Retinal-Images-via-Deep-Models-and-Visual-Explanation]
