Anuj Pathania

MaCP: Minimal yet Mighty Adaptation via Hierarchical Cosine Projection

May 29, 2025

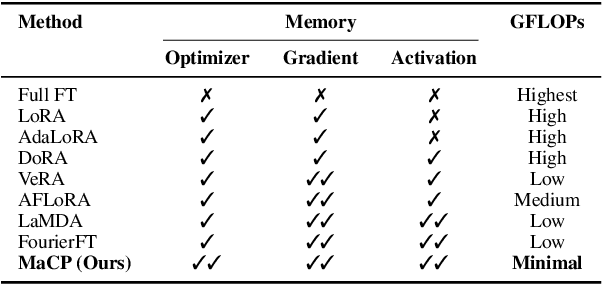

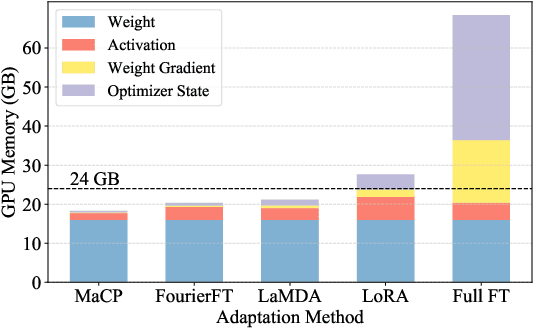

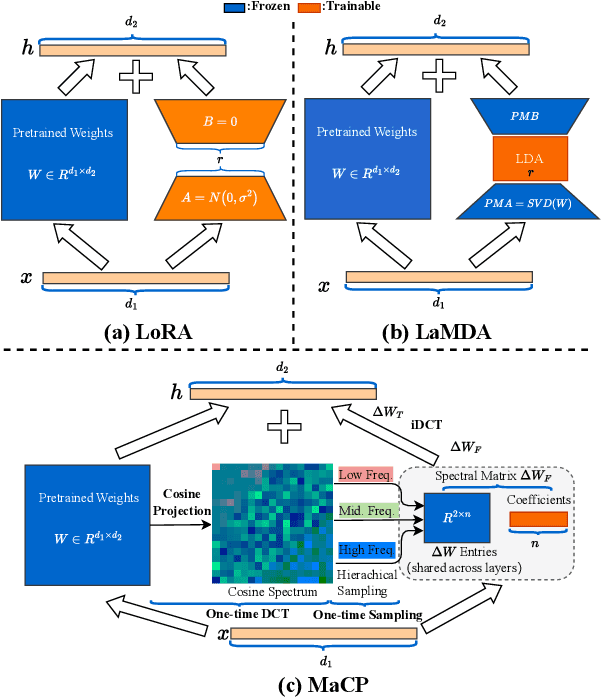

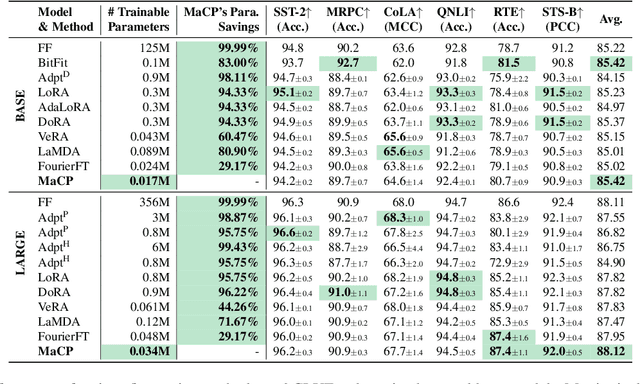

Abstract: We present MaCP (Minimal yet Mighty adaptive Cosine Projection), a new adaptation method that achieves exceptional performance while requiring minimal parameters and memory for fine-tuning large foundation models. Its general idea is to exploit the superior energy compaction and decorrelation properties of cosine projection to improve both model efficiency and accuracy. Specifically, it projects the weight change from the low-rank adaptation into the discrete cosine space. The weight change is then partitioned over different levels of the discrete cosine spectrum, and the most critical frequency components of each partition are selected. Extensive experiments demonstrate the effectiveness of MaCP across a wide range of single-modality tasks, including natural language understanding, natural language generation, and text summarization, as well as multi-modality tasks such as image classification and video understanding. MaCP consistently delivers superior accuracy, significantly reduced computational complexity, and lower memory requirements compared to existing alternatives.
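The projection, partition, and selection steps described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the band rule (grouping coefficients by `i + j`), the number of bands, the per-band budget `k`, and the use of coefficient magnitude as the "criticality" score are all assumptions made for illustration, and the helper names are hypothetical. The sketch also only sparsifies a fixed low-rank update; in the method itself the selected coefficients would presumably be the quantities that are trained.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II basis: row k holds the k-th cosine basis vector."""
    rows = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def dct2(x):
    """2-D DCT of a matrix: C_rows @ x @ C_cols^T."""
    return matmul(matmul(dct_matrix(len(x)), x), transpose(dct_matrix(len(x[0]))))

def idct2(s):
    """Inverse 2-D DCT; valid because the basis is orthonormal."""
    return matmul(matmul(transpose(dct_matrix(len(s))), s), dct_matrix(len(s[0])))

def select_per_band(spectrum, n_bands=3, k=2):
    """Keep the k largest-magnitude coefficients in each frequency band.

    Assumption: a coefficient at (i, j) belongs to a band determined by i + j,
    so band 0 holds the lowest frequencies and the last band the highest.
    """
    rows, cols = len(spectrum), len(spectrum[0])
    max_level = (rows - 1) + (cols - 1)
    bands = [[] for _ in range(n_bands)]
    for i in range(rows):
        for j in range(cols):
            band = (i + j) * n_bands // (max_level + 1)
            bands[band].append((abs(spectrum[i][j]), i, j))
    kept = [[0.0] * cols for _ in range(rows)]
    for entries in bands:
        for _, i, j in sorted(entries, reverse=True)[:k]:
            kept[i][j] = spectrum[i][j]
    return kept

# Made-up rank-1 low-rank update: delta_w = b @ a.
b = [[0.5], [-0.2], [0.1], [0.3]]   # 4 x 1
a = [[0.4, 0.1, -0.3, 0.2]]         # 1 x 4
delta_w = matmul(b, a)

spectrum = dct2(delta_w)             # project into the cosine domain
sparse = select_per_band(spectrum)   # at most n_bands * k coefficients survive
approx_delta_w = idct2(sparse)       # back to the weight domain

kept = sum(1 for row in sparse for v in row if v != 0.0)
print(f"kept {kept} of {len(delta_w) * len(delta_w[0])} coefficients")
```

Selecting per band rather than globally guarantees that every frequency level contributes some coefficients, which seems to be the intent of the hierarchical partitioning.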

SSH: Sparse Spectrum Adaptation via Discrete Hartley Transformation

Feb 08, 2025

Abstract: Low-rank adaptation (LoRA) has proven effective in reducing the number of trainable parameters when fine-tuning a large foundation model. However, it still encounters computational and memory challenges when scaling to larger models or addressing more complex task adaptation. In this work, we introduce Sparse Spectrum Adaptation via Discrete Hartley Transformation (SSH), a novel approach that significantly reduces the number of trainable parameters while enhancing model performance. It selects the most informative spectral components across all layers, guided by the initial weights after a discrete Hartley transformation (DHT). The lightweight inverse DHT then projects the spectrum back into the spatial domain for updates. Extensive experiments across both single-modality tasks, such as language understanding and generation, and multi-modality tasks, such as video-text understanding, demonstrate that SSH outperforms existing parameter-efficient fine-tuning (PEFT) methods while achieving substantial reductions in computational cost and memory requirements.
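As a rough illustration of the idea in this abstract, the sketch below transforms an initial weight matrix with a DHT, picks the positions of its largest-magnitude spectral components, places a few coefficients at those positions, and maps them back with the inverse transform. Several things here are assumptions rather than details from the paper: the separable (row-column) form of the 2-D DHT, top-k magnitude as the selection rule, the single-layer scope, and the made-up coefficient values that stand in for trained parameters. All function names are hypothetical.

```python
import math

def dht_matrix(n):
    """Orthonormal DHT matrix with entries cas(2*pi*k*i/n) / sqrt(n).

    The matrix is symmetric and is its own inverse.
    """
    s = 1.0 / math.sqrt(n)
    return [[s * (math.cos(2 * math.pi * k * i / n) + math.sin(2 * math.pi * k * i / n))
             for i in range(n)] for k in range(n)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def dht2(x):
    """Separable 2-D DHT: H_rows @ x @ H_cols. Applying it twice returns x."""
    return matmul(matmul(dht_matrix(len(x)), x), dht_matrix(len(x[0])))

def top_k_positions(spectrum, k):
    """Positions of the k largest-magnitude spectral components."""
    cells = [(abs(v), i, j) for i, row in enumerate(spectrum) for j, v in enumerate(row)]
    return [(i, j) for _, i, j in sorted(cells, reverse=True)[:k]]

def delta_from_coefficients(rows, cols, positions, values):
    """Place coefficients at the chosen positions and invert the transform."""
    sparse = [[0.0] * cols for _ in range(rows)]
    for (i, j), v in zip(positions, values):
        sparse[i][j] = v
    return dht2(sparse)  # the inverse DHT is the same operation

# Made-up initial weights for a single 4 x 4 layer.
w0 = [[0.2, -0.1, 0.4, 0.0],
      [0.3, 0.1, -0.2, 0.5],
      [-0.4, 0.2, 0.1, 0.3],
      [0.0, -0.3, 0.2, 0.1]]

positions = top_k_positions(dht2(w0), k=3)   # guided by the initial weights
coeffs = [0.05, -0.02, 0.01]                 # stand-ins for trained values
delta_w = delta_from_coefficients(4, 4, positions, coeffs)
w_adapted = [[w + d for w, d in zip(rw, rd)] for rw, rd in zip(w0, delta_w)]
```

Because the DHT is real-valued and self-inverse, the same routine serves as both the forward and the inverse transform, which is one plausible reading of why the abstract calls the inverse step lightweight.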

Parameter-Efficient Fine-Tuning via Selective Discrete Cosine Transform

Oct 09, 2024

Abstract: In the era of large language models, parameter-efficient fine-tuning (PEFT) has been extensively studied. However, these approaches usually rely on the space domain, which encounters storage challenges, especially when handling extensive adaptations or larger models. The frequency domain, in contrast, is more effective in compressing trainable parameters while maintaining the expressive capability. In this paper, we propose a novel Selective Discrete Cosine Transformation (sDCTFT) fine-tuning scheme to push this frontier. Its general idea is to exploit the superior energy compaction and decorrelation properties of DCT to improve both model efficiency and accuracy. Specifically, it projects the weight change from the low-rank adaptation into the discrete cosine space. The weight change is then partitioned over different levels of the discrete cosine spectrum, and the most critical frequency components in each partition are selected. Extensive experiments on four benchmark datasets demonstrate the superior accuracy, reduced computational cost, and lower storage requirements of the proposed method over the prior art. For instance, when performing instruction tuning on the LLaMA3.1-8B model, sDCTFT outperforms LoRA with just 0.05M trainable parameters compared to LoRA's 38.2M, and surpasses FourierFT with 30% fewer trainable parameters. The source code will be publicly available.
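The energy compaction property that the abstract relies on can be seen on a toy signal. The following sketch is only a 1-D illustration with a made-up ramp signal, not an experiment from the paper: it compares how much of the signal's energy is held by the two largest values in the original domain versus the two largest DCT coefficients. The function names are hypothetical.

```python
import math

def dct_1d(x):
    """Orthonormal 1-D DCT-II of a list of floats."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        out.append(scale * sum(v * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i, v in enumerate(x)))
    return out

def energy_fraction(values, keep):
    """Share of total energy held by the `keep` largest-magnitude entries."""
    energies = sorted((v * v for v in values), reverse=True)
    return sum(energies[:keep]) / sum(energies)

ramp = [float(i) for i in range(8)]            # smooth, highly correlated signal
frac_raw = energy_fraction(ramp, keep=2)       # two largest samples
frac_dct = energy_fraction(dct_1d(ramp), keep=2)  # two largest DCT coefficients

print(f"raw domain: {frac_raw:.3f}, DCT domain: {frac_dct:.3f}")
```

For smooth signals like this one, a couple of DCT coefficients carry almost all of the energy, which is the intuition behind training only a small, selected set of frequency components.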

Neural Network Inference on Mobile SoCs

Aug 24, 2019

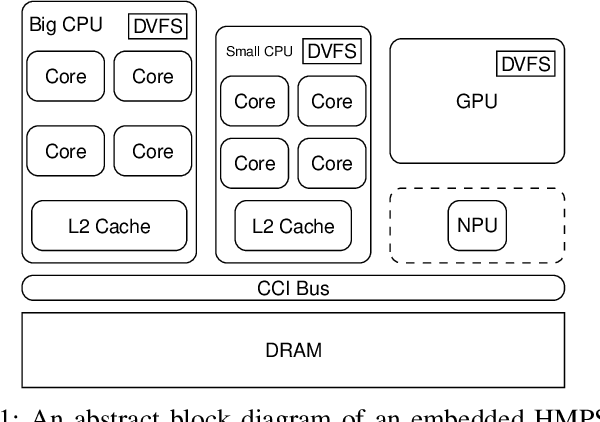

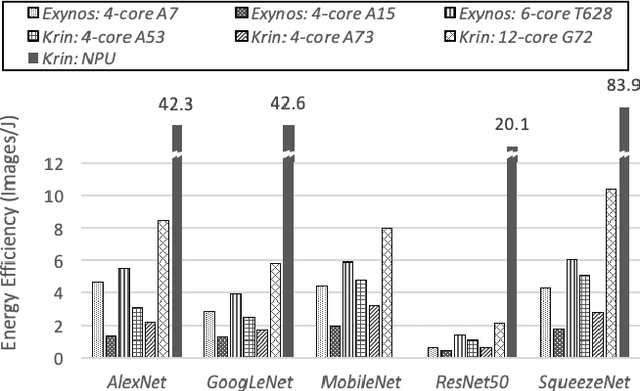

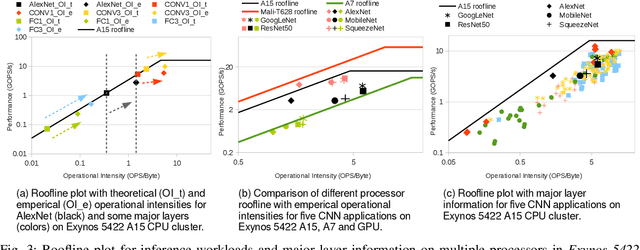

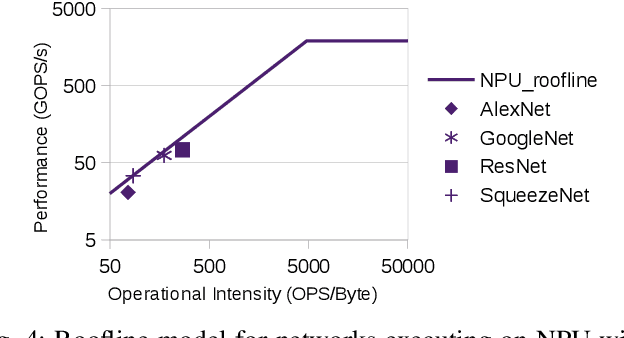

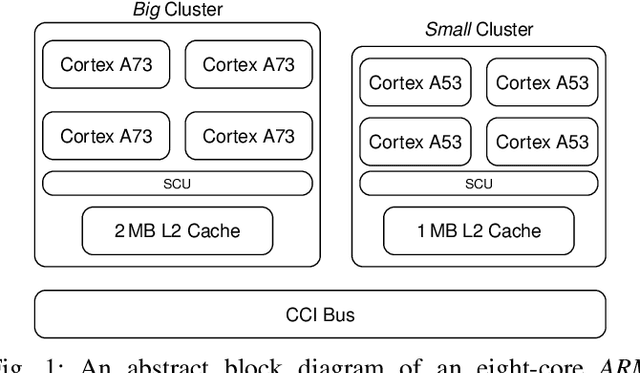

Abstract: The ever-increasing demand from mobile Machine Learning (ML) applications calls for ever more powerful on-chip computing resources. Mobile devices are empowered with Heterogeneous Multi-Processor Systems on Chips (HMPSoCs) to process ML workloads such as Convolutional Neural Network (CNN) inference. HMPSoCs house several different types of ML-capable components on-die, such as CPUs, GPUs, and accelerators. These components can each perform inference independently, but with very different power-performance characteristics. In this article, we provide a quantitative evaluation of the inference capabilities of the different components on HMPSoCs. We also present insights behind their respective power-performance behaviour. Finally, we explore the performance limit of the HMPSoCs by synergistically engaging all the components concurrently.

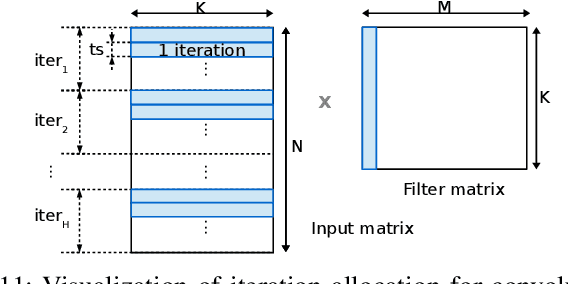

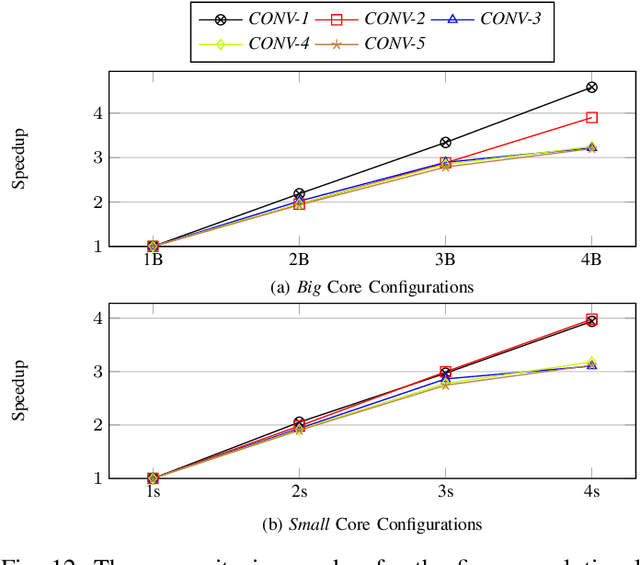

High-Throughput CNN Inference on Embedded ARM big.LITTLE Multi-Core Processors

Mar 14, 2019

Abstract: IoT Edge intelligence requires Convolutional Neural Network (CNN) inference to take place on the edge device itself. The ARM big.LITTLE architecture is at the heart of common commercial edge devices. It comprises single-ISA heterogeneous multi-cores grouped in homogeneous clusters that enable performance and power trade-offs. However, the high communication overhead involved in parallelizing the computation of a convolution kernel across clusters is detrimental to throughput. We present an alternative framework called Pipe-it that employs a pipelined design to split the convolutional layers across clusters while limiting the parallelization of their respective kernels to the assigned clusters. We develop a performance prediction model that, from convolutional layer descriptors, predicts the execution time of each layer individually on all the different core types and core counts. Pipe-it then exploits the predictions to create a balanced pipeline using an efficient design space exploration algorithm. On average, Pipe-it achieves 39% higher throughput than the highest previously achieved throughput.
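The idea of building a balanced pipeline from per-layer time predictions can be sketched as follows. This is a deliberately simplified stand-in, not Pipe-it's design space exploration algorithm: it assumes exactly two pipeline stages (one per cluster), contiguous layer assignment, no inter-stage communication cost, and an exhaustive search over split points. The per-layer times are made-up numbers, and the function name is hypothetical.

```python
def best_two_stage_split(big_ms, little_ms):
    """Find the layer split that minimises the slowest pipeline stage.

    Layers [0, split) run on the big cluster, layers [split, n) on the
    LITTLE cluster. Pipeline throughput is limited by the slower stage.
    Returns (split, bottleneck_ms).
    """
    best = None
    for split in range(len(big_ms) + 1):
        stage_big = sum(big_ms[:split])
        stage_little = sum(little_ms[split:])
        bottleneck = max(stage_big, stage_little)
        if best is None or bottleneck < best[1]:
            best = (split, bottleneck)
    return best

# Hypothetical predicted execution times per convolutional layer (ms).
big_ms = [4.0, 6.0, 5.0, 3.0, 2.0]
little_ms = [9.0, 14.0, 11.0, 7.0, 5.0]

split, bottleneck = best_two_stage_split(big_ms, little_ms)
print(f"run layers 0..{split - 1} on big, the rest on LITTLE; "
      f"bottleneck stage takes {bottleneck} ms")
# → run layers 0..2 on big, the rest on LITTLE; bottleneck stage takes 15.0 ms
```

With these numbers, running everything on the big cluster would take 20 ms per image, whereas the two-stage pipeline is limited by a 15 ms stage, so steady-state throughput improves even though the LITTLE cores are slower on every layer.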
