Jiří Martínek

Well-calibrated Confidence Measures for Multi-label Text Classification with a Large Number of Labels

Dec 14, 2023

Abstract:We extend our previous work on Inductive Conformal Prediction (ICP) for multi-label text classification and present a novel approach for addressing the computational inefficiency of the Label Powerset (LP) ICP, arising when dealing with a high number of unique labels. We present experimental results using the original and the proposed efficient LP-ICP on two English and one Czech language data-sets. Specifically, we apply the LP-ICP on three deep Artificial Neural Network (ANN) classifiers of two types: one based on contextualised (BERT) and two on non-contextualised (word2vec) word-embeddings. In the LP-ICP setting we assign nonconformity scores to label-sets, from which the corresponding p-values and prediction-sets are determined. Our approach deals with the increased computational burden of LP by eliminating from consideration a significant number of label-sets that will surely have p-values below the specified significance level. This dramatically reduces the computational complexity of the approach while fully respecting the standard CP guarantees. Our experimental results show that the contextualised-based classifier surpasses the non-contextualised-based ones and obtains state-of-the-art performance for all data-sets examined. The good performance of the underlying classifiers is carried over to their ICP counterparts without any significant accuracy loss, but with the added benefits of ICP, i.e. the confidence information encapsulated in the prediction sets. We experimentally demonstrate that the resulting prediction sets can be tight enough to be practically useful even though the set of all possible label-sets contains more than $10^{16}$ combinations. Additionally, the empirical error rates of the obtained prediction-sets confirm that our outputs are well-calibrated.
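As a rough illustration of how p-values and prediction-sets follow from nonconformity scores, the sketch below uses the standard ICP p-value formula. The function names, the toy scores, and the representation of label-sets as tuples are assumptions made for illustration; the paper's nonconformity measure and its label-set elimination step are not reproduced here.

```python
import numpy as np

def icp_p_value(calibration_scores, candidate_score):
    """Standard ICP p-value: the fraction of scores (calibration scores plus
    the candidate itself) that are at least as nonconforming as the candidate."""
    cal = np.asarray(calibration_scores, dtype=float)
    return (np.sum(cal >= candidate_score) + 1) / (len(cal) + 1)

def prediction_set(calibration_scores, candidate_scores, significance):
    """Keep every candidate label-set whose p-value exceeds the significance level.

    candidate_scores maps a label-set (hypothetically a tuple of labels)
    to its nonconformity score.
    """
    return [
        label_set
        for label_set, score in candidate_scores.items()
        if icp_p_value(calibration_scores, score) > significance
    ]

# Toy values, purely illustrative.
calibration = [0.1, 0.2, 0.3, 0.4]
candidates = {("sport",): 0.25, ("sport", "politics"): 0.5}
kept = prediction_set(calibration, candidates, significance=0.25)
```

Because the p-value only decreases as the nonconformity score grows, any label-set whose score exceeds a threshold derived from the calibration scores can be discarded without computing its p-value, which is the general kind of shortcut the abstract describes.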

Re-ranking for Writer Identification and Writer Retrieval

Jul 14, 2020

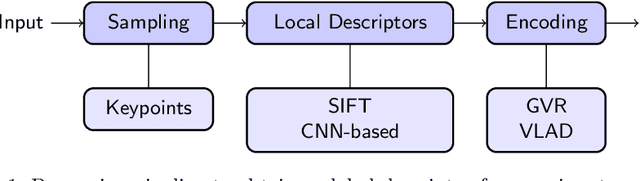

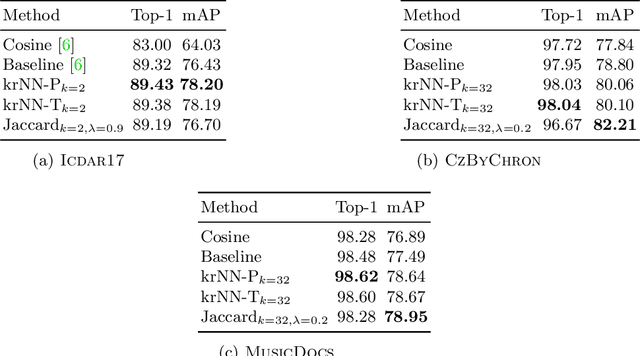

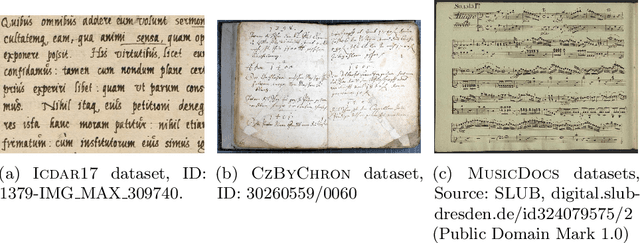

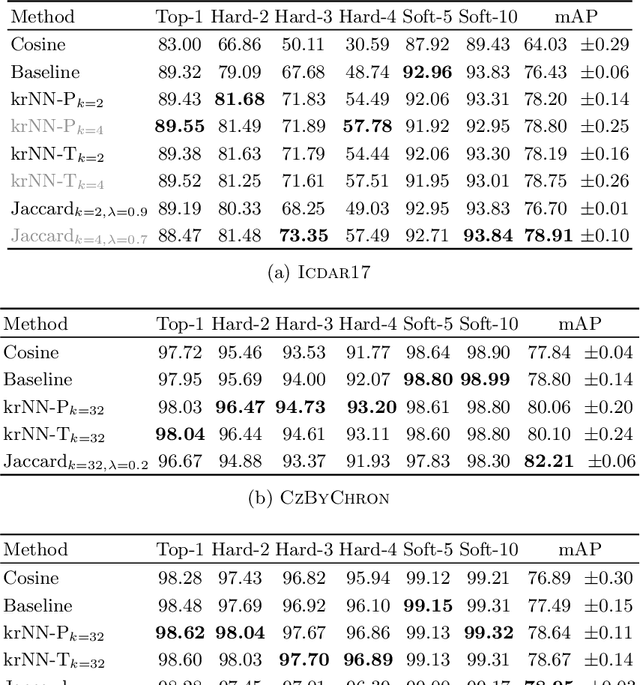

Abstract:Automatic writer identification is a common problem in document analysis. State-of-the-art methods typically focus on the feature extraction step with traditional or deep-learning-based techniques. In retrieval problems, re-ranking is a commonly used technique to improve the results. Re-ranking refines an initial ranking result by using the knowledge contained in the ranked result, e.g., by exploiting nearest neighbor relations. To the best of our knowledge, re-ranking has not been used for writer identification/retrieval. A possible reason might be that publicly available benchmark datasets contain only a few samples per writer, which makes re-ranking less promising. We show that a re-ranking step based on k-reciprocal nearest neighbor relationships is advantageous for writer identification, even if only a few samples per writer are available. We use these reciprocal relationships in two ways: encode them into new vectors, as originally proposed, or integrate them via query expansion. We show that both techniques outperform the baseline results in terms of mAP on three writer identification datasets.
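The k-reciprocal relationship mentioned above can be sketched as follows: sample j is a k-reciprocal neighbor of sample i when each appears among the other's k nearest neighbors. This is a minimal sketch on a precomputed distance matrix; the function name, the exclusion of each sample from its own neighbor list, and the toy data are assumptions, and the encoding and query-expansion steps of the paper are not shown.

```python
import numpy as np

def k_reciprocal_neighbors(dist, k):
    """Return, for each sample i, the set of j such that
    j is in the k-NN of i and i is in the k-NN of j."""
    d = np.asarray(dist, dtype=float).copy()
    np.fill_diagonal(d, np.inf)            # assumption: a sample is not its own neighbor
    knn = np.argsort(d, axis=1)[:, :k]     # indices of the k nearest samples per row
    knn_sets = [set(row.tolist()) for row in knn]
    return [
        {j for j in knn_sets[i] if i in knn_sets[j]}
        for i in range(len(knn_sets))
    ]

# Toy 1-D "descriptors": two tight pairs and one isolated sample.
points = np.array([0.0, 1.0, 10.0, 11.0, 4.0])
dist = np.abs(points[:, None] - points[None, :])
reciprocal = k_reciprocal_neighbors(dist, k=1)
```

In this toy example the isolated sample has a nearest neighbor, but that neighbor does not point back at it, so its reciprocal set is empty. This asymmetry is what makes reciprocal neighbors a stricter signal than plain nearest neighbors.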

Cross-lingual Transfer Learning for Dialogue Act Recognition

May 19, 2020

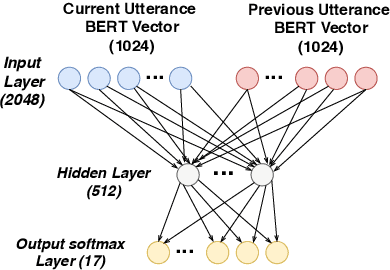

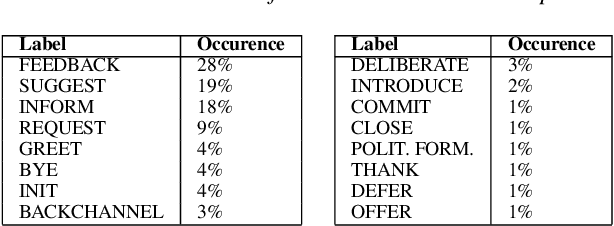

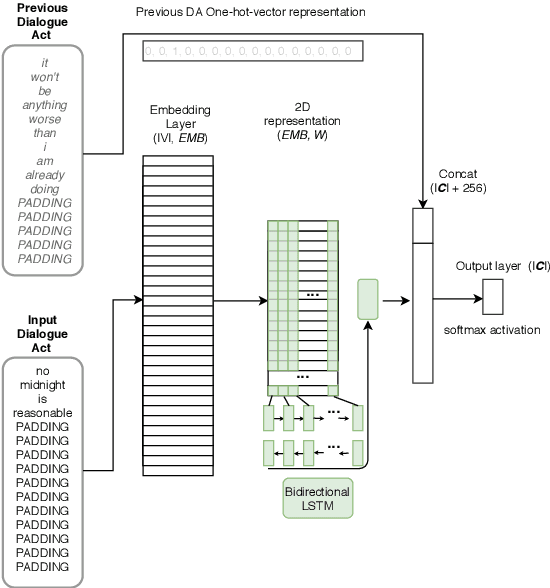

Abstract:This paper deals with cross-lingual transfer learning for dialogue act (DA) recognition. Besides generic contextual information gathered from pre-trained BERT embeddings, our objective is to transfer models trained on a standard English DA corpus to two other languages, German and French, and to potentially very different types of dialogue with different dialogue acts than the standard well-known DA corpora. The proposed approach thus studies the applicability of automatic DA recognition to specific tasks that may not benefit from a large enough number of manual annotations. A key component of our architecture is the automatic translation module, whose limitations are addressed by stacking both foreign and translated word sequences into the same model. We further compare both CNN and multi-head self-attention to compute the speaker turn embeddings and show that in low-resource situations, the best results are obtained by combining all sources of transferred information.

Multi-lingual Dialogue Act Recognition with Deep Learning Methods

Apr 11, 2019

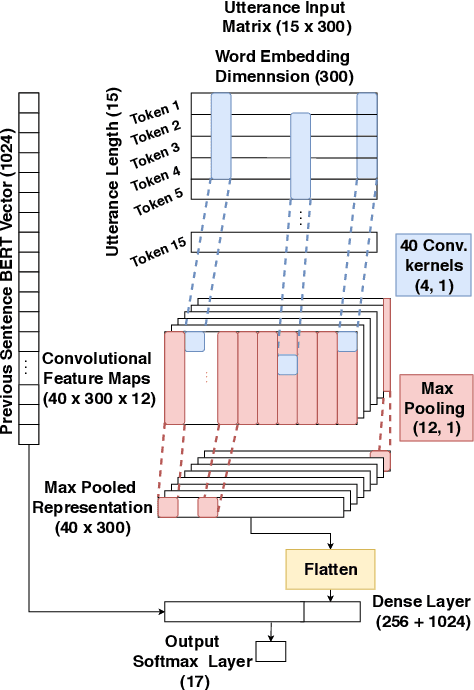

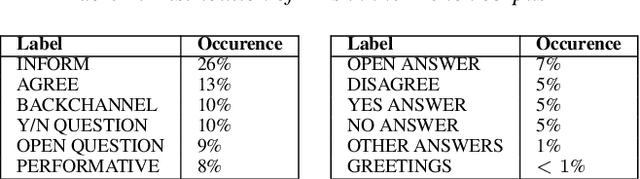

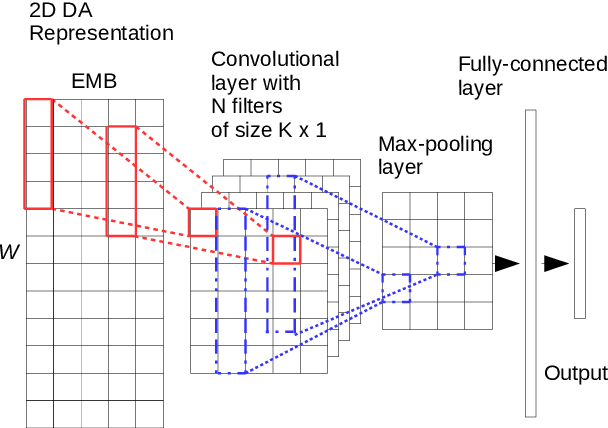

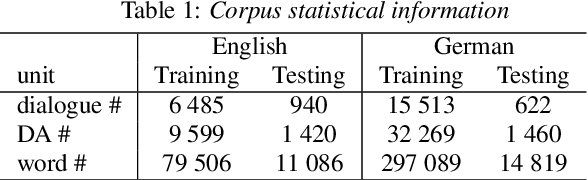

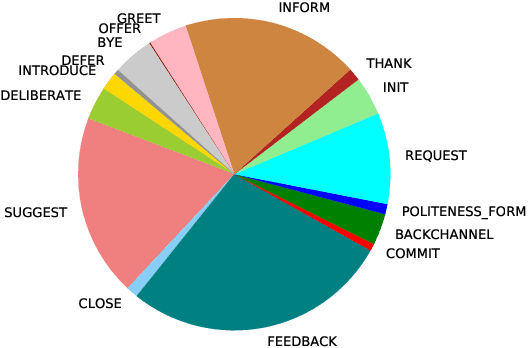

Abstract:This paper deals with multi-lingual dialogue act (DA) recognition. The proposed approaches are based on deep neural networks and use word2vec embeddings for word representation. Two multi-lingual models are proposed for this task. The first approach uses one general model trained on the embeddings from all available languages. The second method trains the model on a single pivot language and a linear transformation method is used to project other languages onto the pivot language. The popular convolutional neural network and LSTM architectures with different set-ups are used as classifiers. To the best of our knowledge this is the first attempt at multi-lingual DA recognition using neural networks. The multi-lingual models are validated experimentally on two languages from the Verbmobil corpus.
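The abstract does not say which linear transformation method is used to project other languages onto the pivot language. As one plausible illustration only, the sketch below fits a least-squares mapping between two embedding spaces from a set of aligned word pairs; the function name, the synthetic data, and the choice of ordinary least squares are all assumptions.

```python
import numpy as np

def fit_linear_projection(source_vecs, pivot_vecs):
    """Least-squares matrix W minimising ||source_vecs @ W - pivot_vecs||.

    Rows of the two arrays are embeddings of aligned (translated) word pairs.
    """
    W, *_ = np.linalg.lstsq(source_vecs, pivot_vecs, rcond=None)
    return W

# Synthetic example: the "pivot" space is an exact linear image of the source space.
rng = np.random.default_rng(0)
source = rng.normal(size=(20, 4))      # 20 word pairs, 4-dimensional embeddings
true_map = rng.normal(size=(4, 4))
pivot = source @ true_map

W = fit_linear_projection(source, pivot)
projected = source @ W                 # source-language words mapped into the pivot space
```

Once such a matrix is fitted, a classifier trained only on pivot-language embeddings can be applied to projected embeddings from the other language, which matches the second multi-lingual set-up the abstract describes.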
