Cross-lingual Transfer Learning for Dialogue Act Recognition


May 19, 2020

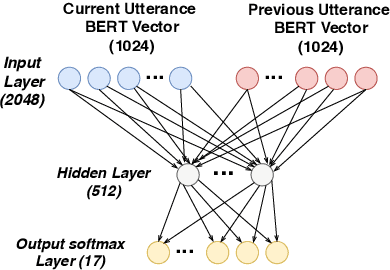

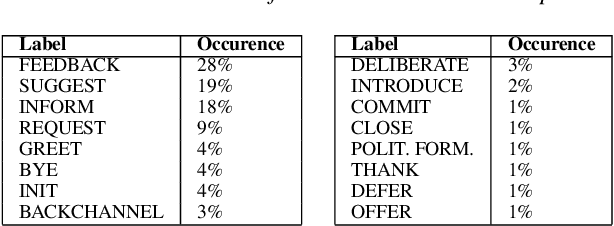

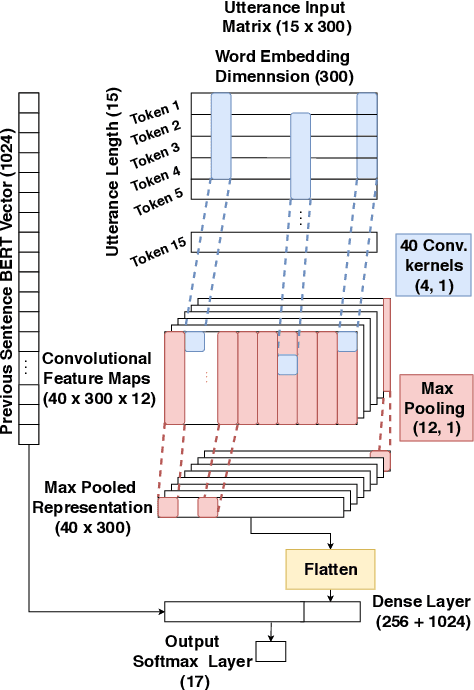

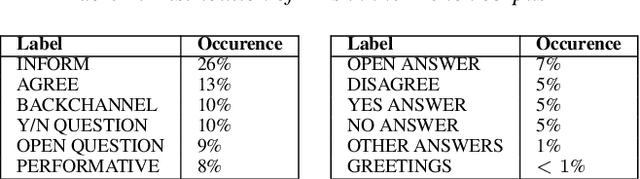

This paper deals with cross-lingual transfer learning for dialogue act (DA) recognition. In addition to exploiting generic contextual information from pre-trained BERT embeddings, we aim to transfer models trained on a standard English DA corpus to two other languages, German and French, and to types of dialogue that may differ substantially from the well-known standard DA corpora, including in their dialogue acts. The proposed approach thus studies the applicability of automatic DA recognition to specific tasks for which too few manual annotations may be available. A key component of our architecture is the automatic translation module, whose limitations are addressed by stacking both the foreign and the translated word sequences into the same model. We further compare CNN and multi-head self-attention for computing the speaker turn embeddings and show that, in low-resource situations, the best results are obtained by combining all sources of transferred information.
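
To make the two ideas in the abstract concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes that "stacking" means concatenating the foreign word vectors with the vectors of their machine translation along the time axis, and it replaces multi-head self-attention with a single head without learned projections. All function names, dimensions, and the random vectors standing in for word embeddings are hypothetical.

```python
import math
import random


def stack_sequences(foreign_vecs, translated_vecs):
    """Assumed form of stacking: concatenate both word-vector sequences in time."""
    return foreign_vecs + translated_vecs


def cnn_turn_embedding(seq, filters):
    """1-D convolution over time with ReLU, then max-pooling: one value per filter."""
    embedding = []
    for filt in filters:  # each filter is a list of `width` weight vectors
        width = len(filt)
        activations = []
        for start in range(len(seq) - width + 1):
            window = seq[start:start + width]
            score = sum(
                w * x
                for weight_vec, word_vec in zip(filt, window)
                for w, x in zip(weight_vec, word_vec)
            )
            activations.append(max(0.0, score))
        embedding.append(max(activations))
    return embedding


def self_attention_turn_embedding(seq):
    """Single-head scaled dot-product self-attention, mean-pooled over positions.

    Simplification: queries, keys and values are the raw input vectors.
    """
    dim = len(seq[0])
    attended = []
    for query in seq:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in seq
        ]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        attended.append(
            [sum(w / total * value[d] for w, value in zip(weights, seq))
             for d in range(dim)]
        )
    return [sum(vec[d] for vec in attended) / len(attended) for d in range(dim)]


# Toy data: random vectors stand in for word embeddings (hypothetical sizes).
random.seed(0)
dim = 4
foreign = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(3)]
translated = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(5)]
stacked = stack_sequences(foreign, translated)

# Six filters of width 2, again with random weights.
filters = [
    [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(2)]
    for _ in range(6)
]
cnn_vec = cnn_turn_embedding(stacked, filters)
att_vec = self_attention_turn_embedding(stacked)
print(len(stacked), len(cnn_vec), len(att_vec))
```

Either turn embedding would then feed a DA classifier. The CNN variant yields one dimension per filter, while the attention variant keeps the input dimensionality.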
