Jeremy Gummeson

FeatureSense: Protecting Speaker Attributes in Always-On Audio Sensing System

May 30, 2025

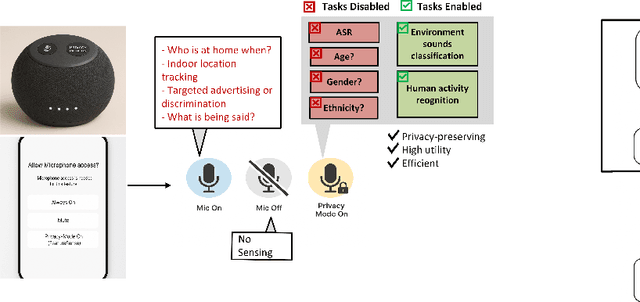

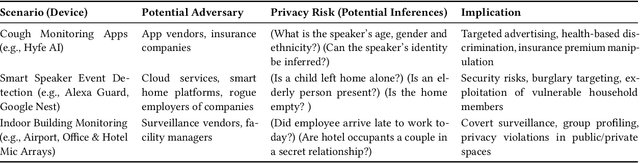

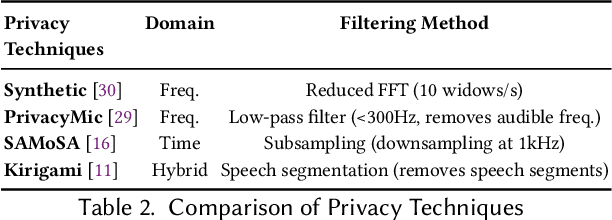

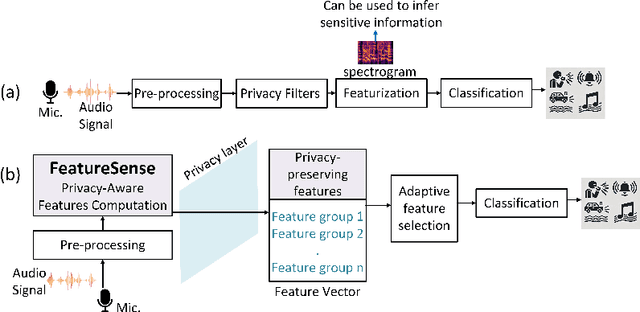

Abstract: Audio is a rich sensing modality that is useful for a variety of human activity recognition tasks. However, the ubiquitous nature of smartphones and smart speakers with always-on microphones has led to numerous privacy concerns and a lack of trust in deploying these audio-based sensing systems. This paper addresses the critical challenge of preserving user privacy when using audio for sensing applications while maintaining utility. While prior work focuses primarily on protecting recoverable speech content, we show that sensitive speaker-specific attributes such as age and gender can still be inferred after masking speech, and we propose a comprehensive privacy evaluation framework to assess this speaker attribute leakage. We design and implement FeatureSense, an open-source library that provides a set of generalizable privacy-aware audio features that can be used for a wide range of sensing applications. We present an adaptive task-specific feature selection algorithm that optimizes the privacy-utility-cost trade-off based on the application requirements. Through our extensive evaluation, we demonstrate the high utility of FeatureSense across a diverse set of sensing tasks. Our system outperforms existing privacy techniques by 60.6% in preserving user-specific privacy. This work provides a foundational framework for ensuring trust in audio sensing by enabling effective privacy-aware audio classification systems.
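The abstract does not spell out how the feature selection algorithm works, so the following is only a minimal sketch of one generic way to trade privacy, utility, and cost against each other: a greedy pick under a cost budget. It is not the FeatureSense algorithm or its API. The `FeatureScore` fields, the `select_features` function, the `leakage_weight` parameter, and all scores are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureScore:
    """Hypothetical per-feature scores; none of these come from the paper."""
    name: str
    utility: float  # e.g. contribution to task accuracy (higher is better)
    leakage: float  # e.g. attacker accuracy on speaker attributes (lower is better)
    cost: float     # e.g. relative compute cost (lower is better)


def select_features(candidates, max_cost, leakage_weight=1.0):
    """Greedily pick features by net benefit (utility minus weighted leakage)
    while the total cost stays within max_cost. A generic heuristic, assumed
    here for illustration only."""
    def net(feature):
        return feature.utility - leakage_weight * feature.leakage

    chosen, spent = [], 0.0
    for feature in sorted(candidates, key=net, reverse=True):
        if net(feature) <= 0:
            break  # remaining features leak more than they help
        if spent + feature.cost <= max_cost:
            chosen.append(feature.name)
            spent += feature.cost
    return chosen


# Made-up scores, purely to exercise the function.
candidates = [
    FeatureScore("feature_a", utility=0.9, leakage=0.8, cost=1.0),
    FeatureScore("feature_b", utility=0.6, leakage=0.1, cost=1.0),
    FeatureScore("feature_c", utility=0.5, leakage=0.1, cost=2.0),
    FeatureScore("feature_d", utility=0.2, leakage=0.5, cost=1.0),
]
selected = select_features(candidates, max_cost=3.0)
```

Raising `leakage_weight` in this sketch makes the selection more conservative about privacy, while `max_cost` stands in for whatever resource limit the application imposes.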

Sonicmesh: Enhancing 3D Human Mesh Reconstruction in Vision-Impaired Environments With Acoustic Signals

Dec 15, 2024

Abstract: 3D Human Mesh Reconstruction (HMR) from 2D RGB images faces challenges in environments with poor lighting, privacy concerns, or occlusions. These weaknesses of RGB imaging can be complemented by acoustic signals, which are widely available, easy to deploy, and capable of penetrating obstacles. However, no existing methods effectively combine acoustic signals with RGB data for robust 3D HMR. The primary challenges include the low-resolution images generated by acoustic signals and the lack of dedicated processing backbones. We introduce SonicMesh, a novel approach combining acoustic signals with RGB images to reconstruct 3D human mesh. To address the challenges of low resolution and the absence of dedicated processing backbones in images generated by acoustic signals, we modify an existing method, HRNet, for effective feature extraction. We also integrate a universal feature embedding technique to enhance the precision of cross-dimensional feature alignment, enabling SonicMesh to achieve high accuracy. Experimental results demonstrate that SonicMesh accurately reconstructs 3D human mesh in challenging environments such as occlusions, non-line-of-sight scenarios, and poor lighting.

Towards Privacy-Preserving Audio Classification Systems

Apr 27, 2024

Abstract: Audio signals can reveal intimate details about a person's life, including their conversations, health status, emotions, location, and personal preferences. Unauthorized access or misuse of this information can have profound personal and social implications. In an era increasingly populated by devices capable of audio recording, safeguarding user privacy is a critical obligation. This work studies the ethical and privacy concerns in current audio classification systems. We discuss the challenges and research directions in designing privacy-preserving audio sensing systems. We propose privacy-preserving audio features that can be used to classify a wide range of audio classes.

FlowSense: Monitoring Airflow in Building Ventilation Systems Using Audio Sensing

Feb 22, 2022

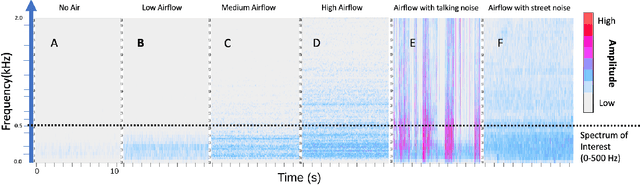

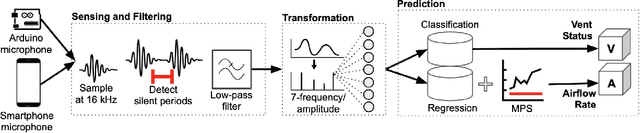

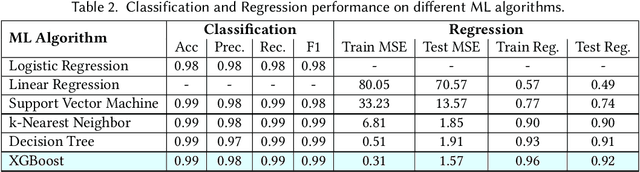

Abstract: Proper indoor ventilation through buildings' heating, ventilation, and air conditioning (HVAC) systems has become an increasing public health concern that significantly impacts individuals' health and safety at home, work, and school. While much work has gone into improving the energy efficiency and user comfort of HVAC systems through IoT devices and mobile-sensing approaches, ventilation has received less attention despite its importance. Motivated by the need to monitor airflow from building ventilation systems with commodity sensing devices, we present FlowSense, a machine learning-based algorithm to predict airflow rate from sensed audio data in indoor spaces. Our ML technique can predict the state of an air vent (whether it is on or off) as well as the rate of air flowing through active vents. By exploiting a low-pass filter to obtain low-frequency audio signals, we put together a privacy-preserving pipeline that leverages a silence detection algorithm to sense sounds of air from an HVAC air vent only when no human speech is detected. We also propose Minimum Persistent Sensing (MPS), a post-processing algorithm that reduces interference from ambient noise, including ongoing human conversation, office machines, and traffic noise. Together, these techniques ensure user privacy and improve the robustness of FlowSense. We validate our approach, which yields over 90% accuracy in predicting vent status and 0.96 MSE in predicting airflow rate when the device is placed within 2.25 meters of an air vent. Additionally, we demonstrate that our approach, as a mobile audio-sensing platform, is robust to smartphone models, distance, and orientation. Finally, we evaluate the FlowSense privacy-preserving pipeline through a user study and a Google Speech Recognition service, confirming that the audio signals we used as input data are inaudible and cannot be reconstructed.
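The pipeline described above (low-pass filtering, then sensing only when no speech is present) can be sketched roughly as follows. This is an illustrative approximation and not the FlowSense implementation: the abstract does not state the filter design, cutoff, or silence detector, so the single-pole filter, the 400 Hz cutoff, the frame length, the energy threshold, and the function names are all assumptions. The energy check on the higher-frequency residual is only a crude stand-in for a silence detection algorithm, and MPS is not modeled here.

```python
import math


def low_pass(samples, sample_rate, cutoff_hz):
    """Single-pole IIR low-pass filter (an assumed, simple filter design)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = dt / (rc + dt)
    out, prev = [], 0.0
    for x in samples:
        prev += alpha * (x - prev)  # move the output toward the new sample
        out.append(prev)
    return out


def rms(frame):
    """Root-mean-square level of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def gated_low_freq_frames(samples, sample_rate, cutoff_hz=400.0,
                          frame_len=1024, residual_threshold=0.1):
    """Return low-passed frames, keeping a frame only when the energy above
    the cutoff is small (a rough proxy for 'no speech detected')."""
    low = low_pass(samples, sample_rate, cutoff_hz)
    kept = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        raw = samples[start:start + frame_len]
        lo = low[start:start + frame_len]
        residual = [r - l for r, l in zip(raw, lo)]  # content above the cutoff
        if rms(residual) < residual_threshold:
            kept.append(lo)
    return kept


# Synthetic input: a 50 Hz hum throughout, plus a 2 kHz tone in the second half.
rate = 16000
signal = []
for n in range(4096):
    t = n / rate
    sample = 0.5 * math.sin(2 * math.pi * 50 * t)
    if n >= 2048:
        sample += 0.5 * math.sin(2 * math.pi * 2000 * t)
    signal.append(sample)

frames = gated_low_freq_frames(signal, rate)
```

With this synthetic input, only the frames without the 2 kHz tone pass the gate, so downstream processing would see low-frequency content from the quiet portion alone.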

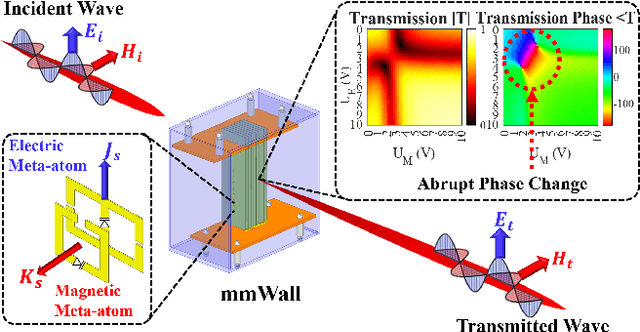

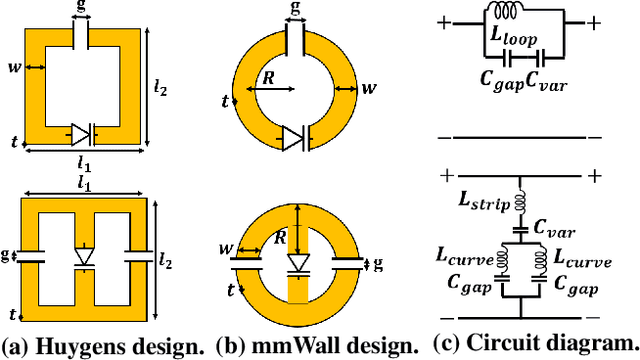

mmWall: A Reconfigurable Metamaterial Surface for mmWave Networks

Feb 02, 2021

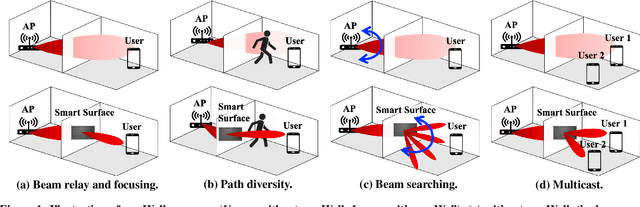

Abstract: To support faster and more efficient networks, mobile operators and service providers are bringing 5G millimeter wave (mmWave) networks indoors. However, due to their high directionality, mmWave links are extremely vulnerable to blockage by walls and human mobility. To address these challenges, we exploit advances in artificially engineered metamaterials, introducing a wall-mounted smart metasurface, called mmWall, that enables a fast mmWave beam relay through the wall and redirects the beam power to another direction when a human body blocks a line-of-sight path. Moreover, mmWall supports multiple users and fast beam alignment by generating multi-armed beams. We sketch the design of a real-time system by considering (1) how to design a programmable, metamaterial-based surface that refracts the incoming signal to one or more arbitrary directions, and (2) how to split an incoming mmWave beam into multiple outgoing beams and arbitrarily control the beam energy between these beams. Preliminary results show that the mmWall metasurface steers the outgoing beam across a full 360 degrees, with an 89.8% single-beam efficiency and 74.5% double-beam efficiency.