Jens Barth

Robust and Efficient Writer-Independent IMU-Based Handwriting Recognition

Feb 28, 2025

Abstract:Online handwriting recognition (HWR) using data from inertial measurement units (IMUs) remains challenging due to variations in writing styles and the limited availability of high-quality annotated datasets. Traditional models often struggle to recognize handwriting from unseen writers, making writer-independent (WI) recognition a crucial but difficult problem. This paper presents an HWR model with an encoder-decoder structure for IMU data, featuring a CNN-based encoder for feature extraction and a BiLSTM decoder for sequence modeling, which supports inputs of varying lengths. Our approach demonstrates strong robustness and data efficiency, outperforming existing methods on WI datasets, including the WI split of the OnHW dataset and our own dataset. Extensive evaluations show that our model maintains high accuracy across different age groups and writing conditions while effectively learning from limited data. Through comprehensive ablation studies, we analyze key design choices, achieving a balance between accuracy and efficiency. These findings contribute to the development of more adaptable and scalable HWR systems for real-world applications.

Benchmarking Online Sequence-to-Sequence and Character-based Handwriting Recognition from IMU-Enhanced Pens

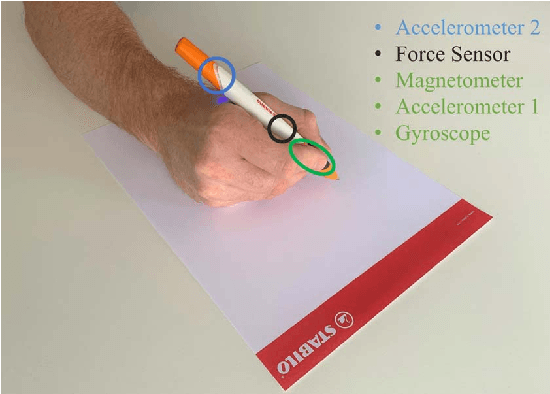

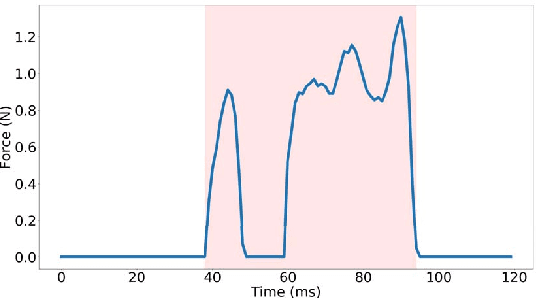

Feb 14, 2022

Abstract:Handwriting is one of the most frequently occurring patterns in everyday life and with it come challenging applications such as handwriting recognition (HWR), writer identification, and signature verification. In contrast to offline HWR that only uses spatial information (i.e., images), online HWR (OnHWR) uses richer spatio-temporal information (i.e., trajectory data or inertial data). While there exist many offline HWR datasets, little data is available for the development of OnHWR methods as it requires hardware-integrated pens. This paper presents data and benchmark models for real-time sequence-to-sequence (seq2seq) learning and single character-based recognition. Our data is recorded by a sensor-enhanced ballpoint pen, yielding sensor data streams from triaxial accelerometers, a gyroscope, a magnetometer and a force sensor at 100 Hz. We propose a variety of datasets including equations and words for both the writer-dependent and writer-independent tasks. We provide an evaluation benchmark for seq2seq and single character-based HWR using recurrent and temporal convolutional networks and Transformers combined with a connectionist temporal classification (CTC) loss and cross entropy losses. Our methods do not resort to language or lexicon models.

Digitizing Handwriting with a Sensor Pen: A Writer-Independent Recognizer

Jul 08, 2021

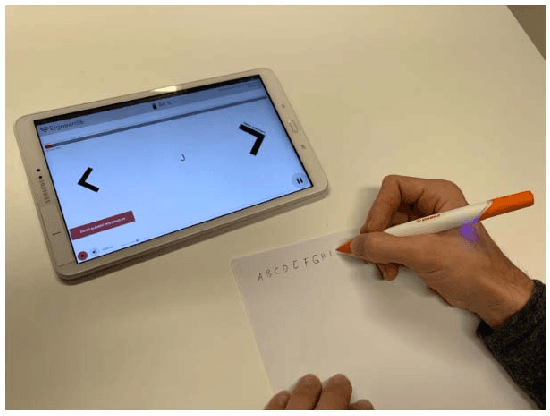

Abstract:Online handwriting recognition has been studied for a long time with only a few practicable results when writing on normal paper. Previous approaches using sensor-based devices encountered problems that limited the usage of the developed systems in real-world applications. This paper presents a writer-independent system that recognizes characters written on plain paper with the use of a sensor-equipped pen. This system is applicable in real-world applications and requires no user-specific training for recognition. The pen provides linear acceleration, angular velocity, magnetic field, and force applied by the user, and acts as a digitizer that transforms the analogue signals of the sensors into time-series data while writing on regular paper. The dataset we collected with this pen consists of Latin lower-case and upper-case alphabets. We present the results of a convolutional neural network model for letter classification and show that this approach is practical and achieves promising results for writer-independent character recognition. This work aims at providing a real-time handwriting recognition system to be used for writing on normal paper.

Towards an IMU-based Pen Online Handwriting Recognizer

May 26, 2021

Abstract:Most online handwriting recognition systems require the use of specific writing surfaces to extract positional data. In this paper we present an online handwriting recognition system for word recognition which is based on inertial measurement units (IMUs) for digitizing text written on paper. This is obtained by means of a sensor-equipped pen that provides acceleration, angular velocity, and magnetic forces streamed via Bluetooth. Our model combines convolutional and bidirectional LSTM networks, and is trained with the Connectionist Temporal Classification loss that allows the interpretation of raw sensor data into words without the need for sequence segmentation. We use a dataset of words collected using multiple sensor-enhanced pens and evaluate our model on distinct test sets of seen and unseen words, achieving a character error rate of 17.97% and 17.08%, respectively, without the use of a dictionary or language model.

Stride Length Estimation with Deep Learning

Mar 09, 2017

Abstract:Accurate estimation of spatial gait characteristics is critical to assess motor impairments resulting from neurological or musculoskeletal disease. Currently, however, methodological constraints limit clinical applicability of state-of-the-art double integration approaches to gait patterns with a clear zero-velocity phase. We describe a novel approach to stride length estimation that uses deep convolutional neural networks to map stride-specific inertial sensor data to the resulting stride length. The model is trained on a publicly available and clinically relevant benchmark dataset consisting of 1220 strides from 101 geriatric patients. Evaluation is done in a 10-fold cross validation and for three different stride definitions. Even though best results are achieved with strides defined from mid-stance to mid-stance with average accuracy and precision of 0.01 $\pm$ 5.37 cm, performance does not strongly depend on stride definition. The achieved precision outperforms state-of-the-art methods evaluated on this benchmark dataset by 3.0 cm (36%). Due to the independence of stride definition, the proposed method is not subject to the methodological constraints that limit applicability of state-of-the-art double integration methods. Furthermore, precision on the benchmark dataset could be improved. With more precise mobile stride length estimation, new insights to the progression of neurological disease or early indications might be gained. Due to the independence of stride definition, previously uncharted diseases in terms of mobile gait analysis can now be investigated by re-training and applying the proposed method.
