Jenhao Hsiao

Catch Missing Details: Image Reconstruction with Frequency Augmented Variational Autoencoder

May 04, 2023

Abstract: The popular VQ-VAE models reconstruct images by learning a discrete codebook, but their reconstruction quality degrades rapidly as the compression rate rises. One major reason is that a higher compression rate induces more loss of visual signals in the higher-frequency spectrum, which reflects the details in pixel space. In this paper, a Frequency Complement Module (FCM) architecture is proposed to capture the missing frequency information and enhance reconstruction quality. The FCM can be easily incorporated into the VQ-VAE structure, and we refer to the new model as Frequency Augmented VAE (FA-VAE). In addition, a Dynamic Spectrum Loss (DSL) is introduced to guide the FCMs to balance dynamically between various frequencies for optimal reconstruction. FA-VAE is further extended to the text-to-image synthesis task, and a Cross-attention Autoregressive Transformer (CAT) is proposed to obtain more precise semantic attributes from texts. Extensive reconstruction experiments with different compression rates are conducted on several benchmark datasets, and the results demonstrate that the proposed FA-VAE restores details more faithfully than SOTA methods. CAT also shows improved generation quality with better image-text semantic alignment.
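The claim that stronger compression mostly removes high-frequency content can be illustrated with a toy 1D signal. The sketch below is not FA-VAE, FCM, or DSL; it only assumes a crude stand-in for compression (averaging adjacent samples, then repeating them to restore the length), and all function names are hypothetical.

```python
import cmath
import math


def dft_magnitude(signal, k):
    """Magnitude of the k-th discrete Fourier coefficient of a real signal."""
    n = len(signal)
    return abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                   for i, x in enumerate(signal)))


def compress_and_restore(signal):
    """Toy 2x 'compression': average adjacent pairs, then repeat each mean.

    This is an assumed stand-in for a lossy encoder/decoder, not a VQ-VAE.
    """
    restored = []
    for i in range(0, len(signal), 2):
        mean = (signal[i] + signal[i + 1]) / 2
        restored.extend([mean, mean])
    return restored


N = 16
# Low-frequency component (bin 1) plus a high-frequency component (bin N/2).
signal = [math.cos(2 * math.pi * i / N) + 0.5 * math.cos(math.pi * i)
          for i in range(N)]
restored = compress_and_restore(signal)

high_before = dft_magnitude(signal, N // 2)
high_after = dft_magnitude(restored, N // 2)
low_before = dft_magnitude(signal, 1)
low_after = dft_magnitude(restored, 1)

print(f"high-frequency bin: {high_before:.3f} -> {high_after:.3f}")
print(f"low-frequency bin:  {low_before:.3f} -> {low_after:.3f}")
```

In this toy setting the high-frequency bin is wiped out while the low-frequency bin is only mildly attenuated, which is the kind of gap a frequency-aware module or loss would aim to close.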

VideoXum: Cross-modal Visual and Textural Summarization of Videos

Mar 21, 2023

Abstract: Video summarization aims to distill the most important information from a source video to produce either an abridged clip or a textual narrative. Traditionally, different methods have been proposed depending on whether the output is a video or text, thus ignoring the correlation between the two semantically related tasks of visual summarization and textual summarization. We propose a new joint video and text summarization task. The goal is to generate both a shortened video clip and the corresponding textual summary from a long video, collectively referred to as a cross-modal summary. The generated shortened video clip and text narratives should be semantically well aligned. To this end, we first build a large-scale human-annotated dataset -- VideoXum (X refers to different modalities). The dataset is reannotated based on ActivityNet. After we filter out the videos that do not meet the length requirements, 14,001 long videos remain in our new dataset. Each video in our reannotated dataset has human-annotated video summaries and the corresponding narrative summaries. We then design a novel end-to-end model -- VTSUM-BILP to address the challenges of our proposed task. Moreover, we propose a new metric called VT-CLIPScore to help evaluate the semantic consistency of cross-modal summaries. The proposed model achieves promising performance on this new task and establishes a benchmark for future research.

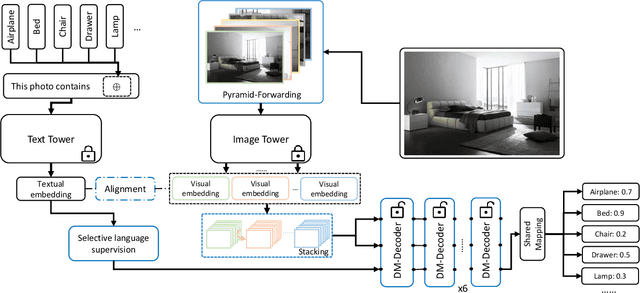

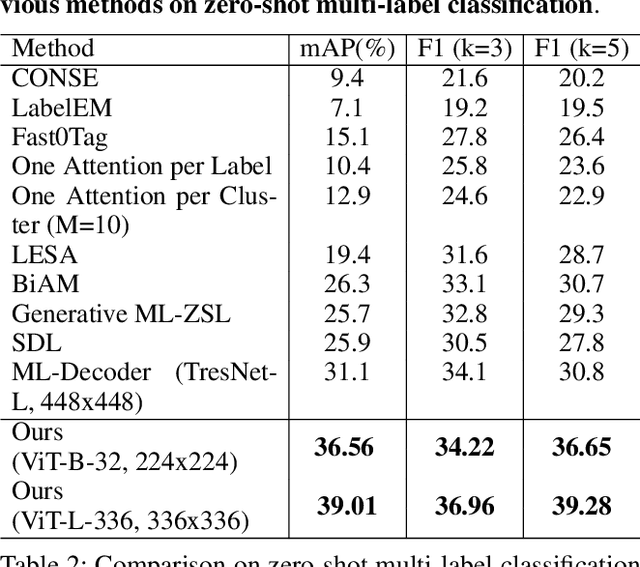

A Dual Modality Approach For Multi-Label Classification

Aug 19, 2022

Abstract: In computer vision, multi-label classification, including zero-shot multi-label classification, is an important task with many real-world applications. In this paper, we propose a novel algorithm, Aligned Dual moDality ClaSsifier (ADDS), which includes a Dual-Modal decoder (DM-decoder) with alignment between visual and textual features, for multi-label classification tasks. Moreover, we design a simple yet effective method called Pyramid-Forwarding to enhance performance on high-resolution inputs. Extensive experiments conducted on standard multi-label benchmark datasets, MS-COCO and NUS-WIDE, demonstrate that our approach significantly outperforms previous methods and provides state-of-the-art performance for conventional multi-label classification, zero-shot multi-label classification, and an extreme case called single-to-multi label classification, where models trained on single-label datasets (ImageNet-1k, ImageNet-21k) are tested on multi-label ones (MS-COCO and NUS-WIDE). We also analyze how visual-textual alignment contributes to the proposed approach, validate the significance of the DM-decoder, and demonstrate the effectiveness of Pyramid-Forwarding on vision transformers.

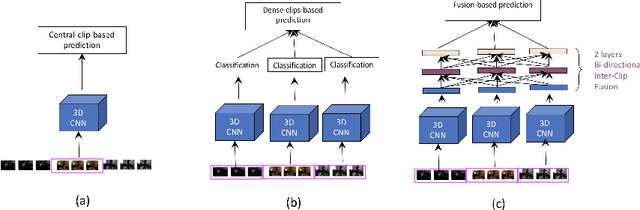

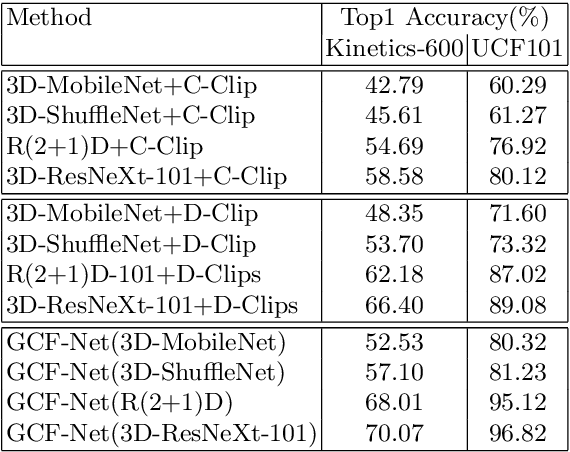

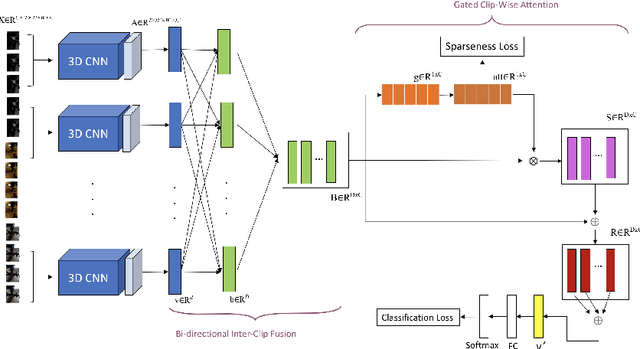

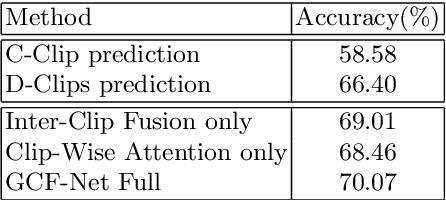

GCF-Net: Gated Clip Fusion Network for Video Action Recognition

Feb 02, 2021

Abstract: In recent years, most of the accuracy gains for video action recognition have come from newly designed CNN architectures (e.g., 3D-CNNs). These models are trained by applying a deep CNN to a single clip of fixed temporal length. Since each video segment is processed by the 3D-CNN module separately, the corresponding clip descriptor is local and the inter-clip relationships are inherently implicit. The common method of directly averaging the clip-level outputs into a video-level prediction is prone to fail because it lacks a mechanism that can extract and integrate relevant information to represent the video. In this paper, we introduce the Gated Clip Fusion Network (GCF-Net), which can greatly boost existing video action classifiers at the cost of a tiny computation overhead. The GCF-Net explicitly models the inter-dependencies between video clips to strengthen the receptive field of local clip descriptors. Furthermore, the importance of each clip to an action event is calculated, and a relevant subset of clips is selected accordingly for video-level analysis. On a large benchmark dataset (Kinetics-600), the proposed GCF-Net elevates the accuracy of existing action classifiers by 11.49% (based on central clip) and 3.67% (based on densely sampled clips), respectively.
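The contrast between plain averaging of clip-level outputs and importance-weighted fusion can be sketched in a few lines. This is a minimal illustration, not the GCF-Net architecture: the relevance scores are set by hand here, whereas the abstract describes them as computed by the network, and every name below is hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def average_fusion(clip_scores):
    """Baseline: average per-class scores over all clips."""
    num_clips = len(clip_scores)
    num_classes = len(clip_scores[0])
    return [sum(clip[c] for clip in clip_scores) / num_clips
            for c in range(num_classes)]


def weighted_fusion(clip_scores, relevance_logits):
    """Weight each clip by a softmax over assumed per-clip relevance logits."""
    weights = softmax(relevance_logits)
    num_classes = len(clip_scores[0])
    return [sum(w * clip[c] for w, clip in zip(weights, clip_scores))
            for c in range(num_classes)]


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


# Three clips, two classes. Only the first clip shows the action (class 0);
# the other two are background clips that lean toward class 1.
clip_scores = [[0.9, 0.1], [0.2, 0.8], [0.3, 0.7]]
relevance_logits = [4.0, 0.0, 0.0]  # hand-set for illustration only

avg_pred = argmax(average_fusion(clip_scores))
gated_pred = argmax(weighted_fusion(clip_scores, relevance_logits))
print("average fusion predicts class", avg_pred)
print("weighted fusion predicts class", gated_pred)
```

With these made-up numbers, averaging is outvoted by the two background clips, while the weighted variant follows the one clip marked as relevant.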
