Jasper S. Wijnands

Urban feature analysis from aerial remote sensing imagery using self-supervised and semi-supervised computer vision

Aug 17, 2022

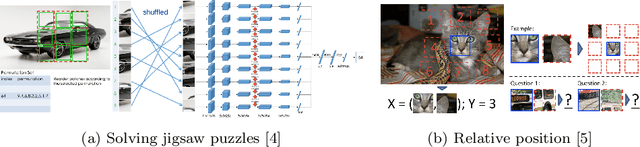

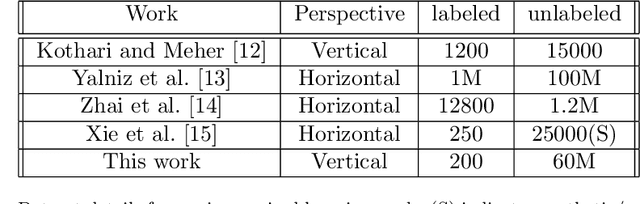

Abstract: Analysis of overhead imagery using computer vision is a problem that has received considerable attention in academic literature. Most techniques that operate in this space are highly specialised and require expensive manual annotation of large datasets. These problems are addressed here through the development of a more generic framework, incorporating advances in representation learning that allow for more flexibility in analysing new categories of imagery with limited labeled data. First, a robust representation of an unlabeled aerial imagery dataset was created based on the momentum contrast mechanism. This was subsequently specialised for different tasks by building accurate classifiers with as few as 200 labeled images. The successful low-level detection of urban infrastructure evolution over a 10-year period from 60 million unlabeled images exemplifies the substantial potential of our approach to advance quantitative urban research.
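
To illustrate the momentum contrast mechanism named in the abstract, the following is a minimal sketch, not the authors' implementation. It shows the two ingredients usually associated with momentum contrast: a key encoder updated as a moving average of the query encoder, and a contrastive (InfoNCE) loss over one positive key and a queue of negatives. The function names, the flat parameter lists, and the default values `m=0.999` and `temperature=0.07` are assumptions made for illustration and do not come from the abstract.

```python
import math


def momentum_update(query_params, key_params, m=0.999):
    """Return new key-encoder parameters as m * key + (1 - m) * query."""
    return [m * k + (1.0 - m) * q for q, k in zip(query_params, key_params)]


def info_nce_loss(q, k_pos, queue, temperature=0.07):
    """Contrastive loss for one query: one positive key against queued negatives.

    q, k_pos and every entry of queue are feature vectors (lists of floats),
    assumed to be L2-normalised by the caller.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    # The positive logit sits at index 0, followed by the negatives.
    logits = [dot(q, k_pos) / temperature]
    logits += [dot(q, k) / temperature for k in queue]

    # Numerically stable log-sum-exp, then cross-entropy with target index 0.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(v - top) for v in logits))
    return log_sum - logits[0]
```

The loss is small when the query is closer to its positive key than to the queued negatives, which is the property that lets a representation be learned from unlabeled images before a small labeled set is used for classification.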

Self-Supervision, Remote Sensing and Abstraction: Representation Learning Across 3 Million Locations

Mar 08, 2022

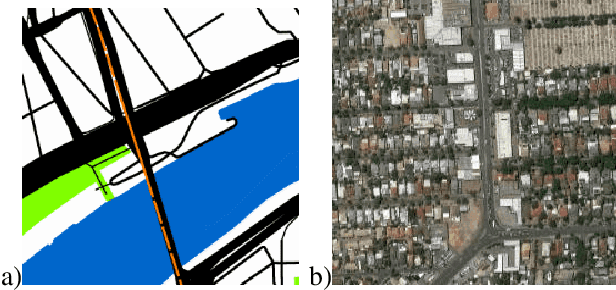

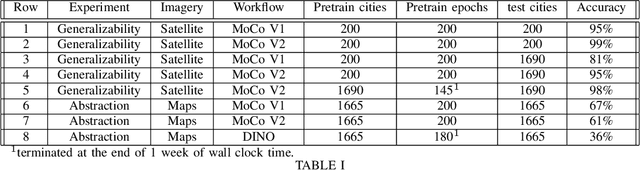

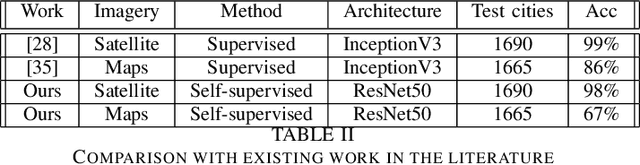

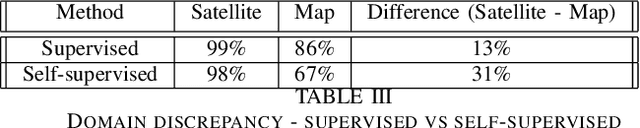

Abstract: Self-supervision based deep learning classification approaches have received considerable attention in academic literature. However, the performance of such methods on remote sensing imagery domains remains under-explored. In this work, we explore contrastive representation learning methods on the task of imagery-based city classification, an important problem in urban computing. We use satellite and map imagery across 2 domains, 3 million locations and more than 1500 cities. We show that self-supervised methods can build a generalizable representation from as few as 200 cities, with representations achieving over 95% accuracy in unseen cities with minimal additional training. We also find that the performance discrepancy of such methods, when compared to supervised methods, induced by the domain discrepancy between natural imagery and abstract imagery is significant for remote sensing imagery. We compare all analysis against existing supervised models from academic literature and open-source our models for broader usage and further criticism.

Identifying safe intersection design through unsupervised feature extraction from satellite imagery

Oct 29, 2020

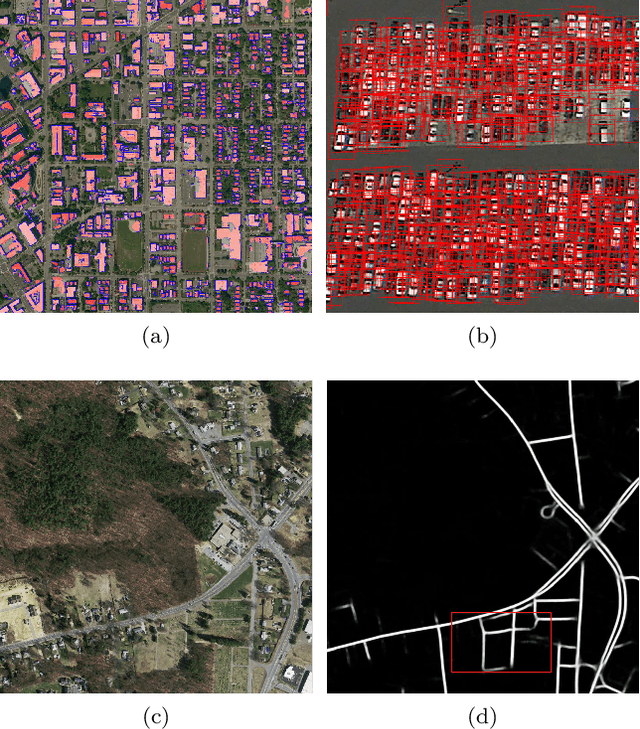

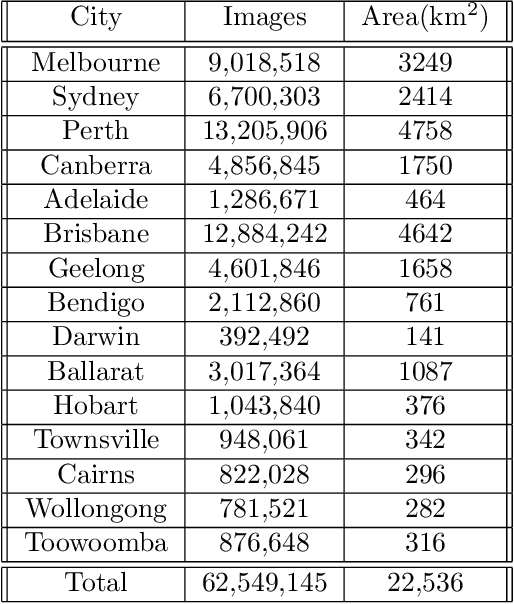

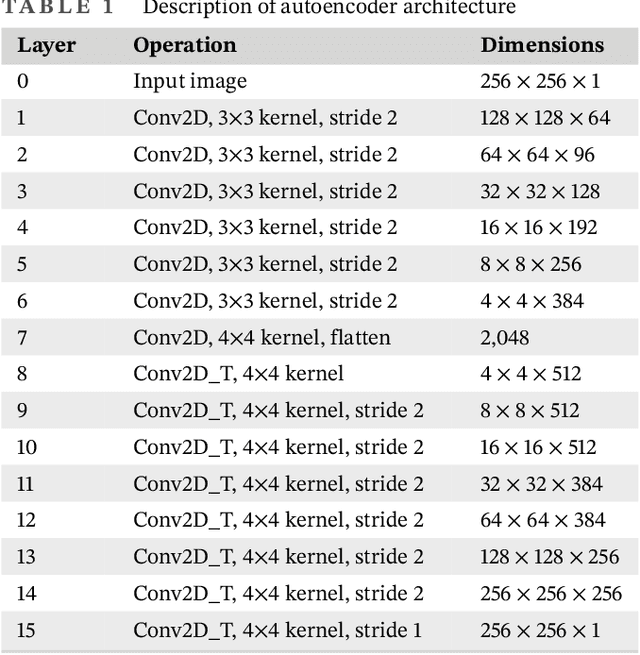

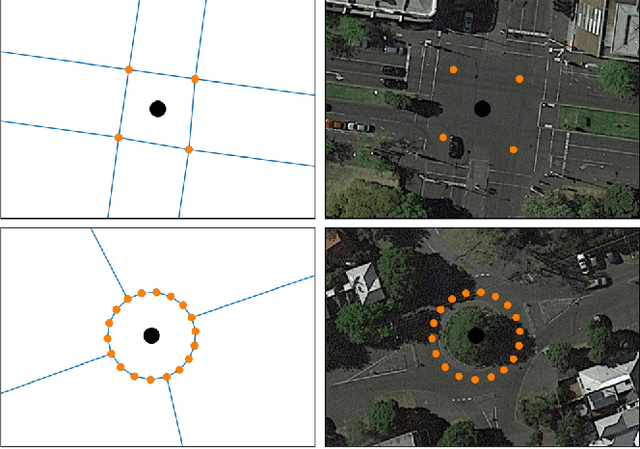

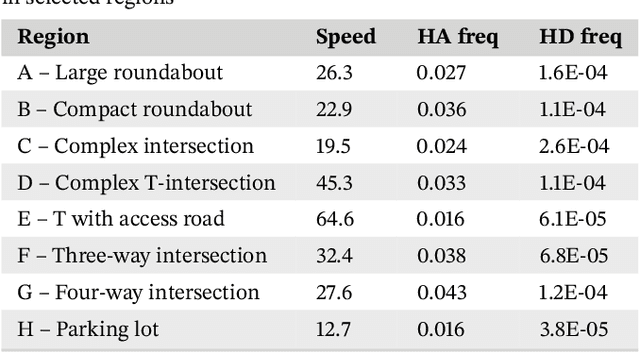

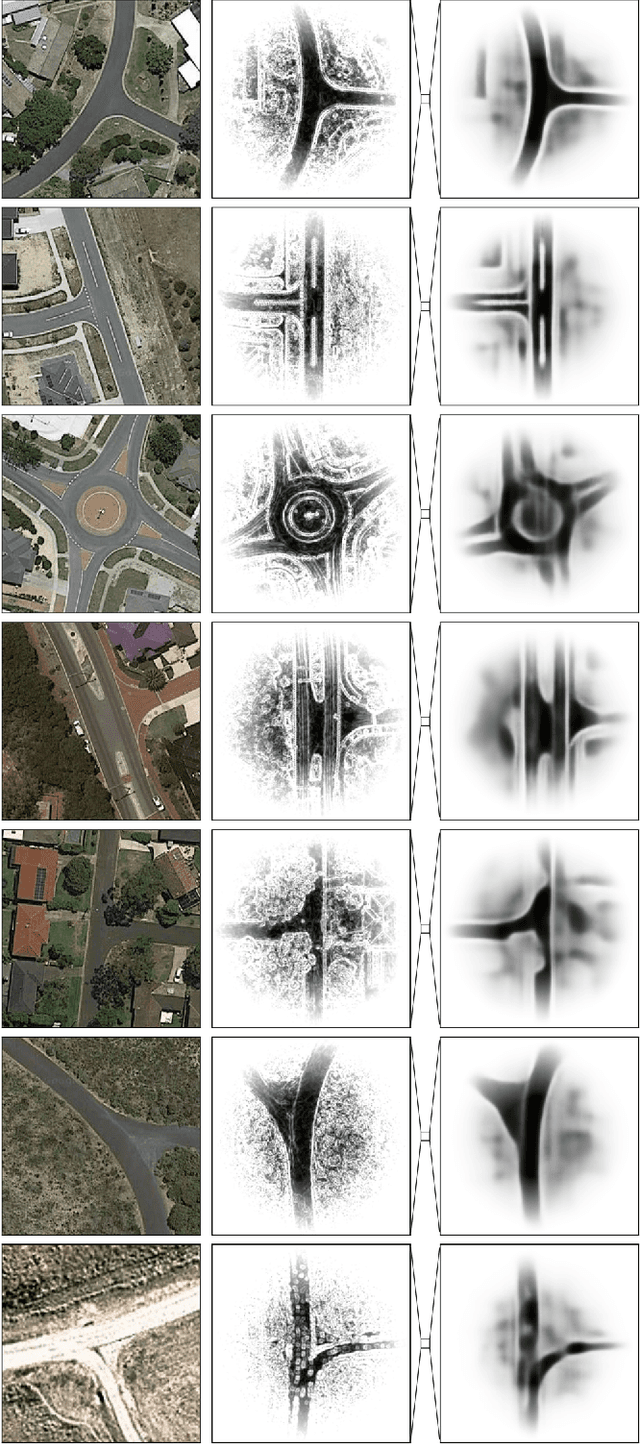

Abstract: The World Health Organization has listed the design of safer intersections as a key intervention to reduce global road trauma. This article presents the first study to systematically analyze the design of all intersections in a large country, based on aerial imagery and deep learning. Approximately 900,000 satellite images were downloaded for all intersections in Australia and customized computer vision techniques emphasized the road infrastructure. A deep autoencoder extracted high-level features, including the intersection's type, size, shape, lane markings, and complexity, which were used to cluster similar designs. An Australian telematics data set linked infrastructure design to driving behaviors captured during 66 million kilometers of driving. This showed more frequent hard acceleration events (per vehicle) at four- than three-way intersections, relatively low hard deceleration frequencies at T-intersections, and consistently low average speeds on roundabouts. Overall, domain-specific feature extraction enabled the identification of infrastructure improvements that could result in safer driving behaviors, potentially reducing road trauma.

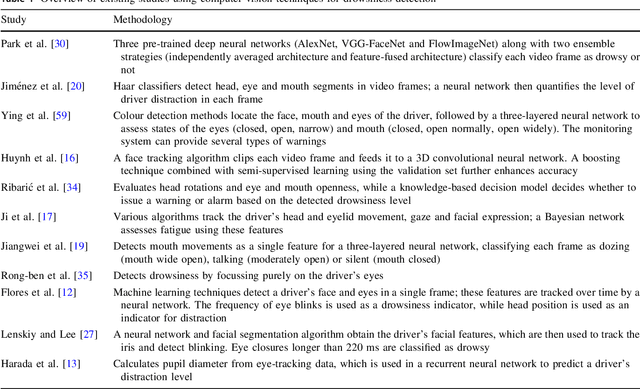

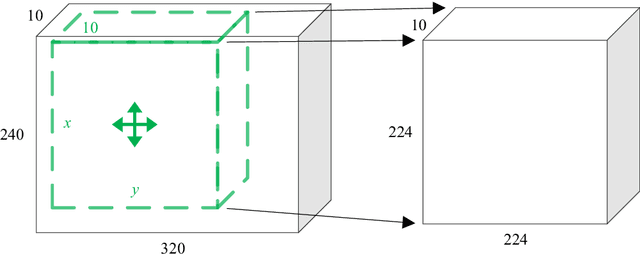

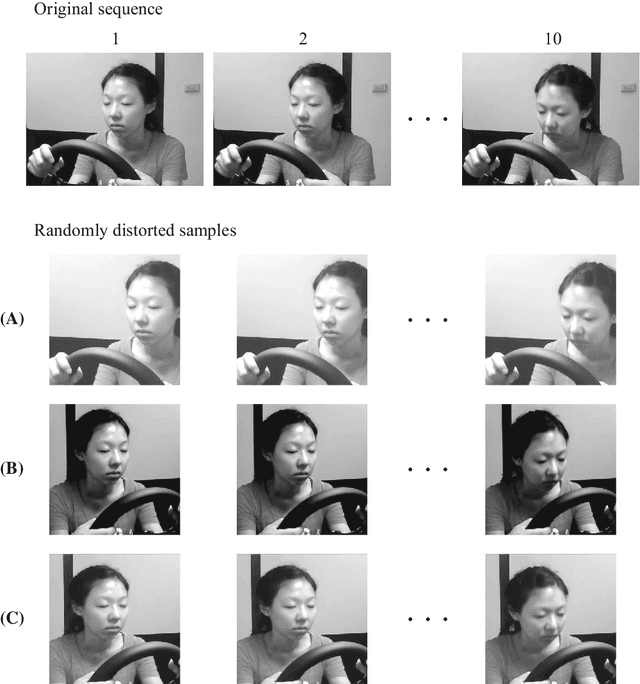

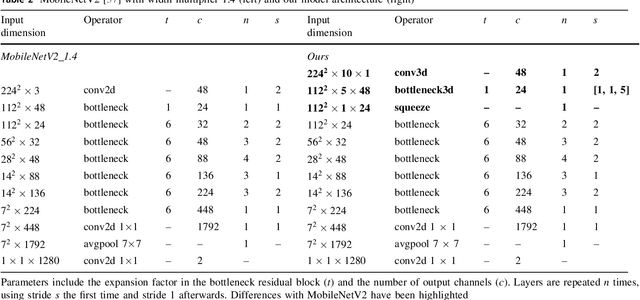

Real-time monitoring of driver drowsiness on mobile platforms using 3D neural networks

Oct 15, 2019

Abstract: Driver drowsiness increases crash risk, leading to substantial road trauma each year. Drowsiness detection methods have received considerable attention, but few studies have investigated the implementation of a detection approach on a mobile phone. Phone applications reduce the need for specialised hardware and hence enable a cost-effective roll-out of the technology across the driving population. While it has been shown that three-dimensional (3D) operations are more suitable for spatiotemporal feature learning, current methods for drowsiness detection commonly use frame-based, multi-step approaches. However, computationally expensive techniques that achieve superior results on action recognition benchmarks (e.g. 3D convolutions, optical flow extraction) create bottlenecks for real-time, safety-critical applications on mobile devices. Here, we show how depthwise separable 3D convolutions, combined with an early fusion of spatial and temporal information, can achieve a balance between high prediction accuracy and real-time inference requirements. In particular, increased accuracy is achieved when assessment requires motion information, for example, when sunglasses conceal the eyes. Further, a custom TensorFlow-based smartphone application shows the true impact of various approaches on inference times and demonstrates the effectiveness of real-time monitoring based on out-of-sample data to alert a drowsy driver. Our model is pre-trained on ImageNet and Kinetics and fine-tuned on a publicly available Driver Drowsiness Detection dataset. Fine-tuning on large naturalistic driving datasets could further improve accuracy to obtain robust in-vehicle performance. Overall, our research is a step towards practical deep learning applications, potentially preventing micro-sleeps and reducing road trauma.

* 13 pages, 2 figures, 'Online First' version. For associated mp4 files, see journal website
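
As a rough illustration of why depthwise separable 3D convolutions suit mobile inference, the sketch below compares weight counts for a standard 3D convolution and a depthwise separable one (a per-channel spatiotemporal filter followed by a 1x1x1 pointwise convolution). The function names and the example layer sizes are hypothetical, biases are ignored, and the numbers do not describe the architecture in the paper.

```python
def conv3d_params(c_in, c_out, k):
    """Weights in a standard 3D convolution with a k x k x k kernel (no bias)."""
    return c_in * c_out * k ** 3


def separable_conv3d_params(c_in, c_out, k):
    """Weights in a depthwise separable 3D convolution (no bias)."""
    depthwise = c_in * k ** 3   # one k x k x k filter per input channel
    pointwise = c_in * c_out    # 1 x 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 64 input channels, 128 output channels, 3x3x3 kernel.
    standard = conv3d_params(64, 128, 3)
    separable = separable_conv3d_params(64, 128, 3)
    print(standard, separable, round(standard / separable, 1))
```

For this hypothetical layer the standard convolution has 221,184 weights and the separable version 9,920, roughly a 22-fold reduction, which is the kind of saving that makes real-time inference on a phone more plausible.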

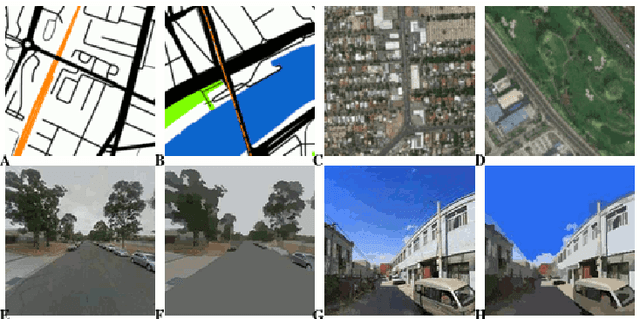

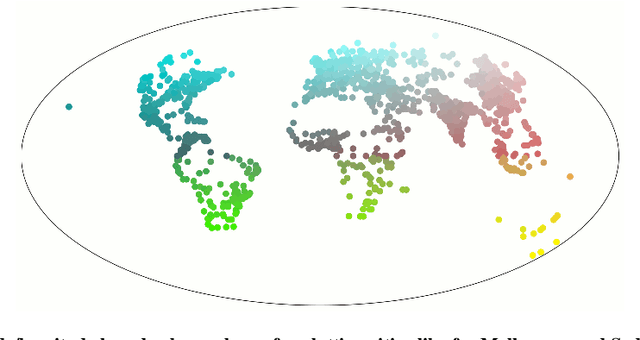

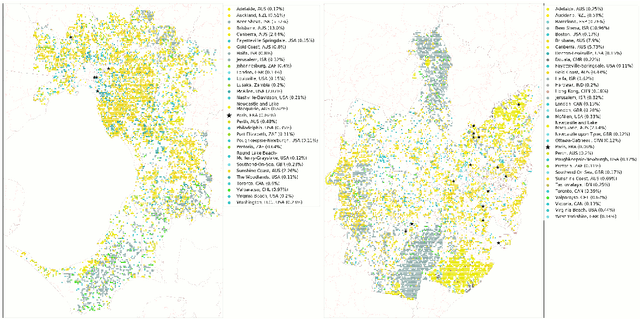

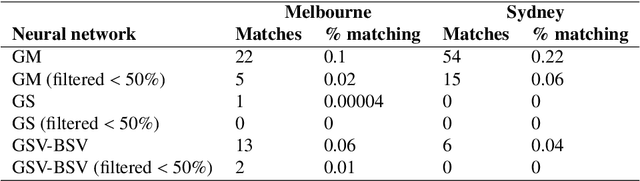

The 'Paris-end' of town? Urban typology through machine learning

Oct 08, 2019

Abstract: The confluence of recent advances in availability of geospatial information, computing power, and artificial intelligence offers new opportunities to understand how and where our cities differ or are alike. Departing from a traditional 'top-down' analysis of urban design features, this project analyses millions of images of urban form (consisting of street view, satellite imagery, and street maps) to find shared characteristics. A (novel) neural network-based framework is trained with imagery from the largest 1692 cities in the world and the resulting models are used to compare within-city locations from Melbourne and Sydney to determine the closest connections between these areas and their international comparators. This work demonstrates a new, consistent, and objective method to begin to understand the relationship between cities and the health, transport, and environmental consequences of their design. The results show specific advantages and disadvantages using each type of imagery. Neural networks trained with map imagery will be highly influenced by the mix of roads, public transport, and green and blue space as well as the structure of these elements. The colours of natural and built features stand out as dominant characteristics in satellite imagery. The use of street view imagery will emphasise the features of a human scaled visual geography of streetscapes. Finally, and perhaps most importantly, this research also answers the age-old question, "Is there really a 'Paris-end' to your city?".

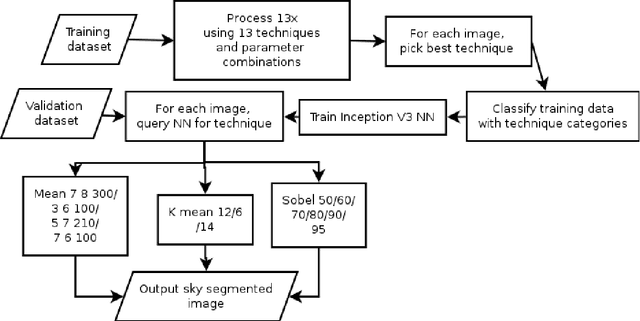

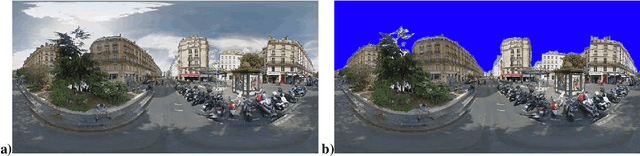

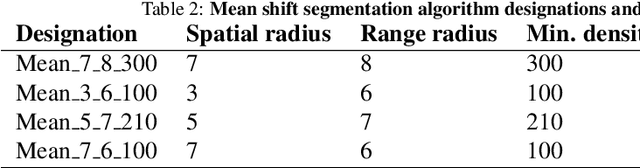

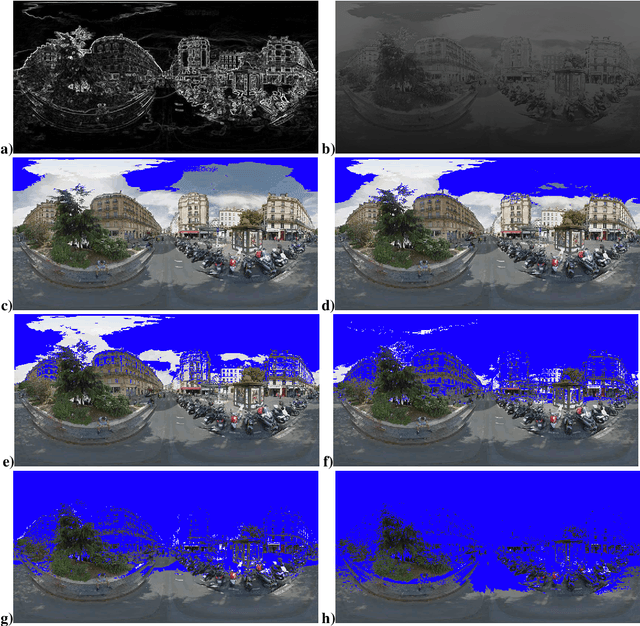

Sky pixel detection in outdoor imagery using an adaptive algorithm and machine learning

Oct 08, 2019

Abstract: Computer vision techniques allow automated detection of sky pixels in outdoor imagery. Multiple applications exist for this information across a large number of research areas. In urban climate, sky detection is an important first step in gathering information about urban morphology and sky view factors. However, capturing accurate results remains challenging and becomes even more complex using imagery captured under a variety of lighting and weather conditions. To address this problem, we present a new sky pixel detection system demonstrated to produce accurate results using a wide range of outdoor imagery types. Images are processed using a selection of mean-shift segmentation, K-means clustering, and Sobel filters to mark sky pixels in the scene. The algorithm for a specific image is chosen by a convolutional neural network, trained with 25,000 images from the Skyfinder data set, reaching 82% accuracy with the top three classes. This selection step allows the sky marking to follow an adaptive process and to use different techniques and parameters to best suit a particular image. An evaluation of fourteen different techniques and parameter sets shows that no single technique can perform with high accuracy across varied Skyfinder and Google Street View data sets. However, by using our adaptive process, large increases in accuracy are observed. The resulting system is shown to perform better than other published techniques.
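
Of the techniques listed in the abstract, the Sobel filter is the simplest to show in isolation. The sketch below computes the Sobel gradient magnitude of a small greyscale image held as a list of lists. The idea that smooth, low-gradient regions are sky candidates is an assumption for illustration, as are the function name and the toy image. The paper's actual pipeline and parameters are not reproduced here.

```python
import math


def sobel_magnitude(img):
    """Sobel gradient magnitude for a 2D list of grey values.

    Border pixels are left at 0.0 because the 3x3 kernel does not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    pixel = img[y + j - 1][x + i - 1]
                    gx += kx[j][i] * pixel
                    gy += ky[j][i] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


if __name__ == "__main__":
    # Toy image: two bright rows (sky-like) above two dark rows (ground-like).
    toy = [[200] * 4, [200] * 4, [50] * 4, [50] * 4]
    for row in sobel_magnitude(toy):
        print(row)
```

A strong response marks the boundary between the bright and dark rows, while a uniform image gives zero response everywhere, which is why edge filters can help delimit a smooth sky region.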

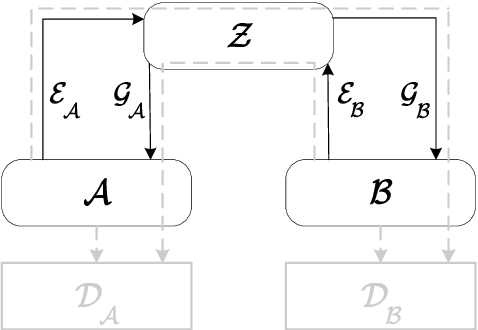

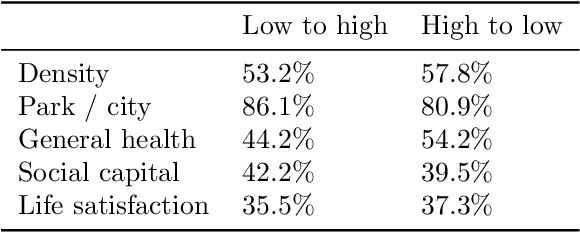

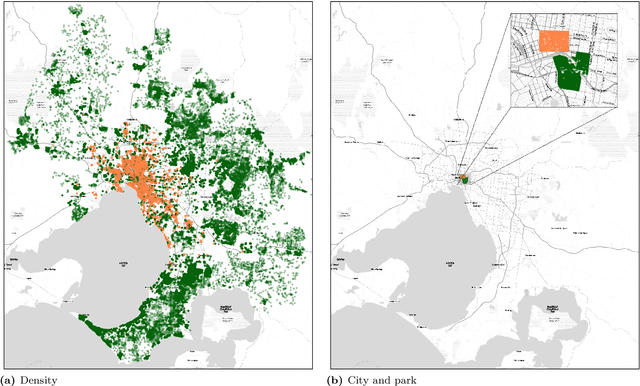

Streetscape augmentation using generative adversarial networks: insights related to health and wellbeing

May 14, 2019

Abstract: Deep learning using neural networks has provided advances in image style transfer, merging the content of one image (e.g., a photo) with the style of another (e.g., a painting). Our research shows this concept can be extended to analyse the design of streetscapes in relation to health and wellbeing outcomes. An Australian population health survey (n=34,000) was used to identify the spatial distribution of health and wellbeing outcomes, including general health and social capital. For each outcome, the most and least desirable locations formed two domains. Streetscape design was sampled using around 80,000 Google Street View images per domain. Generative adversarial networks translated these images from one domain to the other, preserving the main structure of the input image, but transforming the 'style' from locations where self-reported health was bad to locations where it was good. These translations indicate that areas in Melbourne with good general health are characterised by sufficient green space and compactness of the urban environment, whilst streetscape imagery related to high social capital contained more and wider footpaths, fewer fences and more grass. Beyond identifying relationships, the method is a first step towards computer-generated design interventions that have the potential to improve population health and wellbeing.
