Jan Van Eijgen

Enhancing Retinal Vessel Segmentation Generalization via Layout-Aware Generative Modelling

Mar 03, 2025

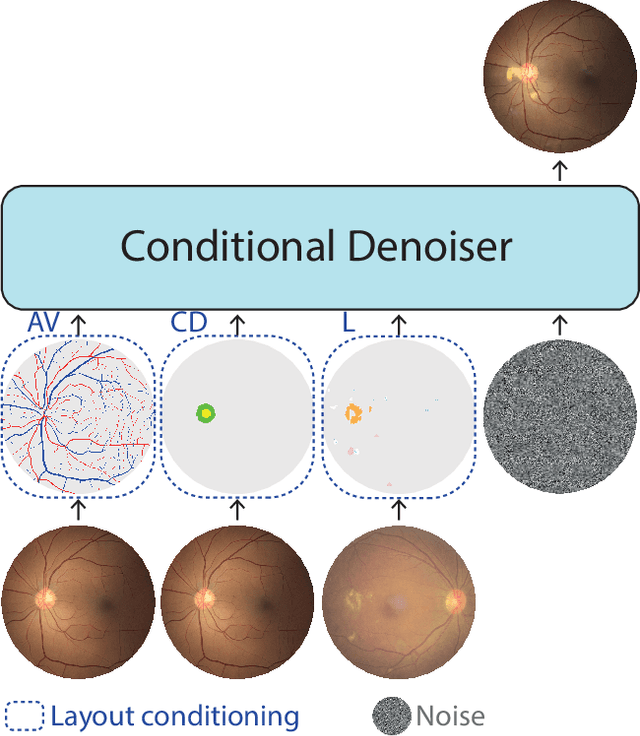

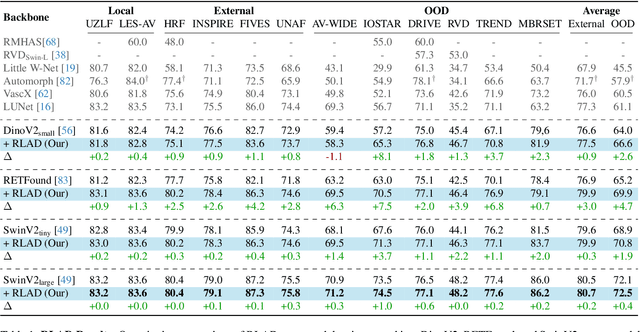

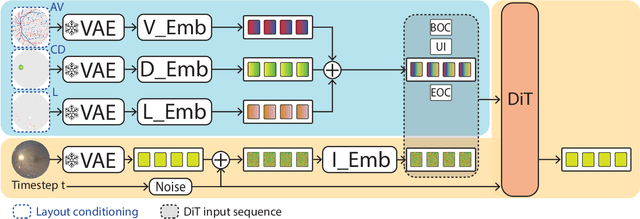

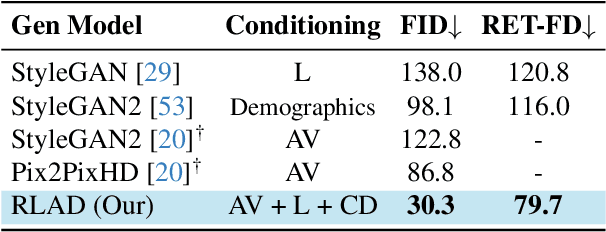

Abstract: Generalization in medical segmentation models is challenging due to limited annotated datasets and imaging variability. To address this, we propose Retinal Layout-Aware Diffusion (RLAD), a novel diffusion-based framework for generating controllable layout-aware images. RLAD conditions image generation on multiple key layout components extracted from real images, ensuring high structural fidelity while enabling diversity in other components. Applied to retinal fundus imaging, we augmented the training datasets by synthesizing paired retinal images and vessel segmentations conditioned on extracted blood vessels from real images, while varying other layout components such as lesions and the optic disc. Experiments demonstrated that RLAD-generated data improved generalization in retinal vessel segmentation by up to 8.1%. Furthermore, we present REYIA, a comprehensive dataset comprising 586 manually segmented retinal images. To foster reproducibility and drive innovation, both our code and dataset will be made publicly accessible.

GONet: A Generalizable Deep Learning Model for Glaucoma Detection

Feb 26, 2025

Abstract: Glaucomatous optic neuropathy (GON) is a prevalent ocular disease that can lead to irreversible vision loss if not detected early and treated. The traditional diagnostic approach for GON involves a set of ophthalmic examinations, which are time-consuming and require a visit to an ophthalmologist. Recent deep learning models for automating GON detection from digital fundus images (DFIs) have shown promise but often suffer from limited generalizability across different ethnicities, disease groups and examination settings. To address these limitations, we introduce GONet, a robust deep learning model developed using seven independent datasets, comprising over 119,000 DFIs with gold-standard annotations from patients of diverse geographic backgrounds. GONet consists of a DINOv2 pre-trained self-supervised vision transformer fine-tuned using a multisource domain strategy. GONet demonstrated high out-of-distribution generalizability, with an AUC of 0.85-0.99 in target domains. GONet performance was similar or superior to state-of-the-art works and was significantly superior to the cup-to-disc ratio, by up to 21.6%. GONet is available at [URL provided on publication]. We also contribute a new dataset consisting of 768 DFIs with GON labels as open access.

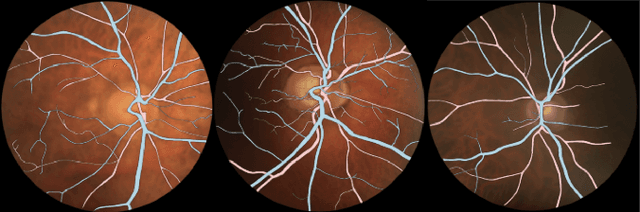

LUNet: Deep Learning for the Segmentation of Arterioles and Venules in High Resolution Fundus Images

Sep 11, 2023

Abstract: The retina is the only part of the human body in which blood vessels can be accessed non-invasively using imaging techniques such as digital fundus images (DFI). The spatial distribution of the retinal microvasculature may change with cardiovascular diseases and thus the eyes may be regarded as a window to our hearts. Computerized segmentation of the retinal arterioles and venules (A/V) is essential for automated microvasculature analysis. Using active learning, we created a new DFI dataset containing 240 crowd-sourced manual A/V segmentations performed by fifteen medical students and reviewed by an ophthalmologist, and developed LUNet, a novel deep learning architecture for high resolution A/V segmentation. The LUNet architecture includes a double dilated convolutional block that aims to enhance the receptive field of the model and reduce its parameter count. Furthermore, LUNet has a long tail that operates at high resolution to refine the segmentation. The custom loss function emphasizes the continuity of the blood vessels. LUNet is shown to significantly outperform two state-of-the-art segmentation algorithms on the local test set as well as on four external test sets simulating distribution shifts across ethnicity, comorbidities, and annotators. We make the newly created dataset open access (upon publication).
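As a rough illustration of why dilation enlarges the receptive field without adding parameters, the sketch below computes the receptive field of a stack of stride-1 convolutions using the standard formula RF = 1 + Σ (k − 1)·d. The function name and the dilation rates are assumptions chosen for illustration; they are not taken from the LUNet paper.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field (in pixels, along one axis) of stacked stride-1 convolutions.

    Each layer with kernel size k and dilation d widens the field by (k - 1) * d.
    The number of weights per layer depends only on k, not on d.
    """
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


# Two plain 3x3 convolutions versus two dilated ones (hypothetical rates).
plain = receptive_field(3, [1, 1])
dilated = receptive_field(3, [2, 4])
print(plain, dilated)
```

Both stacks use the same number of weights per layer, so in this toy setting the wider field comes at no extra parameter cost.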

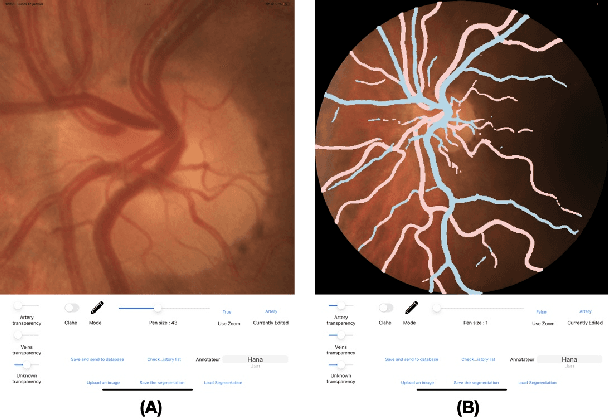

Lirot.ai: A Novel Platform for Crowd-Sourcing Retinal Image Segmentations

Aug 22, 2022

Abstract: Introduction: For supervised deep learning (DL) tasks, researchers need a large annotated dataset. In medical data science, one of the major limitations in developing DL models is the lack of annotated examples in large quantity. This is most often due to the time and expertise required to annotate. We introduce Lirot.ai, a novel platform for facilitating and crowd-sourcing image segmentations. Methods: Lirot.ai is composed of three components: an iPadOS client application named Lirot.ai-app, a backend server named Lirot.ai-server and a Python API named Lirot.ai-API. Lirot.ai-app was developed in Swift 5.6 and Lirot.ai-server is a Firebase backend. Lirot.ai-API allows the management of the database. Lirot.ai-app can be installed on as many iPadOS devices as needed so that annotators can perform their segmentations simultaneously and remotely. We incorporate Apple Pencil compatibility, making the segmentation faster, more accurate, and more intuitive for the expert than any other computer-based alternative. Results: We demonstrate the usage of Lirot.ai for the creation of a retinal fundus dataset with reference vasculature segmentations. Discussion and future work: We will use active learning strategies to continue enlarging our retinal fundus dataset by including a more efficient process to select the images to be annotated and distribute them to annotators.

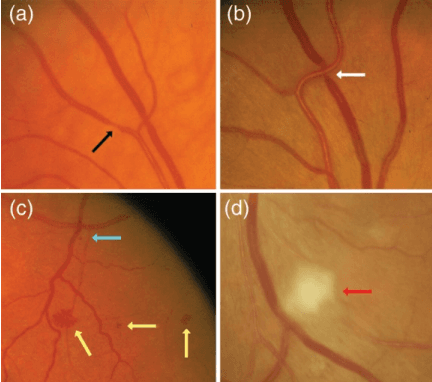

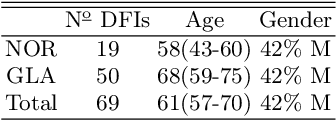

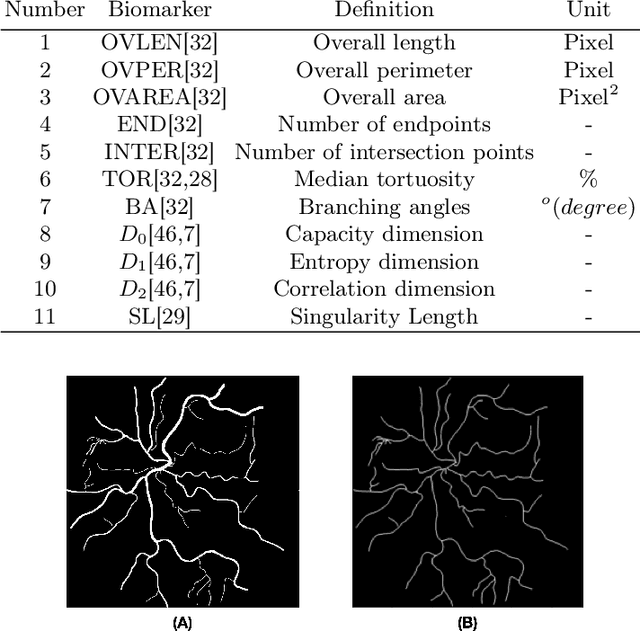

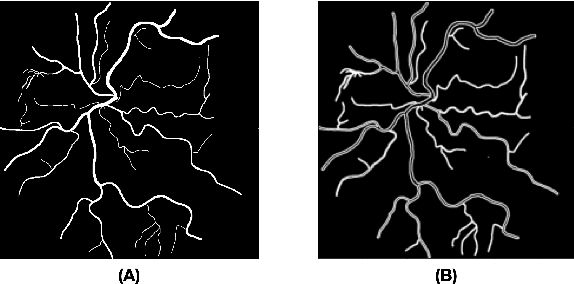

PVBM: A Python Vasculature Biomarker Toolbox Based On Retinal Blood Vessel Segmentation

Jul 31, 2022

Abstract: Introduction: Blood vessels can be non-invasively visualized from a digital fundus image (DFI). Several studies have shown an association between cardiovascular risk and vascular features obtained from DFI. Recent advances in computer vision and image segmentation enable automatising DFI blood vessel segmentation. There is a need for a resource that can automatically compute digital vasculature biomarkers (VBM) from these segmented DFI. Methods: In this paper, we introduce a Python Vasculature BioMarker toolbox, denoted PVBM. A total of 11 VBMs were implemented. In particular, we introduce new algorithmic methods to estimate tortuosity and branching angles. Using PVBM, and as a proof of usability, we analyze geometric vascular differences between glaucomatous patients and healthy controls. Results: We built a fully automated vasculature biomarker toolbox based on DFI segmentations and provided a proof of usability to characterize the vascular changes in glaucoma. For arterioles and venules, all biomarkers were significant and lower in glaucoma patients compared to healthy controls except for tortuosity, venular singularity length and venular branching angles. Conclusion: We have automated the computation of 11 VBMs from retinal blood vessel segmentation. The PVBM toolbox is made open source under a GNU GPL 3 license and is available on physiozoo.com (following publication).
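To make the notion of a tortuosity biomarker concrete, here is a minimal sketch of the common arc-to-chord ratio for a vessel centerline given as a list of 2D points. This is a generic textbook definition and is not claimed to be the estimator implemented in PVBM; the function name and the sample coordinates are hypothetical.

```python
import math


def arc_chord_tortuosity(points):
    """Ratio of polyline path length to the straight-line distance between its endpoints.

    A perfectly straight segment gives 1.0; more winding paths give larger values.
    """
    if len(points) < 2:
        raise ValueError("need at least two points")
    # Sum of the lengths of consecutive segments along the centerline.
    arc = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    # Straight-line distance between the first and last point.
    chord = math.dist(points[0], points[-1])
    if chord == 0:
        raise ValueError("closed path: chord length is zero")
    return arc / chord


# Hypothetical centerlines in pixel coordinates.
straight = [(0, 0), (1, 0), (2, 0)]
bent = [(0, 0), (1, 1), (2, 0)]
print(arc_chord_tortuosity(straight), arc_chord_tortuosity(bent))
```

The ratio is scale-invariant, which makes it convenient for comparing vessels across images of different resolution, although it cannot distinguish one large bend from many small ones.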

FundusQ-Net: a Regression Quality Assessment Deep Learning Algorithm for Fundus Images Quality Grading

May 02, 2022

Abstract: Objective: Ophthalmological pathologies such as glaucoma, diabetic retinopathy and age-related macular degeneration are major causes of blindness and vision impairment. There is a need for novel decision support tools that can simplify and speed up the diagnosis of these pathologies. A key step in this process is to automatically estimate the quality of the fundus images to make sure these are interpretable by a human operator or a machine learning model. We present a novel fundus image quality scale and deep learning (DL) model that can estimate fundus image quality relative to this new scale. Methods: A total of 1,245 images were graded for quality by two ophthalmologists within the range 1-10, with a resolution of 0.5. A DL regression model was trained for fundus image quality assessment. The architecture used was Inception-V3. The model was developed using a total of 89,947 images from 6 databases, of which 1,245 were labeled by the specialists and the remaining 88,702 images were used for pre-training and semi-supervised learning. The final DL model was evaluated on an internal test set (n=209) as well as an external test set (n=194). Results: The final DL model, denoted FundusQ-Net, achieved a mean absolute error of 0.61 (0.54-0.68) on the internal test set. When evaluated as a binary classification model on the public DRIMDB database as an external test set, the model obtained an accuracy of 99%. Significance: The proposed algorithm provides a new robust tool for automated quality grading of fundus images.

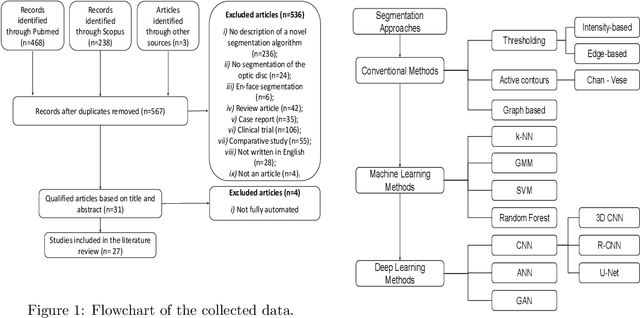

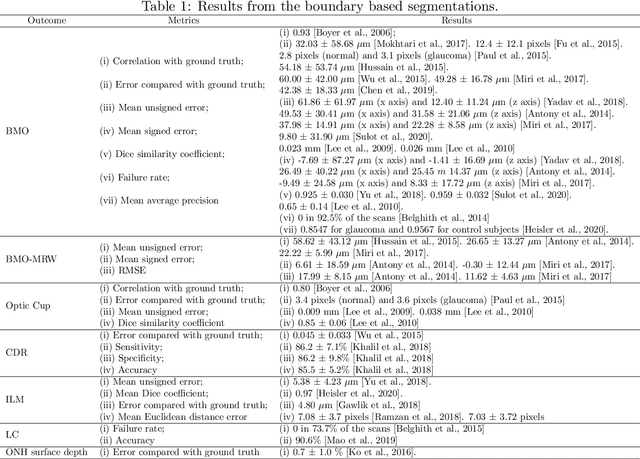

Automatic Segmentation of the Optic Nerve Head Region in Optical Coherence Tomography: A Methodological Review

Sep 06, 2021

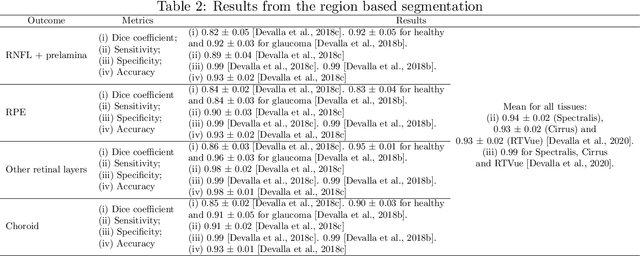

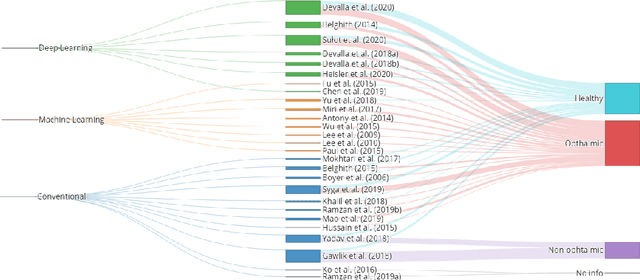

Abstract: The optic nerve head (ONH) represents the intraocular section of the optic nerve, which is prone to damage by intraocular pressure. The advent of optical coherence tomography (OCT) has enabled the evaluation of novel optic nerve head parameters, namely the depth and curvature of the lamina cribrosa (LC). Together with the Bruch's membrane opening minimum-rim-width, these seem to be promising optic nerve head parameters for diagnosis and monitoring of retinal diseases such as glaucoma. Nonetheless, these optical coherence tomography derived biomarkers are mostly extracted through manual segmentation, which is time-consuming and prone to bias, thus limiting their usability in clinical practice. The automatic segmentation of the optic nerve head in OCT scans could further improve the current clinical management of glaucoma and other diseases. This review summarizes the current state-of-the-art in automatic segmentation of the ONH in OCT. PubMed and Scopus were used to perform a systematic review. Additional works from other databases (IEEE, Google Scholar and ARVO IOVS) were also included, resulting in a total of 27 reviewed studies. For each algorithm, the methods, the size and type of dataset used for validation, and the respective results were carefully analyzed. The results show that deep learning-based algorithms provide the highest accuracy, sensitivity and specificity for segmenting the different structures of the ONH including the LC. However, a lack of consensus regarding the definition of segmented regions, extracted parameters and validation approaches has been observed, highlighting the importance of and need for standardized methodologies for ONH segmentation.
