J. Parker Mitchell

Hyperparameter Optimization in Binary Communication Networks for Neuromorphic Deployment

Apr 21, 2020

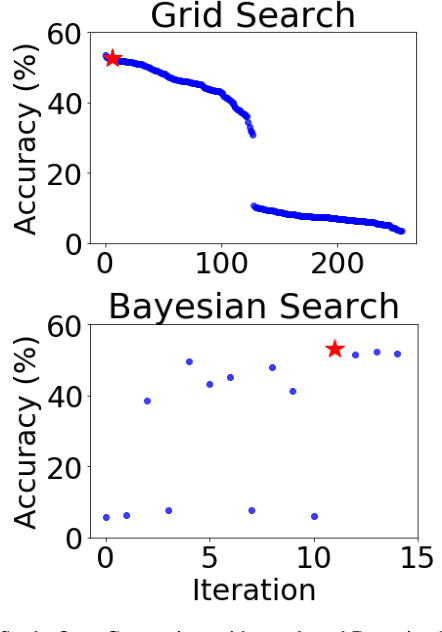

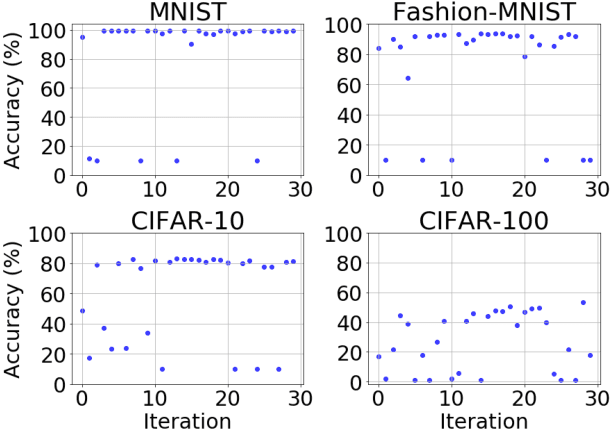

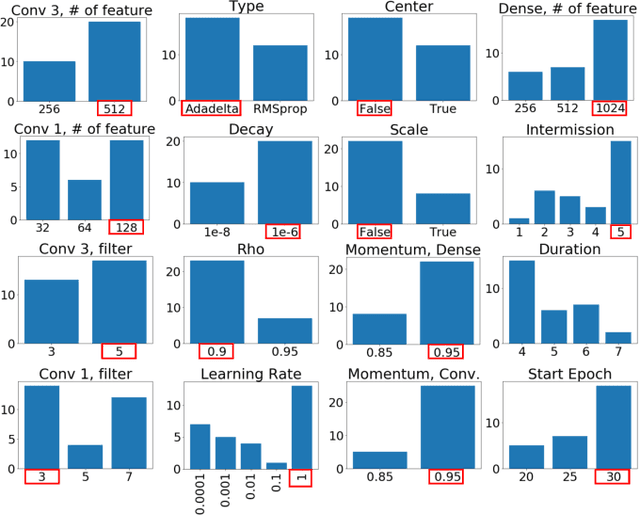

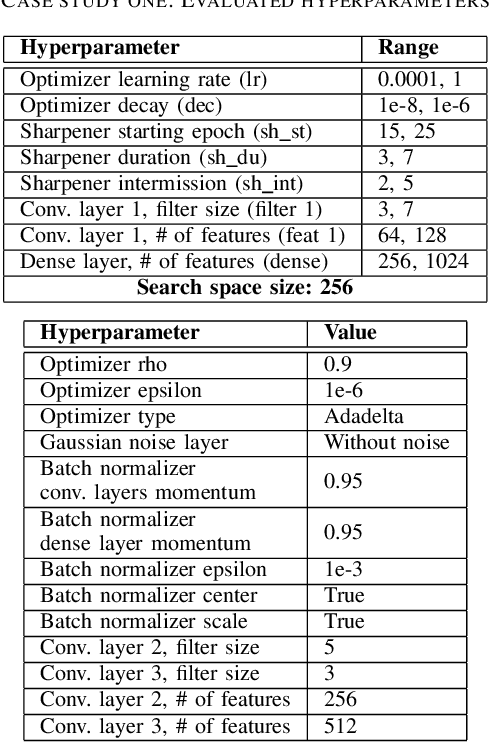

Abstract: Training neural networks for neuromorphic deployment is non-trivial. A variety of approaches have been proposed to adapt back-propagation or back-propagation-like algorithms for training such networks. Because these networks often have very different performance characteristics than traditional neural networks, it is often unclear how to set either the network topology or the hyperparameters to achieve optimal performance. In this work, we introduce a Bayesian approach for optimizing the hyperparameters of an algorithm for training binary communication networks that can be deployed to neuromorphic hardware. We show that by optimizing the hyperparameters of this algorithm for each dataset, we can improve accuracy over the previous state of the art for this algorithm on each dataset (by up to 15 percent). This jump in performance further emphasizes the potential of converting traditional neural networks to binary communication applicable to neuromorphic hardware.

Multi-Objective Optimization for Size and Resilience of Spiking Neural Networks

Feb 04, 2020

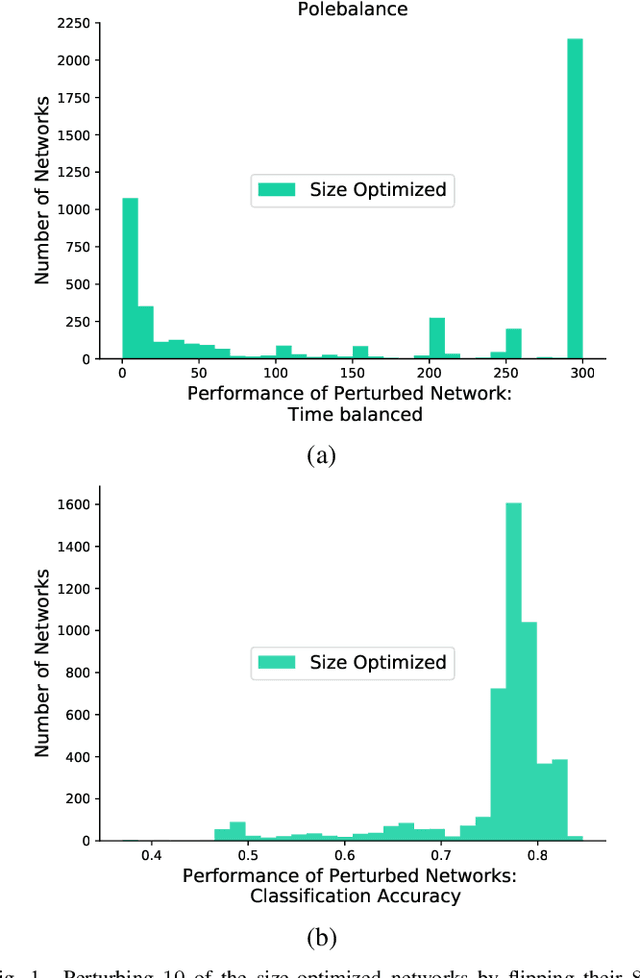

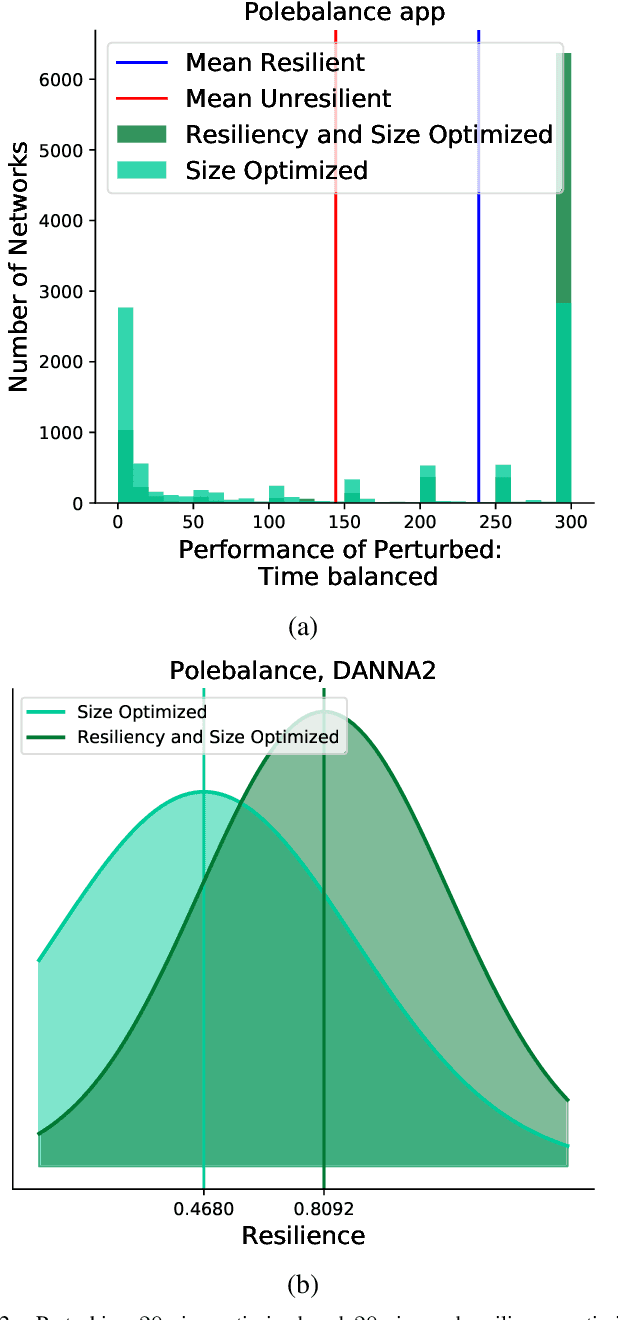

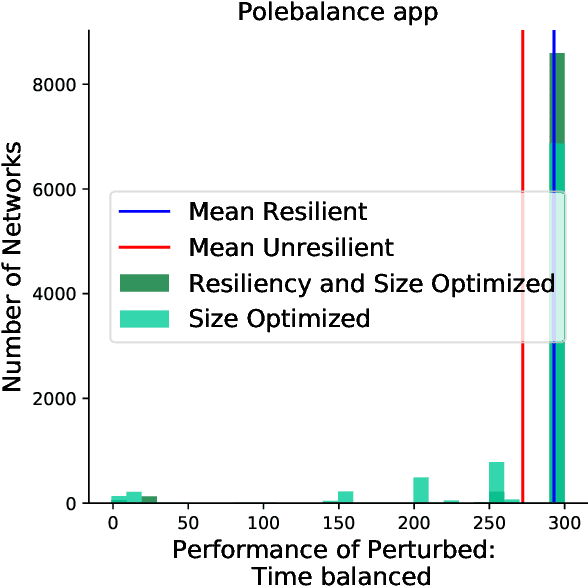

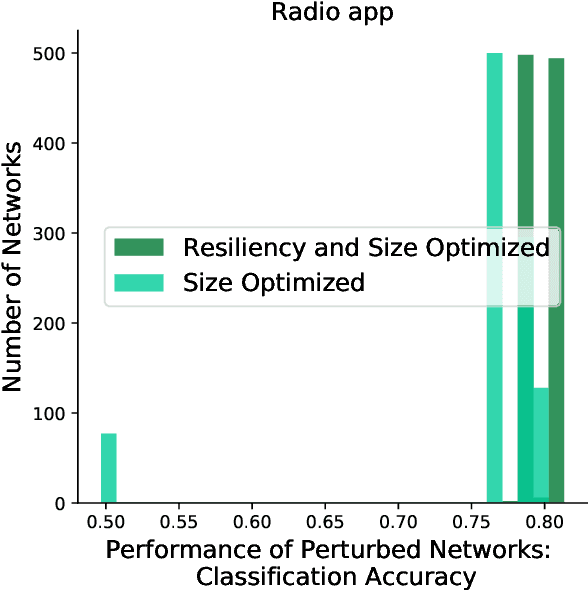

Abstract: Inspired by the connectivity mechanisms in the brain, neuromorphic computing architectures model Spiking Neural Networks (SNNs) in silicon. As such, neuromorphic architectures are designed and developed with the goal of having small, low-power chips that can perform control and machine learning tasks. However, the power consumption of the developed hardware can depend greatly on the size of the network that is being evaluated on the chip. Furthermore, the accuracy of a trained SNN that is evaluated on chip can change due to voltage and current variations in the hardware that perturb the learned weights of the network. While efforts are made on the hardware side to minimize those perturbations, a software-based strategy to make the deployed networks more resilient can help further alleviate that issue. In this work, we study Spiking Neural Networks in two neuromorphic architecture implementations with the goal of decreasing their size while at the same time increasing their resiliency to hardware faults. We leverage an evolutionary algorithm to train the SNNs and propose a multi-objective fitness function to optimize the size and resiliency of the SNN. We demonstrate that this strategy leads to well-performing, small-sized networks that are more resilient to hardware faults.
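The abstract does not give the form of the fitness function, so the following is only a minimal sketch of how size and resilience might be folded into one score for an evolutionary search. Everything in it is an assumption made for illustration: the weighted-sum scalarization, the Gaussian weight perturbation used as a stand-in for hardware faults, the names `multiobjective_fitness` and `accuracy_fn`, and the coefficients `alpha` and `beta`.

```python
import random
from typing import Callable, List, Sequence


def perturb(weights: Sequence[float], sigma: float, rng: random.Random) -> List[float]:
    """Add Gaussian noise to existing (nonzero) synapses only.

    Assumption: hardware variation is modeled as additive noise on weights.
    """
    return [w + rng.gauss(0.0, sigma) if w != 0.0 else 0.0 for w in weights]


def multiobjective_fitness(
    weights: Sequence[float],
    accuracy_fn: Callable[[Sequence[float]], float],  # hypothetical evaluator
    max_synapses: int,
    sigma: float = 0.1,   # assumed perturbation strength
    trials: int = 5,      # number of perturbed evaluations to average
    alpha: float = 0.25,  # assumed weight on the size penalty
    beta: float = 0.25,   # assumed weight on the resilience term
    seed: int = 0,
) -> float:
    """Scalar fitness: reward clean and perturbed accuracy, penalize size."""
    rng = random.Random(seed)
    clean = accuracy_fn(weights)
    # Resilience term: mean accuracy when the weights are perturbed.
    noisy = sum(
        accuracy_fn(perturb(weights, sigma, rng)) for _ in range(trials)
    ) / trials
    # Size term: fraction of the synapse budget the network actually uses.
    size = sum(1 for w in weights if w != 0.0) / max_synapses
    return clean + beta * noisy - alpha * size
```

With a scalarized score like this, an evolutionary algorithm would rank two equally accurate networks by preferring the one with fewer synapses and a smaller accuracy drop under perturbation. A Pareto-based selection scheme would be an alternative design that avoids choosing `alpha` and `beta` by hand.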
