Mihaela Dimovska

An algorithm for reconstruction of triangle-free linear dynamic networks with verification of correctness

Mar 05, 2020

Abstract: Reconstructing a network of dynamic systems from observational data is an active area of research. Many approaches guarantee a consistent reconstruction under the relatively strong assumption that the network dynamics are governed by strictly causal transfer functions. However, in many practical scenarios, strictly causal models are not adequate to describe the system, and it is necessary to consider models whose dynamics include direct feedthrough terms. In the presence of direct feedthroughs, guaranteeing a consistent reconstruction is a more challenging task. Indeed, under no additional assumptions on the network, we prove that, even in the limit of infinite data, any reconstruction method is susceptible to inferring edges that do not exist in the true network (false positives) or failing to detect edges that are present in the network (false negatives). However, for a class of triangle-free networks introduced in this article, some consistency guarantees can be provided. We present a method that either exactly recovers the topology of a triangle-free network and certifies its correctness, or outputs a graph that is sparser than the topology of the actual network and specifies that this graph contains no false positives but does contain false negatives.

Multi-Objective Optimization for Size and Resilience of Spiking Neural Networks

Feb 04, 2020

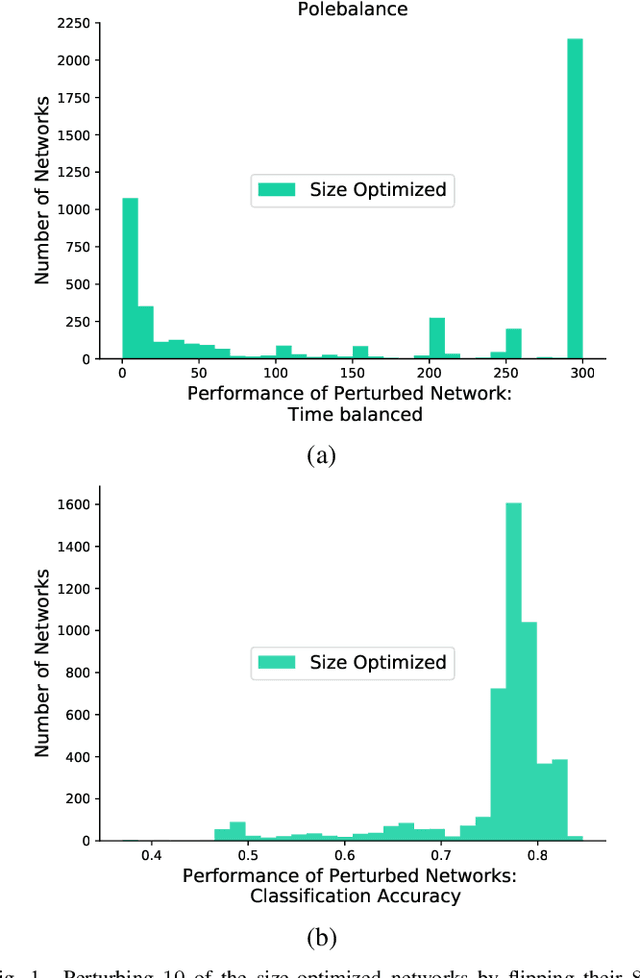

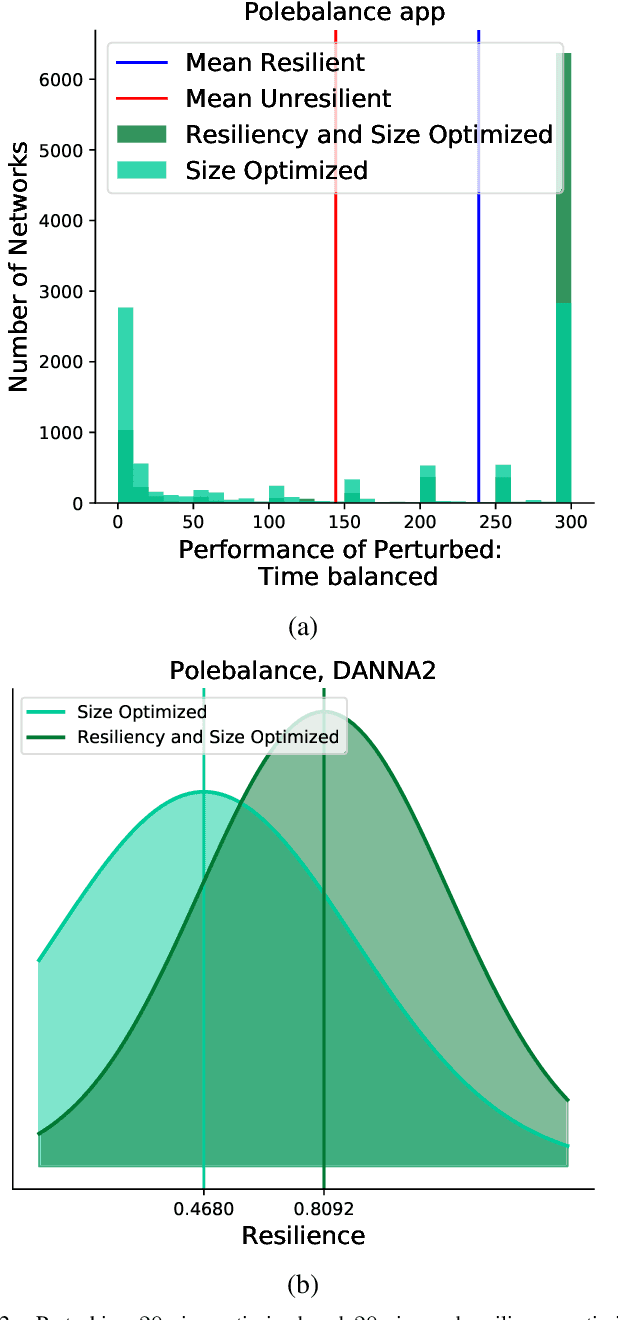

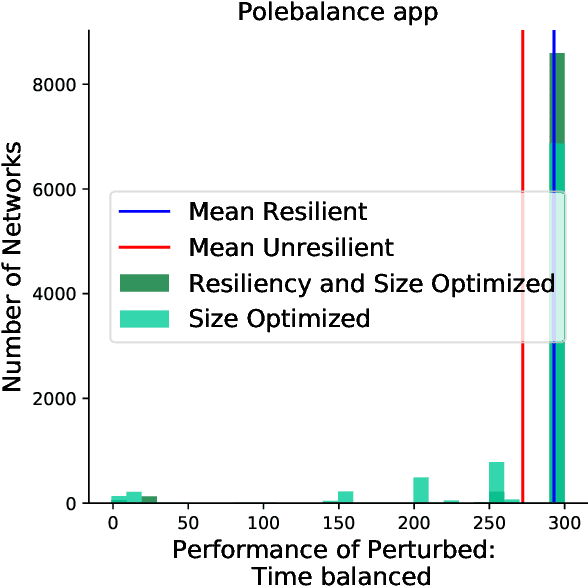

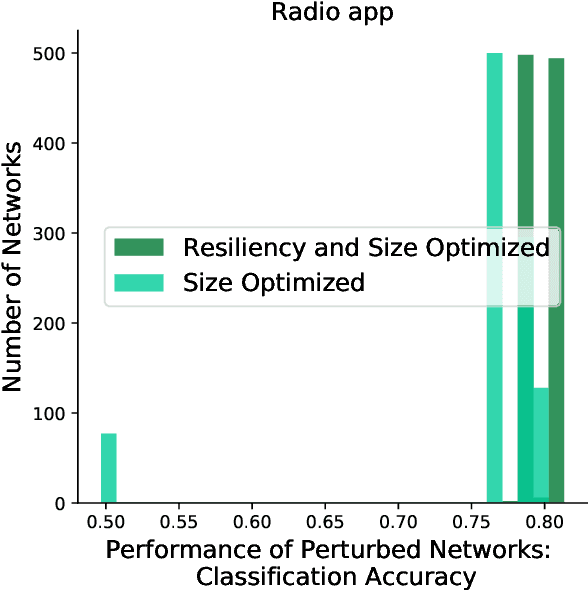

Abstract: Inspired by the connectivity mechanisms in the brain, neuromorphic computing architectures model Spiking Neural Networks (SNNs) in silicon. As such, neuromorphic architectures are designed and developed with the goal of having small, low-power chips that can perform control and machine learning tasks. However, the power consumption of the developed hardware can depend greatly on the size of the network that is being evaluated on the chip. Furthermore, the accuracy of a trained SNN that is evaluated on chip can change due to voltage and current variations in the hardware that perturb the learned weights of the network. While efforts are made on the hardware side to minimize those perturbations, a software-based strategy to make the deployed networks more resilient can help further alleviate that issue. In this work, we study Spiking Neural Networks in two neuromorphic architecture implementations with the goal of decreasing their size while at the same time increasing their resilience to hardware faults. We leverage an evolutionary algorithm to train the SNNs and propose a multi-objective fitness function to optimize the size and resilience of the SNN. We demonstrate that this strategy leads to well-performing, small-sized networks that are more resilient to hardware faults.
