Jérémy Anger

L1BSR: Exploiting Detector Overlap for Self-Supervised Single-Image Super-Resolution of Sentinel-2 L1B Imagery

Apr 17, 2023

Abstract: High-resolution satellite imagery is a key element for many Earth monitoring applications. Satellites such as Sentinel-2 have characteristics, such as aliasing and band misalignment, that are favorable for super-resolution algorithms. Unfortunately, the lack of reliable high-resolution (HR) ground truth limits the application of deep learning methods to this task. In this work we propose L1BSR, a deep learning-based method for single-image super-resolution and band alignment of Sentinel-2 L1B 10m bands. The method is trained with self-supervision directly on real L1B data by leveraging overlapping areas in L1B images produced by adjacent CMOS detectors, thus not requiring HR ground truth. Our self-supervised loss is designed to ensure that all bands of the super-resolved output image are correctly aligned. This is achieved via a novel cross-spectral registration network (CSR) which computes an optical flow between images of different spectral bands. The CSR network is also trained with self-supervision using an Anchor-Consistency loss, which we also introduce in this work. We demonstrate the performance of the proposed approach on synthetic and real L1B data, where we show that it obtains results comparable to those of supervised methods.

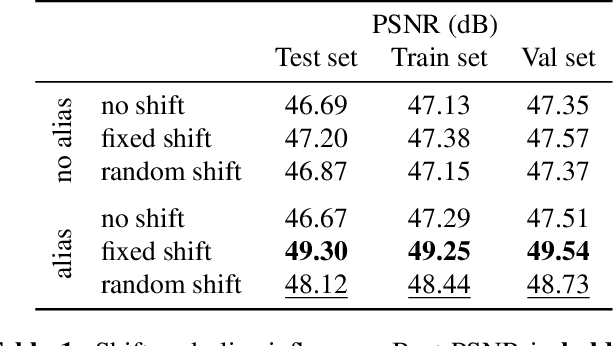

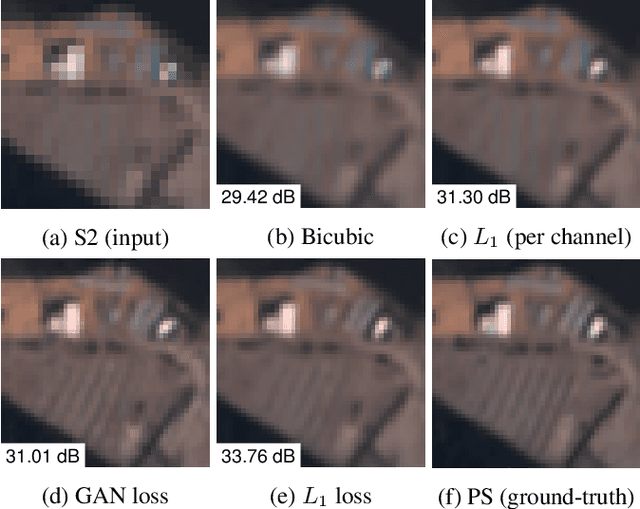

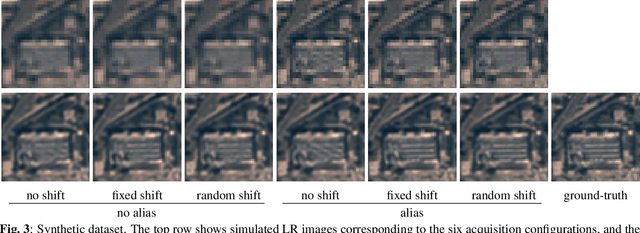

On The Role of Alias and Band-Shift for Sentinel-2 Super-Resolution

Feb 22, 2023

Abstract: In this work, we study the problem of single-image super-resolution (SISR) of Sentinel-2 imagery. We show that, thanks to its unique sensor specification, namely the inter-band shift and alias, deep-learning methods are able to recover fine details. Because the model is trained using a simple $L_1$ loss, the results are free of hallucinated details. For this study, we build a dataset of Sentinel-2/PlanetScope image pairs to train and evaluate our super-resolution (SR) model.

Self-Supervised Super-Resolution for Multi-Exposure Push-Frame Satellites

May 04, 2022

Abstract: Modern Earth observation satellites capture multi-exposure bursts of push-frame images that can be super-resolved via computational means. In this work, we propose a super-resolution method for such multi-exposure sequences, a problem that has received very little attention in the literature. The proposed method can handle the signal-dependent noise in the inputs, process sequences of any length, and be robust to inaccuracies in the exposure times. Furthermore, it can be trained end-to-end with self-supervision, without requiring ground-truth high-resolution frames, which makes it especially suited to handle real data. Central to our method are three key contributions: i) a base-detail decomposition for handling errors in the exposure times, ii) a noise-level-aware feature encoding for improved fusion of frames with varying signal-to-noise ratio, and iii) a permutation-invariant fusion strategy based on temporal pooling operators. We evaluate the proposed method on synthetic and real data and show that it outperforms, by a significant margin, existing single-exposure approaches that we adapted to the multi-exposure case.
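The permutation-invariant fusion mentioned in the abstract can be illustrated with a minimal sketch. It assumes each frame has already been encoded into a feature vector and that fusion concatenates a mean and a max over the time axis; the function name `temporal_pool` and the choice of mean and max are illustrative assumptions, not the operators used in the paper.

```python
from typing import List, Sequence


def temporal_pool(features: Sequence[Sequence[float]]) -> List[float]:
    """Fuse per-frame feature vectors by pooling over the frame axis.

    Hypothetical helper: mean and max are both symmetric functions of
    their inputs, so the result does not depend on frame order and the
    function accepts any number of frames.
    """
    if not features:
        raise ValueError("need at least one frame")
    n = len(features)
    dim = len(features[0])
    # Mean over frames, one value per feature channel.
    mean = [sum(f[i] for f in features) / n for i in range(dim)]
    # Max over frames, one value per feature channel.
    peak = [max(f[i] for f in features) for i in range(dim)]
    # Concatenate both pooled descriptors into a single fused vector.
    return mean + peak


frames = [[1.0, 4.0], [3.0, 2.0], [2.0, 6.0]]
fused = temporal_pool(frames)
fused_shuffled = temporal_pool(list(reversed(frames)))
```

Because both pooling operators ignore ordering, `fused` and `fused_shuffled` are identical, and the same function works for a burst of two frames or twenty.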

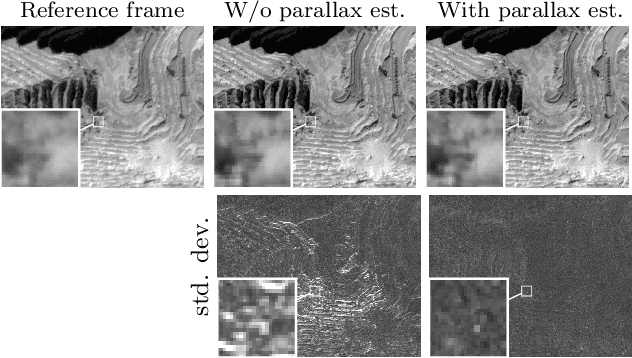

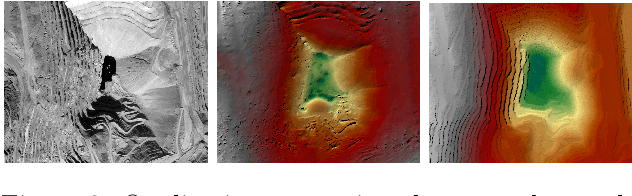

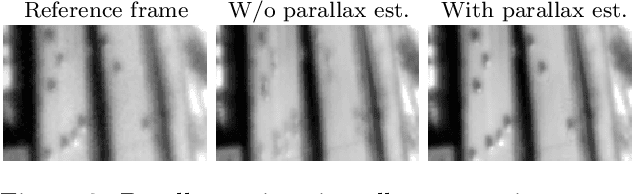

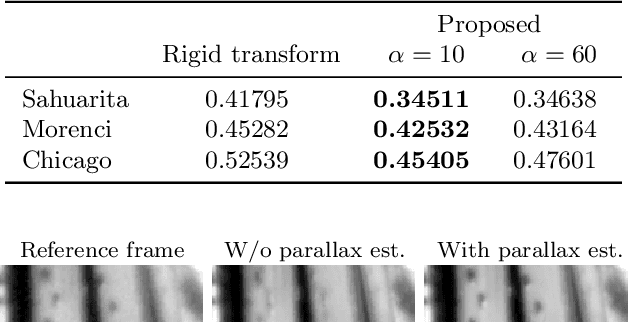

Parallax estimation for push-frame satellite imagery: application to super-resolution and 3D surface modeling from Skysat products

Feb 03, 2021

Abstract: Recent constellations of satellites, including the SkySat constellation, are able to acquire bursts of images. This new acquisition mode allows for modern image restoration techniques, including multi-frame super-resolution. As the satellite moves during the acquisition of the burst, elevation changes in the scene translate into noticeable parallax. This parallax hinders the results of the restoration. To cope with this issue, we propose a novel parallax estimation method. The method is composed of a linear Plane+Parallax decomposition of the apparent motion and a multi-frame optical flow algorithm that exploits all frames simultaneously. Using SkySat L1A images, we show that the estimated per-pixel displacements are important for applying multi-frame super-resolution on scenes containing elevation changes and that they can also be used to estimate a coarse 3D surface model.

Proba-V-ref: Repurposing the Proba-V challenge for reference-aware super resolution

Jan 26, 2021

Abstract: The PROBA-V Super-Resolution challenge distributes real low-resolution image series and corresponding high-resolution targets to advance research on Multi-Image Super Resolution (MISR) for satellite images. However, in the PROBA-V dataset the low-resolution image corresponding to the high-resolution target is not identified. We argue that in doing so, the challenge ranks the proposed methods not only by their MISR performance, but mainly by the heuristics used to guess which image in the series is the most similar to the high-resolution target. We demonstrate this by improving the performance obtained by the two winners of the challenge only by using a different reference image, which we compute following a simple heuristic. Based on this, we propose PROBA-V-REF, a variant of the PROBA-V dataset in which the reference image in the low-resolution series is provided, and show that the ranking between the methods changes in this setting. This is relevant to many practical use cases of MISR where the goal is to super-resolve a specific image of the series, i.e., the reference is known. The proposed PROBA-V-REF should better reflect the performance of the different methods for this reference-aware MISR problem.

Self-Supervised training for blind multi-frame video denoising

May 05, 2020

Abstract: We propose a self-supervised approach for training multi-frame video denoising networks. These networks predict frame t from a window of frames around t. Our self-supervised approach benefits from the video temporal consistency by penalizing a loss between the predicted frame t and a neighboring target frame, which are aligned using an optical flow. We use the proposed strategy for online internal learning, where a pre-trained network is fine-tuned to denoise a new unknown noise type from a single video. After a few frames, the proposed fine-tuning reaches and sometimes surpasses the performance of a state-of-the-art network trained with supervision. In addition, for a wide range of noise types, it can be applied blindly without knowing the noise distribution. We demonstrate this by showing results on blind denoising of different synthetic and realistic noises.
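The loss described in the abstract compares the predicted frame t with a neighboring frame after alignment by optical flow. A minimal sketch of that idea follows, under several simplifying assumptions that are not taken from the paper: images are small 2D lists, the flow holds integer per-pixel offsets `(dy, dx)`, the penalty is an L1 distance, and pixels whose correspondence falls outside the neighbor frame are ignored. The name `warped_l1_loss` is hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]
Flow = List[List[Tuple[int, int]]]  # per-pixel (dy, dx), integer for simplicity


def warped_l1_loss(pred: Image, neighbor: Image, flow: Flow) -> float:
    """Mean absolute difference between `pred` and the flow-aligned `neighbor`.

    For each pixel (y, x) of the prediction, the flow points to the
    matching pixel (y + dy, x + dx) in the neighboring noisy frame.
    Pixels whose match lies outside the image are skipped.
    """
    h, w = len(pred), len(pred[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            dy, dx = flow[y][x]
            sy, sx = y + dy, x + dx
            if 0 <= sy < h and 0 <= sx < w:
                total += abs(pred[y][x] - neighbor[sy][sx])
                count += 1
    return total / count if count else 0.0


pred = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
# Neighbor frame: the same content shifted one pixel to the right.
neighbor = [[0.0, 1.0, 2.0], [0.0, 4.0, 5.0]]
shift_right = [[(0, 1)] * 3 for _ in range(2)]
no_motion = [[(0, 0)] * 3 for _ in range(2)]

aligned_loss = warped_l1_loss(pred, neighbor, shift_right)
unaligned_loss = warped_l1_loss(pred, neighbor, no_motion)
```

With the correct flow the loss vanishes on this toy pair, while ignoring the motion leaves a residual. In a real training loop the warp would use sub-pixel interpolation and the loss would be differentiable with respect to the network output.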

Efficient Blind Deblurring under High Noise Levels

May 16, 2019

Abstract: The goal of blind image deblurring is to recover a sharp image from a motion-blurred one without knowing the camera motion. Current state-of-the-art methods perform remarkably well on images with no noise or very low noise levels. However, the noiseless assumption is not realistic, since low-light conditions, which require longer exposure times, are the main cause of motion blur. In fact, motion blur and moderate to high noise often appear together. Most works approach this problem by first estimating the blur kernel $k$ and then deconvolving the noisy blurred image. In this work, we first show that current state-of-the-art kernel estimation methods based on the $\ell_0$ gradient prior can be adapted to handle high noise levels while keeping their efficiency. Then, we show that a fast non-blind deconvolution method can be significantly improved by first denoising the blurry image. The proposed approach yields results that are equivalent to those obtained with much more computationally demanding methods.

Assessing the Sharpness of Satellite Images: Study of the PlanetScope Constellation

Apr 19, 2019

Abstract: New micro-satellite constellations enable unprecedented systematic monitoring applications thanks to their wide coverage and short revisit capabilities. However, the large volumes of images that they produce are of uneven quality, creating the need for automatic quality assessment methods. In this work, we quantify the sharpness of images from the PlanetScope constellation by estimating the blur kernel from each image. Once the kernel has been estimated, it is possible to compute an absolute measure of sharpness, which makes it possible to discard low-quality images and deconvolve blurry images before any further processing. The method is fully blind and automatic, and since it does not require knowledge of any satellite specifications, it can be ported to other constellations.

Modeling Realistic Degradations in Non-blind Deconvolution

Jun 04, 2018

Abstract: Most image deblurring methods assume an over-simplistic image formation model and as a result are sensitive to more realistic image degradations. We propose a novel variational framework that explicitly handles pixel saturation, noise, quantization, as well as a non-linear camera response function due to, e.g., gamma correction. We show that accurately modeling a more realistic image acquisition pipeline leads to significant improvements, both in terms of image quality and PSNR. Furthermore, we show that incorporating the non-linear response in both the data and the regularization terms of the proposed energy leads to a more detailed restoration than a naive inversion of the non-linear curve. The minimization of the proposed energy is performed using stochastic optimization. A dataset consisting of realistically degraded images is created in order to evaluate the method.
