Thibaud Ehret

AMIAD

Diachronic Stereo Matching for Multi-Date Satellite Imagery

Jan 30, 2026

Abstract:Recent advances in image-based satellite 3D reconstruction have progressed along two complementary directions. On one hand, multi-date approaches using NeRF or Gaussian-splatting jointly model appearance and geometry across many acquisitions, achieving accurate reconstructions on opportunistic imagery with numerous observations. On the other hand, classical stereoscopic reconstruction pipelines deliver robust and scalable results for simultaneous or quasi-simultaneous image pairs. However, when the two images are captured months apart, strong seasonal, illumination, and shadow changes violate standard stereoscopic assumptions, causing existing pipelines to fail. This work presents the first Diachronic Stereo Matching method for satellite imagery, enabling reliable 3D reconstruction from temporally distant pairs. Two advances make this possible: (1) fine-tuning a state-of-the-art deep stereo network that leverages monocular depth priors, and (2) exposing it to a dataset specifically curated to include a diverse set of diachronic image pairs. In particular, we start from a pretrained MonSter model, trained initially on a mix of synthetic and real datasets such as SceneFlow and KITTI, and fine-tune it on a set of stereo pairs derived from the DFC2019 remote sensing challenge. This dataset contains both synchronic and diachronic pairs under diverse seasonal and illumination conditions. Experiments on multi-date WorldView-3 imagery demonstrate that our approach consistently surpasses classical pipelines and unadapted deep stereo models on both synchronic and diachronic settings. Fine-tuning on temporally diverse images, together with monocular priors, proves essential for enabling 3D reconstruction from previously incompatible acquisition dates.

Figure 1. Output geometry for a winter-autumn image pair from Omaha (OMA 331 test scene). Panels: left image (winter), right image (autumn), and DSM geometry for ours (1.23 m), zero-shot (3.99 m), and LiDAR GT. Our method recovers accurate geometry despite the diachronic nature of the pair, exhibiting strong appearance changes, which cause existing zero-shot methods to fail. Missing values due to perspective shown in black. Mean altitude error in parentheses; lower is better.

Remote Sensing Change Detection via Weak Temporal Supervision

Jan 05, 2026

Abstract:Semantic change detection in remote sensing aims to identify land cover changes between bi-temporal image pairs. Progress in this area has been limited by the scarcity of annotated datasets, as pixel-level annotation is costly and time-consuming. To address this, recent methods leverage synthetic data or generate artificial change pairs, but out-of-domain generalization remains limited. In this work, we introduce a weak temporal supervision strategy that leverages additional temporal observations of existing single-temporal datasets, without requiring any new annotations. Specifically, we extend single-date remote sensing datasets with new observations acquired at different times and train a change detection model by assuming that real bi-temporal pairs mostly contain no change, while pairing images from different locations to generate change examples. To handle the inherent noise in these weak labels, we employ an object-aware change map generation and an iterative refinement process. We validate our approach on extended versions of the FLAIR and IAILD aerial datasets, achieving strong zero-shot and low-data regime performance across different benchmarks. Lastly, we showcase results over large areas in France, highlighting the scalability potential of our method.
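
The pairing rule described in this abstract can be illustrated with a small sketch. It only covers how weak labels might be assigned: same location at two dates gives a "no change" pair, and two different locations give a "change" pair. The function name `make_weak_pairs`, the dictionary layout, and the image identifiers are hypothetical, and the object-aware change map generation and iterative refinement mentioned in the abstract are not shown.

```python
import random


def make_weak_pairs(observations, seed=0):
    """Build weakly labeled image pairs (a hypothetical helper).

    observations maps a location id to a non-empty list of image ids
    acquired at different dates. Label 0 means "assumed no change",
    label 1 means "assumed change".
    """
    rng = random.Random(seed)
    locations = sorted(observations)
    pairs = []
    for loc in locations:
        images = observations[loc]
        # Same place, two dates: assumed to contain mostly no change.
        if len(images) >= 2:
            first, second = rng.sample(images, 2)
            pairs.append((first, second, 0))
        # Two different places: treated as a change example.
        others = [other for other in locations if other != loc]
        if others:
            other = rng.choice(others)
            pairs.append((rng.choice(images), rng.choice(observations[other]), 1))
    return pairs


if __name__ == "__main__":
    demo = {"A": ["A_2019", "A_2021"], "B": ["B_2019", "B_2021"]}
    for pair in make_weak_pairs(demo):
        print(pair)
```

The "no change" labels are noisy by construction, since real changes do occur between dates, which is why the abstract pairs this scheme with a refinement step.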

EOGS: Gaussian Splatting for Earth Observation

Dec 17, 2024

Abstract:Recently, Gaussian splatting has emerged as a strong alternative to NeRF, demonstrating impressive 3D modeling capabilities while requiring only a fraction of the training and rendering time. In this paper, we show how the standard Gaussian splatting framework can be adapted for remote sensing, retaining its high efficiency. This enables us to achieve state-of-the-art performance in just a few minutes, compared to the day-long optimization required by the best-performing NeRF-based Earth observation methods. The proposed framework incorporates remote-sensing improvements from EO-NeRF, such as radiometric correction and shadow modeling, while introducing novel components, including sparsity, view consistency, and opacity regularizations.

Structure Tensor Representation for Robust Oriented Object Detection

Nov 15, 2024

Abstract:Oriented object detection predicts orientation in addition to object location and bounding box. Precisely predicting orientation remains challenging due to angular periodicity, which introduces boundary discontinuity issues and symmetry ambiguities. Inspired by classical works on edge and corner detection, this paper proposes to represent orientation in oriented bounding boxes as a structure tensor. This representation combines the strengths of Gaussian-based methods and angle-coder solutions, providing a simple yet efficient approach that is robust to angular periodicity issues without additional hyperparameters. Extensive evaluations across five datasets demonstrate that the proposed structure tensor representation outperforms previous methods in both fully-supervised and weakly supervised tasks, achieving high precision in angular prediction with minimal computational overhead. Thus, this work establishes structure tensors as a robust and modular alternative for encoding orientation in oriented object detection. We make our code publicly available, allowing for seamless integration into existing object detectors.
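
To make the periodicity argument concrete, here is a minimal sketch of a generic structure-tensor encoding of an angle. It builds the tensor as the outer product of the unit direction vector with itself and decodes the angle with `atan2`. This is an assumption about the general idea only: the paper's actual parameterization (for example, how eigenvalues relate to box dimensions) is not given in the abstract, and the function names are hypothetical.

```python
import math


def angle_to_tensor(theta):
    """Encode an orientation as the entries (t_xx, t_xy, t_yy) of v v^T,
    where v = (cos(theta), sin(theta))."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * c, c * s, s * s)


def tensor_to_angle(t_xx, t_xy, t_yy):
    """Recover the orientation in (-pi/2, pi/2] from the tensor entries.

    Uses 2*t_xy = sin(2*theta) and t_xx - t_yy = cos(2*theta).
    """
    return 0.5 * math.atan2(2.0 * t_xy, t_xx - t_yy)


if __name__ == "__main__":
    theta = 0.3
    print(angle_to_tensor(theta))
    print(angle_to_tensor(theta + math.pi))  # same tensor up to rounding
    print(tensor_to_angle(*angle_to_tensor(theta)))
```

Because the tensor depends only on products of `cos` and `sin`, angles that differ by π map to the same target, so a regressor trained on the tensor entries never sees the jump that a raw angle target has at the boundary.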

Exploring Robust Features for Few-Shot Object Detection in Satellite Imagery

Mar 08, 2024

Abstract:The goal of this paper is to perform object detection in satellite imagery with only a few examples, thus enabling users to specify any object class with minimal annotation. To this end, we explore recent methods and ideas from open-vocabulary detection for the remote sensing domain. We develop a few-shot object detector based on a traditional two-stage architecture, where the classification block is replaced by a prototype-based classifier. A large-scale pre-trained model is used to build class-reference embeddings or prototypes, which are compared to region proposal contents for label prediction. In addition, we propose to fine-tune prototypes on available training images to boost performance and learn differences between similar classes, such as aircraft types. We perform extensive evaluations on two remote sensing datasets containing challenging and rare objects. Moreover, we study the performance of both visual and image-text features, namely DINOv2 and CLIP, including two CLIP models specifically tailored for remote sensing applications. Results indicate that visual features are largely superior to vision-language models, as the latter lack the necessary domain-specific vocabulary. Lastly, the developed detector outperforms fully supervised and few-shot methods evaluated on the SIMD and DIOR datasets, despite minimal training parameters.
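
A prototype-based classifier of the kind described above can be sketched in a few lines. The choices here are assumptions, not details from the abstract: prototypes are taken as the mean of a few support embeddings, and proposals are scored by cosine similarity. The embeddings are toy 2D vectors standing in for features from a pretrained model such as DINOv2, and all names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def build_prototype(embeddings):
    """Average a few support embeddings into one class prototype."""
    count = len(embeddings)
    return [sum(column) / count for column in zip(*embeddings)]


def classify(region_embedding, prototypes):
    """Return the best-matching class label and all similarity scores."""
    scores = {label: cosine(region_embedding, proto)
              for label, proto in prototypes.items()}
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    prototypes = {
        "airliner": build_prototype([[1.0, 0.0], [0.8, 0.2]]),
        "fighter": build_prototype([[0.0, 1.0]]),
    }
    print(classify([1.0, 0.05], prototypes))
```

Adding a new class in this setup only requires computing one more prototype, which is what makes the approach attractive when few annotated examples exist.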

Portraying the Need for Temporal Data in Flood Detection via Sentinel-1

Mar 06, 2024

Abstract:Identifying flood-affected areas in remote sensing data is a critical problem in Earth observation, needed to analyze flood impact and drive responses. While a number of methods have been proposed in the literature, available flood detection datasets have two main limitations: (1) they commonly lack regional variability, and/or (2) they require distinguishing permanent water bodies from flooded areas in a single image, which is an ill-posed problem. Consequently, we extend the globally diverse MMFlood dataset to multi-date by providing one year of Sentinel-1 observations around each flood event. To our surprise, we notice that the definition of flooded pixels in MMFlood is inconsistent when observing the entire image sequence. Hence, we re-frame the flood detection task as a temporal anomaly detection problem, where anomalous water bodies are segmented from a Sentinel-1 temporal sequence. From this definition, we provide a simple method inspired by the popular video change detector ViBe, whose results quantitatively align with the SAR image time series, providing a reasonable baseline for future work.
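
The temporal-anomaly framing can be illustrated with a simplified, single-pixel sketch in the spirit of ViBe. It keeps a small set of past samples as the background model and flags a new value as anomalous when too few samples lie close to it. This is not the method from the paper: the function name, all parameter values, and the backscatter numbers are hypothetical, and the spatial sample propagation of the original ViBe is omitted.

```python
import random


def vibe_anomalies(series, n_samples=5, radius=1.5, min_matches=2,
                   subsample=4, seed=0):
    """Flag anomalous values in one pixel's time series (illustrative only).

    The first n_samples values initialise the background model. Each later
    value is anomalous if fewer than min_matches stored samples lie within
    radius of it. Non-anomalous values replace a random stored sample with
    probability 1/subsample.
    """
    rng = random.Random(seed)
    model = list(series[:n_samples])
    flags = []
    for value in series[n_samples:]:
        matches = sum(1 for sample in model if abs(value - sample) <= radius)
        is_anomaly = matches < min_matches
        flags.append(is_anomaly)
        # Conservative update: only background values enter the model.
        if not is_anomaly and rng.randrange(subsample) == 0:
            model[rng.randrange(len(model))] = value
    return flags


if __name__ == "__main__":
    # Hypothetical backscatter values in dB; the two low values mimic
    # a temporary water surface.
    pixel = [-8.0, -8.5, -7.9, -8.2, -8.1, -8.3, -18.0, -17.5, -8.0]
    print(vibe_anomalies(pixel))
```

Under this framing, a permanent water body would have a consistently low background model and would not be flagged, whereas a temporarily flooded pixel deviates from its own history.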

Radar Fields: An Extension of Radiance Fields to SAR

Dec 20, 2023

Abstract:Radiance fields have been a major breakthrough in the field of inverse rendering, novel view synthesis and 3D modeling of complex scenes from multi-view image collections. Since their introduction, it has been shown that they can be extended to other modalities such as LiDAR, radio frequencies, X-ray or ultrasound. In this paper, we show that, despite the important differences between optical and synthetic aperture radar (SAR) image formation models, it is possible to extend radiance fields to radar images, thus presenting the first "radar fields". This allows us to learn surface models using only collections of radar images, similar to how regular radiance fields are learned and with the same computational complexity on average. Thanks to similarities in how both fields are defined, this work also shows a potential for hybrid methods combining both optical and SAR images.

Reducing False Alarms in Video Surveillance by Deep Feature Statistical Modeling

Jul 09, 2023

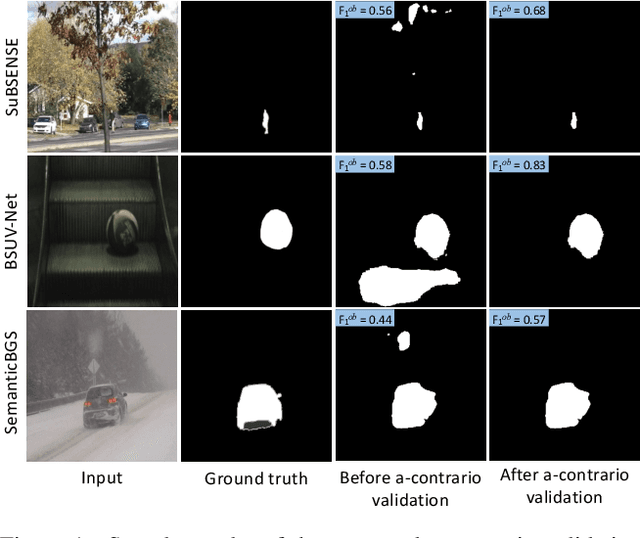

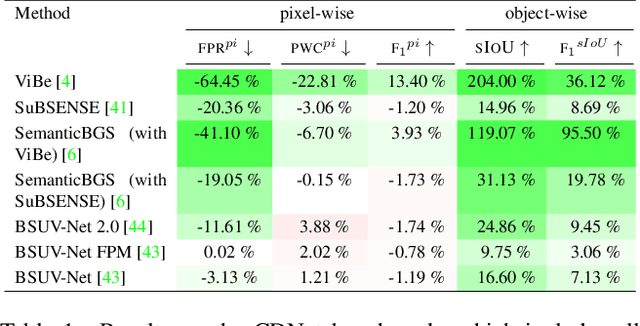

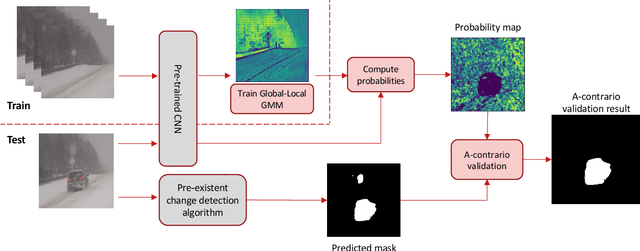

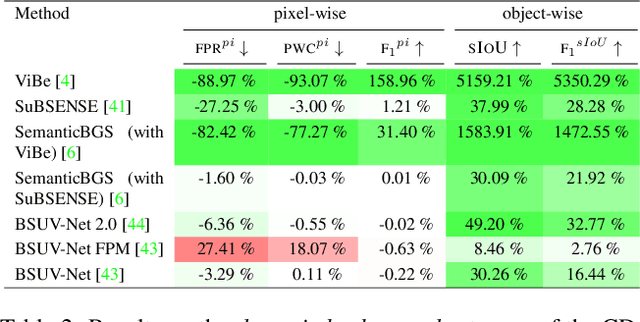

Abstract:Detecting relevant changes is a fundamental problem of video surveillance. Because of the high variability of data and the difficulty of properly annotating changes, unsupervised methods dominate the field. Arguably, one of the most critical steps toward making them practical is reducing their false alarm rate. In this work, we develop a method-agnostic weakly supervised a-contrario validation process, based on high dimensional statistical modeling of deep features, to reduce the number of false alarms of any change detection algorithm. We also highlight the insufficiency of the conventionally used pixel-wise evaluation, as it fails to precisely capture the performance needs of most real applications. For this reason, we complement pixel-wise metrics with object-wise metrics and evaluate the impact of our approach at both pixel and object levels, on six methods and several sequences from different datasets. Experimental results reveal that the proposed a-contrario validation is able to largely reduce the number of false alarms at both pixel and object levels.

Detecting Methane Plumes using PRISMA: Deep Learning Model and Data Augmentation

Nov 17, 2022

Abstract:The new generation of hyperspectral imagers, such as PRISMA, has significantly improved our ability to detect methane (CH4) plumes from space at high spatial resolution (30 m). We present here a complete framework to identify CH4 plumes using images from the PRISMA satellite mission and a deep learning model able to detect plumes over large areas. To compensate for the relative scarcity of PRISMA images, we trained our model by transposing high resolution plumes from Sentinel-2 to PRISMA. Our methodology thus avoids computationally expensive synthetic plume generation from Large Eddy Simulations by generating a broad and realistic training database, and paves the way for large-scale detection of methane plumes using future hyperspectral sensors (EnMAP, EMIT, CarbonMapper).

NeRF, meet differential geometry!

Jun 29, 2022

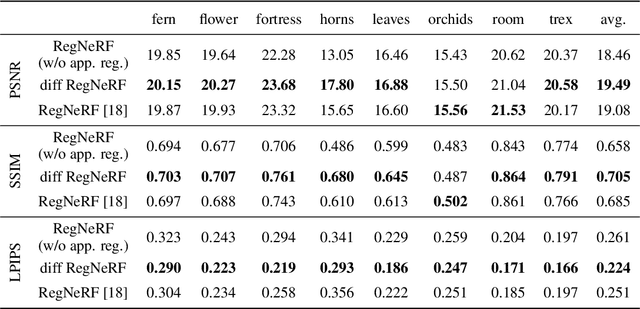

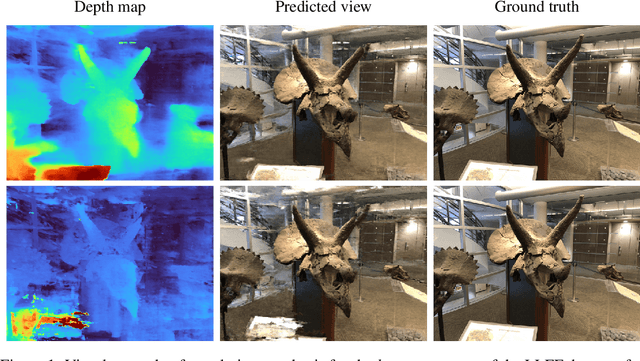

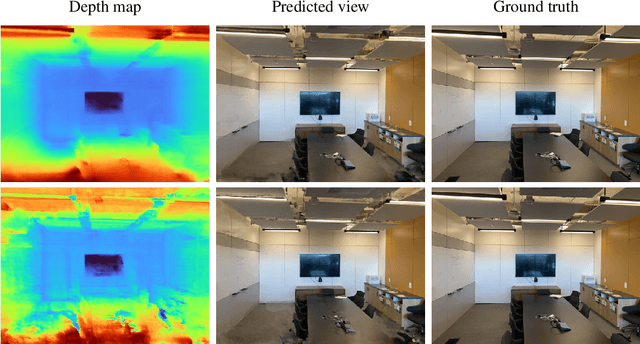

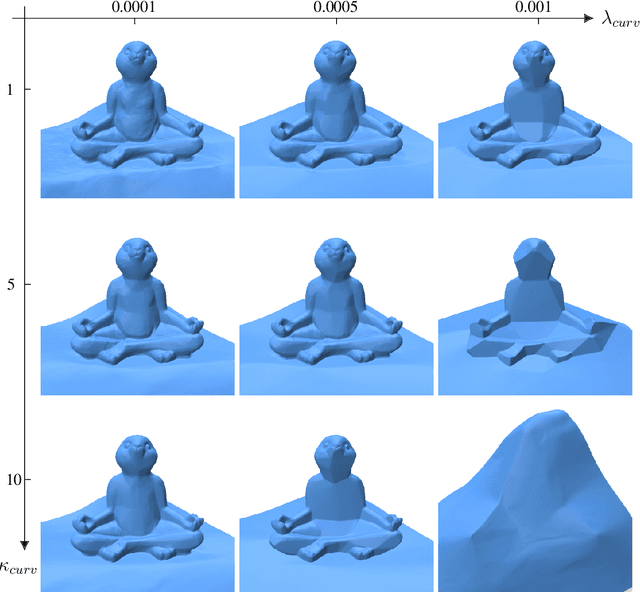

Abstract:Neural radiance fields, or NeRF, represent a breakthrough in the field of novel view synthesis and 3D modeling of complex scenes from multi-view image collections. Numerous recent works have focused on making the models more robust, by means of regularization, so that they can be trained with possibly inconsistent and/or very sparse data. In this work, we scratch the surface of how differential geometry can provide regularization tools for robustly training NeRF-like models, which are modified so as to represent continuous and infinitely differentiable functions. In particular, we show how these tools yield a direct mathematical formalism of previously proposed NeRF variants aimed at improving the performance in challenging conditions (i.e. RegNeRF). Based on this, we show how the same formalism can be used to natively encourage the regularity of surfaces (by means of Gaussian and mean curvatures), making it possible, for example, to learn surfaces from a very limited number of views.
