Roger Marí

AMIAD

Diachronic Stereo Matching for Multi-Date Satellite Imagery

Jan 30, 2026

Abstract:Recent advances in image-based satellite 3D reconstruction have progressed along two complementary directions. On one hand, multi-date approaches using NeRF or Gaussian splatting jointly model appearance and geometry across many acquisitions, achieving accurate reconstructions on opportunistic imagery with numerous observations. On the other hand, classical stereoscopic reconstruction pipelines deliver robust and scalable results for simultaneous or quasi-simultaneous image pairs. However, when the two images are captured months apart, strong seasonal, illumination, and shadow changes violate standard stereoscopic assumptions, causing existing pipelines to fail. This work presents the first Diachronic Stereo Matching method for satellite imagery, enabling reliable 3D reconstruction from temporally distant pairs. Two advances make this possible: (1) fine-tuning a state-of-the-art deep stereo network that leverages monocular depth priors, and (2) exposing it to a dataset specifically curated to include a diverse set of diachronic image pairs. In particular, we start from a pretrained MonSter model, initially trained on a mix of synthetic and real datasets such as SceneFlow and KITTI, and fine-tune it on a set of stereo pairs derived from the DFC2019 remote sensing challenge. This dataset contains both synchronic and diachronic pairs under diverse seasonal and illumination conditions. Experiments on multi-date WorldView-3 imagery demonstrate that our approach consistently surpasses classical pipelines and unadapted deep stereo models in both synchronic and diachronic settings. Fine-tuning on temporally diverse images, together with monocular priors, proves essential for enabling 3D reconstruction from previously incompatible acquisition dates.

Figure 1. Output geometry for a winter-autumn image pair from Omaha (OMA 331 test scene). Panels: left image (winter), right image (autumn), and DSM geometry from ours (1.23 m), zero-shot (3.99 m), and LiDAR GT. Our method recovers accurate geometry despite the diachronic nature of the pair, whose strong appearance changes cause existing zero-shot methods to fail. Missing values due to perspective are shown in black. Mean altitude error in parentheses; lower is better.
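The caption reports a mean altitude error against LiDAR ground truth, with missing pixels excluded. The following is a minimal sketch of such a metric. It assumes the metric is the mean absolute height difference over pixels valid in both rasters, and that missing values are encoded as NaN. The function name is hypothetical. Any DSM alignment or cropping the paper may apply before scoring is not shown.

```python
import numpy as np


def mean_altitude_error(dsm, gt):
    """Mean absolute altitude error (same units as the inputs, e.g. meters).

    Assumption: NaN marks missing values (e.g. areas hidden by perspective),
    and only pixels valid in BOTH the estimated DSM and the ground truth count.
    """
    dsm = np.asarray(dsm, dtype=float)
    gt = np.asarray(gt, dtype=float)
    valid = np.isfinite(dsm) & np.isfinite(gt)
    if not valid.any():
        raise ValueError("no overlapping valid pixels")
    return float(np.mean(np.abs(dsm[valid] - gt[valid])))
```

Ignoring missing pixels, rather than filling them, keeps the score from being dominated by occluded areas that no stereo method can observe.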

ShinyNeRF: Digitizing Anisotropic Appearance in Neural Radiance Fields

Dec 25, 2025

Abstract:Recent advances in digitization technologies have transformed the preservation and dissemination of cultural heritage. In this vein, Neural Radiance Fields (NeRF) have emerged as a leading technology for 3D digitization, delivering representations with exceptional realism. However, existing methods struggle to accurately model anisotropic specular surfaces, such as those typically observed on brushed metals. In this work, we introduce ShinyNeRF, a novel framework capable of handling both isotropic and anisotropic reflections. Our method jointly estimates surface normals, tangents, specular concentration, and anisotropy magnitudes of an Anisotropic Spherical Gaussian (ASG) distribution by learning an approximation of the outgoing radiance as an encoded mixture of isotropic von Mises-Fisher (vMF) distributions. Experimental results show that ShinyNeRF not only achieves state-of-the-art performance in digitizing anisotropic specular reflections, but also, compared to existing methods, offers plausible physical interpretations and editing of material properties.
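The abstract names two spherical lobe families. For reference, here is a sketch of their commonly used forms:

- the vMF density on the unit sphere, with mean direction `mu` and concentration `kappa`;
- the ASG lobe, with a lobe axis `z_axis`, a tangent `x_axis` and a bitangent `y_axis`, and a bandwidth along each of the two tangent axes.

These are the textbook definitions only. How ShinyNeRF encodes the vMF mixture and maps it to ASG parameters is not reproduced here, and the function names are illustrative.

```python
import numpy as np


def vmf_density(omega, mu, kappa):
    """von Mises-Fisher density on S^2 (mean direction mu, concentration kappa > 0).

    Equal to kappa / (4 pi sinh kappa) * exp(kappa * mu.omega), rewritten so that
    large kappa does not overflow. omega may be one unit vector or an (N, 3) array.
    """
    cos = np.asarray(omega, float) @ np.asarray(mu, float)
    return kappa / (2.0 * np.pi * (1.0 - np.exp(-2.0 * kappa))) * np.exp(kappa * (cos - 1.0))


def asg(omega, x_axis, y_axis, z_axis, lam, mu, c=1.0):
    """Anisotropic Spherical Gaussian lobe.

    z_axis is the lobe direction; x_axis / y_axis are the tangent and bitangent.
    lam and mu are the bandwidths along them; lam == mu gives a lobe that is
    rotationally symmetric about z_axis (the isotropic case).
    """
    omega = np.asarray(omega, float)
    smooth = np.maximum(omega @ np.asarray(z_axis, float), 0.0)  # clamps the back hemisphere
    ex = omega @ np.asarray(x_axis, float)
    ey = omega @ np.asarray(y_axis, float)
    return c * smooth * np.exp(-lam * ex**2 - mu * ey**2)
```

With `lam` larger than `mu`, the lobe falls off faster when the direction tilts toward the tangent than toward the bitangent. This elongated highlight is the anisotropic behavior seen on brushed metals.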

Radar Fields: An Extension of Radiance Fields to SAR

Dec 20, 2023

Abstract:Radiance fields have been a major breakthrough in inverse rendering, novel view synthesis, and 3D modeling of complex scenes from multi-view image collections. Since their introduction, they have been shown to extend to other modalities such as LiDAR, radio frequencies, X-ray, and ultrasound. In this paper, we show that, despite the significant differences between the optical and synthetic aperture radar (SAR) image formation models, it is possible to extend radiance fields to radar images, thus presenting the first "radar fields". This allows us to learn surface models using only collections of radar images, similar to how regular radiance fields are learned and with the same computational complexity on average. Thanks to similarities in how both fields are defined, this work also shows potential for hybrid methods combining optical and SAR images.
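Radar fields adapt the optical radiance-field machinery to a different image formation model. As a reference point, here is a sketch of the standard discrete volume-rendering weights used by optical radiance fields. Each sample's weight is the transmittance accumulated before it, multiplied by its opacity `1 - exp(-sigma * delta)`. The SAR-specific formation model of the paper is not reproduced here, and the function name is hypothetical.

```python
import numpy as np


def composite_weights(sigma, delta):
    """Optical NeRF quadrature weights w_i = T_i * (1 - exp(-sigma_i * delta_i)).

    sigma: densities at samples along one ray; delta: spacing between samples.
    T_i = exp(-sum_{j<i} sigma_j * delta_j) is the transmittance before sample i.
    """
    sigma = np.asarray(sigma, float)
    delta = np.asarray(delta, float)
    tau = sigma * delta                      # optical depth of each segment
    alpha = 1.0 - np.exp(-tau)               # opacity of each segment
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(tau)[:-1]]))
    return trans * alpha
```

The weights sum to `1 - exp(-sum(tau))`, the total opacity along the ray. A rendered pixel is the weighted sum of the sample colors, and the expected depth is the weighted sum of the sample distances.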

NeRF, meet differential geometry!

Jun 29, 2022

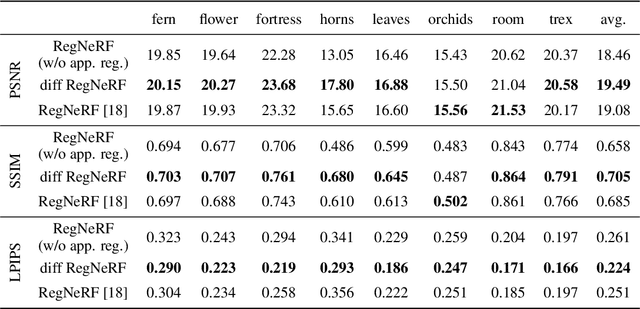

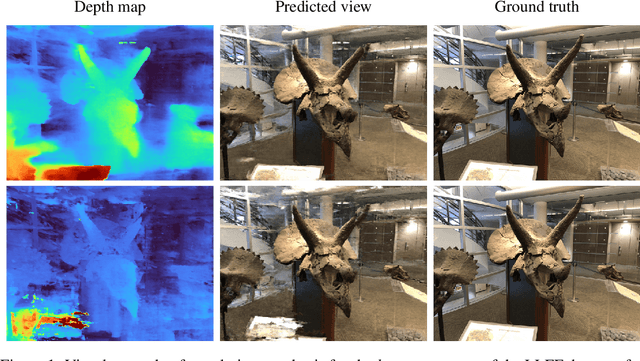

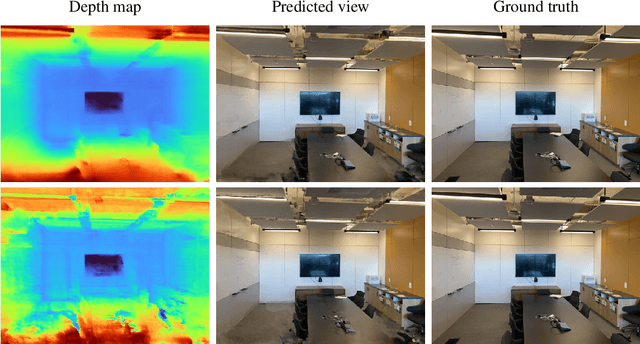

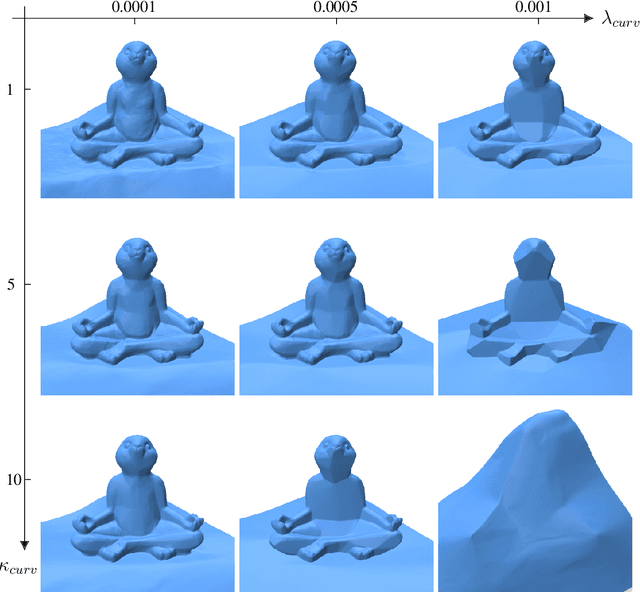

Abstract:Neural radiance fields, or NeRF, represent a breakthrough in novel view synthesis and 3D modeling of complex scenes from multi-view image collections. Numerous recent works have focused on making the models more robust by means of regularization, so that they can be trained with possibly inconsistent and/or very sparse data. In this work, we scratch the surface of how differential geometry can provide regularization tools for robustly training NeRF-like models, which are modified so as to represent continuous and infinitely differentiable functions. In particular, we show how these tools yield a direct mathematical formalism of previously proposed NeRF variants aimed at improving performance in challenging conditions (i.e., RegNeRF). Based on this, we show how the same formalism can be used to natively encourage the regularity of surfaces (by means of Gaussian and mean curvatures), making it possible, for example, to learn surfaces from a very limited number of views.
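Here is a minimal sketch of the two curvatures named above. It assumes the surface is a height field z = f(x, y) sampled on a regular grid with spacing `h` and differentiated by central finite differences. The paper instead works with infinitely differentiable NeRF-like models, where such derivatives can be taken analytically or by automatic differentiation. How the curvatures enter its regularization loss is not shown, and the function name is hypothetical.

```python
import numpy as np


def height_field_curvatures(f, h):
    """Gaussian (K) and mean (H) curvature of the graph z = f(x, y).

    f: 2D array with rows indexing y and columns indexing x; h: grid spacing.
    Uses the standard Monge-patch formulas with finite-difference derivatives.
    """
    fy, fx = np.gradient(f, h)          # axis 0 -> d/dy, axis 1 -> d/dx
    fxy, fxx = np.gradient(fx, h)
    fyy, _ = np.gradient(fy, h)
    g = 1.0 + fx**2 + fy**2
    K = (fxx * fyy - fxy**2) / g**2
    H = ((1 + fy**2) * fxx - 2 * fx * fy * fxy + (1 + fx**2) * fyy) / (2 * g**1.5)
    return K, H
```

For the paraboloid z = (x² + y²)/2, both curvatures equal 1 at the apex. Flat or planar regions give K = H = 0, which is why penalizing curvature favors smooth surfaces.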

Sat-NeRF: Learning Multi-View Satellite Photogrammetry With Transient Objects and Shadow Modeling Using RPC Cameras

Mar 16, 2022

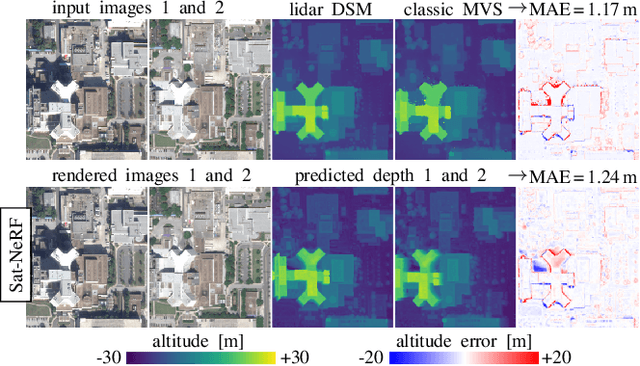

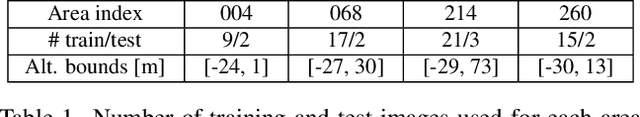

Abstract:We introduce the Satellite Neural Radiance Field (Sat-NeRF), a new end-to-end model for learning multi-view satellite photogrammetry in the wild. Sat-NeRF combines some of the latest trends in neural rendering with native satellite camera models, represented by rational polynomial coefficient (RPC) functions. The proposed method renders new views and infers surface models of similar quality to those obtained with traditional state-of-the-art stereo pipelines. Multi-date images exhibit significant changes in appearance, mainly due to varying shadows and transient objects (cars, vegetation). Robustness to these challenges is achieved by a shadow-aware irradiance model and uncertainty weighting to deal with transient phenomena that cannot be explained by the position of the sun. We evaluate Sat-NeRF using WorldView-3 images from different locations and stress the advantages of applying a bundle adjustment to the satellite camera models prior to training. This boosts the network performance and can optionally be used to extract additional cues for depth supervision.
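The abstract combines a shadow-aware irradiance model with uncertainty weighting. Here is a minimal sketch of both ideas, under these assumptions:

- The shading has the form `c = albedo * (s + (1 - s) * sky)`. Here `s` in [0, 1] is a learned sun-visibility scalar (1 = fully lit), and `sky` is an ambient color that lights shadowed areas.
- The loss is NeRF-W-style and heteroscedastic. A per-ray uncertainty `beta` down-weights pixels whose color cannot be explained by the sun position, while a `log(beta)` term stops `beta` from growing without bound.

The floor `beta_min` and all function names are illustrative, not the paper's exact settings.

```python
import numpy as np


def shadow_aware_color(albedo, shadow, sky_color):
    """c = albedo * (s + (1 - s) * sky); s = 1 lit by the sun, s = 0 in full shadow."""
    s = np.asarray(shadow, float)[..., None]          # broadcast over RGB channels
    return np.asarray(albedo, float) * (s + (1.0 - s) * np.asarray(sky_color, float))


def uncertainty_weighted_loss(pred, target, beta, beta_min=0.05):
    """Mean over rays of ||pred - target||^2 / (2 b^2) + log(b), with b = beta + beta_min."""
    b = np.asarray(beta, float) + beta_min
    sq = np.sum((np.asarray(pred, float) - np.asarray(target, float)) ** 2, axis=-1)
    return float(np.mean(sq / (2.0 * b**2) + np.log(b)))
```

A transient object, such as a car present in only one image, produces a large residual. Raising `beta` on that ray lowers the loss, so the geometry is not forced to explain it.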

Automatic Stockpile Volume Monitoring using Multi-view Stereo from SkySat Imagery

Mar 01, 2021

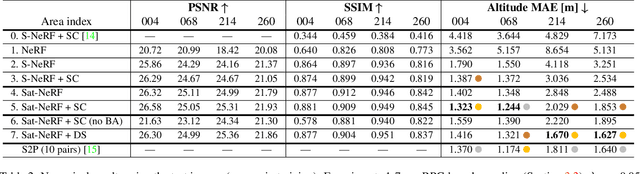

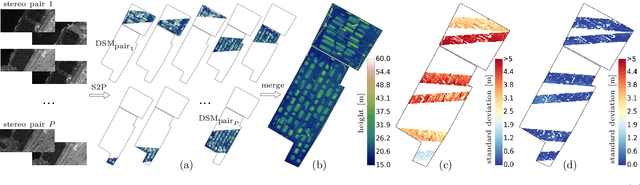

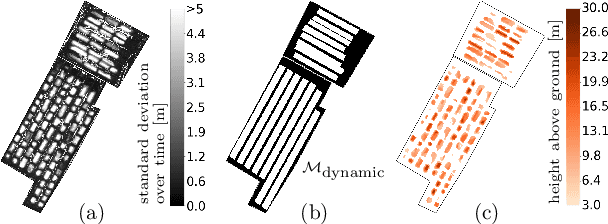

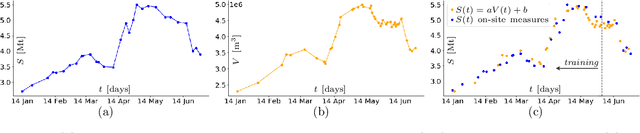

Abstract:This paper proposes a system for automatic surface volume monitoring from time series of SkySat pushframe imagery. A specific challenge of building and comparing large 3D models from SkySat data is correcting inconsistencies between the camera models associated with the multiple views that are necessary to cover the area at a given time, where these camera models are represented as Rational Polynomial Cameras (RPCs). We address the problem by proposing a date-wise RPC refinement able to handle dynamic areas covered by sets of partially overlapping views. The cameras are refined by means of a rotation that compensates for errors due to inaccurate knowledge of the satellite attitude. The refined RPCs are then used to reconstruct multiple consistent Digital Surface Models (DSMs) from different stereo pairs at each date. RPC refinement strengthens the consistency between the DSMs of each date, which is highly beneficial for accurately measuring volumes in the 3D surface models. The system is tested in a real-world scenario to monitor large coal stockpiles. Our volume estimates are validated against measurements collected on site during the same period.
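Once consistent DSMs are available at each date, a volume can be measured by integrating height above a base surface. Here is a minimal sketch under these assumptions:

- The DSM is a regular grid with square cells of side `gsd` meters.
- Missing cells are NaN.
- The base surface is user-supplied, as a scalar or as a raster of ground heights. The abstract does not state how the base is defined.

The function name is hypothetical.

```python
import numpy as np


def stockpile_volume(dsm, base_height, gsd):
    """Volume (m^3) of material above a base surface.

    dsm: 2D array of heights in meters (NaN = missing).
    base_height: scalar or array broadcastable to dsm, the ground reference.
    gsd: ground sampling distance in meters (square cells assumed).
    Only positive height differences count; missing cells are skipped.
    """
    diff = np.asarray(dsm, float) - np.asarray(base_height, float)
    valid = np.isfinite(diff)
    return float(np.sum(np.clip(diff[valid], 0.0, None)) * gsd**2)
```

Because this is a plain sum over cells, a systematic height offset between DSMs of different dates translates directly into a volume bias. This is why the date-wise RPC refinement described above matters for volume comparisons.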

Robust Rational Polynomial Camera Modelling for SAR and Pushbroom Imaging

Feb 26, 2021

Abstract:The Rational Polynomial Camera (RPC) model can be used to describe a variety of image acquisition systems in remote sensing, notably optical and Synthetic Aperture Radar (SAR) sensors. RPC functions relate 3D to 2D coordinates and vice versa, regardless of physical sensor specificities, which has made them an essential tool for harnessing satellite images in a generic way. This article describes a terrain-independent algorithm to accurately derive an RPC model from a set of 3D-2D point correspondences based on a regularized least squares fit. The performance of the method is assessed by varying the point correspondences and the size of the area they cover. We test the algorithm on SAR and optical data, deriving RPCs from physical sensor models or from other RPC models after composition with corrective functions.
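Here is a minimal sketch of fitting one RPC image coordinate from 3D-2D correspondences by regularized least squares. It uses the common linearized formulation, in which a normalized image coordinate `r` equals a ratio of two cubic polynomials P/Q in normalized ground coordinates, with Q's constant term fixed to 1. This gives `P(m) - r * (Q(m) - 1) = r`, which is linear in the 39 unknowns.

Tikhonov regularization with weight `lam` resolves the ambiguity caused by common factors between P and Q. This is an assumption about the general approach, not necessarily the article's exact regularization. The monomial ordering here is illustrative and does not follow the standard RPC coefficient layout. Inputs are assumed to be already offset/scale-normalized to roughly [-1, 1], as RPCs require.

```python
import numpy as np


def cubic_monomials(x, y, z):
    """The 20 monomials of total degree <= 3 (illustrative ordering, not RPC00B)."""
    one = np.ones_like(x)
    return np.stack([
        one, x, y, z,
        x * y, x * z, y * z, x * x, y * y, z * z,
        x * y * z, x**3, x * y * y, x * z * z, x * x * y,
        y**3, y * z * z, x * x * z, y * y * z, z**3,
    ], axis=-1)


def fit_rational(M, r, lam=1e-8):
    """Fit r ~ (M @ p) / (M @ q) with q[0] = 1 via regularized linear least squares.

    M: (N, 20) monomials of the normalized 3D points; r: (N,) normalized image coordinate.
    """
    A = np.hstack([M, -r[:, None] * M[:, 1:]])   # unknowns: p (20) and q[1:] (19)
    n = A.shape[1]
    theta = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ r)
    p = theta[:20]
    q = np.concatenate([[1.0], theta[20:]])
    return p, q


def eval_rational(M, p, q):
    """Evaluate the fitted rational function at precomputed monomials."""
    return (M @ p) / (M @ q)
```

In practice, one such fit is done for the row and another for the column. Accuracy is then checked on held-out points, matching the abstract's evaluation over varying correspondences and area sizes.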
