Isao Ishikawa

Generative Modeling through Spectral Analysis of Koopman Operator

Dec 21, 2025

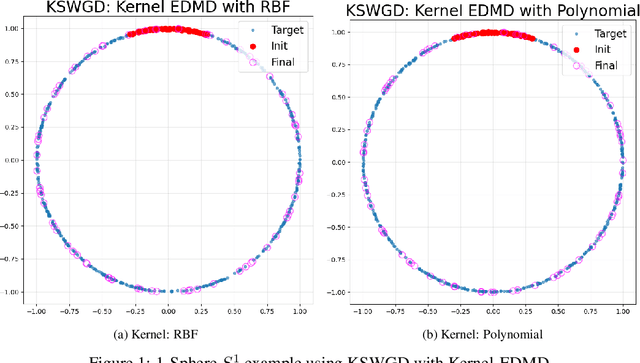

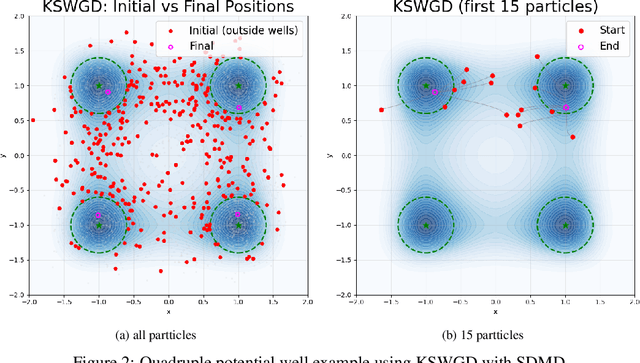

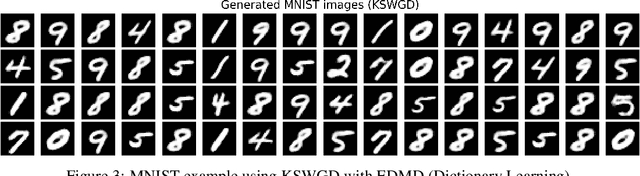

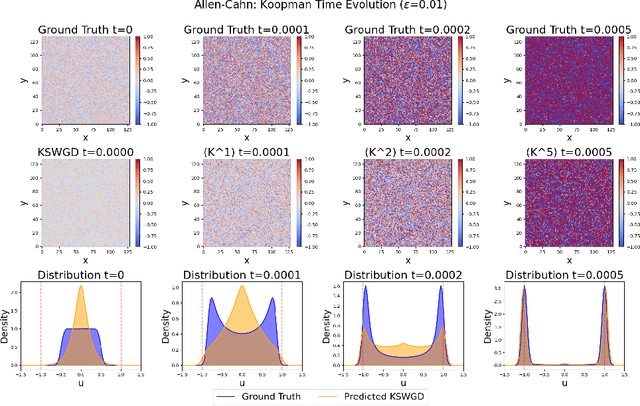

Abstract: We propose Koopman Spectral Wasserstein Gradient Descent (KSWGD), a generative modeling framework that combines operator-theoretic spectral analysis with optimal transport. The key insight is that the spectral structure required for accelerated Wasserstein gradient descent can be estimated directly from trajectory data via Koopman operator approximation, eliminating the need for explicit knowledge of the target potential or for neural network training. We provide a rigorous convergence analysis and establish a connection to Feynman-Kac theory that clarifies the method's probabilistic foundation. Experiments across diverse settings, including compact manifold sampling, metastable multi-well systems, image generation, and high-dimensional stochastic partial differential equations, demonstrate that KSWGD consistently achieves faster convergence than existing methods while maintaining high sample quality.

Why High-rank Neural Networks Generalize?: An Algebraic Framework with RKHSs

Sep 26, 2025

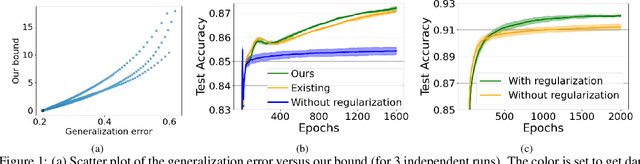

Abstract: We derive a new Rademacher complexity bound for deep neural networks using Koopman operators, group representations, and reproducing kernel Hilbert spaces (RKHSs). The proposed bound describes why models with high-rank weight matrices generalize well. Although existing bounds attempt to describe this phenomenon, they apply only to limited types of models. We introduce an algebraic representation of neural networks and a kernel function to construct an RKHS, and use it to derive a bound for a wider range of realistic models. This work paves the way for the Koopman-based theory of Rademacher complexity bounds to be valid in more practical situations.

Generalization Through Growth: Hidden Dynamics Controls Depth Dependence

May 21, 2025

Abstract: Recent theory has reduced the depth dependence of generalization bounds from exponential to polynomial and even depth-independent rates, yet these results remain tied to specific architectures and Euclidean inputs. We present a unified framework for arbitrary pseudo-metric spaces in which a depth-$k$ network is the composition of continuous hidden maps $f:\mathcal{X}\to \mathcal{X}$ and an output map $h:\mathcal{X}\to \mathbb{R}$. The resulting bound $O(\sqrt{(\alpha + \log \beta(k))/n})$ isolates the sole depth contribution in $\beta(k)$, the word-ball growth of the semigroup generated by the hidden layers. By Gromov's theorem, polynomial (resp. exponential) growth corresponds to virtually nilpotent (resp. expanding) dynamics, revealing a geometric dichotomy behind existing $O(\sqrt{k})$ (sublinear depth) and $\tilde{O}(1)$ (depth-independent) rates. We further provide covering-number estimates showing that expanding dynamics yield an exponential parameter saving via compositional expressivity. Our results decouple specification from implementation, offering architecture-agnostic and dynamical-systems-aware guarantees applicable to modern deep-learning paradigms such as test-time inference and diffusion models.
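
To make the dichotomy concrete, here is a short worked reading of the bound under two assumed growth rates. The constants $C$, $c$, and $d$ are illustrative and do not come from the abstract.

$$\beta(k) \le C k^{d} \;\Longrightarrow\; \sqrt{\frac{\alpha + \log \beta(k)}{n}} \le \sqrt{\frac{\alpha + \log C + d \log k}{n}},$$

$$\beta(k) \le C e^{ck} \;\Longrightarrow\; \sqrt{\frac{\alpha + \log \beta(k)}{n}} \le \sqrt{\frac{\alpha + \log C + c k}{n}}.$$

In the polynomial case the depth enters only through $\log k$, which is consistent with a $\tilde{O}(1)$ rate in depth; in the exponential case the bound grows like $\sqrt{k}$.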

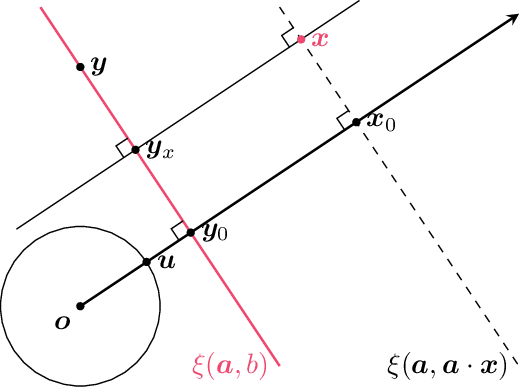

Constructive Universal Approximation Theorems for Deep Joint-Equivariant Networks by Schur's Lemma

May 22, 2024

Abstract: We present a unified constructive universal approximation theorem covering a wide range of learning machines, including both shallow and deep neural networks, based on group representation theory. Constructive here means that the distribution of parameters is given in a closed-form expression (called the ridgelet transform). In contrast to the case of shallow models, expressive power analysis of deep models has been conducted in a case-by-case manner. Recently, Sonoda et al. (2023a,b) developed a systematic method to show a constructive approximation theorem from scalar-valued joint-group-invariant feature maps, covering a formal deep network. However, each hidden layer was formalized as an abstract group action, so it was not possible to cover real deep networks defined by composites of nonlinear activation functions. In this study, we extend the method to vector-valued joint-group-equivariant feature maps, so as to cover such real networks.

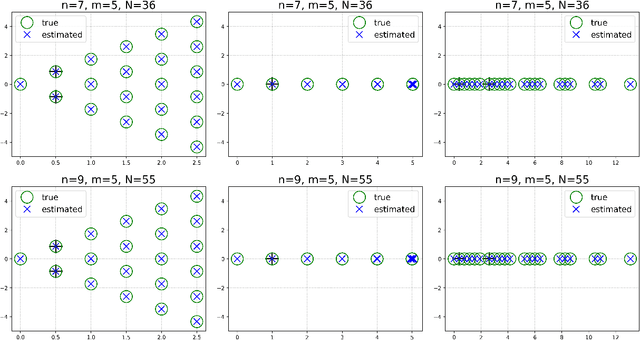

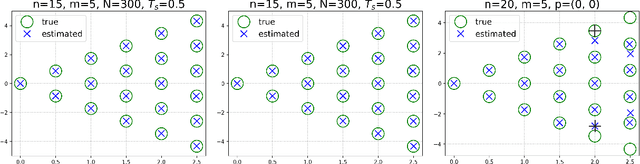

Finite-dimensional approximations of push-forwards on locally analytic functionals and truncation of least-squares polynomials

Apr 16, 2024

Abstract: This paper introduces a theoretical framework for investigating analytic maps from finite discrete data, elucidating the mathematical machinery underlying least-squares polynomial approximation in multivariate situations. Our approach is to consider the push-forward on the space of locally analytic functionals, instead of directly handling the analytic map itself. We establish a methodology enabling appropriate finite-dimensional approximation of the push-forward from finite discrete data, through the theory of the Fourier--Borel transform and the Fock space. Moreover, we prove a rigorous convergence result with a convergence rate. As an application, we prove that it is not the least-squares polynomial, but the polynomial obtained by truncating its higher-degree terms, that approximates analytic functions and further allows for approximation beyond the support of the data distribution. One advantage of our theory is that it enables us to apply linear algebraic operations to the finite-dimensional approximation of the push-forward. Utilizing this, we prove the convergence of a method for approximating an analytic vector field from finite data of the flow map of an ordinary differential equation.

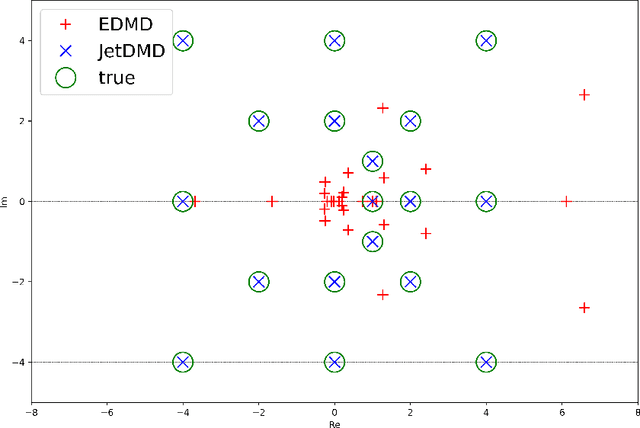

Koopman operators with intrinsic observables in rigged reproducing kernel Hilbert spaces

Mar 14, 2024

Abstract: This paper presents a novel approach for estimating the Koopman operator defined on a reproducing kernel Hilbert space (RKHS) and its spectra. We propose an estimation method, which we call Jet Dynamic Mode Decomposition (JetDMD), leveraging the intrinsic structure of the RKHS and the geometric notion known as jets to enhance the estimation of the Koopman operator. This method refines the traditional Extended Dynamic Mode Decomposition (EDMD) in accuracy, especially in the numerical estimation of eigenvalues. This paper proves JetDMD's superiority through explicit error bounds and convergence rates for special positive definite kernels, offering a solid theoretical foundation for its performance. We also delve into the spectral analysis of the Koopman operator, proposing the notion of an extended Koopman operator within the framework of rigged Hilbert spaces. This notion leads to a deeper understanding of estimated Koopman eigenfunctions and makes it possible to capture them outside the original function space. Through the theory of rigged Hilbert spaces, our study provides a principled methodology to analyze the estimated spectrum and eigenfunctions of Koopman operators, and enables eigendecomposition within a rigged RKHS. We also propose a new effective method for reconstructing the dynamical system from temporally sampled trajectory data, with solid theoretical guarantees. We conduct several numerical simulations using the van der Pol oscillator, the Duffing oscillator, the Hénon map, and the Lorenz attractor, and illustrate the performance of JetDMD with clear numerical computations of eigenvalues and accurate predictions of the dynamical systems.
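
For orientation, the baseline that JetDMD refines can be sketched in a few lines. The following is a minimal illustration of standard EDMD, not of JetDMD itself; the toy map $x \mapsto 0.5x$, the monomial dictionary, and the helper names `edmd` and `monomials` are assumptions chosen so that the Koopman eigenvalues on the dictionary span are known in advance.

```python
import numpy as np

def edmd(X, Y, dictionary):
    """Least-squares estimate of the Koopman matrix on span(dictionary).

    X holds sampled states and Y their images under the dynamics.
    """
    Psi_X = dictionary(X)  # shape (n_samples, n_features)
    Psi_Y = dictionary(Y)
    # Solve Psi_X @ K ~= Psi_Y in the least-squares sense.
    K, *_ = np.linalg.lstsq(Psi_X, Psi_Y, rcond=None)
    return K

def monomials(x):
    """Hypothetical dictionary {1, x, x^2} for scalar states."""
    return np.stack([np.ones_like(x), x, x**2], axis=1)

# Toy dynamics (an assumption for illustration): x -> 0.5 * x.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=200)
Y = 0.5 * X

K = edmd(X, Y, monomials)
eigenvalues = np.sort(np.linalg.eigvals(K).real)
print(eigenvalues)
```

Because the monomials $1, x, x^2$ are exact Koopman eigenfunctions of this linear map, the estimated eigenvalues should come out close to $0.25$, $0.5$, and $1$. For nonlinear systems the dictionary span is generally not invariant, which is where the accuracy of eigenvalue estimates becomes a concern.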

A unified Fourier slice method to derive ridgelet transform for a variety of depth-2 neural networks

Feb 25, 2024

Abstract: To investigate neural network parameters, it is easier to study the distribution of parameters than to study the parameters in each neuron. The ridgelet transform is a pseudo-inverse operator that maps a given function $f$ to the parameter distribution $\gamma$ so that a network $\mathtt{NN}[\gamma]$ reproduces $f$, i.e. $\mathtt{NN}[\gamma]=f$. For depth-2 fully-connected networks on a Euclidean space, the ridgelet transform is known in closed form, so we can describe how the parameters are distributed. However, for a variety of modern neural network architectures, the closed-form expression has not been known. In this paper, we explain a systematic method using Fourier expressions to derive ridgelet transforms for a variety of modern networks such as networks on finite fields $\mathbb{F}_p$, group convolutional networks on an abstract Hilbert space $\mathcal{H}$, fully-connected networks on noncompact symmetric spaces $G/K$, and pooling layers, or the $d$-plane ridgelet transform.
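
As a point of reference, in the classical depth-2 fully-connected Euclidean case these objects are commonly written as follows. This is a sketch of the standard setting; the activation $\sigma$, the auxiliary function $\rho$, the input dimension $m$, and the constant $c_{\sigma,\rho}$ are notation assumed here rather than taken from the abstract.

$$\mathtt{NN}[\gamma](x) = \int_{\mathbb{R}^m \times \mathbb{R}} \gamma(a,b)\,\sigma(a\cdot x - b)\,\mathrm{d}a\,\mathrm{d}b, \qquad R[f](a,b) = \int_{\mathbb{R}^m} f(x)\,\overline{\rho(a\cdot x - b)}\,\mathrm{d}x.$$

Under an admissibility condition on the pair $(\sigma,\rho)$, one has $\mathtt{NN}[R[f]] = c_{\sigma,\rho}\, f$ with a nonzero constant $c_{\sigma,\rho}$, so that after normalization $\gamma = R[f]$ satisfies $\mathtt{NN}[\gamma]=f$.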

Joint Group Invariant Functions on Data-Parameter Domain Induce Universal Neural Networks

Oct 05, 2023

Abstract: The symmetry and geometry of input data are considered to be encoded in the internal data representation inside the neural network, but the specific encoding rule has been less investigated. By focusing on a joint group invariant function on the data-parameter domain, we present a systematic rule to find a dual group action on the parameter domain from a group action on the data domain. Further, we introduce generalized neural networks induced from the joint invariant functions, and present a new group theoretic proof of their universality theorems by using Schur's lemma. Since traditional universality theorems were demonstrated based on functional analytical methods, this study sheds light on the group theoretic aspect of the approximation theory, connecting geometric deep learning to abstract harmonic analysis.

Deep Ridgelet Transform: Voice with Koopman Operator Proves Universality of Formal Deep Networks

Oct 05, 2023

Abstract: We identify hidden layers inside a DNN with group actions on the data space, and formulate the DNN as a dual voice transform with respect to the Koopman operator, a linear representation of the group action. Based on group theoretic arguments, particularly by using Schur's lemma, we show a simple proof of the universality of those DNNs.

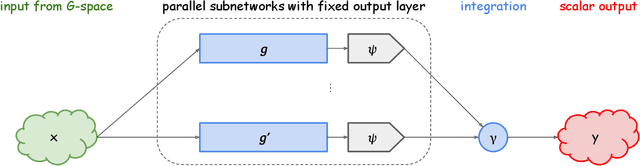

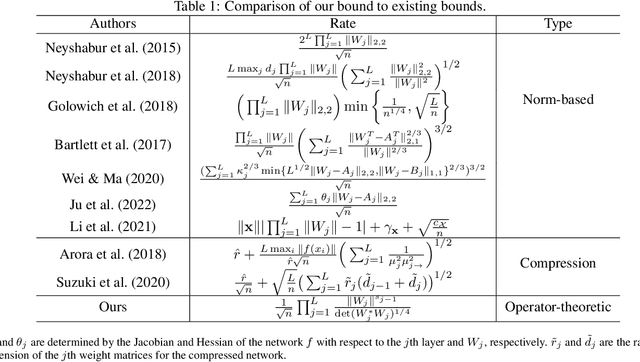

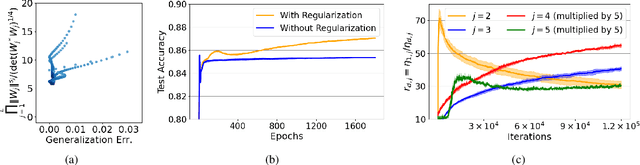

Koopman-Based Bound for Generalization: New Aspect of Neural Networks Regarding Nonlinear Noise Filtering

Feb 12, 2023

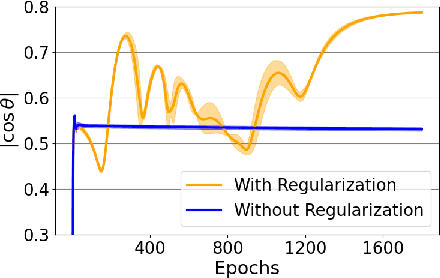

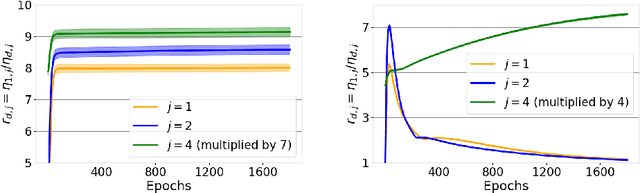

Abstract: We propose a new bound for generalization of neural networks using Koopman operators. Unlike most of the existing works, we focus on the role of the final nonlinear transformation of the networks. Our bound is described by the reciprocal of the determinant of the weight matrices and is tighter than existing norm-based bounds when the weight matrices do not have small singular values. According to existing theories about the low-rankness of the weight matrices, it may be counter-intuitive that we focus on the case where singular values of weight matrices are not small. However, motivated by the final nonlinear transformation, we can see that our result sheds light on a new perspective regarding a noise filtering property of neural networks. Since our bound comes from Koopman operators, this work also provides a connection between operator-theoretic analysis and generalization of neural networks. Numerical results support the validity of our theoretical results.
