Iman Soltani Bozchalooi

Targeted collapse regularized autoencoder for anomaly detection: black hole at the center

Jun 22, 2023

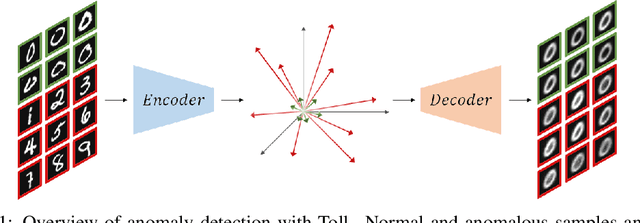

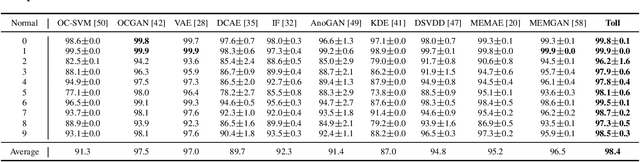

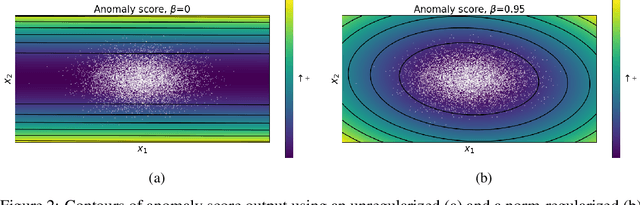

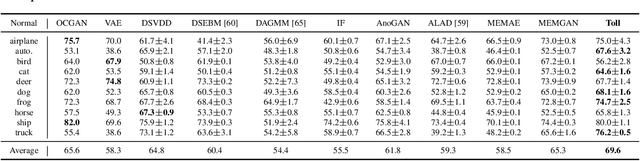

Abstract: Autoencoders have been extensively used in the development of recent anomaly detection techniques. Their use rests on the premise that, after the autoencoder is trained on normal data, anomalous inputs will exhibit a significant reconstruction error, which enables a clear differentiation between normal and anomalous samples. In practice, however, autoencoders are observed to generalize beyond the normal class and achieve a small reconstruction error on some anomalous samples. To improve performance, various techniques propose additional components and more sophisticated training procedures. In this work, we propose a remarkably straightforward alternative: instead of adding neural network components, involved computations, and cumbersome training, we complement the reconstruction loss with a computationally light term that regulates the norm of representations in the latent space. The simplicity of our approach minimizes the need for hyperparameter tuning and customization for new applications which, paired with its permissive data modality constraint, enhances the potential for successful adoption across a broad range of applications. We test the method on various visual and tabular benchmarks and demonstrate that the technique matches and frequently outperforms alternatives. We also provide a theoretical analysis and numerical simulations that help demonstrate the underlying process that unfolds during training and how it can help with anomaly detection. This mitigates the black-box nature of autoencoder-based anomaly detection algorithms and offers an avenue for further investigation of advantages, failure cases, and potential new directions.
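The idea of complementing the reconstruction loss with a light term on the latent norm can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not give the exact form of the regularizer, so the squared L2 norm, the weight `beta`, and the toy linear `encode`/`decode` maps are all hypothetical choices, not the paper's implementation.

```python
import numpy as np

def regularized_autoencoder_loss(x, encode, decode, beta=0.1):
    """Reconstruction loss plus a penalty on the norm of latent codes.

    Assumption: the penalty is the mean squared L2 norm of the latent
    representation, weighted by a hypothetical coefficient `beta`.
    """
    z = encode(x)                                   # latent codes, shape (n, d_latent)
    x_hat = decode(z)                               # reconstructions, shape (n, d_input)
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    latent_norm = np.mean(np.sum(z ** 2, axis=1))
    return recon + beta * latent_norm, recon, latent_norm

# Toy linear encoder/decoder, used only to make the sketch runnable.
rng = np.random.default_rng(0)
W_enc = rng.normal(scale=0.1, size=(8, 2))
W_dec = rng.normal(scale=0.1, size=(2, 8))
encode = lambda x: x @ W_enc
decode = lambda z: z @ W_dec

x = rng.normal(size=(16, 8))                        # stand-in for normal training data
total, recon, latent_norm = regularized_autoencoder_loss(x, encode, decode, beta=0.1)
```

In a real training loop the same scalar would be minimized with respect to the encoder and decoder parameters; the extra term costs one norm computation per batch, which is consistent with the abstract's description of it as computationally light.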

Anomaly Detection with Domain Adaptation

Jun 05, 2020

Abstract: We study the problem of semi-supervised anomaly detection with domain adaptation. Given a set of normal data from a source domain and a limited number of normal examples from a target domain, the goal is to obtain a well-performing anomaly detector in the target domain. To solve this problem, we propose Invariant Representation Anomaly Detection (IRAD), in which we first learn to extract a domain-invariant representation. The extraction is achieved by an across-domain encoder trained together with source-specific encoders and generators by adversarial learning. An anomaly detector is then trained using the learnt representations. We evaluate IRAD extensively on digit image datasets (MNIST, USPS and SVHN) and object recognition datasets (Office-Home). Experimental results show that IRAD outperforms baseline models by a wide margin across different datasets. We derive a theoretical lower bound for the joint error that explains the performance decay from overtraining, and also an upper bound for the generalization error.

Memory Augmented Generative Adversarial Networks for Anomaly Detection

Feb 07, 2020

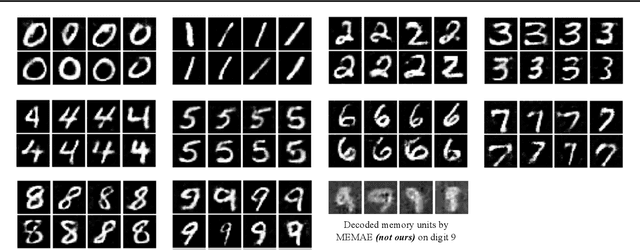

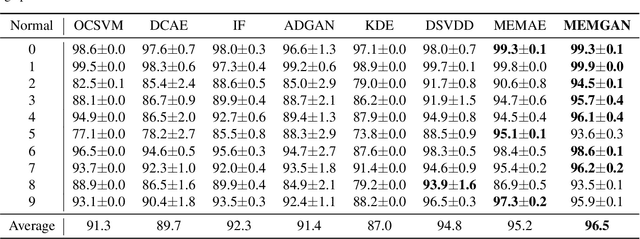

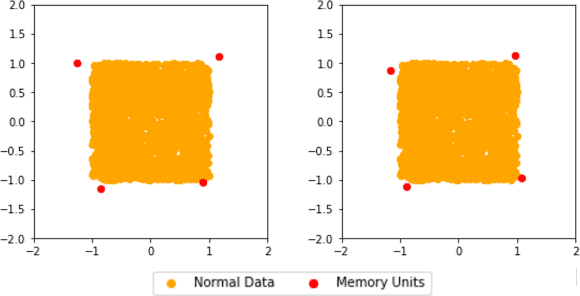

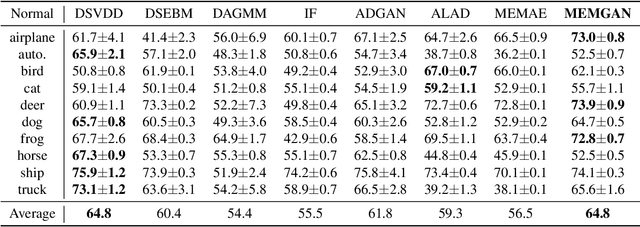

Abstract: In this paper, we present a memory-augmented algorithm for anomaly detection. Classical anomaly detection algorithms focus on learning to model and generate normal data, but their guarantees for detecting anomalous data are typically weak. The proposed Memory Augmented Generative Adversarial Networks (MEMGAN) interacts with a memory module for both the encoding and generation processes. Our algorithm is such that most of the *encoded* normal data are inside the convex hull of the memory units, while the abnormal data are isolated outside. This property leads to good reconstruction for normal data and poor reconstruction for abnormal data, and therefore provides a strong guarantee for anomaly detection. Decoded memory units in MEMGAN are more interpretable and disentangled than those of previous methods, which further demonstrates the effectiveness of the memory mechanism. Experimental results on twenty anomaly detection datasets derived from CIFAR-10 and MNIST show that MEMGAN achieves significant improvements over previous anomaly detection methods.
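To make the convex-hull property concrete, the sketch below shows one common way a memory module can confine a code to the convex hull of its memory units: a softmax-weighted combination. This is an assumption for illustration only; the abstract does not specify how MEMGAN addresses its memory, and the function name `project_onto_memory` and the dot-product scoring are hypothetical.

```python
import numpy as np

def project_onto_memory(query, memory):
    """Map each query to a convex combination of memory units.

    Assumption: weights come from a softmax over dot-product scores, so
    they are non-negative and sum to one, which places every output
    inside the convex hull of the rows of `memory`.
    """
    scores = query @ memory.T                        # similarity scores, shape (n, k)
    scores = scores - scores.max(axis=1, keepdims=True)  # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ memory, weights

rng = np.random.default_rng(0)
memory = rng.normal(size=(5, 3))                     # 5 hypothetical memory units in R^3
query = rng.normal(size=(4, 3))                      # 4 encoded samples
projected, weights = project_onto_memory(query, memory)
```

Under this sketch, a code that already lies inside the hull can be represented closely by the memory units, whereas a code far outside cannot, which mirrors the good versus poor reconstruction behaviour the abstract describes.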

Regularized Cycle Consistent Generative Adversarial Network for Anomaly Detection

Jan 18, 2020

Abstract: In this paper, we investigate algorithms for anomaly detection. Previous anomaly detection methods focus on modeling the distribution of non-anomalous data provided during training. However, this does not necessarily ensure the correct detection of anomalous data. We propose a new Regularized Cycle Consistent Generative Adversarial Network (RCGAN) in which deep neural networks are adversarially trained to better recognize anomalous samples. The approach leverages a penalty distribution together with a new definition of the loss function and a novel use of discriminator networks. It rests on a solid mathematical foundation, and proofs show that our approach has stronger guarantees for detecting anomalous examples than the current state of the art. Experimental results on both real-world and synthetic data show that our model leads to significant and consistent improvements over previous results on anomaly detection benchmarks. Notably, RCGAN improves on the state of the art on the KDDCUP, Arrhythmia, Thyroid, Musk and CIFAR10 datasets.
