Ilana Nisky

Effect of Performance Feedback Timing on Motor Learning for a Surgical Training Task

Aug 25, 2025

Abstract: Objective: Robot-assisted minimally invasive surgery (RMIS) has become the gold standard for a variety of surgical procedures, but the optimal method of training surgeons for RMIS is unknown. We hypothesized that real-time, rather than post-task, error feedback would lead to faster learning and fewer errors. Methods: Forty-two surgical novices learned a virtual version of the ring-on-wire task, a canonical task in RMIS training. We investigated the impact of feedback timing with multi-sensory (haptic and visual) cues in three groups: (1) real-time error feedback, (2) trial replay with error feedback, and (3) no error feedback. Results: Participant performance was evaluated based on the accuracy of ring position and orientation during the task. Participants who received real-time feedback outperformed the other groups in ring orientation. Additionally, participants who received feedback in replay outperformed participants who received no error feedback on ring orientation during long, straight path sections. There were no significant differences between groups in overall ring position, but participants who received real-time feedback outperformed the other groups in positional accuracy on tightly curved path sections. Conclusion: Adding real-time haptic and visual error feedback improves learning outcomes in a virtual surgical task compared with error feedback in replay or no error feedback at all. Significance: This work demonstrates that multi-sensory error feedback delivered in real time leads to better training outcomes than the same feedback delivered after task completion. This novel training method may enable surgical trainees to develop skills with greater speed and accuracy.
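The abstract scores performance by the accuracy of ring position and orientation but does not define the metrics. As a hedged illustration only, one plausible per-sample definition is the distance from the ring center to the nearest point on a polyline model of the wire, plus the angle between the ring's axis and the local wire tangent. The polyline representation and the names `nearest_segment` and `ring_errors` are hypothetical and are not the authors' definitions.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec) -> float:
    return math.sqrt(_dot(a, a))


def nearest_segment(path: Sequence[Vec], p: Vec) -> Tuple[float, Vec]:
    """Return (distance, unit tangent) for the wire segment closest to point p."""
    best = (math.inf, (1.0, 0.0, 0.0))
    for a, b in zip(path, path[1:]):
        ab = _sub(b, a)
        length_sq = _dot(ab, ab)
        if length_sq == 0.0:
            continue  # skip degenerate (zero-length) segments
        # Project p onto the segment and clamp the parameter to [0, 1].
        t = max(0.0, min(1.0, _dot(_sub(p, a), ab) / length_sq))
        closest = (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])
        d = _norm(_sub(p, closest))
        if d < best[0]:
            seg_len = math.sqrt(length_sq)
            best = (d, (ab[0] / seg_len, ab[1] / seg_len, ab[2] / seg_len))
    return best


def ring_errors(path: Sequence[Vec], ring_center: Vec, ring_axis: Vec) -> Tuple[float, float]:
    """Hypothetical per-sample errors: position (distance) and orientation (degrees).

    The wire should pass through the ring along its axis, so the orientation
    error is the angle between the ring axis and the local wire tangent,
    ignoring the axis sign.
    """
    dist, tangent = nearest_segment(path, ring_center)
    cos_angle = abs(_dot(ring_axis, tangent)) / _norm(ring_axis)
    angle = math.degrees(math.acos(min(1.0, cos_angle)))
    return dist, angle
```

Averaging these per-sample values over a trial, or over only the straight or tightly curved sections, would give section-level scores of the kind the abstract compares; that aggregation is likewise an assumption.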

Advances in Compliance Detection: Novel Models Using Vision-Based Tactile Sensors

Jun 17, 2025

Abstract: Compliance is a critical parameter for describing objects in engineering, agriculture, and biomedical applications. Traditional compliance detection methods lack portability and scalability, rely on specialized and often expensive equipment, and are unsuitable for robotic applications. Moreover, existing neural network-based approaches using vision-based tactile sensors still suffer from insufficient prediction accuracy. In this paper, we propose two models, based on Long-term Recurrent Convolutional Networks (LRCNs) and Transformer architectures, that leverage RGB tactile images and other information captured by the vision-based sensor GelSight to predict compliance metrics accurately. We validate the performance of these models using multiple metrics and demonstrate their effectiveness in accurately estimating compliance. The proposed models exhibit significant performance improvements over the baseline. Additionally, we investigate the correlation between sensor compliance and object compliance estimation and find that the compliance of objects harder than the sensor is more challenging to estimate.

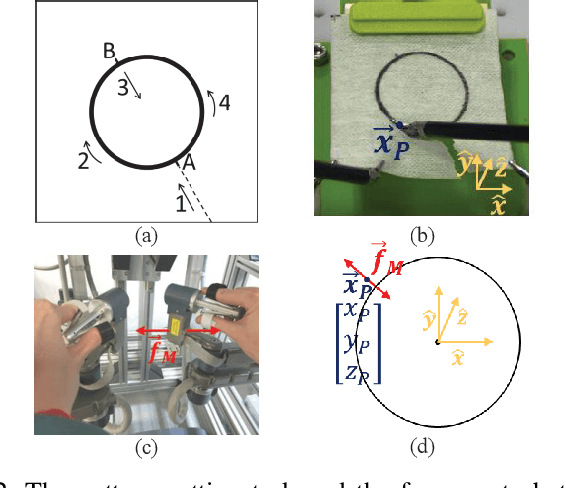

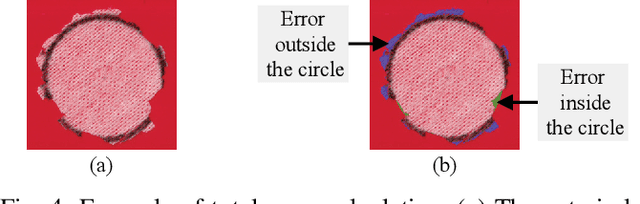

Video-Based Detection and Analysis of Errors in Robotic Surgical Training

Apr 28, 2025

Abstract: Robot-assisted minimally invasive surgeries offer many advantages but require complex motor skills that take surgeons years to master. Little is currently known about how surgeons acquire these robotic surgical skills. To help bridge this gap, we previously followed surgical residents over six months as they learned complex dry-lab surgical training tasks on a surgical robot. Errors are an important measure for self-training and for skill evaluation, but unlike in virtual simulation, errors in dry-lab training are difficult to monitor automatically. Here, we analyzed the errors in the ring tower transfer task, in which surgical residents moved a ring along a curved wire as quickly and accurately as possible. We developed an image-processing algorithm to detect collision errors and achieved a detection accuracy of ~95%. Using the detected errors and task completion time, we found that the surgical residents decreased both their completion time and their number of errors over the six months. This analysis provides a framework for detecting collision errors in similar surgical training tasks and sheds light on the learning process of surgical residents.
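The abstract does not describe the internals of the collision detector. Assuming a hypothetical per-frame detector that outputs a boolean "ring touching wire" flag, a minimal sketch of turning those flags into a count of discrete collision errors is to count the frames where contact begins, treating short contact-free gaps as part of the same event so that one flickering contact is not counted twice. The function name and the `min_gap` debouncing parameter are illustrative assumptions, not part of the published algorithm.

```python
from typing import Sequence


def count_collisions(contact: Sequence[bool], min_gap: int = 1) -> int:
    """Count collision events in a per-frame contact sequence (hypothetical).

    A new event starts when contact begins after at least `min_gap`
    consecutive contact-free frames; shorter gaps merge into one event.
    """
    if min_gap < 1:
        raise ValueError("min_gap must be at least 1")
    events = 0
    gap = min_gap  # treat the start of the video as a long contact-free stretch
    for touching in contact:
        if touching:
            if gap >= min_gap:
                events += 1  # contact begins after a long enough gap: new event
            gap = 0
        else:
            gap += 1
    return events
```

With `min_gap=1`, every transition from no contact to contact counts as a new error; larger values trade sensitivity for robustness to single-frame detection dropouts.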

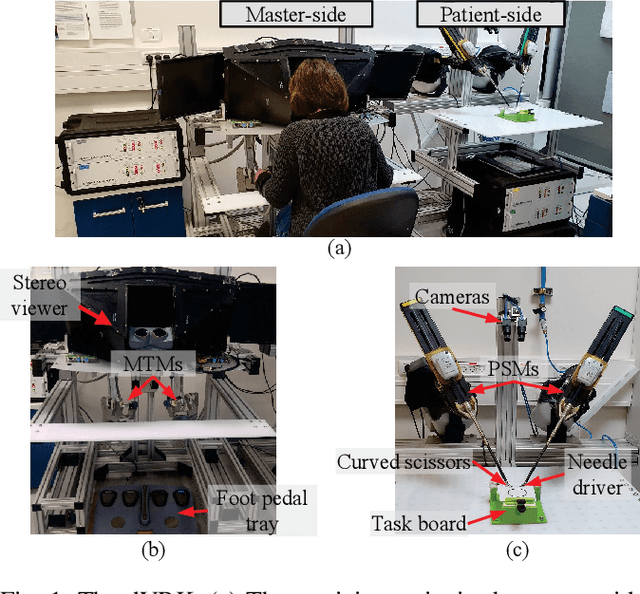

Dataset and Analysis of Long-Term Skill Acquisition in Robot-Assisted Minimally Invasive Surgery

Mar 27, 2025

Abstract: Objective: We aim to investigate long-term robotic surgical skill acquisition among surgical residents and the effects of training intervals and fatigue on performance. Methods: For six months, surgical residents participated in three training sessions each month, scheduled around a single 26-hour hospital shift: one before, one during, and one after the shift. In each training session, they performed three dry-lab training tasks: Ring Tower Transfer, Knot-Tying, and Suturing. We collected a comprehensive dataset that includes videos synchronized with kinematic data, activity tracking, and scans of the suturing pads. Results: The dataset comprises 972 trials performed by 18 residents from different surgical specializations. Participants demonstrated consistent performance improvement across all tasks. In addition, we found that between-shift learning and forgetting varied across metrics and tasks, and we found hints of possible fatigue effects. Conclusion: The findings from our first analysis shed light on the long-term learning processes of robotic surgical skills with extended intervals and varying levels of fatigue. Significance: This study lays the groundwork for future research aimed at optimizing training protocols and enhancing AI applications in surgery, ultimately contributing to improved patient outcomes. The dataset will be made available upon acceptance of our journal submission.
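As a quick consistency check on the dataset size, and assuming that every resident completed all three monthly sessions for six months and performed each of the three tasks once per session (the abstract does not state the number of trials per task), the reported total follows directly:

$$
18~\text{residents} \times 6~\text{months} \times 3~\tfrac{\text{sessions}}{\text{month}} \times 3~\tfrac{\text{tasks}}{\text{session}} = 972~\text{trials}
$$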

Haptic Guidance and Haptic Error Amplification in a Virtual Surgical Robotic Training Environment

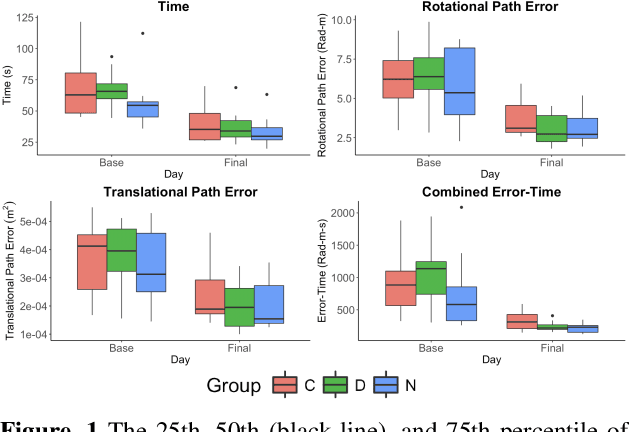

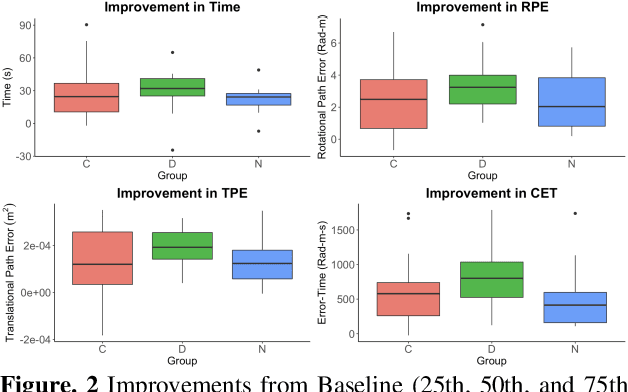

Sep 11, 2023

Abstract: Teleoperated robotic systems have introduced more intuitive control for minimally invasive surgery, but the optimal method for training remains unknown. Recent motor learning studies have demonstrated that exaggerating errors helps trainees learn to perform tasks with greater speed and accuracy. We hypothesized that training in a force field that pushes the operator away from a desired path would improve their performance on a virtual reality ring-on-wire task. Forty surgical novices trained under a no-force, guidance, or error-amplifying force field over five days. Completion time, translational and rotational path error, and combined error-time were evaluated under no force field on the final day. The groups differed significantly in combined error-time, with the guidance group performing the worst. Participants in the error-amplifying field showed the most improvement and did not plateau during training, suggesting that learning was still ongoing. Participants in the guidance field had the worst performance on the final day, consistent with the guidance hypothesis. Participants with high initial path error benefited more from guidance. Participants with high initial combined error-time benefited more from both guidance and error-amplifying force field training. Our results suggest that error-amplifying and error-reducing haptic training for robot-assisted telesurgery benefits trainees of different abilities differently.
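The difference between the guidance and error-amplifying conditions can be illustrated with a simple linear force law. As a hedged sketch, assuming the force is proportional to the tool's deviation from the nearest point on the desired path, a guidance field pushes the tool toward the path and an error-amplifying field pushes it away with the same magnitude. The function name, the `mode` strings, and the gain of 50 N/m are hypothetical and are not the parameters used in the study.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def force_field(position: Vec, nearest_path_point: Vec, mode: str, gain: float = 50.0) -> Vec:
    """Hypothetical linear force field (N) for a tool at `position` (m).

    mode: "guidance" pulls the tool toward the path, "amplify" pushes it
    away from the path, and "none" applies no force. `gain` is in N/m.
    """
    signs = {"guidance": -1.0, "amplify": 1.0, "none": 0.0}
    if mode not in signs:
        raise ValueError(f"unknown mode: {mode}")
    # Deviation vector from the desired path to the current tool position.
    error = tuple(p - q for p, q in zip(position, nearest_path_point))
    return tuple(signs[mode] * gain * e for e in error)
```

Using one gain with opposite signs keeps the two training conditions symmetric, so any difference in learning can be attributed to the direction of the force rather than its strength.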

Using LOR Syringe Probes as a Method to Reduce Errors in Epidural Analgesia -- a Robotic Simulation Study

May 07, 2023

Abstract: Epidural analgesia involves injecting anesthetics into the epidural space, using a Tuohy needle to advance through the layers of the epidural region and a "loss of resistance" (LOR) syringe to sense the stiffness of the surrounding tissue. Limited case experience of the anesthesiologist is one of the leading causes of accidental dural puncture and failed epidural, the two most common complications of epidural analgesia. Robotic simulation is an appealing solution to help train novices in this task. It also makes it possible to record kinematic information throughout the procedure. In this work, we used a bimanual haptic simulator that we designed and validated in previous work to explore the effect of LOR probing strategies on procedure outcomes. Our results indicate that most participants probed more with the LOR syringe in successful trials than in unsuccessful trials. Furthermore, this result was more prominent in the three layers preceding the epidural space. Our findings can assist in creating better instructions for training novices in epidural analgesia. We posit that instructing anesthesia residents to use the LOR syringe more extensively, especially when they are close to the epidural space, can help improve skill acquisition in this task.

Design and Validation of a Bimanual Haptic Epidural Needle Insertion Simulator

Jan 26, 2023

Abstract: Limited case experience among anesthesiologists is one of the leading causes of accidental dural puncture and failed epidural, the two most common complications of epidural analgesia. We designed a bimanual haptic simulator to train anesthesiologists and optimize epidural analgesia skill acquisition, and we present a validation study conducted with 15 anesthesiologists of different competency levels from several hospitals in Israel. Our simulator emulates the forces applied to the epidural (Tuohy) needle, held in one hand, and to the Loss of Resistance (LOR) syringe, held in the other hand. The resistance is computed from a model of the epidural-region layers that is parameterized by the patient's weight. We recorded the movements of both haptic devices and quantified outcome rates (successes, failed epidurals, and dural punctures), insertion strategies, and participants' questionnaire responses about the perceived realism of the simulation. We demonstrated good construct validity by showing that the simulator can distinguish between real-life novices and experts. Experienced users perceived the simulator as realistic and well targeted, indicating good face and content validity. We found differences in strategies between anesthesiologists of different competency levels and suggest trainee-based instruction in advanced training stages.

Robot-Assisted Surgical Training Over Several Days in a Virtual Surgical Environment with Divergent and Convergent Force Fields

Sep 23, 2021

Abstract: Surgical procedures require a high level of technical skill to ensure efficiency and patient safety. Due to the direct effect of surgeon skill on patient outcomes, the development of cost-effective and realistic training methods is imperative to accelerate skill acquisition. Teleoperated robotic devices allow for intuitive ergonomic control, but the learning curve for these systems remains steep. Recent studies in motor learning have shown that visual or physical exaggeration of errors helps trainees to learn to perform tasks faster and more accurately. In this study, we extended the work from two previous studies to investigate the performance of subjects in different force field training conditions, including convergent (assistive), divergent (resistive), and no force field (null).

* 2 pages, 2 figures, Hamlyn Symposium on Medical Robotics 2019

Predicting the Timing of Camera Movements From the Kinematics of Instruments in Robotic-Assisted Surgery Using Artificial Neural Networks

Sep 23, 2021

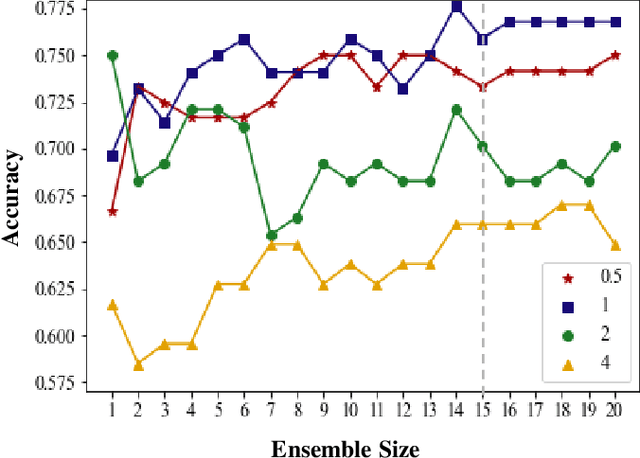

Abstract: Robotic-assisted surgeries benefit both surgeons and patients; however, surgeons frequently need to adjust the endoscopic camera to achieve good viewpoints. Surgeons cannot control the camera and the surgical instruments simultaneously, so these camera adjustments repeatedly interrupt the surgery. Autonomous camera control could help overcome this challenge, but most existing systems are reactive, for example, having the camera follow the surgical instruments. We propose a predictive approach that uses artificial neural networks to anticipate when camera movements will occur. We used the kinematic data of the surgical instruments, recorded during robotic-assisted surgical training on porcine models. We split the data into segments and labeled each segment as either immediately preceding a camera movement or not. Due to the large class imbalance, we trained an ensemble of networks, each on a balanced subset of the training data. We found that the instruments' kinematic data can be used to predict when camera movements will occur, and we evaluated the performance for different segment durations and ensemble sizes. We also studied how far in advance an upcoming camera movement can be predicted, and found that predicting a camera movement 0.25, 0.5, and 1 second before it occurred achieved 98%, 94%, and 84% accuracy, respectively, relative to the prediction of an imminent camera movement. This indicates that camera movement events can be predicted early enough to leave time for computing and executing an autonomous camera movement, and suggests that an autonomous camera controller for robot-assisted minimally invasive surgery (RAMIS) may one day be feasible.
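The class-imbalance strategy described above, training each member of an ensemble on a balanced subset of the data, can be sketched independently of the network architecture. In the hypothetical sketch below, each member receives all minority-class segments plus an equally sized random sample of majority-class segments, and the members' binary predictions are combined by majority vote. A nearest-centroid classifier stands in for the paper's neural networks, and the vote rule, function names, and feature representation are assumptions. It requires at least one sample of each class.

```python
import random
from typing import Callable, List, Sequence, Tuple

# (feature vector, label) where label 1 = segment precedes a camera movement
Sample = Tuple[List[float], int]
Model = Callable[[List[float]], int]


def balanced_subsets(data: Sequence[Sample], n_members: int, seed: int = 0) -> List[List[Sample]]:
    """Give each member all minority samples plus an equal-size draw of majority samples."""
    rng = random.Random(seed)
    pos = [s for s in data if s[1] == 1]
    neg = [s for s in data if s[1] == 0]
    minority, majority = (pos, neg) if len(pos) <= len(neg) else (neg, pos)
    return [minority + rng.sample(majority, len(minority)) for _ in range(n_members)]


def train_centroid(subset: Sequence[Sample]) -> Model:
    """Stand-in base learner: nearest class centroid (not the paper's networks)."""
    centroids = {}
    for label in (0, 1):
        rows = [x for x, y in subset if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def predict(x: List[float]) -> int:
        dist = {c: sum((a - b) ** 2 for a, b in zip(x, m)) for c, m in centroids.items()}
        return min(dist, key=dist.get)

    return predict


def ensemble_predict(models: Sequence[Model], x: List[float]) -> int:
    """Majority vote over the members' binary predictions (ties go to class 0)."""
    votes = sum(m(x) for m in models)
    return int(votes * 2 > len(models))
```

Because every member sees a different sample of the majority class, the ensemble as a whole still uses most of the majority data while no single member is biased toward it.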

Combining Time-Dependent Force Perturbations in Robot-Assisted Surgery Training

May 09, 2021

Abstract: Teleoperated robot-assisted minimally invasive surgery (RAMIS) offers many advantages over open surgery. However, there are still no guidelines for training skills in RAMIS. Motor learning theories have the potential to improve the design of RAMIS training, but they are based on simple movements that do not resemble the complex movements required in surgery. To fill this gap, we designed an experiment to investigate the effect of time-dependent force perturbations on the learning of a pattern-cutting surgical task. Thirty participants took part in the experiment in two groups: (1) a control group that trained without perturbations, and (2) a 1 Hz group that trained with 1 Hz periodic force perturbations that pushed each participant's hand inward and outward in the radial direction. We monitored their learning using four objective metrics and found that participants in the 1 Hz group learned to overcome the perturbations and improved their performance during training without impairing their performance after the perturbations were removed. Our results present an important step toward understanding the effect of adding perturbations to RAMIS training protocols and improving RAMIS training for the benefit of surgeons and patients.
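A 1 Hz periodic radial perturbation of the kind described above can be written as F(t) = A sin(2πft) r̂, where r̂ is the unit vector from a reference center to the hand. The sketch below is a hedged illustration: it assumes a planar (2D) workspace, radial direction measured from the center of the cutting pattern, a sinusoidal waveform, and an arbitrary amplitude. The abstract specifies only the 1 Hz frequency and the radial direction, so the remaining choices and the function name are hypothetical.

```python
import math
from typing import Tuple


def radial_perturbation(t: float, hand_xy: Tuple[float, float],
                        center_xy: Tuple[float, float],
                        amplitude: float = 1.0,
                        freq_hz: float = 1.0) -> Tuple[float, float]:
    """Hypothetical time-dependent force (N): a sinusoid along the radial direction.

    Positive values push the hand outward from `center_xy`; negative values
    push it inward.
    """
    dx, dy = hand_xy[0] - center_xy[0], hand_xy[1] - center_xy[1]
    r = math.hypot(dx, dy)
    if r == 0.0:
        return (0.0, 0.0)  # radial direction is undefined at the center
    magnitude = amplitude * math.sin(2.0 * math.pi * freq_hz * t)
    return (magnitude * dx / r, magnitude * dy / r)
```

Over one 1 s period, this force pushes outward for the first half-cycle and inward for the second, so a participant must continuously counteract it to stay on the pattern.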
