Anthony M. Jarc

Haptic Guidance and Haptic Error Amplification in a Virtual Surgical Robotic Training Environment

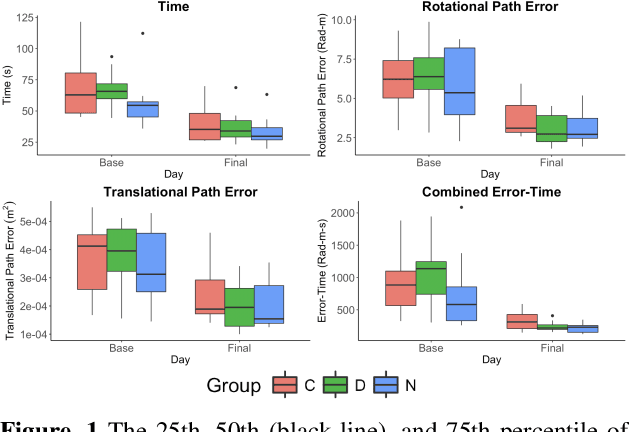

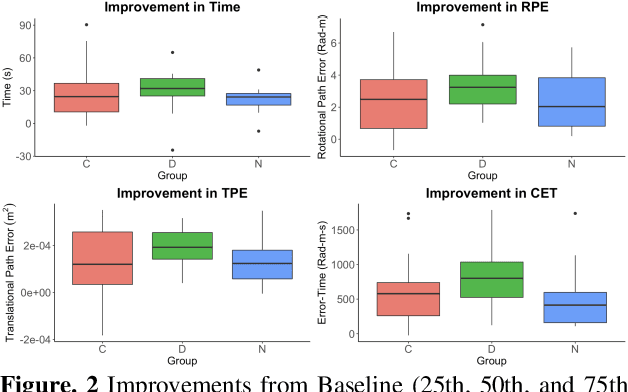

Sep 11, 2023

Abstract: Teleoperated robotic systems have introduced more intuitive control for minimally invasive surgery, but the optimal method for training remains unknown. Recent motor learning studies have demonstrated that exaggeration of errors helps trainees learn to perform tasks with greater speed and accuracy. We hypothesized that training in a force field that pushes the operator away from a desired path would improve their performance on a virtual reality ring-on-wire task. Forty surgical novices trained under a no-force, guidance, or error-amplifying force field over five days. Completion time, translational and rotational path error, and combined error-time were evaluated under no force field on the final day. The groups significantly differed in combined error-time, with the guidance group performing the worst. Error-amplifying field participants showed the most improvement and did not plateau in their performance during training, suggesting that learning was still ongoing. Guidance field participants had the worst performance on the final day, confirming the guidance hypothesis. Participants with high initial path error benefited more from guidance. Participants with high initial combined error-time benefited more from guidance and error-amplifying force field training. Our results suggest that error-amplifying and error-reducing haptic training for robot-assisted telesurgery benefits trainees of different abilities differently.

Robot-Assisted Surgical Training Over Several Days in a Virtual Surgical Environment with Divergent and Convergent Force Fields

Sep 23, 2021

Abstract: Surgical procedures require a high level of technical skill to ensure efficiency and patient safety. Due to the direct effect of surgeon skill on patient outcomes, the development of cost-effective and realistic training methods is imperative to accelerate skill acquisition. Teleoperated robotic devices allow for intuitive ergonomic control, but the learning curve for these systems remains steep. Recent studies in motor learning have shown that visual or physical exaggeration of errors helps trainees to learn to perform tasks faster and more accurately. In this study, we extended the work from two previous studies to investigate the performance of subjects in different force field training conditions, including convergent (assistive), divergent (resistive), and no force field (null).

* 2 pages, 2 figures, Hamlyn Symposium on Medical Robotics 2019
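The three training conditions named in the abstract above (convergent, divergent, and null) can be illustrated with a minimal sketch. It assumes a linear, spring-like field acting relative to the nearest sampled point on the desired path; the abstracts do not specify the force law or its gains, so `FIELD_GAINS`, `closest_path_point`, and `field_force` are hypothetical names and values chosen only for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical gains in N/m; the abstracts do not state the values used.
# Positive pulls toward the path, negative pushes away, zero applies no force.
FIELD_GAINS = {"null": 0.0, "convergent": 50.0, "divergent": -50.0}


def closest_path_point(position: Vec3, path: Sequence[Vec3]) -> Vec3:
    """Return the sampled path point nearest to the tool position."""
    return min(path, key=lambda p: sum((a - b) ** 2 for a, b in zip(p, position)))


def field_force(position: Vec3, path: Sequence[Vec3], condition: str) -> Vec3:
    """Assumed spring-like force: gain times the vector from tool to path."""
    gain = FIELD_GAINS[condition]
    target = closest_path_point(position, path)
    return tuple(gain * (t - x) for t, x in zip(target, position))


# Example: a straight path along x, with the tool 2 mm off the path in y.
path = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.0, 0.0)]
tool = (0.01, 0.002, 0.0)
for condition in ("null", "convergent", "divergent"):
    print(condition, field_force(tool, path, condition))
```

In this sketch the convergent field produces a force pointing back toward the path (reducing the error), while the divergent field produces an equal force in the opposite direction (amplifying it).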

Toward Force Estimation in Robot-Assisted Surgery using Deep Learning with Vision and Robot State

Nov 13, 2020

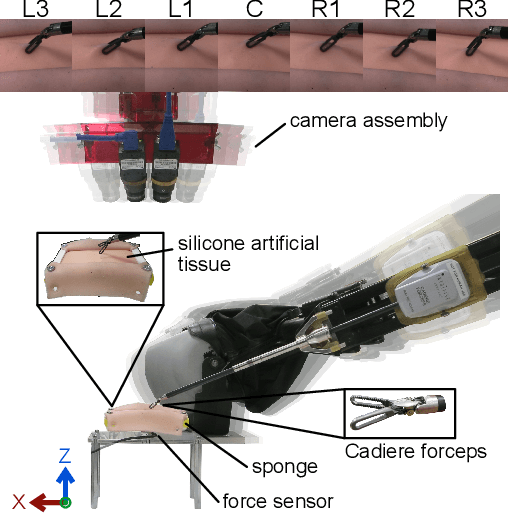

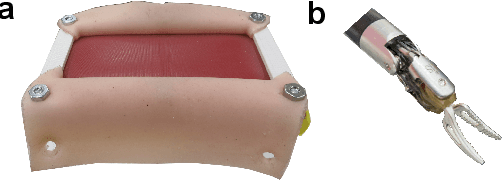

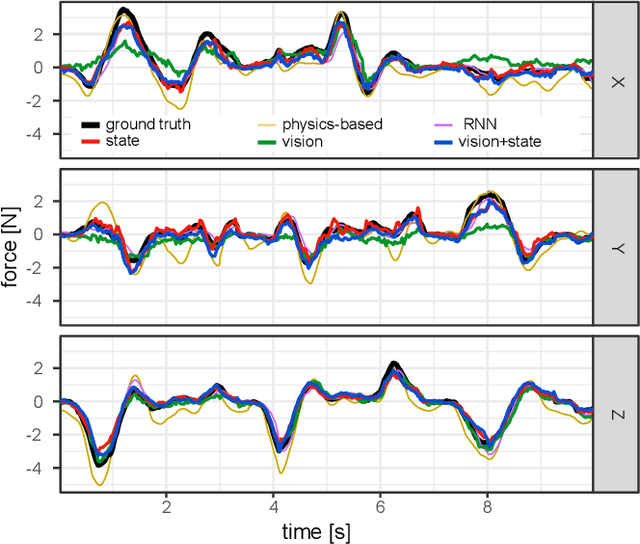

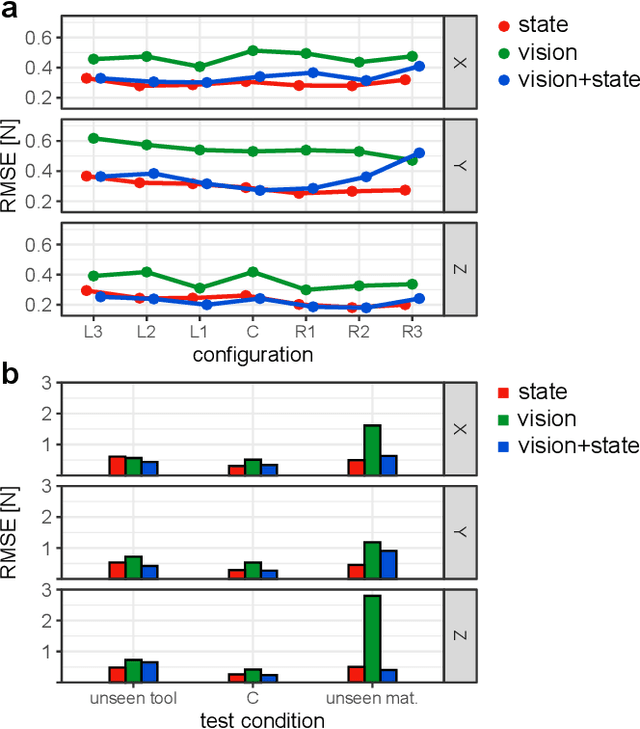

Abstract: Knowledge of interaction forces during teleoperated robot-assisted surgery could be used to enable force feedback to human operators and evaluate tissue handling skill. However, direct force sensing at the end-effector is challenging because it requires biocompatible, sterilizable, and cost-effective sensors. Vision-based deep learning using convolutional neural networks is a promising approach for providing useful force estimates, though questions remain about generalization to new scenarios and real-time inference. We present a force estimation neural network that uses RGB images and robot state as inputs. Using a self-collected dataset, we compared the network to variants that included only a single input type, and evaluated how they generalized to new viewpoints, workspace positions, materials, and tools. We found that vision-based networks were sensitive to shifts in viewpoints, while state-only networks were robust to changes in workspace. The network with both state and vision inputs had the highest accuracy for an unseen tool, and was moderately robust to changes in viewpoints. Through feature removal studies, we found that using only position features produced better accuracy than using only force features as input. The network with both state and vision inputs outperformed a physics-based baseline model in accuracy. It showed comparable accuracy but faster computation times than a baseline recurrent neural network, making it better suited for real-time applications.

Task Dynamics of Prior Training Influence Visual Force Estimation Ability During Teleoperation of a Minimally Invasive Surgical Robot

Apr 28, 2020

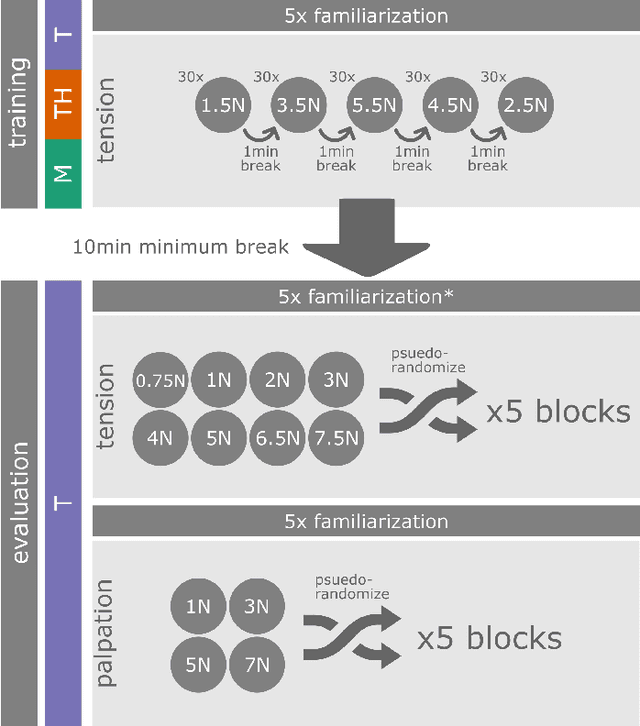

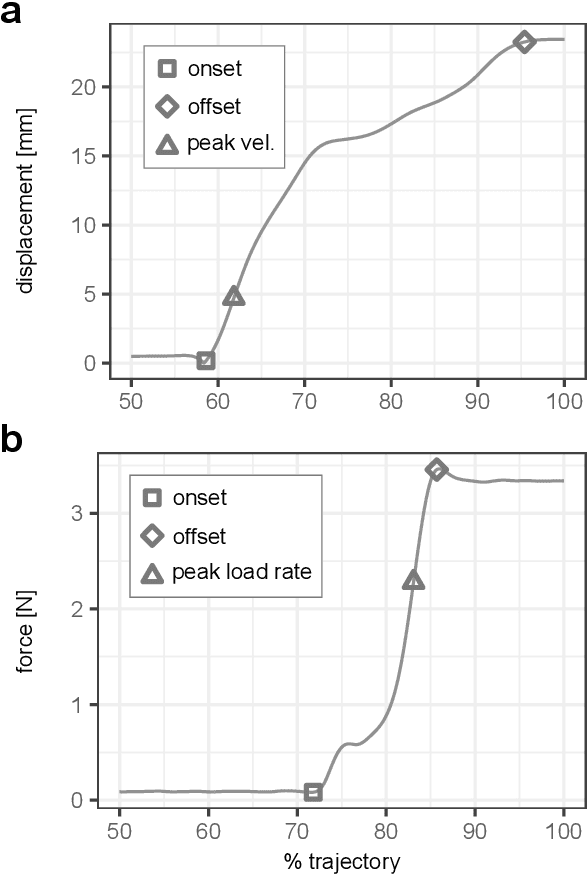

Abstract: The lack of haptic feedback in Robot-assisted Minimally Invasive Surgery (RMIS) is a potential barrier to safe tissue handling during surgery. Bayesian modeling theory suggests that surgeons with experience in open or laparoscopic surgery can develop priors of tissue stiffness that translate to better force estimation abilities during RMIS compared to surgeons with no experience. To test if prior haptic experience leads to improved force estimation ability in teleoperation, 33 participants were assigned to one of three training conditions: manual manipulation, teleoperation with force feedback, or teleoperation without force feedback, and learned to tension a silicone sample to a set of force values. They were then asked to perform the tension task, and a previously unencountered palpation task, to a different set of force values under teleoperation without force feedback. Compared to the teleoperation groups, the manual group had higher force error in the tension task outside the range of forces they had trained on, but showed better speed-accuracy functions in the palpation task at low force levels. This suggests that the dynamics of the training modality affect force estimation ability during teleoperation, with the prior haptic experience accessible if formed under the same dynamics as the task.
