Huiqiang Xie

Coarse-to-Fine Semantic Communication Systems for Text Transmission

Apr 02, 2025

Abstract: Achieving more powerful semantic representations and semantic understanding is one of the key problems in improving the performance of semantic communication systems. This work focuses on enhancing the semantic understanding of text data to improve the effectiveness of semantic exchange. We propose a novel semantic communication system for text transmission, in which semantic understanding is enhanced by coarse-to-fine processing. Specifically, a dual attention mechanism is proposed to capture both the coarse and fine semantic information. Numerical experiments show that the proposed system outperforms the benchmarks in terms of bilingual evaluation understudy (BLEU) score, sentence similarity, and robustness under various channel conditions.

Hybrid Digital-Analog Semantic Communications

May 21, 2024

Abstract: Digital and analog semantic communications (SemCom) face inherent limitations, such as data security concerns in analog SemCom and leveling-off and cliff-edge effects in digital SemCom. To overcome these challenges, we propose a novel SemCom framework and a corresponding system called HDA-DeepSC, which leverages a hybrid digital-analog approach for multimedia transmission. This is achieved through the introduction of digital-analog allocation and fusion modules. To strike a balance between data rate and distortion, we design new loss functions that take into account long-distance dependencies in the semantic distortion constraint, essential information recovery in the channel distortion constraint, and optimal bit stream generation in the rate constraint. Additionally, we propose denoising diffusion-based signal detection techniques, which involve carefully designed variance schedules and sampling algorithms to refine transmitted signals. Through extensive numerical experiments, we demonstrate that HDA-DeepSC is robust to channel variations and capable of supporting various communication scenarios. The proposed framework outperforms existing benchmarks in terms of peak signal-to-noise ratio and multi-scale structural similarity.

Semantic MIMO Systems for Speech-to-Text Transmission

May 13, 2024

Abstract: Semantic communications have been utilized to execute numerous intelligent tasks by transmitting task-related semantic information instead of bits. In this article, we propose a semantic-aware speech-to-text transmission system, named SAC-ST, for single-user multiple-input multiple-output (MIMO) and multi-user MIMO communication scenarios. In particular, a semantic communication system serving the speech-to-text task at the receiver is first designed, which compresses the semantic information and generates low-dimensional semantic features by leveraging the transformer module. In addition, a novel semantic-aware network is proposed to identify the critical semantic information and guarantee that it is recovered accurately, enabling transmission with high semantic fidelity. Furthermore, we extend SAC-ST with a neural network-enabled channel estimation network to mitigate the dependence on accurate channel state information and validate the feasibility of SAC-ST in practical communication environments. Simulation results show that the proposed SAC-ST outperforms the communication framework without the semantic-aware network for speech-to-text transmission over MIMO channels in terms of speech-to-text metrics, especially in the low signal-to-noise ratio (SNR) regime. Moreover, SAC-ST with the developed channel estimation network is comparable to SAC-ST with perfect channel state information.

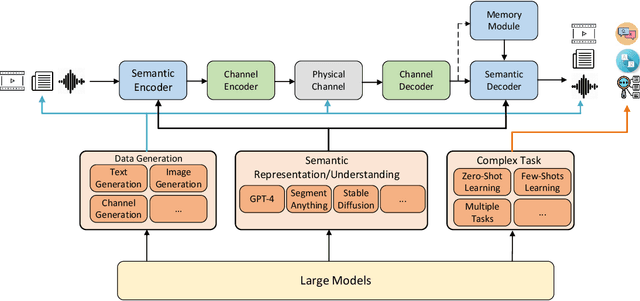

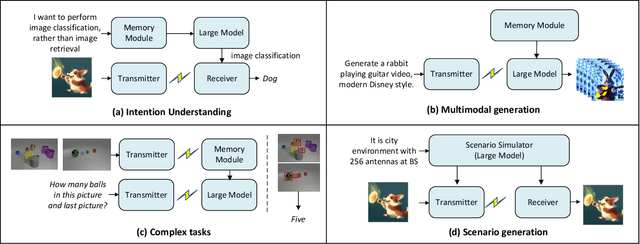

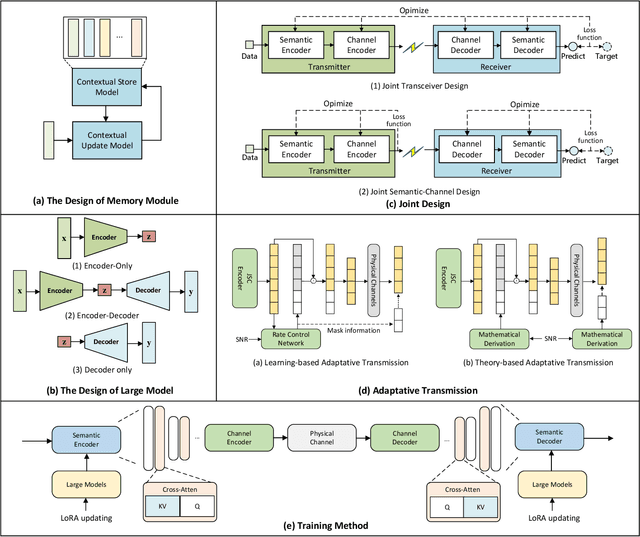

Towards Intelligent Communications: Large Model Empowered Semantic Communications

Feb 20, 2024

Abstract: Deep learning enabled semantic communications have shown great potential to significantly improve transmission efficiency and alleviate spectrum scarcity by effectively exchanging the semantics behind the data. Recently, the emergence of large models with billions of parameters has unveiled remarkable human-like intelligence, offering a promising avenue for advancing semantic communication by enhancing semantic and contextual understanding. This article systematically investigates large model-empowered semantic communication systems, from potential applications to system design. First, we propose a new semantic communication architecture that integrates large models into semantic communication through the introduction of a memory module. Then, typical applications are illustrated to show the benefits of the new architecture. In addition, we discuss the key designs in implementing the new semantic communication systems, from module design to system training. Finally, potential research directions are identified to advance large model-empowered semantic communications.

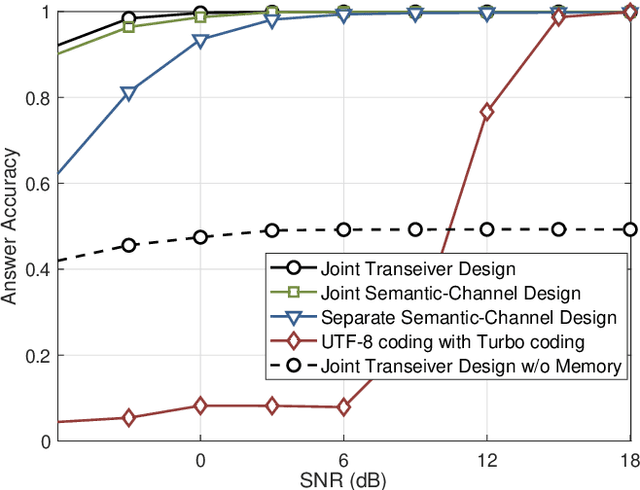

Semantic Communication with Memory

Mar 22, 2023

Abstract: While semantic communication transmits efficiently thanks to its strong capability to extract the essential semantic information, it is still far from intelligent or human-like communication. In this paper, we introduce an essential component, memory, into semantic communications to mimic human communications. In particular, we investigate a deep learning (DL) based semantic communication system with memory, named Mem-DeepSC, by considering the scenario question answering task. We exploit a universal Transformer based transceiver to extract the semantic information and introduce a memory module to process the context information. Moreover, we derive the relationship between the length of the semantic signal and the channel noise to validate the possibility of dynamic transmission. Specifically, we propose two dynamic transmission methods that enhance transmission reliability and reduce communication overhead by masking some unessential elements, which are identified by training the model with mutual information. Numerical results show that the proposed Mem-DeepSC is superior to benchmarks in terms of answer accuracy and transmission efficiency, i.e., the number of transmitted symbols.

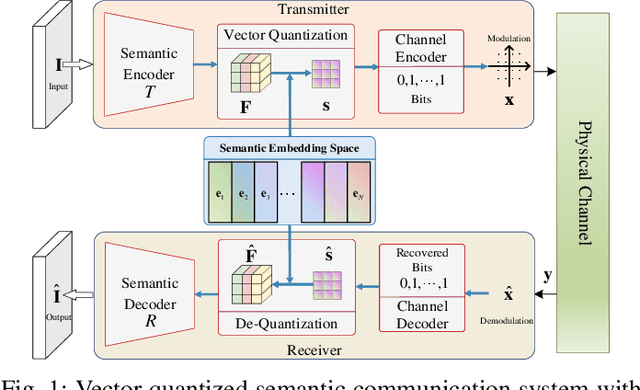

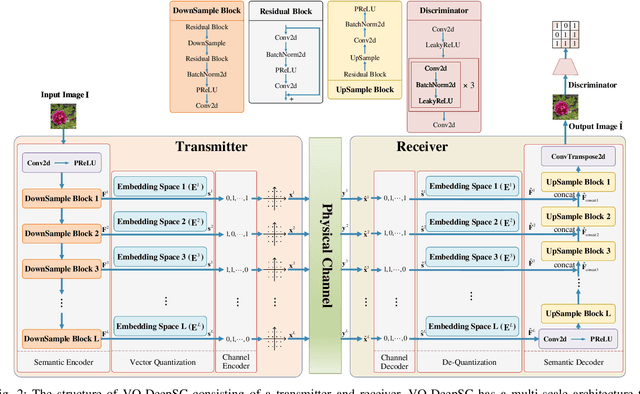

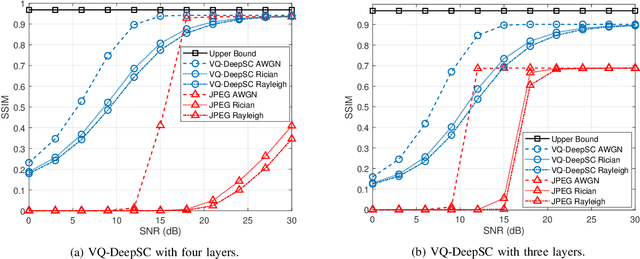

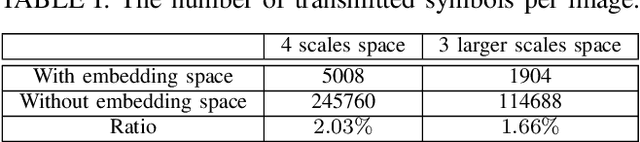

Vector Quantized Semantic Communication System

Sep 23, 2022

Abstract: Although analog semantic communication systems have received considerable attention in the literature, there is less work on digital semantic communication systems. In this paper, we develop a deep learning (DL)-enabled vector quantized (VQ) semantic communication system for image transmission, named VQ-DeepSC. Specifically, we propose a convolutional neural network (CNN)-based transceiver to extract multi-scale semantic features of images and introduce multi-scale semantic embedding spaces to perform semantic feature quantization, rendering the data compatible with digital communication systems. Furthermore, we employ adversarial training to improve the quality of received images by introducing a PatchGAN discriminator. Experimental results demonstrate that the proposed VQ-DeepSC outperforms traditional image transmission methods in terms of SSIM.
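
As a rough illustration of what "semantic feature quantization" against an embedding space can mean, the sketch below maps each feature vector to the index of its nearest codebook entry, so that only integer indices need to be sent over a digital link. This is a generic nearest-neighbor vector quantizer, not the VQ-DeepSC implementation: the names `nearest_codeword` and `quantize`, the toy codebook, and the use of squared Euclidean distance are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def nearest_codeword(feature: Sequence[float],
                     codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook entry closest to `feature`.

    Closeness is measured by squared Euclidean distance (an assumption
    for this sketch; other distances could be used).
    """
    def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(codebook)), key=lambda i: sq_dist(feature, codebook[i]))


def quantize(features: Sequence[Sequence[float]],
             codebook: Sequence[Sequence[float]]) -> Tuple[List[int], List[List[float]]]:
    """Quantize each feature vector to a codebook index.

    Returns the indices (what a transmitter would send) and the
    codebook vectors they select (what a receiver would look up).
    """
    indices = [nearest_codeword(f, codebook) for f in features]
    recovered = [list(codebook[i]) for i in indices]
    return indices, recovered


# Hypothetical toy codebook with three 2-D entries.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
# Hypothetical feature vectors, standing in for encoder outputs.
features = [[0.9, 1.2], [-0.1, 0.2], [-0.8, 0.7]]

indices, recovered = quantize(features, codebook)
print(indices)    # integer symbols suitable for digital modulation
print(recovered)  # codebook vectors selected by those indices
```

In a learned system the codebook would be trained jointly with the encoder and decoder, and one codebook per feature scale would be needed for multi-scale features; neither aspect is modeled here.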

Task-Oriented Multi-User Semantic Communications

Dec 19, 2021

Abstract: While semantic communications have shown potential in the single-modal, single-user case, their application to the multi-user scenario remains limited. In this paper, we investigate deep learning (DL) based multi-user semantic communication systems for transmitting single-modal data and multimodal data, respectively. We adopt three intelligent tasks, namely image retrieval, machine translation, and visual question answering (VQA), as the transmission goals of the semantic communication systems. We then propose a Transformer based framework to unify the structure of transmitters for different tasks. For the single-modal multi-user system, we propose two Transformer based models, named DeepSC-IR and DeepSC-MT, to perform image retrieval and machine translation, respectively. In this case, DeepSC-IR is trained to optimize the distance in embedding space between images, and DeepSC-MT is trained to minimize semantic errors by recovering the semantic meaning of sentences. For the multimodal multi-user system, we develop a Transformer enabled model, named DeepSC-VQA, for the VQA task by extracting text-image information at the transmitters and fusing it at the receiver. In particular, a novel layer-wise Transformer is designed to help fuse multimodal data by adding connections between each of the encoder and decoder layers. Numerical results show that the proposed models are superior to traditional communications in terms of robustness to channels, computational complexity, transmission delay, and task-execution performance on various task-specific metrics.

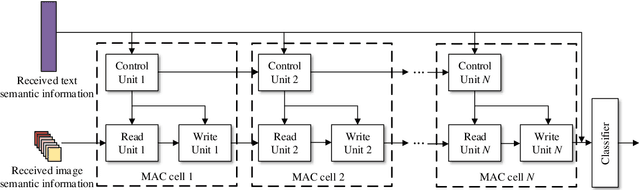

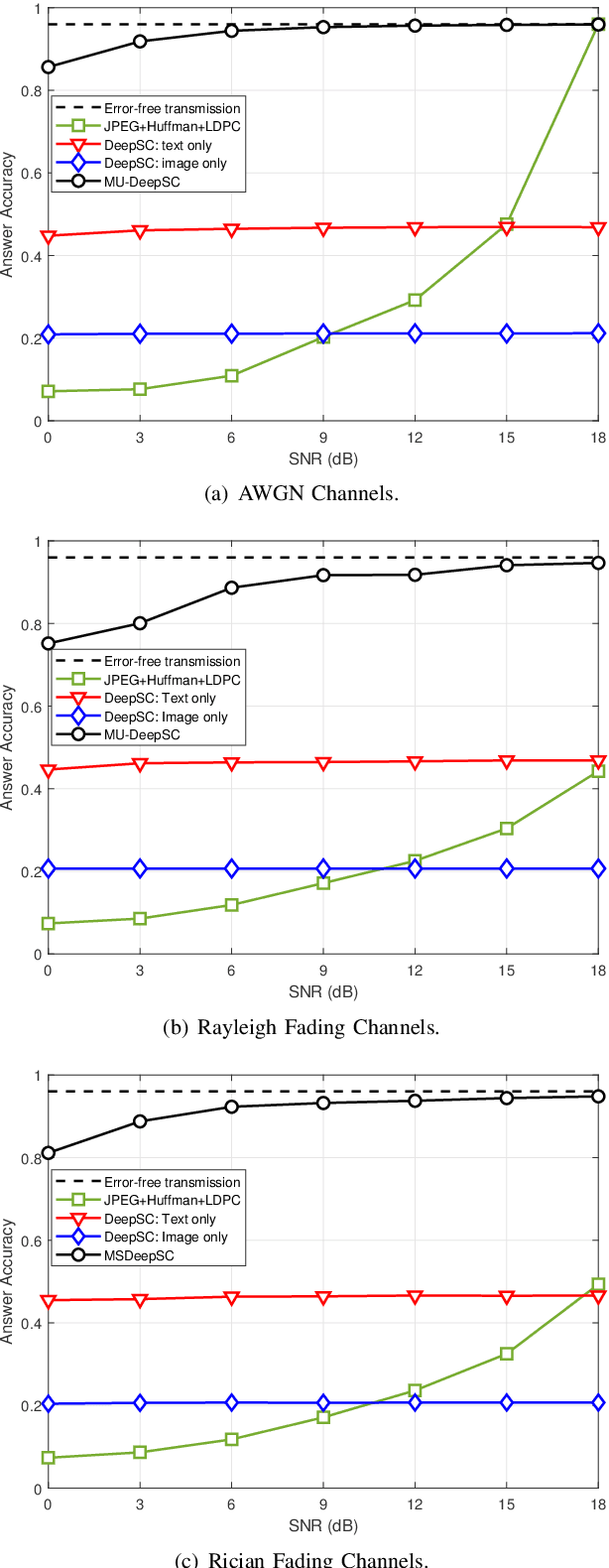

Task-Oriented Multi-User Semantic Communications for Multimodal Data

Aug 16, 2021

Abstract: Semantic communications focus on the successful transmission of information relevant to the transmission task. In this paper, we investigate multi-user transmission of multimodal data in a task-oriented semantic communication system. We take visual question answering (VQA) as the semantic transmission task, in which some users transmit images and the other users transmit text to inquire about the images. The receiver provides answers based on the images and text from the multiple users in the considered system. To exploit the correlation between the multimodal data from multiple users, we propose a deep neural network enabled multi-user semantic communication system, named MU-DeepSC, for the VQA task, in which the answer is highly dependent on the related image and text from the multiple users. In particular, based on the memory, attention, and composition (MAC) neural network, we jointly design the transceiver and merge the MAC network to capture the features from the correlated multimodal data for serving the transmission task. MU-DeepSC extracts the semantic information of the image and text from different users and then generates the corresponding answers. Simulation results validate the feasibility of the proposed MU-DeepSC, which is more robust to various channel conditions than traditional communication systems, especially in the low signal-to-noise ratio (SNR) regime.
