Towards Intelligent Communications: Large Model Empowered Semantic Communications

Feb 20, 2024

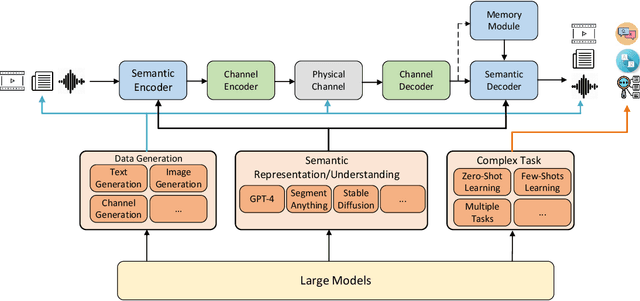

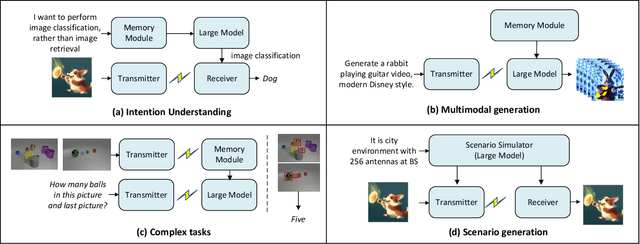

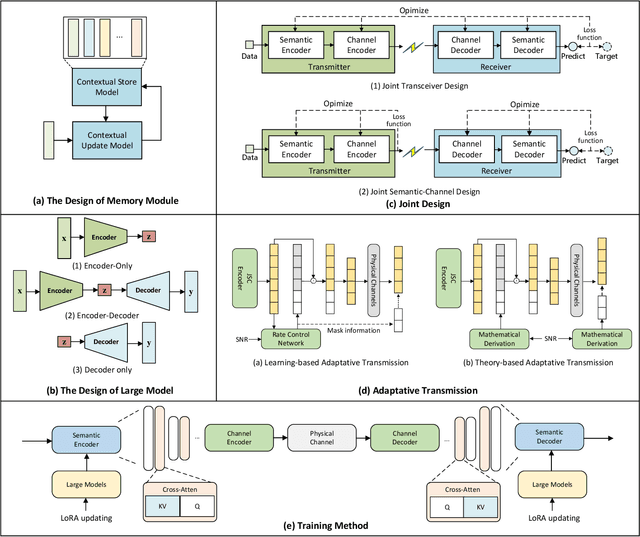

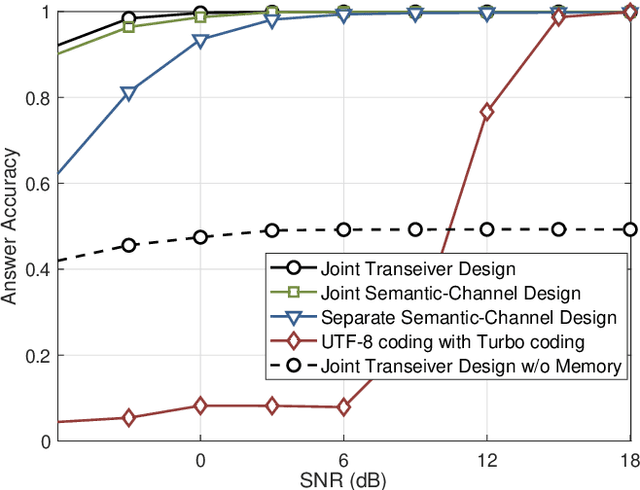

Deep learning-enabled semantic communications have shown great potential to improve transmission efficiency and alleviate spectrum scarcity by exchanging the semantics behind the data. Recently, large models with billions of parameters have demonstrated remarkable human-like intelligence, offering a promising avenue for advancing semantic communication by enhancing semantic and contextual understanding. This article systematically investigates large model-empowered semantic communication systems, from potential applications to system design. First, we propose a new semantic communication architecture that integrates large models into semantic communication through the introduction of a memory module. Then, typical applications are illustrated to show the benefits of the new architecture. In addition, we discuss the key design considerations in implementing the new semantic communication systems, from module design to system training. Finally, potential research directions are identified to advance large model-empowered semantic communications.
