Huanjun Wu

Non-Imaging Medical Data Synthesis for Trustworthy AI: A Comprehensive Survey

Sep 17, 2022

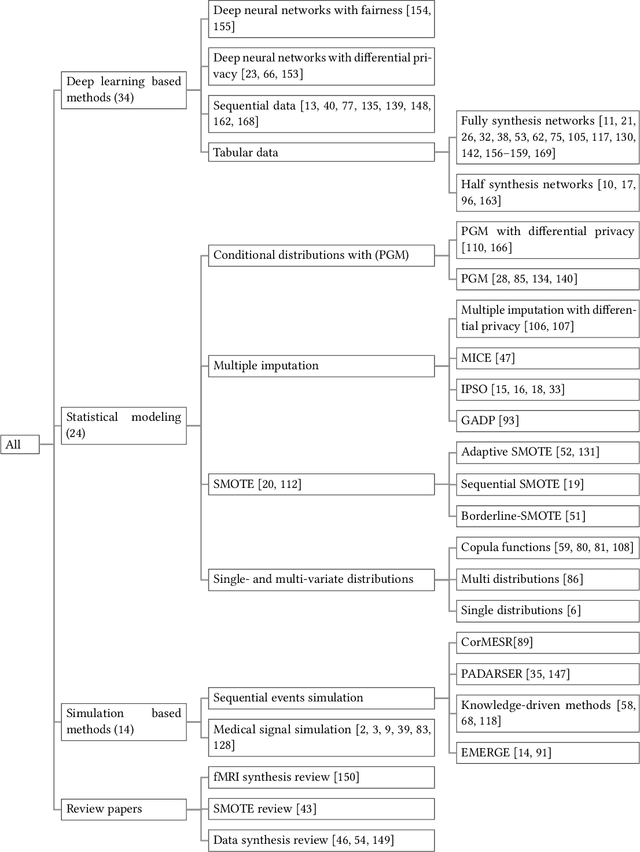

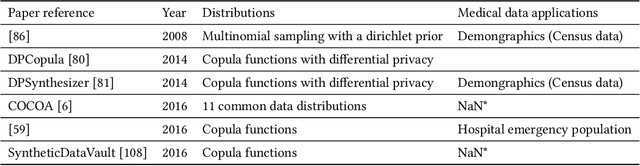

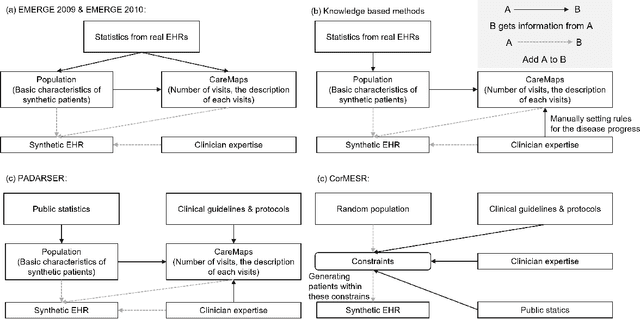

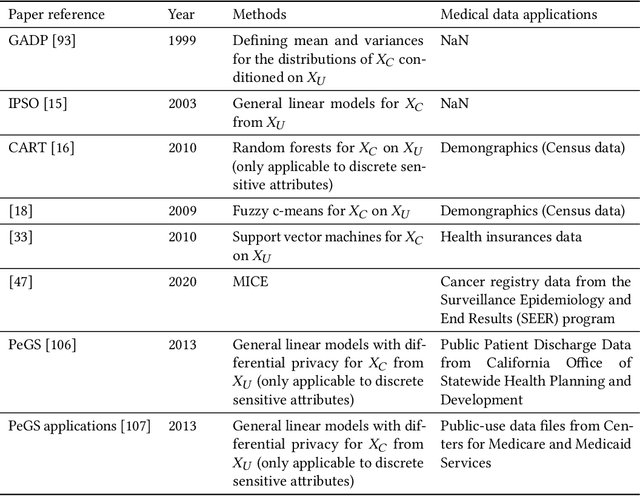

Abstract: Data quality is the key factor for the development of trustworthy AI in healthcare. A large volume of curated datasets with controlled confounding factors can help improve the accuracy, robustness and privacy of downstream AI algorithms. However, access to good-quality datasets is limited by the technical difficulty of data acquisition, and large-scale sharing of healthcare data is hindered by strict ethical restrictions. Data synthesis algorithms, which generate data with a distribution similar to that of real clinical data, can serve as a potential solution to the scarcity of good-quality data during the development of trustworthy AI. However, state-of-the-art data synthesis algorithms, especially deep learning algorithms, focus more on imaging data while neglecting the synthesis of non-imaging healthcare data, including clinical measurements, medical signals and waveforms, and electronic healthcare records (EHRs). Thus, in this paper, we will review the synthesis algorithms, particularly for non-imaging medical data, with the aim of providing trustworthy AI in this domain. This tutorial-styled review paper will provide comprehensive descriptions of non-imaging medical data synthesis on aspects including algorithms, evaluations, limitations and future research directions.

Data and Physics Driven Learning Models for Fast MRI -- Fundamentals and Methodologies from CNN, GAN to Attention and Transformers

Apr 01, 2022

Abstract: Research studies have shown no qualms about using data driven deep learning models for downstream tasks in medical image analysis, e.g., anatomy segmentation and lesion detection, disease diagnosis and prognosis, and treatment planning. However, deep learning models are not the sovereign remedy for medical image analysis when the upstream imaging is not being conducted properly (with artefacts). This has been manifested in MRI studies, where the scanning is typically slow, prone to motion artefacts, with a relatively low signal to noise ratio, and poor spatial and/or temporal resolution. Recent studies have witnessed substantial growth in the development of deep learning techniques for propelling fast MRI. This article aims to (1) introduce the deep learning based data driven techniques for fast MRI including convolutional neural network and generative adversarial network based methods, (2) survey the attention and transformer based models for speeding up MRI reconstruction, and (3) detail the research in coupling physics and data driven models for MRI acceleration. Finally, we will demonstrate these techniques through a few clinical applications, explain the importance of data harmonisation and explainable models for such fast MRI techniques in multicentre and multi-scanner studies, and discuss common pitfalls in current research and recommendations for future research directions.

Fast MRI Reconstruction: How Powerful Transformers Are?

Jan 23, 2022

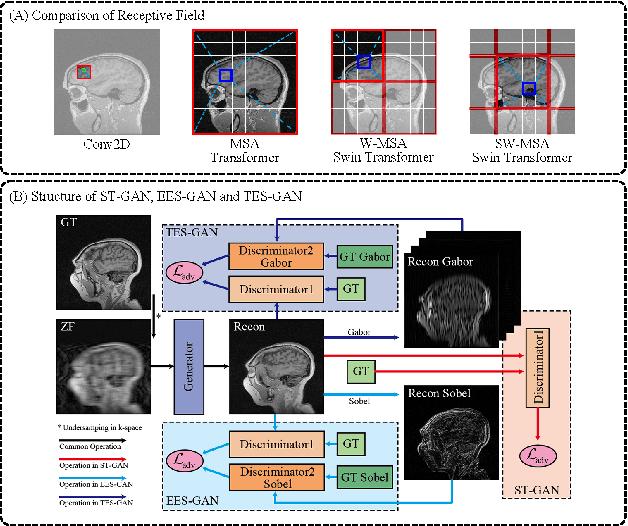

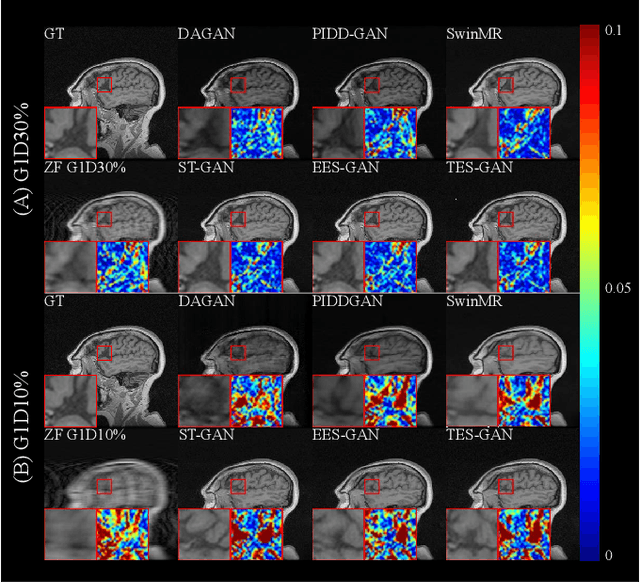

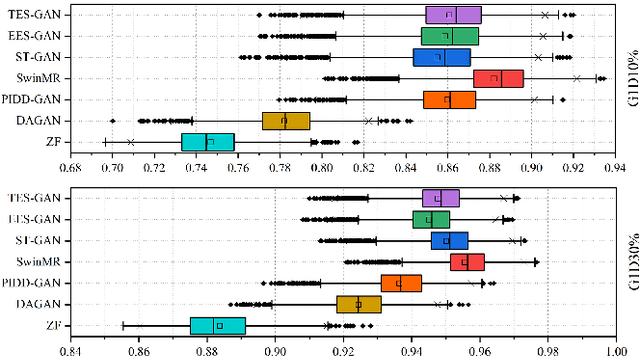

Abstract: Magnetic resonance imaging (MRI) is a widely used non-radiative and non-invasive method for clinical interrogation of organ structures and metabolism, with an inherently long scanning time. Methods by k-space undersampling and deep learning based reconstruction have been popularised to accelerate the scanning process. This work focuses on investigating how powerful transformers are for fast MRI by exploiting and comparing different novel network architectures. In particular, a generative adversarial network (GAN) based Swin transformer (ST-GAN) was introduced for the fast MRI reconstruction. To further preserve the edge and texture information, edge enhanced GAN based Swin transformer (EES-GAN) and texture enhanced GAN based Swin transformer (TES-GAN) were also developed, where a dual-discriminator GAN structure was applied. We compared our proposed GAN based transformers, a standalone Swin transformer and other convolutional neural network based GAN models in terms of the evaluation metrics PSNR, SSIM and FID. We showed that transformers work well for the MRI reconstruction from different undersampling conditions. The utilisation of GAN's adversarial structure improves the quality of images reconstructed when undersampled for 30% or higher.
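As a rough illustration of the k-space undersampling and PSNR evaluation mentioned in the abstract above, the following NumPy sketch zeroes out a random subset of k-space columns and measures how far the resulting zero-filled image is from the original. It is not the paper's pipeline: the function names, the 1D Cartesian mask, the `keep_fraction` and `center_fraction` parameters and their defaults are all assumptions chosen for illustration, and no learned reconstruction is involved.

```python
import numpy as np


def undersample_kspace(image, keep_fraction=0.3, center_fraction=0.08, seed=0):
    """Zero-filled reconstruction from a random 1D Cartesian mask.

    Hypothetical helper: mask design and defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    n_rows, n_cols = image.shape
    # Forward model: 2D FFT, shifted so low frequencies sit in the centre.
    kspace = np.fft.fftshift(np.fft.fft2(image))
    # Keep each k-space column with probability keep_fraction.
    mask_1d = rng.random(n_cols) < keep_fraction
    # Always keep a small band of central (low-frequency) columns.
    n_center = max(1, int(round(center_fraction * n_cols)))
    start = n_cols // 2 - n_center // 2
    mask_1d[start:start + n_center] = True
    mask = np.broadcast_to(mask_1d, (n_rows, n_cols))
    # Unsampled entries are set to zero before the inverse transform.
    zero_filled = np.fft.ifft2(np.fft.ifftshift(kspace * mask))
    return np.abs(zero_filled), mask


def psnr(reference, estimate):
    """Peak signal-to-noise ratio in dB, using the reference's value range."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    data_range = reference.max() - reference.min()
    return 10.0 * np.log10(data_range ** 2 / mse)
```

A learned model such as the GAN based transformers described above would take the zero-filled image (or the undersampled k-space) as input and be scored against the fully sampled reference with metrics like this one.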

Swin Transformer for Fast MRI

Jan 10, 2022

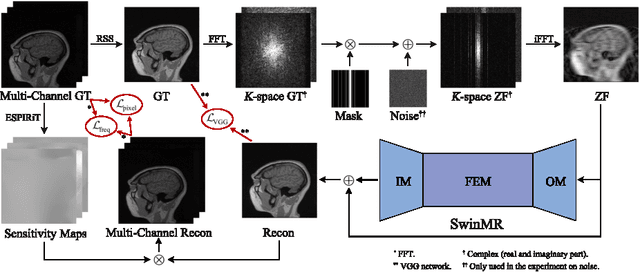

Abstract: Magnetic resonance imaging (MRI) is an important non-invasive clinical tool that can produce high-resolution and reproducible images. However, a long scanning time is required for high-quality MR images, which leads to exhaustion and discomfort of patients, inducing more artefacts due to voluntary movements of the patients and involuntary physiological movements. To accelerate the scanning process, methods by k-space undersampling and deep learning based reconstruction have been popularised. This work introduced SwinMR, a novel Swin transformer based method for fast MRI reconstruction. The whole network consisted of an input module (IM), a feature extraction module (FEM) and an output module (OM). The IM and OM were 2D convolutional layers and the FEM was composed of a cascade of residual Swin transformer blocks (RSTBs) and 2D convolutional layers. The RSTB consisted of a series of Swin transformer layers (STLs). The (shifted) window multi-head self-attention (W-MSA/SW-MSA) of the STL was computed within local, shifted windows, rather than over the whole image space as in the multi-head self-attention (MSA) of the original transformer. A novel multi-channel loss was proposed by using the sensitivity maps, which was shown to preserve more textures and details. We performed a series of comparative studies and ablation studies on the Calgary-Campinas public brain MR dataset and conducted a downstream segmentation experiment on the Multi-modal Brain Tumour Segmentation Challenge 2017 dataset. The results demonstrate that our SwinMR achieved high-quality reconstruction compared with other benchmark methods, and that it shows great robustness with different undersampling masks, under noise interruption and on different datasets. The code is publicly available at https://github.com/ayanglab/SwinMR.
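The windowed attention described in the abstract above can be sketched in a few lines of NumPy. This is a simplified illustration, not the SwinMR implementation (which is available at the repository linked above): the function names are hypothetical, attention is single-head with no learned projections, and the attention mask that Swin transformers apply after the cyclic shift is omitted.

```python
import numpy as np


def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping windows.

    Returns an array of shape (num_windows, window_size**2, C).
    H and W are assumed to be multiples of window_size.
    """
    h, w, c = x.shape
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size * window_size, c)


def window_self_attention(windows):
    """Self-attention computed independently inside each window.

    Simplification: queries, keys and values are the raw features.
    """
    d = windows.shape[-1]
    scores = windows @ windows.transpose(0, 2, 1) / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ windows


def shifted_window_partition(x, window_size):
    """Cyclically shift by half a window, then partition (SW-MSA style)."""
    shift = window_size // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)
```

Because each token attends only to the `window_size**2` tokens in its own window, the attention cost grows linearly with image size instead of quadratically; alternating regular and shifted partitions lets information cross window boundaries between layers.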
