Hongyan Zhou

Improving Robustness of Deep Convolutional Neural Networks via Multiresolution Learning

Sep 28, 2023

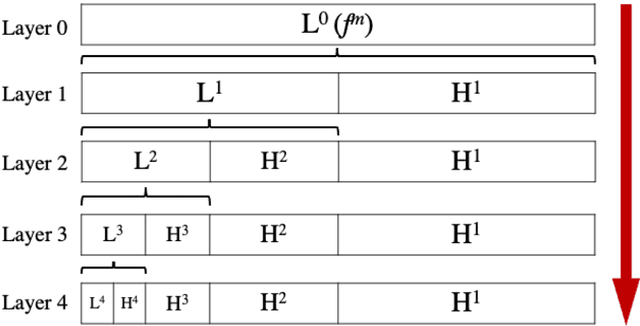

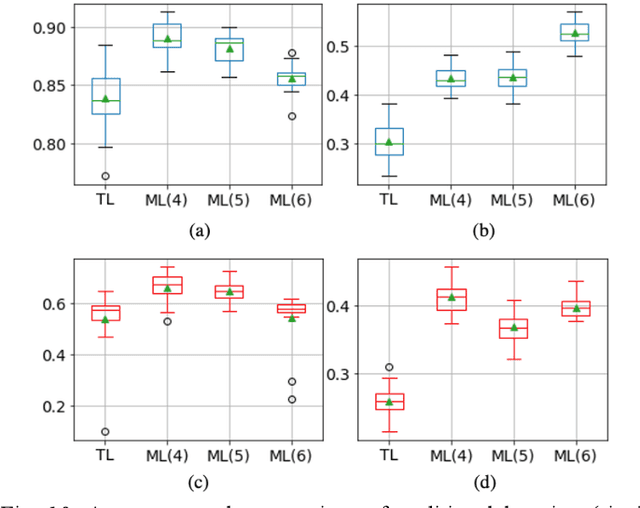

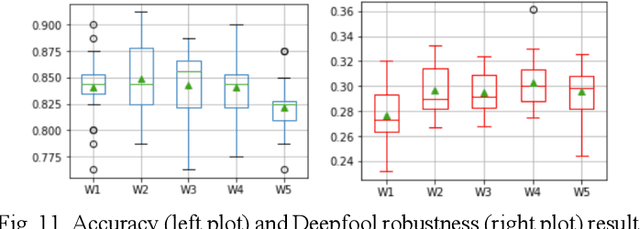

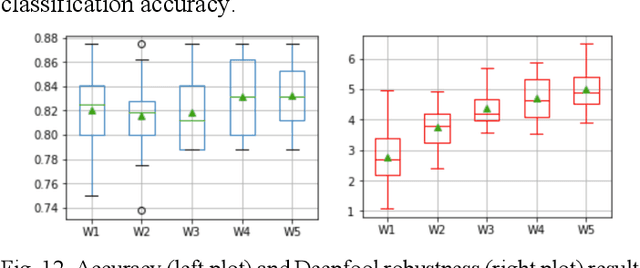

Abstract: The current learning process of deep learning, regardless of the deep neural network (DNN) architecture and/or learning algorithm used, is essentially single-resolution training. We explore multiresolution learning and show that it can significantly improve the robustness of DNN models for both 1D signal and 2D signal (image) prediction problems. We demonstrate this improvement in terms of both noise and adversarial robustness, as well as with small training datasets. Our results also suggest that, with multiresolution learning, it may not be necessary to trade standard accuracy for robustness, which, interestingly, runs contrary to observations from the traditional single-resolution learning setting.
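
The abstract does not say how the multiresolution views are built or scheduled. As a minimal sketch, assuming Haar-style block averaging for a 1D signal and a coarse-to-fine curriculum, the code below produces progressively finer versions of the same input. The function names `haar_approximation` and `multiresolution_schedule` are hypothetical and are not the authors' implementation.

```python
import numpy as np

def haar_approximation(x, level):
    """Coarse view of a 1D signal at `level` (0 returns the original).

    Averaging over blocks of 2**level samples equals applying unnormalized
    Haar pairwise averaging `level` times. Each block mean is then repeated
    so the output keeps the input length and fits the same network input.
    """
    x = np.asarray(x, dtype=float)
    if level < 0:
        raise ValueError("level must be non-negative")
    block = 2 ** level
    if x.size % block != 0:
        raise ValueError("signal length must be divisible by 2**level")
    coarse = x.reshape(-1, block).mean(axis=1)  # one mean per block
    return np.repeat(coarse, block)             # piecewise-constant upsampling

def multiresolution_schedule(x, num_levels):
    """Hypothetical curriculum: coarsest view first, full resolution last."""
    return [haar_approximation(x, lvl) for lvl in range(num_levels, -1, -1)]
```

Under this assumption, a model would be trained for some epochs on each element of the schedule in order, ending on the original signal. How long each stage lasts, and whether coarser views are mixed back in later, are design choices the abstract does not specify.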

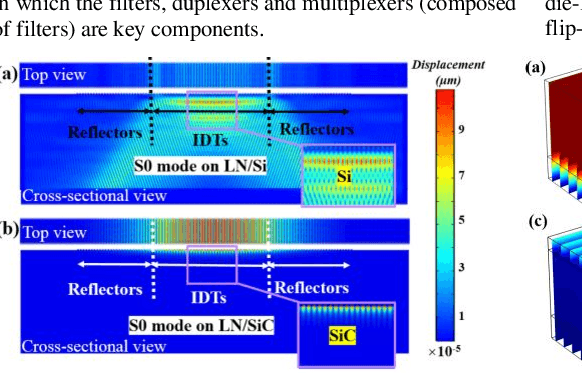

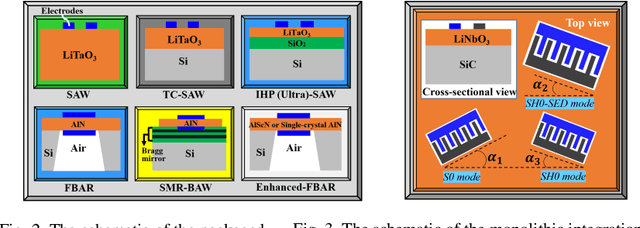

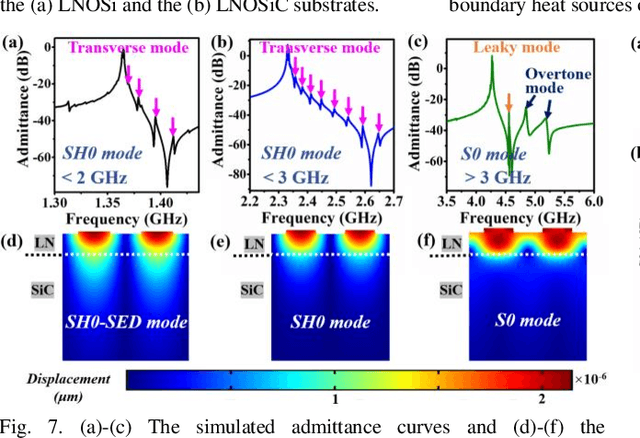

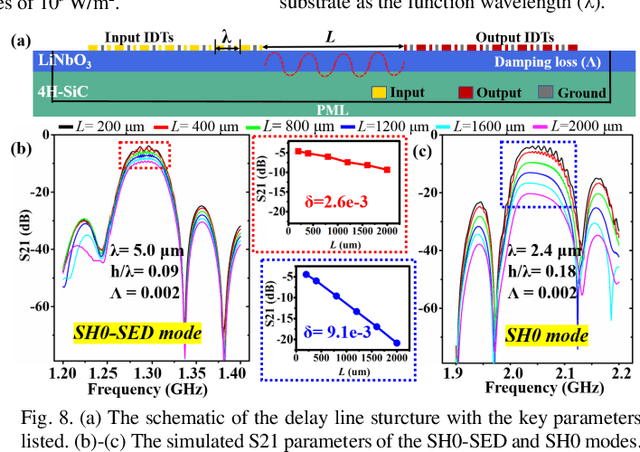

Monolithic Integrated Multiband Acoustic Devices on Heterogeneous Substrate for Sub-6 GHz RF-FEMs

Oct 20, 2021

Abstract: Monolithic integration of multiband (1.4 to 6.0 GHz) RF acoustic devices was successfully demonstrated within a single process flow using a lithium niobate (LN) thin film on silicon carbide (LNOSiC) substrate. A novel surface mode with a sinking energy distribution was proposed, exhibiting reduced propagation loss. Surface wave and Lamb wave resonators with suppressed transverse and leaky modes were demonstrated, showing scalable resonances from 1.4 to 5.7 GHz, electromechanical coupling coefficients (k²) between 7.9% and 29.3%, and a maximum Bode-Q (Qmax) above 3200. Arrayed filters with a small footprint (4.0 × 2.5 mm²) but diverse center frequencies (fc) and 3-dB fractional bandwidths (FBW) were achieved, with fc from 1.4 to 6.0 GHz, FBW between 3.3% and 13.3%, and insertion loss (IL) between 0.59 and 2.10 dB. These results may help advance hundred-filter sub-6 GHz RF front-end modules (RF-FEMs).

Model Adaptation via Model Interpolation and Boosting for Web Search Ranking

Jul 22, 2019

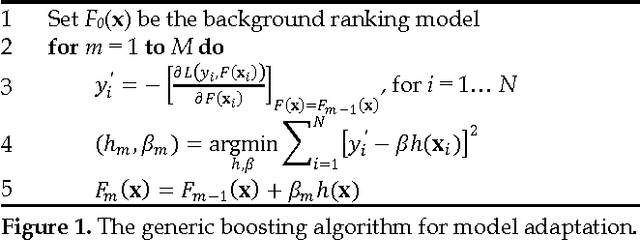

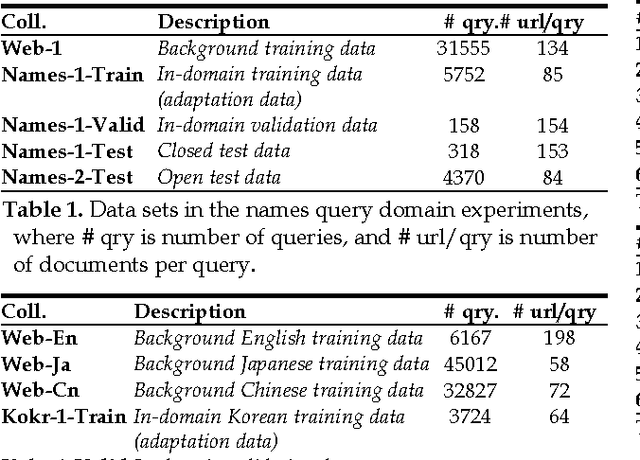

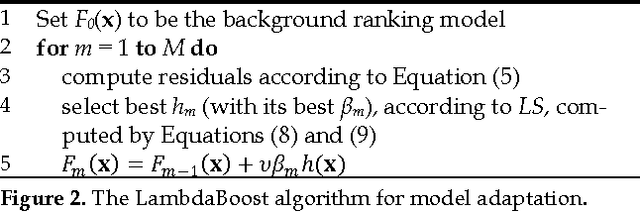

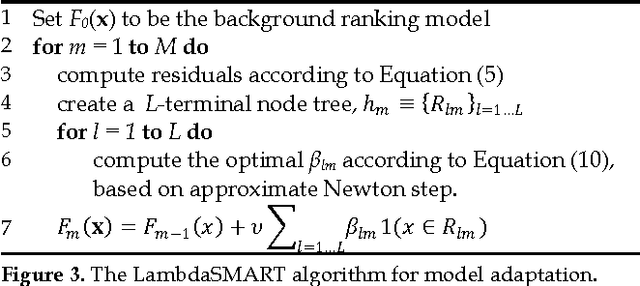

Abstract: This paper explores two classes of model adaptation methods for Web search ranking: model interpolation and error-driven learning approaches based on a boosting algorithm. The results show that model interpolation, though simple, achieves the best results on all open test sets, where the test data are very different from the training data. The tree-based boosting algorithm achieves the best performance on most closed test sets, where the test and training data are similar, but its performance drops significantly on the open test sets due to the instability of trees. Several methods are explored to improve the algorithm's robustness, with limited success.
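
The abstract does not give the exact form of model interpolation. A minimal sketch, assuming a linear combination of the scores of a background ranker (trained on large generic data) and an adapted ranker (trained on small in-domain data), with the weight λ chosen on held-out judged documents by DCG, might look like the following. All names (`interpolate`, `rank`, `dcg`, `pick_lambda`) and the λ grid are hypothetical, and rankers are modeled as plain functions of a feature vector.

```python
import math
from typing import Callable, Sequence

Scorer = Callable[[Sequence[float]], float]

def interpolate(background: Scorer, adapted: Scorer, lam: float) -> Scorer:
    """Linearly interpolate two rankers' scores; `lam` weights the background."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    def score(features: Sequence[float]) -> float:
        return lam * background(features) + (1.0 - lam) * adapted(features)
    return score

def rank(scorer: Scorer, docs: Sequence[Sequence[float]]) -> list[int]:
    """Document indices sorted by descending score (ties keep input order)."""
    return sorted(range(len(docs)), key=lambda i: scorer(docs[i]), reverse=True)

def dcg(ranking: Sequence[int], labels: Sequence[int], k: int = 10) -> float:
    """Discounted cumulative gain of the top-k positions, gain 2**label - 1."""
    return sum((2 ** labels[i] - 1) / math.log2(pos + 2)
               for pos, i in enumerate(ranking[:k]))

def pick_lambda(background: Scorer, adapted: Scorer,
                docs: Sequence[Sequence[float]], labels: Sequence[int],
                grid: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0)) -> float:
    """Return the first grid value with the highest DCG on held-out documents."""
    return max(grid, key=lambda lam: dcg(
        rank(interpolate(background, adapted, lam), docs), labels))
```

The appeal of this form, consistent with the abstract's observation, is that it adds only one tunable parameter on top of two existing models, so there is little to overfit when the adaptation data are small or the test data differ from the training data.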