Hongming Luo

Restoration of User Videos Shared on Social Media

Aug 26, 2022

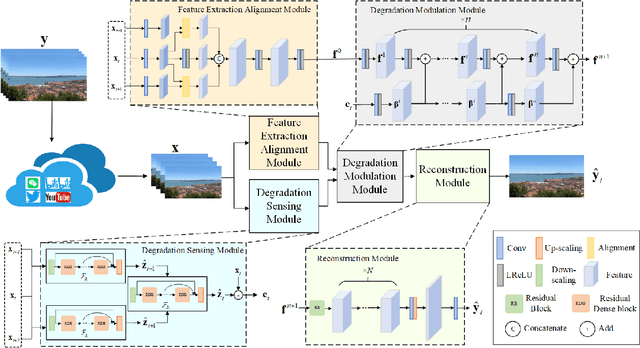

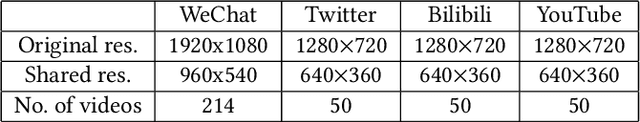

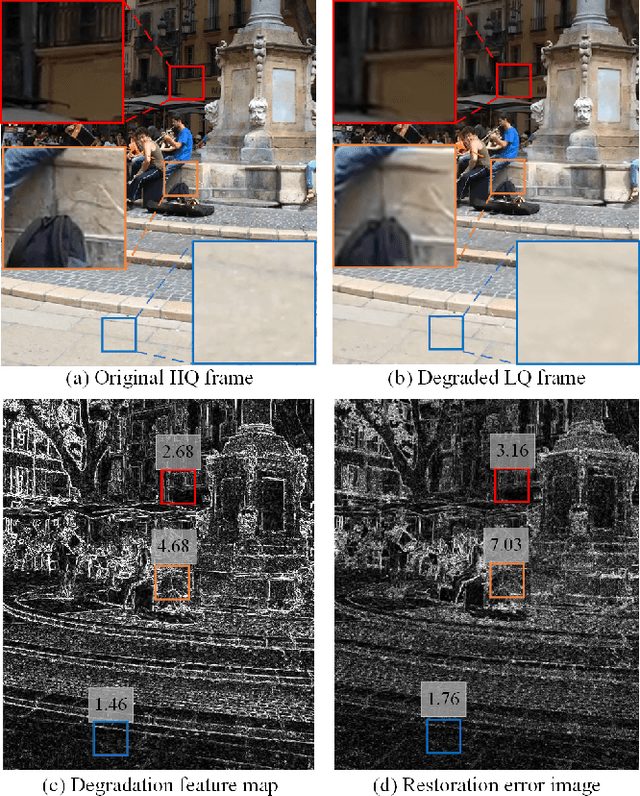

Abstract: User videos shared on social media platforms usually suffer from degradations caused by unknown proprietary processing procedures, which means that their visual quality is poorer than that of the originals. This paper presents a new general video restoration framework for the restoration of user videos shared on social media platforms. Most deep learning-based video restoration methods perform end-to-end mapping, where feature extraction is mostly treated as a black box, in the sense that the role a feature plays is often unknown. In contrast, our new method, termed Video restOration through adapTive dEgradation Sensing (VOTES), introduces the concept of a degradation feature map (DFM) to explicitly guide the video restoration process. Specifically, for each video frame, we first adaptively estimate its DFM to extract features representing the difficulty of restoring its different regions. We then feed the DFM to a convolutional neural network (CNN) to compute hierarchical degradation features that modulate an end-to-end video restoration backbone network, such that more attention is paid explicitly to areas that are potentially more difficult to restore, which in turn leads to enhanced restoration performance. We explain the design rationale of the VOTES framework and present extensive experimental results to show that the new VOTES method outperforms various state-of-the-art techniques both quantitatively and qualitatively. In addition, we contribute a large-scale real-world database of user videos shared on different social media platforms. Code and datasets are available at https://github.com/luohongming/VOTES.git
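The idea of letting degradation features modulate a backbone can be illustrated with a toy example. The sketch below assumes a simple per-pixel scale-and-shift modulation; this form, the function name `modulate`, and its parameters are illustrative assumptions, not the VOTES implementation, which is described in the paper and the linked repository.

```python
# Toy sketch of feature modulation (an assumed scale-and-shift form,
# not the actual VOTES architecture).

def modulate(features, scale, shift):
    """Modulate a 2-D feature map element-wise.

    features, scale, shift: lists of rows with identical shapes.
    Each output value is f * (1 + s) + b, so a zero scale and zero
    shift leave the backbone feature unchanged.
    """
    return [
        [f * (1.0 + s) + b for f, s, b in zip(f_row, s_row, b_row)]
        for f_row, s_row, b_row in zip(features, scale, shift)
    ]


if __name__ == "__main__":
    backbone_features = [[1.0, 2.0]]
    # Hypothetical degradation-derived parameters: the second position
    # is treated as harder to restore, so it is amplified and shifted.
    scale = [[0.0, 0.5]]
    shift = [[0.0, 1.0]]
    print(modulate(backbone_features, scale, shift))
```

In this toy form, regions with larger scale values receive proportionally stronger feature responses, which is one plausible way to direct more capacity to harder regions.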

Super-resolving Compressed Images via Parallel and Series Integration of Artifact Reduction and Resolution Enhancement

Mar 04, 2021

Abstract: In this paper, we propose a novel compressed image super-resolution (CISR) framework based on parallel and series integration of artifact removal and resolution enhancement. Based on maximum a posteriori inference for estimating a clean low-resolution (LR) input image and a clean high-resolution (HR) output image from down-sampled and compressed observations, we have designed a CISR architecture consisting of two deep neural network modules: the artifact reduction module (ARM) and the resolution enhancement module (REM). ARM and REM work in parallel, with both taking the compressed LR image as their inputs, while they also work in series, with REM taking the output of ARM as one of its inputs and ARM taking the output of REM as its other input. A unique property of our CISR system is that a single trained model is able to super-resolve LR images compressed by different methods to various qualities. This is achieved by exploiting deep neural networks' capacity for handling image degradations, and the parallel and series connections between ARM and REM to reduce the dependency on specific degradations. ARM and REM are trained simultaneously by the deep unfolding technique. Experiments are conducted on a mixture of JPEG and WebP compressed images without a priori knowledge of the compression type and compression factor. Visual and quantitative comparisons demonstrate the superiority of our method over state-of-the-art super-resolution methods. Code link: https://github.com/luohongming/CISR_PSI

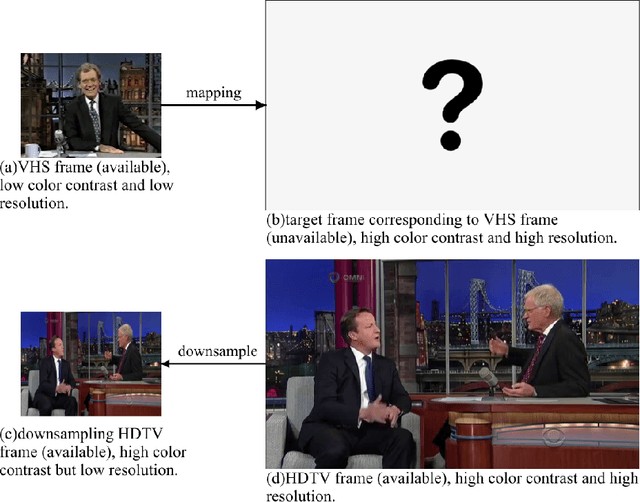

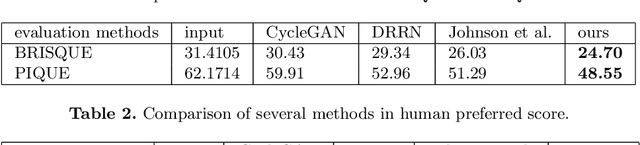

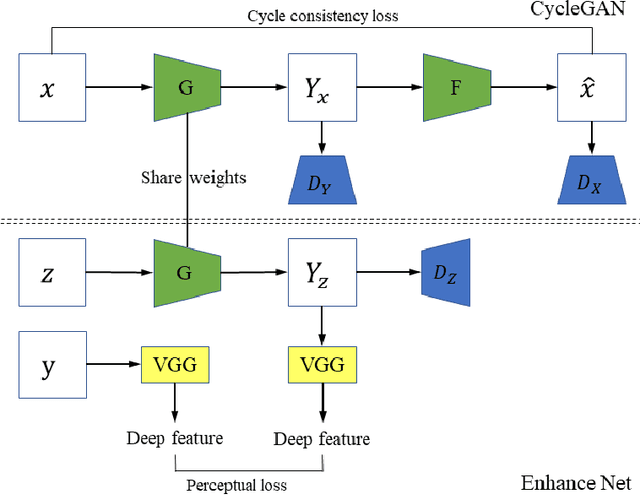

VHS to HDTV Video Translation using Multi-task Adversarial Learning

Jan 07, 2021

Abstract: There is a large amount of valuable video archives in Video Home System (VHS) format. However, due to their analog nature, their quality is often poor. Compared to high-definition television (HDTV), VHS video not only has a dull color appearance but also has a lower resolution and often appears blurry. In this paper, we focus on the problem of translating VHS video to HDTV video and have developed a solution based on a novel unsupervised multi-task adversarial learning model. Inspired by the success of generative adversarial networks (GANs) and CycleGAN, we employ cycle consistency loss, adversarial loss and perceptual loss together to learn a translation model. An important innovation of our work is the incorporation of a super-resolution model and a color transfer model to solve the unsupervised multi-task problem. To our knowledge, this is the first work dedicated to the study of the relation between VHS and HDTV and the first computational solution to translate VHS to HDTV. We present experimental results to demonstrate the effectiveness of our solution qualitatively and quantitatively.

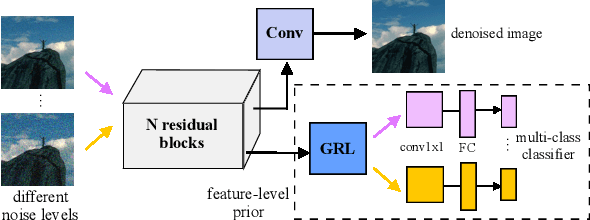

Learning Deep Image Priors for Blind Image Denoising

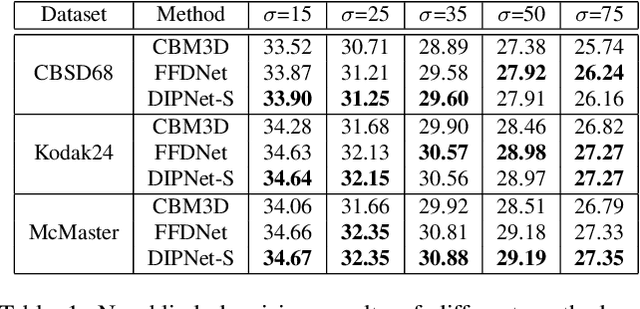

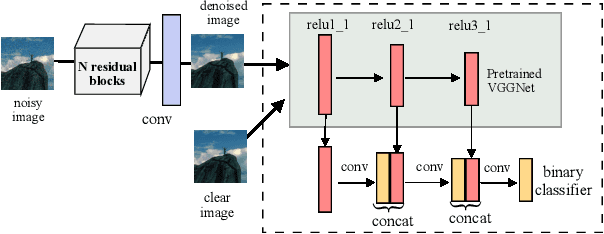

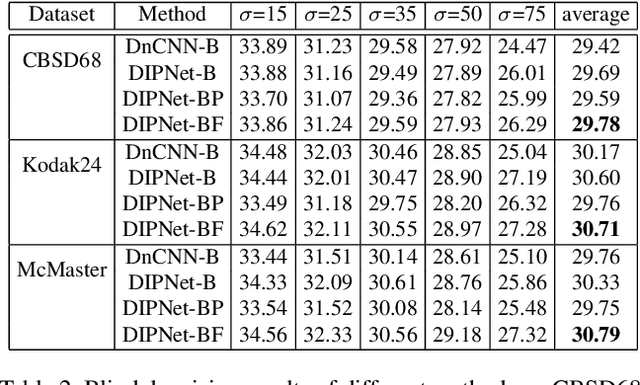

Jun 04, 2019

Abstract: Image denoising is the process of removing noise from noisy images, which is an image domain transfer task, i.e., from a single or several noise level domains to a photo-realistic domain. In this paper, we propose an effective image denoising method by learning two image priors from the perspective of domain alignment. We tackle the domain alignment on two levels: 1) the feature-level prior learns domain-invariant features for corrupted images with different noise levels; 2) the pixel-level prior pushes the denoised images toward the natural image manifold. The two image priors are based on $\mathcal{H}$-divergence theory and implemented by learning classifiers in an adversarial training manner. We evaluate our approach on multiple datasets. The results demonstrate the effectiveness of our approach for robust image denoising on both synthetic and real-world noisy images. Furthermore, we show that the feature-level prior is capable of alleviating the discrepancy between different noise levels. It can be used to improve the blind denoising performance in terms of distortion measures (PSNR and SSIM), while the pixel-level prior can effectively improve the perceptual quality to ensure realistic outputs, which is further validated by subjective evaluation.
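For readers unfamiliar with the distortion measures mentioned above, PSNR follows the standard definition $10 \log_{10}(\mathrm{peak}^2 / \mathrm{MSE})$. The following is a minimal sketch in plain Python, assuming images are given as flat sequences of pixel values with an 8-bit peak of 255; the function names are illustrative and are not taken from the paper's code.

```python
import math


def mse(reference, restored):
    """Mean squared error between two equal-length pixel sequences."""
    if len(reference) != len(restored) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - d) ** 2 for r, d in zip(reference, restored)) / len(reference)


def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    err = mse(reference, restored)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    clean = [100, 100, 100, 100]
    denoised = [101, 99, 101, 99]  # hypothetical pixel values, MSE = 1
    print(f"PSNR: {psnr(clean, denoised):.2f} dB")
```

Higher PSNR indicates lower pixel-wise distortion, but it does not necessarily track perceptual quality, which is why the abstract reports a separate subjective evaluation.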
