Hongliang Wang

College of Surveying and Geo-Informatics, Tongji University

Evaluating Text-to-Image Generative Models: An Empirical Study on Human Image Synthesis

Mar 08, 2024

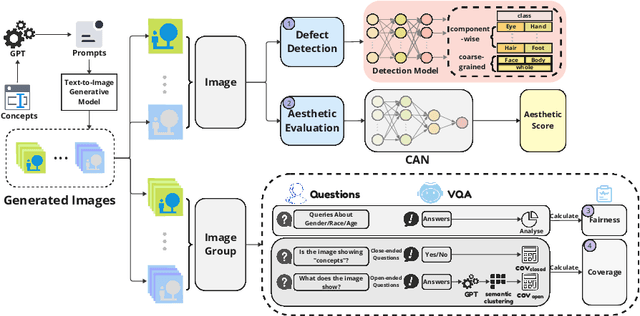

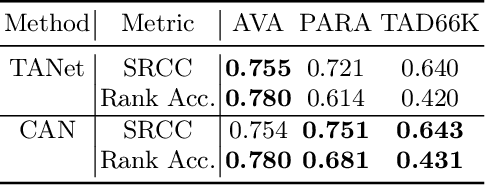

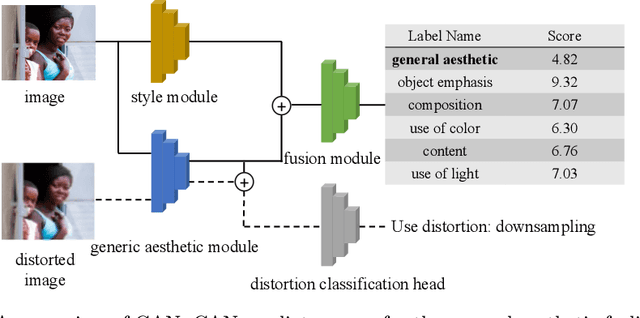

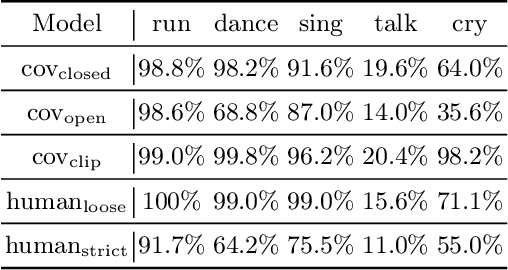

Abstract: In this paper, we present an empirical study introducing a nuanced evaluation framework for text-to-image (T2I) generative models, applied to human image synthesis. Our framework categorizes evaluations into two distinct groups: first, focusing on image qualities such as aesthetics and realism, and second, examining text conditions through concept coverage and fairness. We introduce an innovative aesthetic score prediction model that assesses the visual appeal of generated images and unveils the first dataset marked with low-quality regions in generated human images to facilitate automatic defect detection. Our exploration into concept coverage probes the model's effectiveness in interpreting and rendering text-based concepts accurately, while our analysis of fairness reveals biases in model outputs, with an emphasis on gender, race, and age. While our study is grounded in human imagery, this dual-faceted approach is designed with the flexibility to be applicable to other forms of image generation, enhancing our understanding of generative models and paving the way to the next generation of more sophisticated, contextually aware, and ethically attuned generative models. We will release our code, the data used for evaluating generative models and the dataset annotated with defective areas soon.

Mars Rover Localization Based on A2G Obstacle Distribution Pattern Matching

Oct 07, 2022

Abstract: Rover localization is one of the prerequisites for large-scale rover exploration. In NASA's Mars 2020 mission, the Ingenuity helicopter, which is capable of obtaining high-resolution imagery of Mars terrain, is carried together with the rover, making it possible to perform localization based on aerial-to-ground (A2G) imagery correspondence. However, considering the low-texture nature of the Mars terrain and the large perspective changes between UAV and rover imagery, traditional image matching methods will struggle to obtain valid image correspondence. In this paper, we propose a novel pipeline for Mars rover localization. An algorithm combining image-based rock detection and rock distribution pattern matching is used to acquire A2G imagery correspondence, thus establishing the rover position in a UAV-generated ground map. The feasibility of this method is evaluated on sample data from a Mars analogue environment. The proposed method can serve as a reliable aid in future Mars missions.

Classify Respiratory Abnormality in Lung Sounds Using STFT and a Fine-Tuned ResNet18 Network

Aug 30, 2022

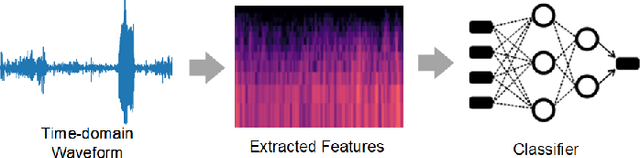

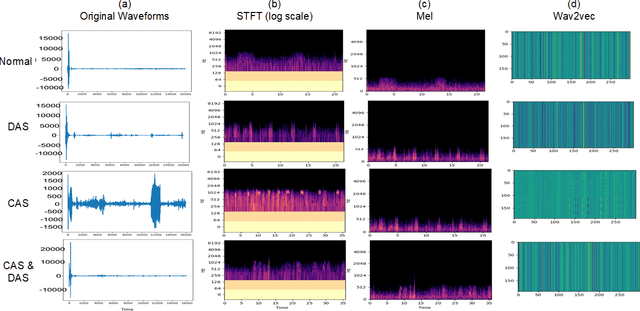

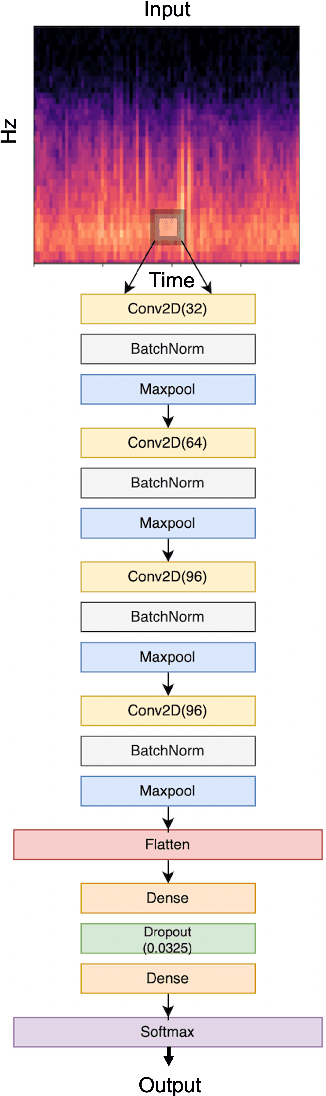

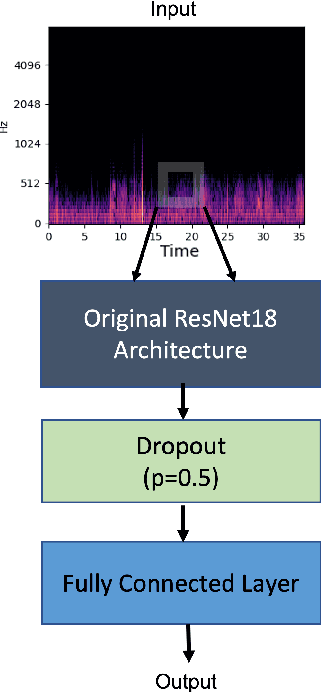

Abstract: Recognizing patterns in lung sounds is crucial to detecting and monitoring respiratory diseases. Current techniques for analyzing respiratory sounds demand domain experts and are subject to interpretation. Hence, an accurate and automatic respiratory sound classification system is desired. In this work, we took a data-driven approach to classify abnormal lung sounds. We compared the performance of three different feature extraction techniques, namely the short-time Fourier transform (STFT), Mel spectrograms, and Wav2vec, as well as three different classifiers: pre-trained ResNet18, LightCNN, and Audio Spectrogram Transformer. Our key contributions include the benchmarking of different audio feature extractors and neural-network-based classifiers, and the implementation of a complete pipeline using STFT and a fine-tuned ResNet18 network. The proposed method achieved Harmonic Scores of 0.89, 0.80, 0.71, and 0.36 for tasks 1-1, 1-2, 2-1, and 2-2, respectively, on the testing sets in the IEEE BioCAS 2022 Grand Challenge on Respiratory Sound Classification.
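To illustrate the STFT feature-extraction step named in the abstract above, here is a minimal sketch of a magnitude spectrogram computed with NumPy. It is not the authors' implementation: the function name `stft_magnitude`, the 4000 Hz sample rate, the 256-sample Hann window, the 128-sample hop, and the synthetic 500 Hz test tone are all assumptions chosen for illustration. In a pipeline like the one described, a 2-D array of this kind would typically be treated as an image and passed to a CNN classifier.

```python
import numpy as np

def stft_magnitude(signal, frame_len=256, hop=128):
    """Magnitude spectrogram of a 1-D signal.

    Returns an array of shape (num_frames, frame_len // 2 + 1).
    frame_len and hop are illustrative defaults, not values from the paper.
    """
    window = np.hanning(frame_len)
    num_frames = 1 + (len(signal) - frame_len) // hop
    # Slice the signal into overlapping frames and apply the window to each.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(num_frames)
    ])
    # Real-input FFT of each frame; keep only the magnitude.
    return np.abs(np.fft.rfft(frames, axis=1))

# Synthetic one-second 500 Hz tone standing in for a lung-sound recording.
sample_rate = 4000  # assumed sample rate in Hz
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 500 * t)

spec = stft_magnitude(tone)
# Frequency bin with the most energy, averaged over all frames.
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 256
print(spec.shape, peak_bin, peak_hz)
```

With these assumed settings each frequency bin spans 4000 / 256 = 15.625 Hz, so the 500 Hz tone falls exactly on one bin, which makes the sketch easy to check by hand.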
