Hian Hian See

Extended Tactile Perception: Vibration Sensing through Tools and Grasped Objects

Jun 01, 2021

Abstract: Humans display the remarkable ability to sense the world through tools and other held objects. For example, we are able to pinpoint impact locations on a held rod and tell apart different textures using a rigid probe. In this work, we consider how we can enable robots to have a similar capacity, i.e., to embody tools and extend perception using standard grasped objects. We propose that vibro-tactile sensing using dynamic tactile sensors on the robot fingers, along with machine learning models, enables robots to decipher contact information that is transmitted as vibrations along rigid objects. This paper reports on extensive experiments using the BioTac micro-vibration sensor and a new event dynamic sensor, the NUSkin, capable of multi-taxel sensing at 4 kHz. We demonstrate that fine localization on a held rod is possible using our approach (with errors less than 1 cm on a 20 cm rod). Next, we show that vibro-tactile perception can lead to reasonable grasp stability prediction during object handover, and accurate food identification using a standard fork. We find that multi-taxel vibro-tactile sensing at a sufficiently high sampling rate (above 2 kHz) led to the best performance across the various tasks and objects. Taken together, our results provide both evidence and guidelines for using vibro-tactile perception to extend tactile perception, which we believe will lead to enhanced competency with tools and better physical human-robot interaction.

Event-Driven Visual-Tactile Sensing and Learning for Robots

Sep 15, 2020

Abstract: This work contributes an event-driven visual-tactile perception system, comprising a novel biologically-inspired tactile sensor and multi-modal spike-based learning. Our neuromorphic fingertip tactile sensor, NeuTouch, scales well with the number of taxels thanks to its event-based nature. Likewise, our Visual-Tactile Spiking Neural Network (VT-SNN) enables fast perception when coupled with event sensors. We evaluate our visual-tactile system (using the NeuTouch and Prophesee event camera) on two robot tasks: container classification and rotational slip detection. On both tasks, we observe good accuracies relative to standard deep learning methods. We have made our visual-tactile datasets freely available to encourage research on multi-modal event-driven robot perception, which we believe is a promising approach towards intelligent power-efficient robot systems.

ST-MNIST -- The Spiking Tactile MNIST Neuromorphic Dataset

May 08, 2020

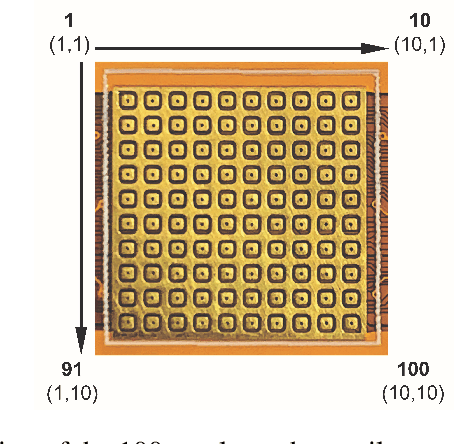

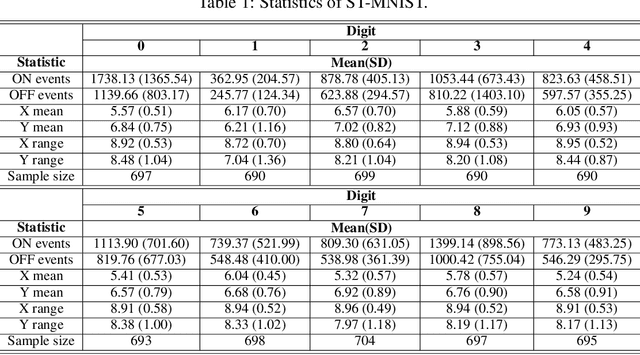

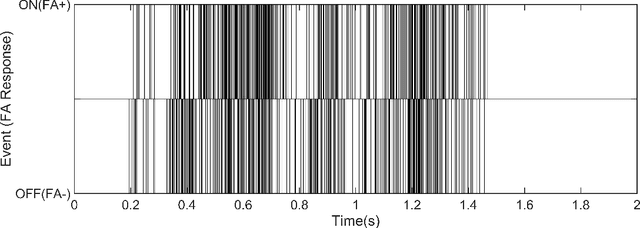

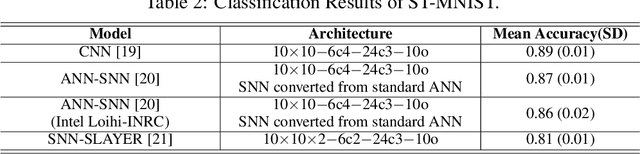

Abstract: Tactile sensing is an essential modality for smart robots as it enables them to interact flexibly with physical objects in their environment. Recent advancements in electronic skins have led to the development of data-driven machine learning methods that exploit this important sensory modality. However, current datasets used to train such algorithms are limited to standard synchronous tactile sensors. There is a dearth of neuromorphic event-based tactile datasets, principally due to the scarcity of large-scale event-based tactile sensors. Having such datasets is crucial for the development and evaluation of new algorithms that process spatio-temporal event-based data. For example, evaluating spiking neural networks on conventional frame-based datasets is considered sub-optimal. Here, we debut a novel neuromorphic Spiking Tactile MNIST (ST-MNIST) dataset, which comprises handwritten digits obtained by human participants writing on a neuromorphic tactile sensor array. We also describe an initial effort to evaluate our ST-MNIST dataset using existing artificial and spiking neural network models. The classification accuracies provided herein can serve as performance benchmarks for future work. We anticipate that our ST-MNIST dataset will be of interest and useful to the neuromorphic and robotics research communities.
